Stephen Wolfram’s latest work proposes a unifying theory for why evolved systems—from cells to codebases—exhibit pockets of clear mechanism amid oceans of complexity. By treating evolution as search over a vast ‘rulial’ space of possible rules, he argues that simple goals plus computational irreducibility inevitably produce the mechanoidal, orchestrated behaviors we recognize as life.

Beyond DNA: How Wolfram’s ‘Rulial Ensemble’ Recasts Life as a Computation-First Architecture

When engineers talk about “orchestration,” they usually mean Kubernetes clusters or workflow engines. Stephen Wolfram is aiming several layers deeper.

In his new essay, “What’s Special about Life? Bulk Orchestration and the Rulial Ensemble in Biology and Beyond” (Nov 11, 2025), Wolfram sketches an ambitious computational theory of living—and more broadly, adaptively evolved—systems. It is not a metaphorical comparison between cells and software; it is an attempt to formalize life as an emergent property of search over rule space.

For a technical audience used to wrestling with complexity—distributed systems, large ML models, supply-chain entanglements—Wolfram’s thesis lands with a familiar sting: complexity is cheap, structure is expensive, and purpose is a constraint.

This piece unpacks his core ideas in engineering terms and asks what they imply for how we design AI systems, protocols, architectures, and perhaps even future synthetic life.

Source: Stephen Wolfram, “What’s Special about Life? Bulk Orchestration and the Rulial Ensemble in Biology and Beyond”, writings.stephenwolfram.com, November 11, 2025.

From Random Goo to Bulk Orchestration

Wolfram starts from a biological observation that also reads like a distributed-systems design brief:

- At molecular scale, cells are not just Brownian soup.

- They exhibit pervasive, active orchestration: motors, pumps, checkpoints, signal cascades, compartmentalization.

- This orchestration is not a collection of isolated gadgets; it is a densely layered system in which countless local mechanisms cohere into global function.

He calls this phenomenon “bulk orchestration”: large numbers of simple components whose interactions are not merely stochastic but coordinated toward persistent, robust outcomes.

The central question: can we characterize, in general and mathematically, what kind of rules give rise to this bulk orchestration—without reverse‑engineering every biochemical detail?

His answer leans on two pillars familiar to many in CS and physics:

- Computational irreducibility: for many systems, you can’t shortcut their evolution; the only way to know what happens is to run them.

- The Principle of Computational Equivalence: above a surprisingly low threshold, systems tend to have equivalent computational sophistication.

Left alone, such systems skew toward unpredictability—the analogue of the Second Law, hash functions, chaotic schedulers, or adversarial packet storms. Yet life, software, and engineered infrastructure repeatedly demonstrate islands of predictability and mechanism inside that chaos.

Wolfram’s move is to formalize where those islands come from.

The Rulial Ensemble: Evolution as a Search Over Programs

Instead of thinking about evolution as tweaking configurations (states), Wolfram emphasizes tweaking rules.

- In biology: genotypes encode rules; phenotypes are what happens when you run them.

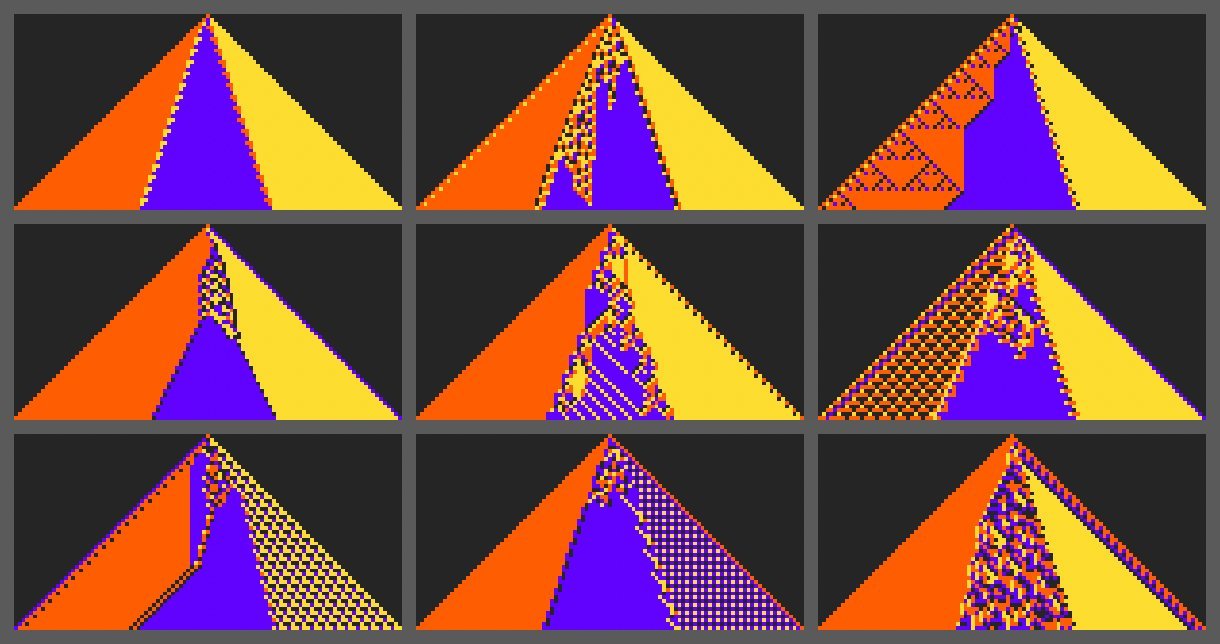

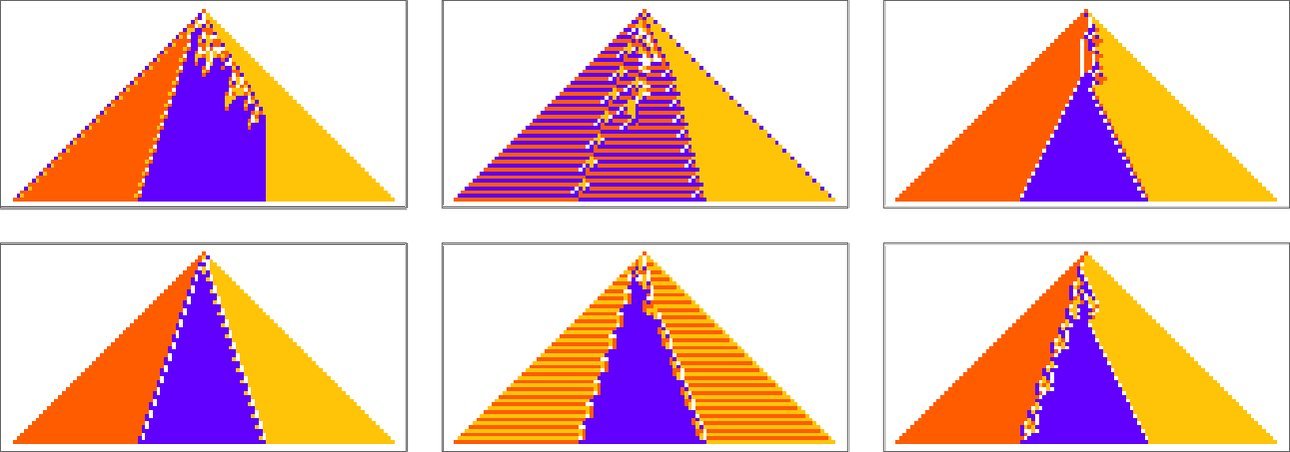

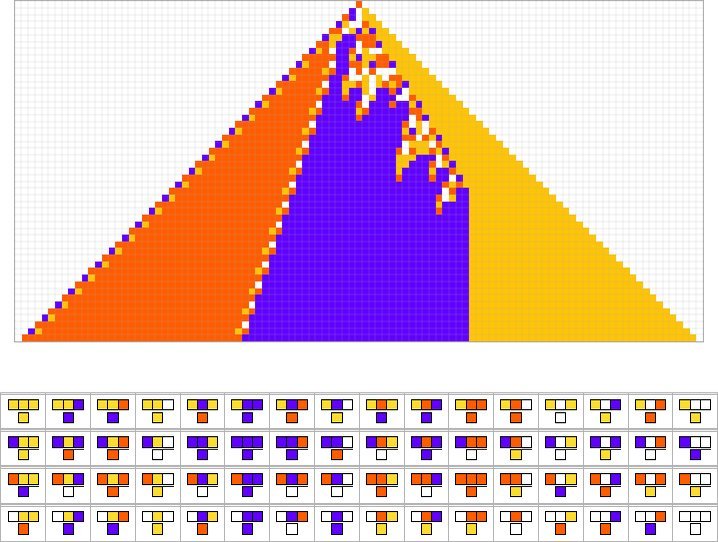

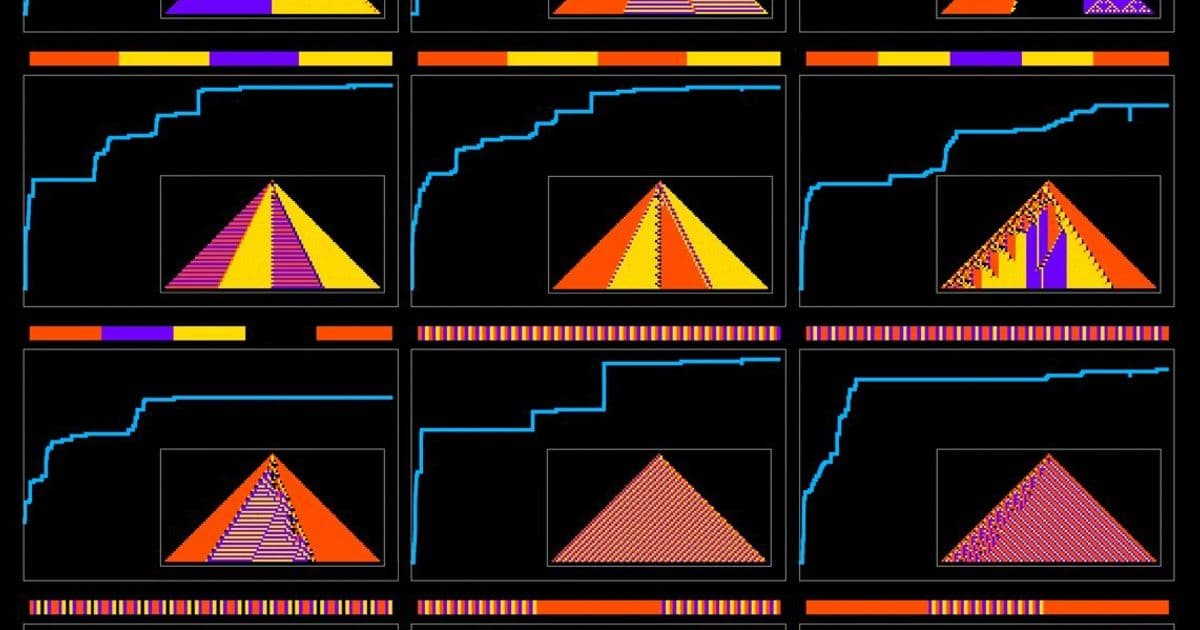

- In his experiments: rules are cellular automaton (CA) transition tables; the resulting space-time patterns are toy phenotypes.

Consider the space of all possible rules of a given type. Wolfram calls this space “rulial space.” A “rulial ensemble” is then the subset of rules singled out by some selection process—in our case, adaptive evolution under a given objective.
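To make the rule/phenotype split concrete, here is a minimal sketch using elementary (2-color, nearest-neighbor) cellular automata. That rule class is an assumption chosen for brevity and is not necessarily the one used in the essay; the function names `rule_table` and `run` are hypothetical. The rule number plays the role of the genotype, and the rows returned by `run` are the toy phenotype.

```python
def rule_table(rule_number: int) -> dict:
    """Transition table of an elementary CA: neighborhood -> next cell value."""
    return {
        (a, b, c): (rule_number >> (a * 4 + b * 2 + c)) & 1
        for a in (0, 1) for b in (0, 1) for c in (0, 1)
    }


def run(rule_number: int, width: int = 31, steps: int = 15) -> list:
    """Run the rule from a single live cell on a cyclic row; return all rows."""
    table = rule_table(rule_number)
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        # row[i - 1] wraps to the last cell when i == 0 (cyclic boundary)
        row = [table[(row[i - 1], row[i], row[(i + 1) % width])]
               for i in range(width)]
        history.append(row)
    return history


if __name__ == "__main__":
    for row in run(30):
        print("".join("#" if cell else "." for cell in row))
```

In this toy setting, rulial space is just the 256 possible rule numbers; richer rule classes make the space astronomically larger, which is where search becomes interesting.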

Key step: assume only that the objective (or “fitness function”) is computationally simple.

- Examples (in CA terms): produce three equal color bands, match a target bitstring, maintain certain color frequencies, hit a specific lifetime, exhibit a given period.

- Crucially, the simplicity assumption is about evaluability, not triviality. You can quickly check whether the goal is met.

Run evolution in this space:

- Start from some rule.

- Apply small random mutations to the rule.

- Keep mutations that don’t worsen fitness.

- Repeat thousands of times.
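The loop above can be sketched in a few lines. This is an illustration under stated assumptions, not Wolfram's actual setup: it assumes a 3-color nearest-neighbor CA on a cyclic row, a "hit a specific lifetime" objective, and hypothetical names (`lifetime`, `fitness`, `evolve`) and parameters (`width`, `cap`, `target`).

```python
import random

K = 3  # number of colors (assumption; a rule is a table with K**3 entries)


def step(table, row):
    """Apply the rule once; index encodes (left, center, right)."""
    n = len(row)
    return [table[K * K * row[i - 1] + K * row[i] + row[(i + 1) % n]]
            for i in range(n)]


def lifetime(table, width=41, cap=60):
    """Steps until a single seeded cell dies out; `cap` if it never does."""
    row = [0] * width
    row[width // 2] = 1
    for t in range(1, cap + 1):
        row = step(table, row)
        if not any(row):
            return t
    return cap


def fitness(table, target=20):
    """Simple, cheap-to-check objective: closeness to the target lifetime."""
    return -abs(lifetime(table) - target)


def evolve(steps=2000, target=20, seed=0):
    rng = random.Random(seed)
    table = [0] * (K ** 3)            # start from the null rule
    best = fitness(table, target)
    history = [best]
    for _ in range(steps):
        candidate = table[:]
        i = rng.randrange(1, K ** 3)  # entry 0 stays 0 so blank stays blank
        candidate[i] = rng.randrange(K)
        f = fitness(candidate, target)
        if f >= best:                 # keep mutations that don't worsen fitness
            table, best = candidate, f
        history.append(best)
    return table, history


if __name__ == "__main__":
    table, history = evolve()
    print("final fitness:", history[-1])
```

Accepting neutral mutations (`f >= best` rather than `f > best`) matters here: it lets the search drift across plateaus instead of stalling at the first rule that cannot be strictly improved in one step.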

What emerges across many such experiments is not a menagerie of maximally weird programs, but a recognizable structural signature.

Mechanoidal Behavior: Islands of Mechanism in a Sea of Computation

Wolfram calls the characteristic signature of evolved-for-a-purpose rules “mechanoidal behavior.” For practitioners, it looks uncannily like good systems architecture:

- Repeated motifs and modules.

- Localized, stable mechanisms that do one thing well.

- Complexity that exists, but is corralled into controllable regions.

- Clear signaling structures—"interfaces" between subsystems.

Contrast:

- Random rules from the same CA space: often either trivially simple or explosively chaotic with no clean handles.

- Evolved rules under simple objectives: hybrid systems where order and chaos are interleaved, but with visible, reusable tooling.

Quantitatively, Wolfram measures this via compression.

- Take the CA space-time pattern; compress it (e.g., Wolfram Language Compress, gzip, etc.).

- Evolved-for-purpose rules tend to yield compressible patterns, but not as trivially compressible as pure repetition.

- Their compressed description length sits between the extremes of random chaos and rigid simplicity.
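A rough version of this measurement can be sketched with Python's standard `zlib` module. This is a stand-in, assuming one byte per cell and DEFLATE compression; it is not the essay's exact measure, and `compression_ratio` and `rule90` are hypothetical helper names. One would expect a nested pattern such as rule 90 to typically land between the uniform and noisy extremes.

```python
import random
import zlib


def compression_ratio(pattern):
    """Compressed size divided by raw size, storing one byte per cell."""
    raw = bytes(cell for row in pattern for cell in row)
    return len(zlib.compress(raw, 9)) / len(raw)


def rule90(width=128, steps=64):
    """Nested (Sierpinski-like) pattern: each cell is left XOR right."""
    row = [0] * width
    row[width // 2] = 1
    rows = [row]
    for _ in range(steps - 1):
        row = [row[i - 1] ^ row[(i + 1) % width] for i in range(width)]
        rows.append(row)
    return rows


if __name__ == "__main__":
    rng = random.Random(0)
    uniform = [[0] * 128 for _ in range(64)]
    noise = [[rng.randrange(2) for _ in range(128)] for _ in range(64)]
    for name, pattern in [("uniform", uniform),
                          ("rule 90", rule90()),
                          ("noise", noise)]:
        print(name, round(compression_ratio(pattern), 3))
```

A general-purpose compressor only catches some kinds of regularity, so a ratio like this is best read as a coarse proxy for structure rather than a definitive score.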

This is suggestive: mechanoidal behavior corresponds to systems that are

- Structured enough to be summarized,

- Rich enough to be powerful,

- And sculpted not by human design, but by selection under simple constraints.

In other words: a computationally grounded account of why life, and many engineered artifacts, look the way they do.

Mutational Complexity: A Cost Model for Achieving Structure

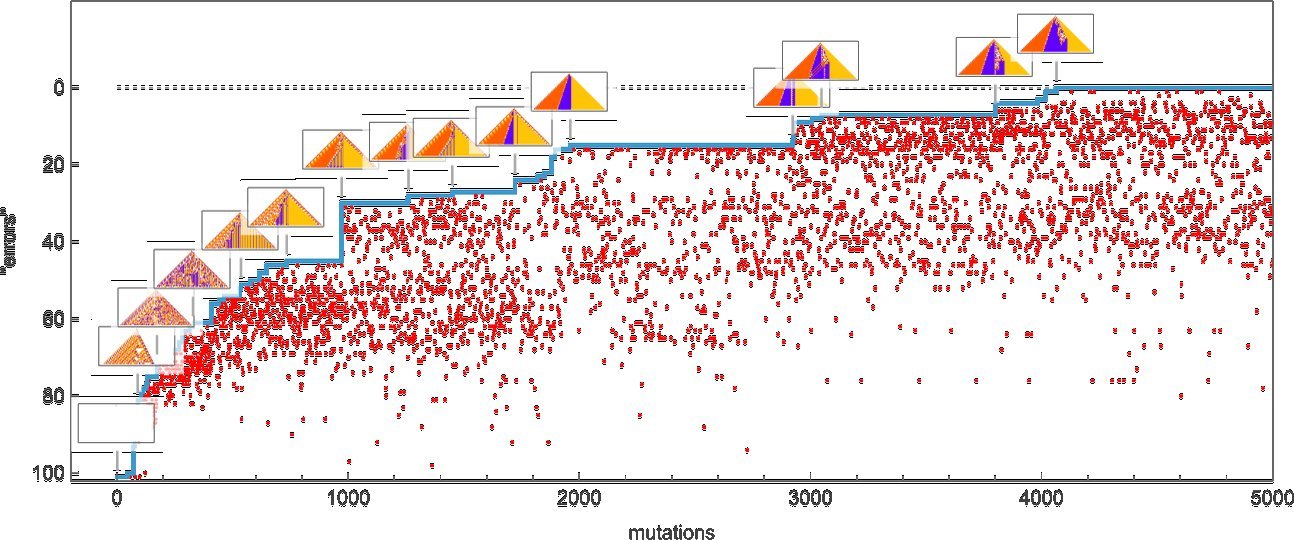

To make this more than hand-waving, Wolfram introduces “mutational complexity”:

- For a given objective, consider many runs of evolutionary search.

- Measure how many mutation steps (on average, or at median) are needed to find a rule that achieves it (or comes within a tolerance).

- That number is the mutational complexity of the objective.
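As a sketch of how such a number might be estimated, the snippet below repeats a toy evolutionary search from several seeds and reports the median step count among runs that succeed, plus the success rate. The CA class (3 colors, nearest neighbor), the lifetime objective, the budget, and all function names are assumptions for illustration, not details taken from the essay.

```python
import random
from statistics import median

K = 3  # number of colors (assumption)


def lifetime(table, width=31, cap=40):
    """Steps until a single seeded cell dies out; `cap` if it never does."""
    row = [0] * width
    row[width // 2] = 1
    for t in range(1, cap + 1):
        row = [table[K * K * row[i - 1] + K * row[i] + row[(i + 1) % width]]
               for i in range(width)]
        if not any(row):
            return t
    return cap


def steps_to_reach(target, budget, seed):
    """Mutation steps until the target lifetime is hit, or None if never."""
    rng = random.Random(seed)
    table = [0] * (K ** 3)
    best = -abs(lifetime(table) - target)
    if best == 0:
        return 0
    for n in range(1, budget + 1):
        candidate = table[:]
        candidate[rng.randrange(1, K ** 3)] = rng.randrange(K)
        f = -abs(lifetime(candidate) - target)
        if f >= best:
            table, best = candidate, f
        if best == 0:
            return n
    return None


def mutational_complexity(target, runs=20, budget=3000):
    """Return (median steps among successful runs, fraction of runs solved)."""
    results = [steps_to_reach(target, budget, seed) for seed in range(runs)]
    solved = [r for r in results if r is not None]
    return (median(solved) if solved else None), len(solved) / runs


if __name__ == "__main__":
    for target in (5, 10, 20):
        print(target, mutational_complexity(target))
```

Reporting the success fraction alongside the median is a deliberate choice: when many runs exhaust the budget, the median over survivors alone understates how hard the objective is.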

Insight:

- Simple patterns—e.g., basic color blocks, short-period signals—tend to have low mutational complexity. Evolution finds them readily.

- More intricate goals (large periods, tight geometric shapes, stringent robustness) demand more steps; some become effectively unattainable within search limits.

- Many theoretically possible targets are unreachable due to the limited expressive power of the chosen rule class—mirroring algorithmic information theory: some sequences are too complex to be generated by a compact program.
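The unreachability point can be illustrated with a simple pigeonhole count. The sketch below assumes a hypothetical setup where each rule is run from one fixed initial condition and must exactly reproduce an n-bit target; under that assumption, a rule class with k colors and range r has k^(k^(2r+1)) rules and so can hit at most that many distinct targets. The function name and setup are illustrative, not from the essay.

```python
def reachable_fraction_bound(k: int, r: int, n_bits: int) -> float:
    """Upper bound on the fraction of n-bit targets some rule can produce.

    Assumes one output per rule (fixed initial condition, exact match).
    """
    num_rules = k ** (k ** (2 * r + 1))   # size of the rule class
    num_targets = 2 ** n_bits             # number of possible binary targets
    return min(1.0, num_rules / num_targets)


if __name__ == "__main__":
    # 3-color, nearest-neighbor rules: 3**27 rules, roughly 2**42.8
    for n_bits in (32, 48, 64):
        print(n_bits, reachable_fraction_bound(3, 1, n_bits))
```

This is only an upper bound: many rules produce identical outputs, so the true reachable fraction is smaller still.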

This gives evolution a computational budget line item. A system’s observed behavior is bounded not only by physics or chemistry, but by how much mutational search history it can effectively amortize.

For engineers, this feels very much like:

- Architecture found via gradient descent, evolutionary search, or neural architecture search.

- Some designs are never discovered, not because they are suboptimal, but because they’re too “far” in configuration space.

Mutational complexity becomes a unifying way to talk about why certain patterns of mechanism are common in life, AI, and infrastructure—and others, while possible, almost never appear.

The Evolutionary Workload: Taming Irreducibility Without Killing It

Across objective types—target sequences, vertical patterns, color histograms, growth shapes, lifetimes, periodic behavior—Wolfram reports a stable meta-pattern in the search dynamics:

- Early in evolution, complexity spikes: mutations explore messy, computationally irreducible regimes.

- Over time, selection “squeezes” irreducibility, carving out pockets where reliable mechanisms emerge.

- Successfully evolved solutions keep enough structure to hit the goal, but often still contain “sparks” of complexity.

The process is not monotonic.

- Compression measures show that evolutionary trajectories often pass through a peak of apparent randomness before settling into mechanoidal order.

- Think of this as a kind of computational simulated annealing: broad, chaotic exploration followed by consolidation into reusable mechanisms.

Wolfram argues this isn’t a quirk of his toy models—it’s a generic effect of selection acting over rich computational substrates:

- Computational irreducibility guarantees a vast search space with surprising solutions.

- Simple objectives filter this space, forcing the emergence of mechanism-like components that can be reused and orchestrated.

- Over sufficient evolutionary time (enough to “pay” the mutational complexity cost), the surviving rules form a rulial ensemble with consistent structural properties.

This is an appealing lens on biological organization and also on how large AI and software systems evolve:

- Arbitrary complexity is cheap to generate.

- Purposeful, composable mechanism is rare and therefore strongly selected—by natural selection, market forces, SLOs, or training loss.

Why This Matters for AI, Software, and Systems Design

Wolfram frames his work as theoretical biology, but the implications are squarely in the strike zone for developers and tech leaders.

Here are four concrete takeaways:

1. Life as an Existence Proof for Computation-First Architecture

If you believe Wolfram’s framing, the hallmarks of living systems—modularity, feedback control, signal routing, error correction—are not domain-specific biochemical hacks, but universal responses to simple objectives in a computationally irreducible substrate.

For system builders, that suggests:

- The architectures we converge on via iterative refactoring and production incident postmortems are shadows of the same principles.

- Observed "best practices" may admit deeper, rule-space explanations—and better search strategies.

2. A Formal Language for “Design by Optimization”

Modern AI/ML, reinforcement learning, and auto-tuning pipelines already treat code, weights, or graphs as evolvable artifacts under a loss function.

Wolfram’s machinery gives us:

- Mutational complexity: a way to reason about whether an objective is realistically reachable by gradient-free or evolutionary methods.

- Rulial ensembles: a way to talk about the distribution of solutions you should expect when you optimize under a given class of rules.

This suggests practical heuristics:

- Prefer fitness functions that are computationally simple to evaluate but reflect desired global behavior—these are exactly the ones that generically produce mechanoidal, robust mechanisms.

- Be suspicious of objectives that implicitly require solving computationally hard subproblems; evolution may never find them.

3. Robustness as a Selector for Simplicity

One striking experimental pattern: when Wolfram forces not just correctness but robustness—solutions must survive perturbations—evolution is driven toward markedly simpler, more regular rules.

For resilient infrastructure and safety-critical AI, this is clarifying:

- If we bake robustness directly into the objective (rather than as an afterthought), selection pressure will naturally favor architectures that are both interpretable and mechanoidal.

- Many brittle “clever hacks” vanish under robustness-aware evolution because they occupy narrow attractors in rule space.

The message is not “simplicity is good” as a slogan, but “explicit robustness constraints algorithmically bias search toward simpler, more mechanistic, more compressible solutions.”
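One possible way to fold robustness into the objective is to score a rule by its worst case over a set of perturbed initial conditions. The sketch below assumes a 3-color nearest-neighbor CA, a lifetime objective, and single-cell perturbations; the essay's actual perturbation scheme may differ, and `plain_fitness` and `robust_fitness` are hypothetical names.

```python
import random

K = 3  # number of colors (assumption)


def step(table, row):
    n = len(row)
    return [table[K * K * row[i - 1] + K * row[i] + row[(i + 1) % n]]
            for i in range(n)]


def lifetime_from(table, row, cap=40):
    """Steps until the pattern dies out from `row`; `cap` if it never does."""
    for t in range(1, cap + 1):
        row = step(table, row)
        if not any(row):
            return t
    return cap


def plain_fitness(table, target=10, width=31):
    """Score on the unperturbed initial condition only."""
    base = [0] * width
    base[width // 2] = 1
    return -abs(lifetime_from(table, base) - target)


def robust_fitness(table, target=10, width=31, trials=8, seed=0):
    """Worst-case score over the base condition and perturbed copies.

    The seed is fixed so every candidate rule faces the same perturbations.
    """
    rng = random.Random(seed)
    center = width // 2
    base = [0] * width
    base[center] = 1
    scores = [-abs(lifetime_from(table, base) - target)]
    others = [i for i in range(width) if i != center]
    for _ in range(trials):
        row = base[:]
        row[rng.choice(others)] = rng.randrange(1, K)  # add one stray cell
        scores.append(-abs(lifetime_from(table, row) - target))
    return min(scores)
```

Swapping `plain_fitness` for `robust_fitness` in an evolutionary loop is the whole change; taking the minimum rather than the mean makes a single failure under perturbation enough to reject a candidate.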

4. Reading Biological Mechanism as Compiled Evolutionary Code

For computational biologists and bioengineers, Wolfram’s theory reframes why molecular biology keeps discovering machine-like modules instead of homogeneous goo:

- Those modules are what survived a long search through rule space under simple—but non-trivial—fitness objectives.

- Their prevalence is not an accident of chemistry; it is a generic structural consequence of evolution operating in a computational universe.

In practice, that means:

- Looking for mechanoidal motifs (motors, cycles, counters, gating networks) is not just descriptive but theoretically warranted.

- Designing synthetic biological circuits might be more effective if we emulate evolutionary search in rule space, guided by mutational complexity estimates.

Where Physical Law Meets DevOps Culture

Perhaps the most provocative part of Wolfram’s essay is conceptual: he is trying to put life, physics, and mathematics into one computationally unified story.

- In statistical mechanics, ensembles run over states; typicality gives us thermodynamic laws from chaos.

- In his evolutionary picture, ensembles run over rules; selection under simple objectives gives us mechanoidal structure from chaos.

For “observers like us”—computationally bounded agents embedded in this universe—both viewpoints are constraints on what we can reliably see and engineer.

For the tech community, there’s an unexpectedly practical moral hiding in this abstraction:

- Any sufficiently rich computational substrate (runtime, cloud, ML stack, on-chain environment) will generate wild complexity by default.

- If we expose that substrate to long-run selection with simple, checkable objectives (through markets, uptime requirements, regulatory constraints, training signals), we should expect convergent architectural features: modular mechanisms, repeated motifs, bulk orchestration.

Wolfram is effectively arguing that the way your production system “wants” to look—after years of incidents, optimizations, and redesigns—is not mere historical accident. It is constrained by the same mathematics that shapes living cells.

That’s a big claim. But it’s also a useful one: it suggests we can analyze and guide our evolving stacks not only with ad hoc best practices, but with principled expectations about what kinds of structure are discoverable and stable in rulial space.

Toward an Engineering Science of Evolved Systems

Wolfram himself is explicit that this program is just beginning. The experiments are stylized, the models idealized. Yet for readers steeped in complex systems, formal methods, program synthesis, and AI alignment, the outline is tantalizing:

- Define objective classes by their computational evaluability.

- Characterize the corresponding rulial ensembles.

- Quantify mutational complexity to understand feasibility.

- Measure mechanoidal structure (e.g., via compression, modularity, symmetry) as an indicator of purposeful organization.

Do this right, and you don’t just get prettier cellular automata. You get:

- A principled language for talking about evolved architectures in large codebases.

- New tools for designing robust AI systems via selection over mechanism-rich rule spaces.

- A computational foundation for why biological machinery looks engineered, without invoking design.

If the 20th century gave us information theory for communication and complexity theory for algorithms, Wolfram is betting that the 21st needs a comparable framework for evolved, orchestrated computation.

Whether or not one buys every step of his metaphysics, developers and system architects can treat this as a research agenda: a way to move from anecdotes about “emergent behavior” and “organic architectures” to something you can probe, simulate, and eventually optimize.

Because if he’s even partially right, the mechanoidal patterns you’re debugging in production aren’t just bugs or quirks. They’re signatures of where your systems sit in rulial space—and of how far your evolutionary search has gone toward taming the chaos without extinguishing its power.
