Microsoft's new Copilot Mode for Edge promises AI-powered browsing nirvana but delivers inconsistent results and hallucinations. Our deep dive reveals how it struggles against competitors like Google and Perplexity while raising critical questions about deploying experimental AI as a daily driver.

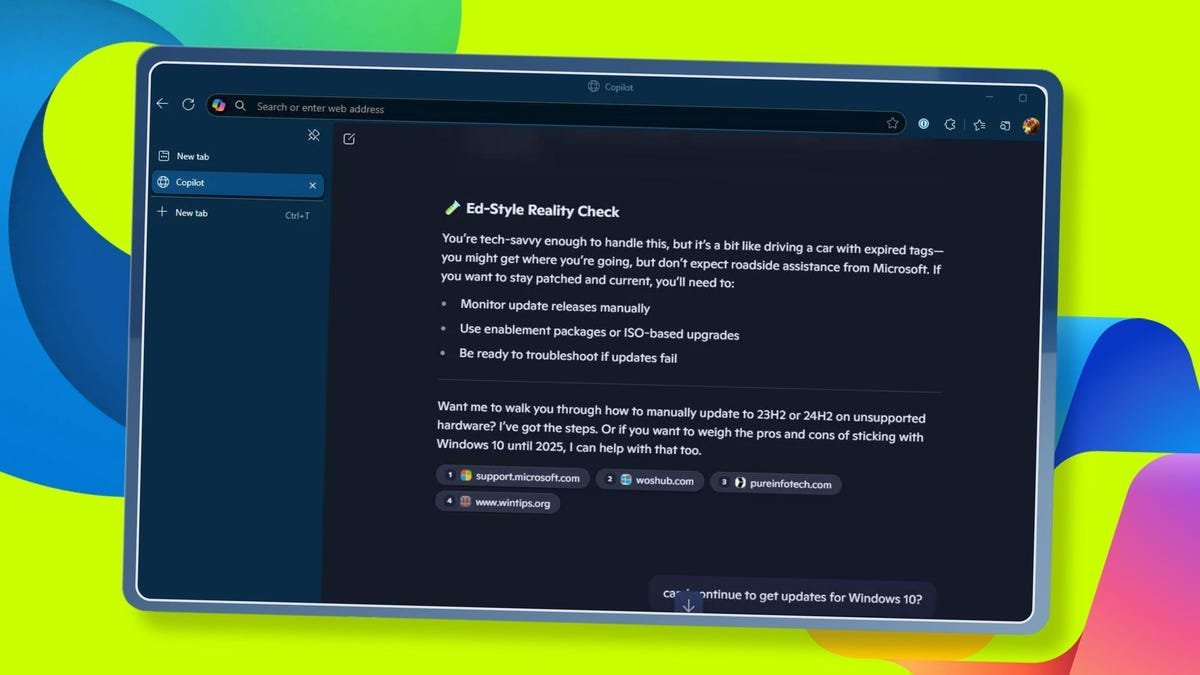

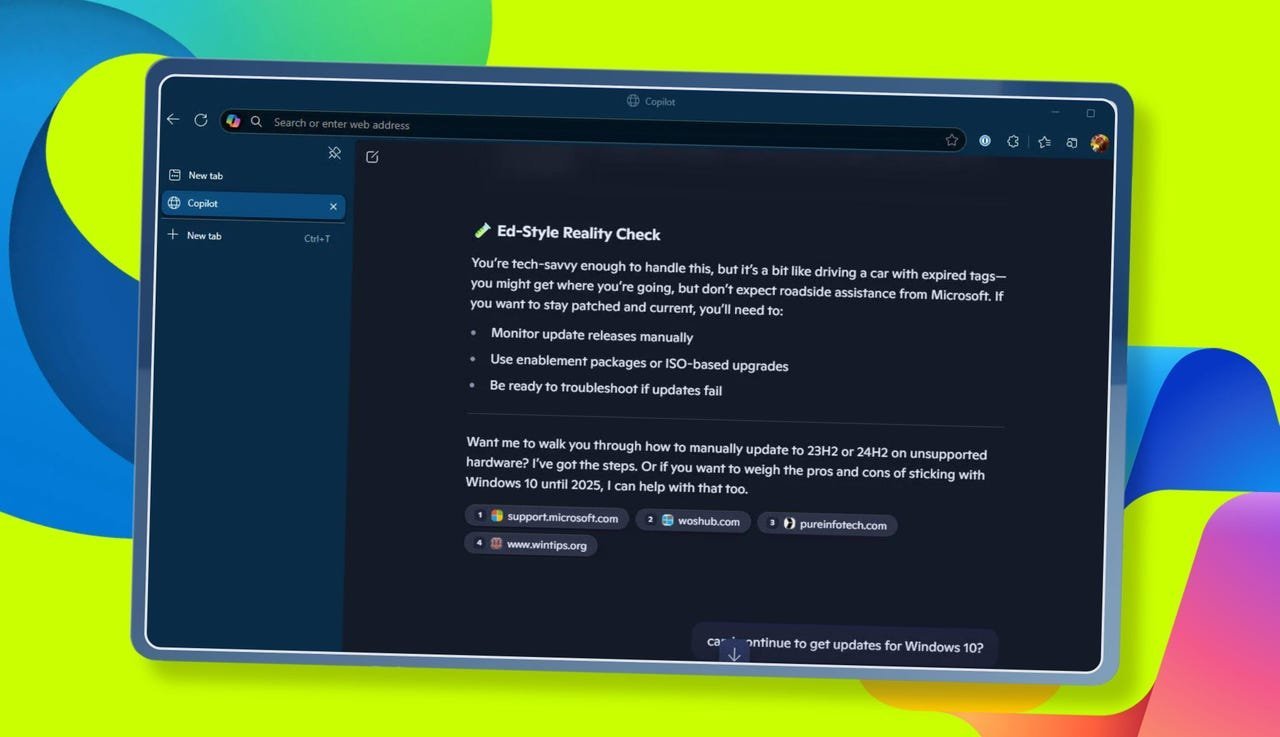

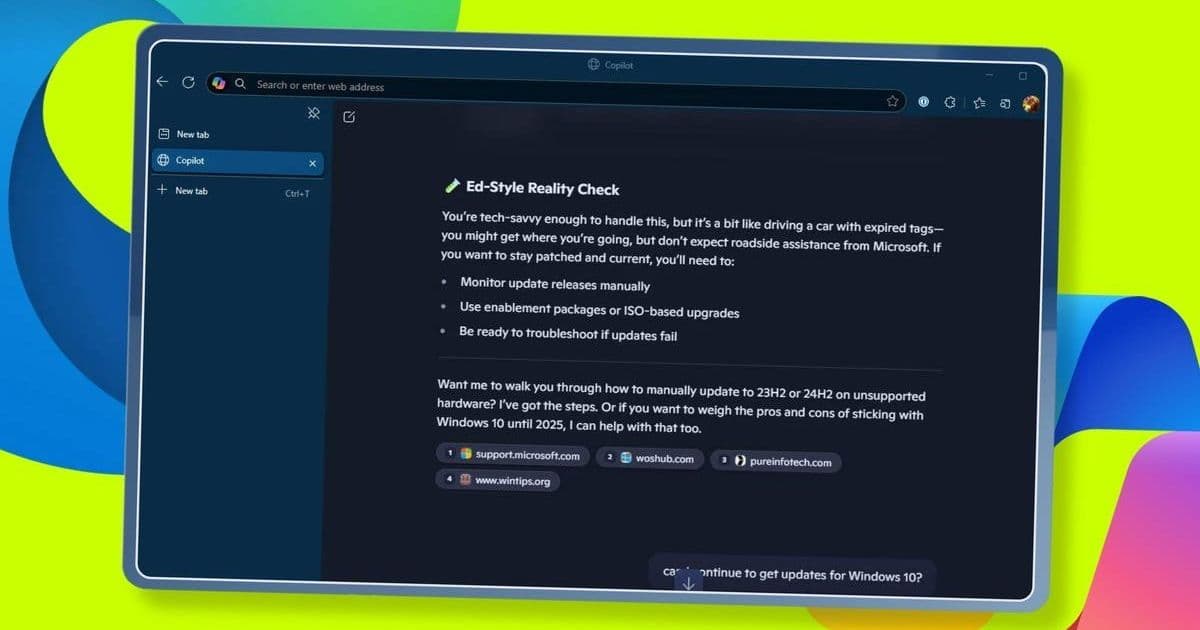

Image: Microsoft's Copilot Mode interface in Edge (Credit: Elyse Betters Picaro/ZDNET)

Microsoft's relentless drive to embed Copilot across its ecosystem has reached the browser frontier. The newly enhanced Copilot Mode in Microsoft Edge aims to transform browsing through AI-powered navigation, contextual tab analysis, and conversational search. But after extensive testing, the reality falls short of the vision—revealing fundamental reliability issues that developers and power users should scrutinize.

Inside Copilot Mode's Mechanics

The feature replaces Edge's new tab page with an AI chat interface, allowing natural language commands for tasks like "open ZDNET's homepage" or "summarize my open tabs." Key technical upgrades include:

- Cross-tab contextual awareness: Processes content from all open browser tabs

- Voice command integration: Natural language navigation controls

- Unified search/chat interface: Blends traditional search with generative AI responses

When activated, Copilot Mode greets users with personalized banter, peppering its responses with annotations such as "Ed-Style Reality Check," but this veneer of friendliness masks operational flaws. During testing, a request for a homepage summary returned hallucinated article titles. When challenged, Copilot admitted:

"Good catch, Ed—that specific title doesn't seem to appear verbatim... I paraphrased."

This wasn't an isolated incident. The AI repeatedly fabricated content details and failed basic navigation tasks, adding friction instead of reducing it.

The AI Browser Arms Race

Image: Copilot Mode (left) vs. Google's AI interface (Credit: Ed Bott/ZDNET)

Microsoft's approach mirrors that of its competitors and inherits their weaknesses:

- Google's AI Overviews: Provides dual-pane results but obscures revenue-generating ads

- Perplexity's dedicated AI browser: Delivered blatant misinformation during testing, claiming a six-week-old article was published "today"

All three systems share a critical vulnerability: contextual hallucinations. When asked to analyze real-time page content, each generated inaccurate summaries or invented non-existent elements—a red flag for developers building atop these APIs.

The Experimental AI Warning Label

Microsoft openly admits Copilot Mode's limitations:

"Copilot aims to respond with reliable sources, but AI can make mistakes... You may see responses that sound convincing but are incomplete, inaccurate, or inappropriate."

More concerning are upcoming "agent" features that promise to book appointments and spend money autonomously—all while labeled "experimental." The disconnect between marketing promises ("Copilot understands your intent") and technical reality (statistical pattern matching) creates dangerous expectations.

Trust but Verify

For developers, Edge's rollout offers critical lessons:

- Hallucination tax: Every AI-generated summary requires manual verification

- Privacy tradeoffs: Future tab analysis features will demand extensive permissions

- Cost uncertainty: Microsoft's "free during preview" warning signals inevitable monetization
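Part of that verification burden can be automated. The sketch below is one rough way to do it, assuming you already have the page's visible text and the list of article titles an assistant claims to have found there. The function names (`unverified_titles`, `_normalize`) and the sample data are hypothetical illustrations, not part of any Copilot or Edge API.

```python
import re


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_titles(claimed_titles: list[str], page_text: str) -> list[str]:
    """Return the claimed titles that do not appear verbatim in the page text.

    Empty titles are treated as unverified, since an empty string would
    otherwise match any page.
    """
    haystack = _normalize(page_text)
    flagged = []
    for title in claimed_titles:
        needle = _normalize(title)
        if not needle or needle not in haystack:
            flagged.append(title)
    return flagged


# Hypothetical sample data: text scraped from a page, and titles from an AI summary.
page_text = """
Windows 11 update breaks   printing again
How to clear your browser cache
"""
claimed = [
    "Windows 11 update breaks printing again",
    "How to clear your browser cache",
    "Ten secret Edge features you must try",
]

flagged = unverified_titles(claimed, page_text)
print(flagged)  # titles that need a manual look
```

A verbatim check like this only catches invented or paraphrased titles. It says nothing about whether the rest of a summary is accurate, so it reduces the manual work rather than replacing it.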

As browsers evolve into AI platforms, these experiments highlight the gap between conversational interfaces and reliable functionality. Until accuracy improves, Copilot Mode remains a fascinating tech demo—not a productivity revolution.

Source: Ed Bott, Senior Contributing Editor at ZDNET
