Typing a URL into your browser triggers a complex ballet of protocols, caches, and connections that deliver content in milliseconds. This deep dive reveals the step-by-step process, from DNS lookups to page rendering, and why it’s essential knowledge for system designers. Understanding these mechanics not only demystifies web performance but also informs scalable system architecture.

From URL to Rendered Page: The Hidden Orchestration of Web Loading

When you enter a URL like https://www.bbc.co.uk/news/technology and press Enter, the page appears almost instantly. Yet beneath this fluid experience lies a sophisticated interplay of distributed systems working in unison. For developers and engineers, grasping this process is crucial—not just for optimizing web performance, but for designing robust systems at scale.

This article, inspired by insights from Stephane Moreau's explanation on System Design But Simple, breaks down the journey from keystroke to rendered content. We'll explore each phase and its implications for modern software engineering.

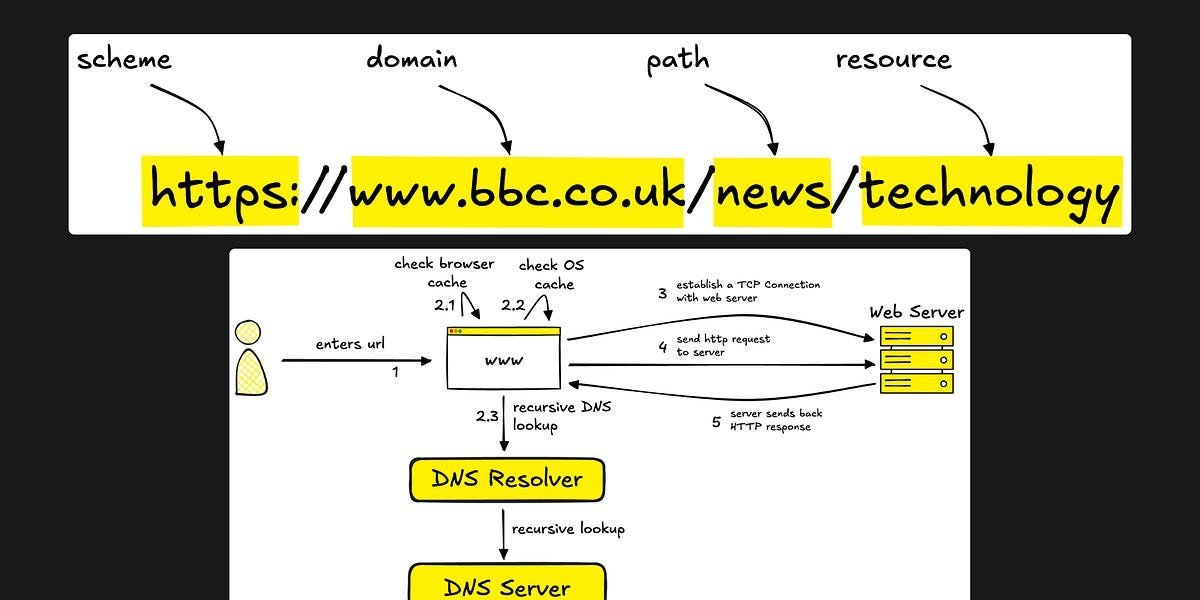

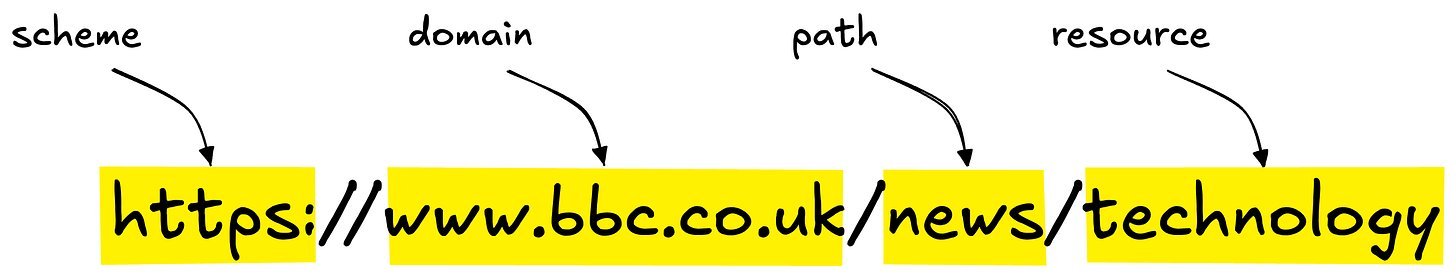

Decoding the URL: The Starting Point

A URL isn't merely an address; it's a blueprint for accessing resources. Take https://www.bbc.co.uk/news/technology:

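- Scheme (https): Specifies the protocol. HTTPS is HTTP carried over TLS, so the connection is encrypted; unless the URL names a port, it uses port 443.

- Domain (www.bbc.co.uk): The human-readable hostname that must be resolved to an IP address.

- Path (/news/technology): Identifies the requested resource on the server. Its segments are hierarchical (/news, then /technology), but they need not correspond to real directories or files; the server decides how to map the path to content.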

Computers operate on IP addresses, not domain names, so the browser must first translate this human-friendly identifier into a machine-readable one.
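To see the same breakdown programmatically, here is a minimal Python sketch using the standard library's `urllib.parse.urlsplit`. The `describe_url` helper and its `DEFAULT_PORTS` table are illustrative names of our own, not part of any browser or library API.

```python
from urllib.parse import urlsplit

# Illustrative table: well-known default ports for the two web schemes.
DEFAULT_PORTS = {"http": 80, "https": 443}


def describe_url(url: str) -> dict:
    """Split a URL into the parts a browser needs before it can connect."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,      # "https" means HTTP over TLS
        "host": parts.hostname,      # the name DNS must resolve
        # An explicit port wins; otherwise fall back to the scheme default.
        "port": parts.port or DEFAULT_PORTS.get(parts.scheme),
        "path": parts.path or "/",   # sent to the server in the request line
        "query": parts.query,
    }


print(describe_url("https://www.bbc.co.uk/news/technology"))
```

The path comes back as a single string; how /news/technology maps to actual content is entirely up to the server.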

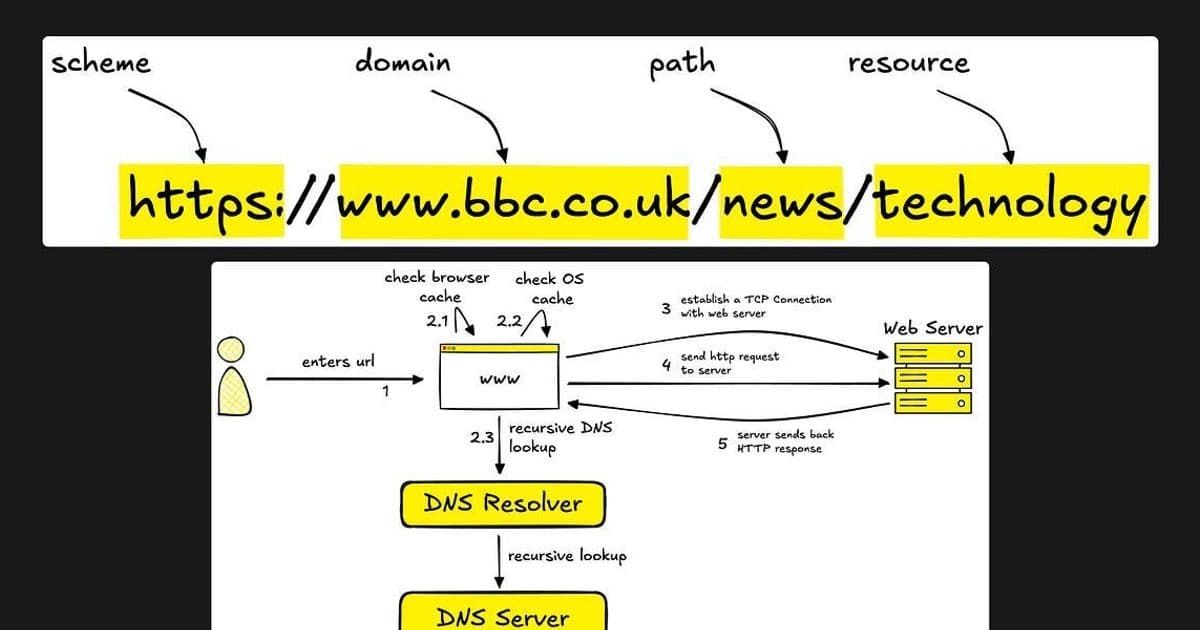

Step 1: DNS Lookup – The Internet's Address Book

The Domain Name System (DNS) acts as the internet's directory service, converting domains to IP addresses. To minimize delays, DNS employs a multi-tiered caching strategy:

- Browser Cache: The browser first checks its internal store for a recent resolution.

- Operating System Cache: If absent, it queries the OS's cache.

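- DNS Resolver: If both miss, the query goes to a recursive resolver (often provided by the ISP, sometimes a public service). The resolver checks its own cache first; only on a miss does it walk the hierarchy, asking a root server, which refers it to the .uk top-level-domain servers, which in turn point it to the authoritative name servers for bbc.co.uk that hold the actual address.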
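The resolver's walk down the hierarchy can be sketched as a loop that follows referrals until some server returns an answer. This is a toy model, assuming hypothetical in-memory zone data in place of real DNS queries over the network; the server labels and the 203.0.113.10 address (from a reserved documentation range) are made up for illustration.

```python
# Hypothetical delegation data for illustration only. Each "server" either
# answers for a name or refers the resolver to a more specific zone.
# The address comes from the RFC 5737 documentation range and is not real.
SERVERS = {
    "root": {"referrals": {"uk.": "uk-tld"}},
    "uk-tld": {"referrals": {"bbc.co.uk.": "bbc-auth"}},
    "bbc-auth": {"answers": {"www.bbc.co.uk.": "203.0.113.10"}},
}


def in_zone(name: str, zone: str) -> bool:
    """True if name equals zone or sits below it on a label boundary."""
    return name == zone or name.endswith("." + zone)


def resolve_iteratively(name: str, max_hops: int = 10) -> tuple[str, list[str]]:
    """Start at the root and follow referrals until a server answers."""
    fqdn = name.rstrip(".") + "."  # fully qualified form, e.g. "www.bbc.co.uk."
    server = "root"
    visited = [server]
    for _ in range(max_hops):
        data = SERVERS[server]
        answers = data.get("answers", {})
        if fqdn in answers:
            return answers[fqdn], visited
        # Follow the most specific referral that covers the name.
        zones = [z for z in data.get("referrals", {}) if in_zone(fqdn, z)]
        if not zones:
            raise LookupError(f"{server} has no answer or referral for {fqdn}")
        server = data["referrals"][max(zones, key=len)]
        visited.append(server)
    raise LookupError("too many referrals")


ip, path = resolve_iteratively("www.bbc.co.uk")
print(ip, path)  # 203.0.113.10 ['root', 'uk-tld', 'bbc-auth']
```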

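Each level caches the answer for the record's time-to-live (TTL), a duration set by the domain's operator, so repeat lookups skip the network until the entry expires. The hierarchy is also fault-tolerant: every zone is served by several redundant name servers, so resolution keeps working even if one of them fails.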
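The cache chain can be modeled as a list of TTL caches checked in order, with a full miss falling through to an upstream resolver. This is a minimal sketch, assuming a fake clock for deterministic output; `TTLCache`, `lookup`, and the `authoritative` function are hypothetical stand-ins, and real browsers and operating systems apply their own caching policies.

```python
from typing import Callable, Dict, List, Optional, Tuple


class TTLCache:
    """A tiny cache whose entries expire after a time-to-live in seconds."""

    def __init__(self, name: str, clock: Callable[[], float]) -> None:
        self.name = name
        self.clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # host -> (ip, expires_at)

    def get(self, host: str) -> Optional[Tuple[str, float]]:
        """Return (ip, remaining_ttl), or None if absent or expired."""
        entry = self._entries.get(host)
        if entry is None:
            return None
        ip, expires_at = entry
        remaining = expires_at - self.clock()
        if remaining <= 0:
            del self._entries[host]  # expired entries behave like misses
            return None
        return ip, remaining

    def put(self, host: str, ip: str, ttl: float) -> None:
        self._entries[host] = (ip, self.clock() + ttl)


def lookup(host: str, layers: List[TTLCache],
           upstream: Callable[[str], Tuple[str, float]]) -> Tuple[str, str]:
    """Try each cache layer in order; on a full miss, ask upstream.

    Layers that missed are back-filled with the remaining TTL, so the next
    lookup is answered closer to the browser. Returns (ip, answering_layer).
    """
    missed = []
    for layer in layers:
        hit = layer.get(host)
        if hit is not None:
            ip, ttl = hit
            source = layer.name
            break
        missed.append(layer)
    else:
        ip, ttl = upstream(host)
        source = "upstream"
    for layer in missed:
        layer.put(host, ip, ttl)
    return ip, source


now = [0.0]  # fake clock so the demo is deterministic
layers = [TTLCache(n, lambda: now[0]) for n in ("browser", "os", "resolver")]


def authoritative(host: str) -> Tuple[str, float]:
    return "203.0.113.10", 300.0  # hypothetical answer with a 300 s TTL


print(lookup("www.bbc.co.uk", layers, authoritative))  # ('203.0.113.10', 'upstream')
print(lookup("www.bbc.co.uk", layers, authoritative))  # ('203.0.113.10', 'browser')
now[0] = 301.0  # every cached entry has now expired
print(lookup("www.bbc.co.uk", layers, authoritative))  # ('203.0.113.10', 'upstream')
```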

Step 2: TCP Connection and TLS Handshake

With the IP in hand, the browser initiates a TCP connection via a three-way handshake: SYN, SYN-ACK, ACK. This establishes a reliable data stream.
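The handshake's real job is to synchronize sequence numbers in both directions. This simplified model, assuming no TCP options, window sizes, or retransmission, shows how each side acknowledges the other's initial sequence number (ISN) plus one, since a SYN consumes one sequence number. The `Segment` class and `three_way_handshake` function are illustrative, not a real TCP stack.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


@dataclass
class Segment:
    flags: str          # "SYN", "SYN-ACK", or "ACK"
    seq: int            # sender's sequence number
    ack: Optional[int]  # next sequence number expected from the peer (None if ACK unset)


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    """Return the three segments exchanged when a client opens a connection."""
    syn = Segment("SYN", seq=client_isn, ack=None)
    # The SYN consumes one sequence number, so the server acknowledges ISN + 1.
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(syn.seq + 1) % SEQ_SPACE)
    ack = Segment("ACK", seq=(client_isn + 1) % SEQ_SPACE,
                  ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


# Real stacks choose ISNs unpredictably; random 32-bit values stand in here.
for segment in three_way_handshake(random.getrandbits(32), random.getrandbits(32)):
    print(segment)
```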

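For HTTPS, a TLS handshake follows: the server proves its identity with a certificate, and both sides agree on encryption keys to secure the channel. The main cost is extra round trips (two for a full TLS 1.2 handshake, one for TLS 1.3). Two optimizations cut this: keep-alive reuses an already-open connection so no new handshakes are needed at all, and TLS session resumption lets a new connection skip part of the full handshake by reusing parameters from an earlier session.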
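A back-of-envelope model shows why handshake round trips dominate on high-latency links. This sketch assumes a fixed round-trip time and counts only handshake and request round trips; it ignores DNS, TCP Fast Open, TLS 1.2 False Start, TLS 1.3 0-RTT early data, and packet loss. The function name and table are hypothetical.

```python
# Round trips spent on the TLS handshake before the HTTP request can be sent.
HANDSHAKE_RTTS = {
    "tls1.2-full": 2,
    "tls1.2-resumed": 1,  # abbreviated handshake using a cached session
    "tls1.3-full": 1,
}


def time_to_first_byte(rtt_ms: float, tls: str, reused: bool = False,
                       server_ms: float = 0.0) -> float:
    """Estimate milliseconds until the first response byte arrives."""
    if reused:
        handshake_rtts = 0  # keep-alive: the connection is already open
    else:
        handshake_rtts = 1 + HANDSHAKE_RTTS[tls]  # TCP (1 RTT) + TLS
    request_rtts = 1  # request goes out, first byte comes back
    return (handshake_rtts + request_rtts) * rtt_ms + server_ms


for label, kwargs in [
    ("new connection, TLS 1.2", {"tls": "tls1.2-full"}),
    ("new connection, TLS 1.2 resumed", {"tls": "tls1.2-resumed"}),
    ("new connection, TLS 1.3", {"tls": "tls1.3-full"}),
    ("reused keep-alive connection", {"tls": "tls1.3-full", "reused": True}),
]:
    print(f"{label}: {time_to_first_byte(50, **kwargs):.0f} ms")
```

With a 50 ms round trip, this model gives 200 ms for a fresh TLS 1.2 connection, 150 ms for a resumed TLS 1.2 session or a TLS 1.3 connection, and 50 ms on a reused connection.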

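These mechanisms reflect a broader scalability principle: amortize expensive setup work across many requests. Browsers keep pools of open connections (commonly up to six per host over HTTP/1.1, while HTTP/2 multiplexes many requests over a single connection), much as servers pool database connections to serve many concurrent users.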
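Connection reuse can be sketched as a pool keyed by origin (scheme, host, port) that hands back idle connections before opening new ones, up to a per-origin cap. This is a minimal model: connections are integer IDs rather than sockets, the default cap of six mirrors common HTTP/1.1 browser behavior but is an assumption here, and all class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple
from urllib.parse import urlsplit

Origin = Tuple[str, str, int]
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str) -> Origin:
    parts = urlsplit(url)
    return (parts.scheme, parts.hostname, parts.port or DEFAULT_PORTS[parts.scheme])


class ConnectionPool:
    """Hands out idle connections per origin, opening new ones up to a cap."""

    def __init__(self, max_per_origin: int = 6) -> None:
        self.max_per_origin = max_per_origin
        self.idle: Dict[Origin, List[int]] = defaultdict(list)
        self.open_count: Dict[Origin, int] = defaultdict(int)
        self.handshakes = 0  # how many TCP + TLS setups were paid for
        self._next_id = 0

    def acquire(self, url: str) -> Tuple[Origin, int]:
        origin = origin_of(url)
        if self.idle[origin]:
            return origin, self.idle[origin].pop()  # reuse: no handshake
        if self.open_count[origin] >= self.max_per_origin:
            raise RuntimeError(f"all connections to {origin} busy; request must queue")
        self.open_count[origin] += 1
        self.handshakes += 1
        self._next_id += 1
        return origin, self._next_id

    def release(self, origin: Origin, conn_id: int) -> None:
        self.idle[origin].append(conn_id)  # keep-alive: park it for reuse


pool = ConnectionPool()
for url in ["https://www.bbc.co.uk/news", "https://www.bbc.co.uk/style.css",
            "https://www.bbc.co.uk/app.js"]:
    origin, conn = pool.acquire(url)
    pool.release(origin, conn)  # each fetch finishes before the next starts
print(pool.handshakes)  # 1
```

Three sequential requests to the same origin pay for only one handshake; a second origin would need its own.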

Step 3: HTTP Request and Server Response

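Over the established connection, the browser sends an HTTP request naming the method (usually GET), the path, and headers such as Host. The server processes it and responds with a status code (for example, 200 OK or 404 Not Found), response headers, and a body, which for the first request is typically the HTML document. Stylesheets, scripts, and images are requested separately once the browser discovers them.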
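To make the request/response exchange concrete, the sketch below formats a minimal HTTP/1.1 GET request and parses a canned response. It performs no networking and skips real-world details such as chunked transfer encoding and repeated headers; the response bytes and helper names are hypothetical.

```python
def build_request(host: str, path: str) -> bytes:
    """Format a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",  # required in HTTP/1.1
        "Accept: text/html",
        "Connection: keep-alive",
        "",  # an empty line (CRLF CRLF) ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_response(raw: bytes) -> tuple[int, str, dict, bytes]:
    """Split a raw response into status code, reason, headers, and body."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return int(code), reason, headers, body


print(build_request("www.bbc.co.uk", "/news/technology").decode())

canned = (b"HTTP/1.1 200 OK\r\n"
          b"Content-Type: text/html; charset=utf-8\r\n"
          b"Content-Length: 9\r\n"
          b"\r\n"
          b"<p>Hi</p>")
code, reason, headers, body = parse_response(canned)
print(code, reason, headers["content-type"])  # 200 OK text/html; charset=utf-8
```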

Modern web apps often involve dynamic generation, where servers query databases or microservices before assembling responses. This step underscores the importance of efficient backend design to keep response times low.
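When a page depends on several independent backend calls, issuing them concurrently keeps the response time close to the slowest call rather than the sum of all of them. A minimal asyncio sketch, assuming two hypothetical services whose latency is simulated with `asyncio.sleep`:

```python
import asyncio
import time


# Hypothetical backend calls; asyncio.sleep stands in for network latency.
async def fetch_user(user_id: int) -> dict:
    await asyncio.sleep(0.2)
    return {"id": user_id, "name": "reader"}


async def fetch_headlines(section: str) -> list:
    await asyncio.sleep(0.2)
    return [f"{section} story 1", f"{section} story 2"]


async def render_page(user_id: int, section: str) -> str:
    # The two lookups are independent, so issue them concurrently:
    # the total wait is roughly the slower call, not the sum of both.
    user, headlines = await asyncio.gather(fetch_user(user_id),
                                           fetch_headlines(section))
    items = "".join(f"<li>{h}</li>" for h in headlines)
    return f"<h1>Hello, {user['name']}</h1><ul>{items}</ul>"


start = time.perf_counter()
html = asyncio.run(render_page(42, "technology"))
print(html)
print(f"elapsed: {time.perf_counter() - start:.2f} s")
```

On a typical machine this finishes in roughly 0.2 s; awaiting the two calls one after the other would take about 0.4 s.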

Step 4: Browser Rendering and Resource Fetching

Upon receiving HTML, the browser parses it into a DOM tree. As it encounters linked resources—CSS for styling, JavaScript for interactivity, images for visuals—it triggers parallel fetches.
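Discovering subresources during parsing can be illustrated with Python's built-in `html.parser`. This sketch, assuming a small hypothetical page and a made-up `cdn.example.com` host, only collects stylesheet, script, and image URLs and resolves them against the page URL; real browsers use a full HTML5 parser plus a speculative preload scanner.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class SubresourceFinder(HTMLParser):
    """Collect URLs of stylesheets, scripts, and images as tags are parsed."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.found: list[tuple[str, str]] = []  # (kind, absolute URL)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel_tokens and attrs.get("href"):
            self.found.append(("css", urljoin(self.base_url, attrs["href"])))
        elif tag == "script" and attrs.get("src"):
            self.found.append(("js", urljoin(self.base_url, attrs["src"])))
        elif tag == "img" and attrs.get("src"):
            self.found.append(("image", urljoin(self.base_url, attrs["src"])))


page = """
<html><head>
  <link rel="stylesheet" href="/static/main.css">
  <script src="https://cdn.example.com/app.js"></script>
</head><body>
  <h1>Technology</h1>
  <img src="images/chip.jpg" alt="">
</body></html>
"""
finder = SubresourceFinder("https://www.bbc.co.uk/news/technology")
finder.feed(page)
for kind, url in finder.found:
    print(kind, url)
```

Relative URLs are resolved against the page address, so `images/chip.jpg` becomes `https://www.bbc.co.uk/news/images/chip.jpg`.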

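Resources on a new domain need their own DNS lookup and TCP and TLS handshakes before the HTTP request can go out; resources on an origin the browser is already connected to reuse the open connection, and files fetched recently may be served straight from the HTTP cache. The browser then builds the CSSOM from the stylesheets, combines it with the DOM into the render tree, computes layout, and finally paints the page, executing scripts along the way.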
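A rough way to see why extra domains are expensive is to count how many distinct hosts and origins a page's resources touch. This simplified sketch assumes one DNS lookup per new hostname and one TCP + TLS setup per new origin; the resource URLs are hypothetical, and real browsers may open several connections per origin or coalesce origins onto one HTTP/2 connection.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def connection_setups(urls: list[str]) -> dict:
    """Count requests, distinct hostnames (DNS lookups), and distinct
    origins (connection setups) under the simplified model above."""
    hosts = set()
    origins = set()
    for url in urls:
        parts = urlsplit(url)
        hosts.add(parts.hostname)
        port = parts.port or DEFAULT_PORTS[parts.scheme]
        origins.add((parts.scheme, parts.hostname, port))
    return {"requests": len(urls), "dns_lookups": len(hosts),
            "connections": len(origins)}


resources = [
    "https://www.bbc.co.uk/news/technology",
    "https://www.bbc.co.uk/static/main.css",
    "https://www.bbc.co.uk/static/app.js",
    "https://cdn.example.com/fonts/body.woff2",
    "https://cdn.example.com/img/chip.jpg",
    "https://ads.example.net/tag.js",
]
print(connection_setups(resources))
# {'requests': 6, 'dns_lookups': 3, 'connections': 3}
```

Six requests cost only three lookups and three setups here; every additional third-party domain would add another of each.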

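This iterative process explains why minimizing the number of resources and serving them from CDNs (Content Delivery Networks), whose edge servers sit close to users, is vital for performance: every extra resource and every distant server adds round trips, and on unoptimized sites these accumulate into long load times.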
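The cost of cumulative round trips can be estimated with a deliberately crude model: resources download in waves limited by the number of parallel connections, and each wave costs one round trip. The resource counts, round-trip times, and six-connection limit below are illustrative assumptions, and the model ignores bandwidth, handshakes, HTTP/2 multiplexing, and dependencies between resources.

```python
import math


def estimated_fetch_time_ms(num_resources: int, rtt_ms: float,
                            parallel_connections: int = 6) -> float:
    """Resources download in waves of at most `parallel_connections`;
    each wave is assumed to cost exactly one round trip."""
    waves = math.ceil(num_resources / parallel_connections)
    return waves * rtt_ms


# Hypothetical scenarios: same page from a distant origin, via a CDN, bundled.
for label, n, rtt in [("60 files from a distant origin", 60, 100),
                      ("60 files via a nearby CDN edge", 60, 20),
                      ("20 bundled files via the CDN", 20, 20)]:
    print(f"{label}: ~{estimated_fetch_time_ms(n, rtt):.0f} ms")
```

Under these assumptions the estimates come out to about 1000 ms, 200 ms, and 80 ms: a CDN shrinks each round trip, while bundling shrinks the number of them.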

Why This Process Shapes System Design

The web loading flow encapsulates core distributed systems concepts:

- Caching Layers: From browser to DNS, caching reduces latency and load on upstream systems.

- Connection Reuse: Keep-alive and session resumption optimize resource use.

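- Geographic Load Balancing: Geo-DNS answers with the IP address of a nearby server based on where the query comes from, while anycast advertises one IP address from many locations and lets network routing deliver each client to the closest one.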

- Fault Tolerance: Redundant infrastructure prevents single points of failure.
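Geographic routing combined with health checks can be sketched as picking the nearest healthy server for the client's location. This is a minimal geo-DNS model, assuming hypothetical points of presence, documentation-range IP addresses, and the haversine great-circle distance as the proximity metric; production services may instead rely on IP-geolocation data and latency measurements, and anycast achieves a similar effect at the routing layer.

```python
import math
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    ip: str
    lat: float
    lon: float
    healthy: bool = True


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius, km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def geo_answer(client_lat: float, client_lon: float, servers: list[Server]) -> Server:
    """Return the nearest healthy server, as a geo-DNS service might when
    choosing which IP address to hand out for a query from this location."""
    candidates = [s for s in servers if s.healthy]  # fault tolerance: skip failed servers
    if not candidates:
        raise RuntimeError("no healthy servers")
    return min(candidates,
               key=lambda s: haversine_km(client_lat, client_lon, s.lat, s.lon))


# Hypothetical points of presence with documentation-range addresses.
servers = [
    Server("london", "198.51.100.1", 51.51, -0.13),
    Server("frankfurt", "198.51.100.2", 50.11, 8.68),
    Server("virginia", "198.51.100.3", 38.95, -77.45),
]
print(geo_answer(48.86, 2.35, servers).name)  # client in Paris -> london
servers[0].healthy = False                    # London fails its health check
print(geo_answer(48.86, 2.35, servers).name)  # -> frankfurt
```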

For engineers interviewing or building at scale, this model provides blueprints for handling millions of requests. Whether designing an API gateway or a global CDN, these patterns ensure systems remain performant and resilient.

As web technologies evolve, with HTTP/3 running over QUIC instead of TCP to combine the transport and TLS handshakes and reduce setup latency, these fundamentals remain timeless. Mastering them empowers developers to craft experiences that feel as seamless as they are complex.

Source: Adapted from Stephane Moreau's article on System Design But Simple, published November 17, 2025.
