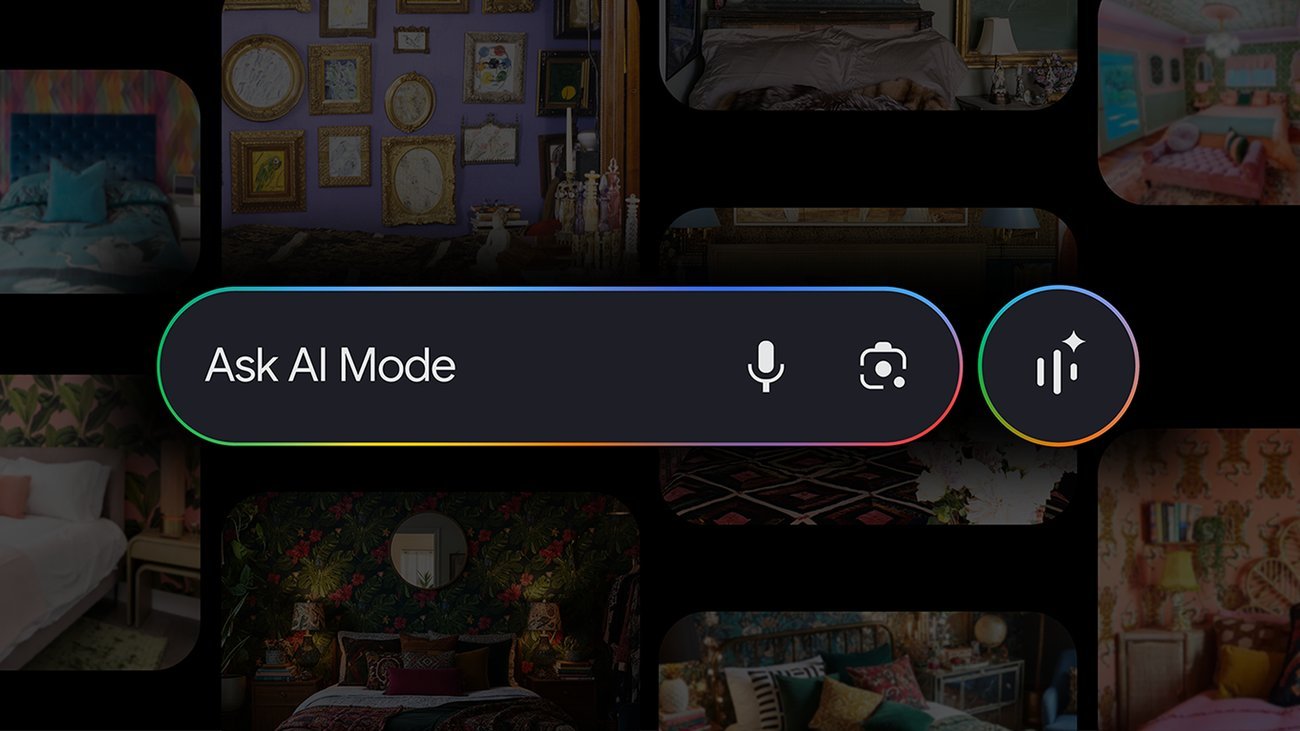

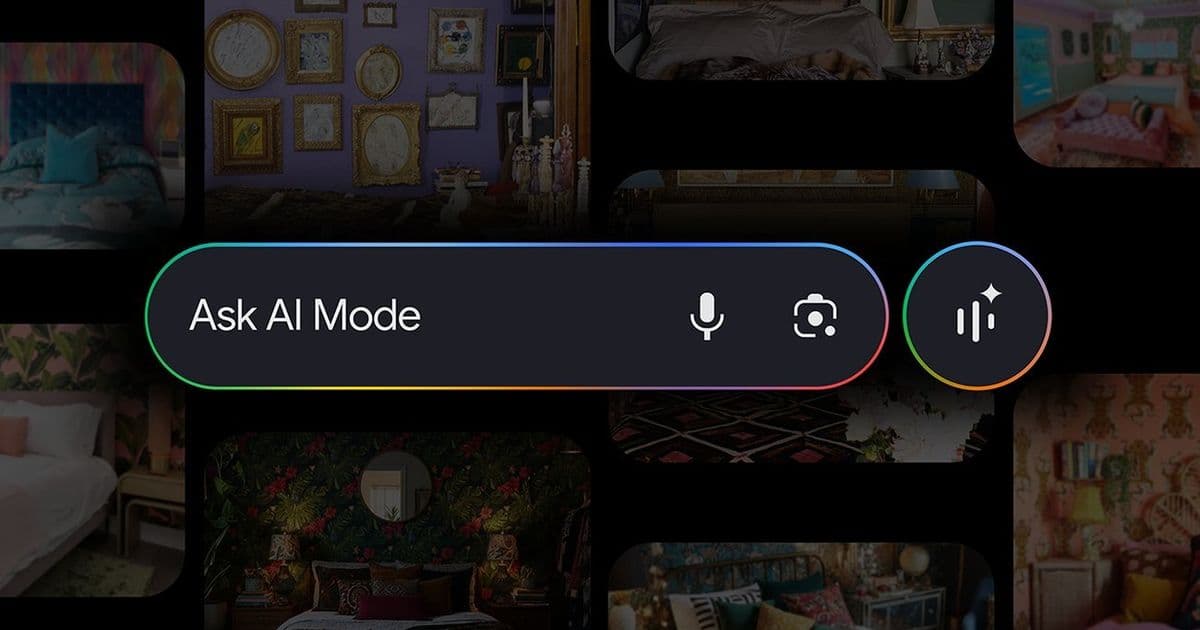

Google has transformed its AI Mode in Search with a groundbreaking visual exploration feature, allowing users to find and refine results through conversational queries and image-based interactions. Powered by Gemini 2.5's advanced multimodal capabilities and a novel 'visual fan-out' technique, this update promises to redefine discovery and shopping by understanding nuanced context without traditional filters.

We've all faced the frustration of searching for something elusive—a dream apartment vibe, the perfect fall coat—where words fall short. Google's latest AI Mode update in Search tackles this head-on by introducing a visual-first approach, turning vague ideas into tangible results. Rolling out this week in English for U.S. users, the feature leverages cutting-edge AI to make exploration intuitive and conversational, signaling a seismic shift in how we interact with search engines.

Beyond Keywords: Conversational and Visual Discovery

Gone are the days of wrestling with search terms. Now, users can ask questions naturally, like "maximalist design inspiration for my bedroom," and receive rich visual grids in AI Mode. Each result is actionable, with links to dive deeper when an image resonates. Crucially, refinement is seamless: follow up with phrases such as "more options with dark tones and bold prints" to iteratively home in on your vision. This multimodal experience also accepts image uploads or photos as starting points, bridging the gap between imagination and reality.

The magic intensifies in shopping scenarios. Describe needs conversationally, for example "barrel jeans that aren’t too baggy," and AI Mode surfaces curated, shoppable options without manual filtering. As VP of Product Robby Stein notes, "You can simply describe what you’re looking for—like the way you’d talk to a friend." Results draw from Google's Shopping Graph, which holds more than 50 billion product listings, over 2 billion of which are refreshed every hour, so users see current deals, reviews, and availability across global retailers.

The Tech Powerhouse: Gemini and Visual Fan-Out

At its core, this innovation stems from Google's fusion of its Lens-powered visual understanding with Gemini 2.5's multimodal prowess. The breakthrough is a new "visual search fan-out" technique. Unlike standard image recognition, this method runs multiple background queries to analyze subtle details and secondary objects within an image, capturing full context. As Lilian Rincon, VP of Consumer Shopping, explains, it allows AI Mode to "understand the nuance of your natural language question," delivering hyper-relevant results. On mobile, users can even ask follow-up questions about specific images, deepening the interactive experience.
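Google has not published how visual fan-out is implemented, so the following is only a minimal sketch of the general fan-out pattern described above: one request is expanded into several background queries (here, one per object detected in the image), the queries run concurrently, and the results are merged into a single ranking. Every name in it (`Result`, `fan_out_queries`, `visual_fan_out`, `toy_search`, and the example URLs) is hypothetical, and the score-summing merge is an assumption chosen for simplicity, not Google's method.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """A single search hit (hypothetical shape, for illustration only)."""
    url: str
    score: float


def fan_out_queries(user_query, detected_objects):
    """Build the original query plus one sub-query per detected object."""
    queries = [user_query]
    for obj in detected_objects:
        queries.append(f"{user_query} {obj}")
    return queries


def visual_fan_out(user_query, detected_objects, search_fn, top_k=5):
    """Run all sub-queries concurrently and merge hits by summed score."""
    queries = fan_out_queries(user_query, detected_objects)
    with ThreadPoolExecutor(max_workers=len(queries)) as pool:
        result_lists = list(pool.map(search_fn, queries))

    # A URL returned by several sub-queries accumulates a higher total score.
    totals = {}
    for results in result_lists:
        for hit in results:
            totals[hit.url] = totals.get(hit.url, 0.0) + hit.score

    # Sort by descending score; break ties by URL for a stable order.
    ranked = sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))
    return [url for url, _ in ranked[:top_k]]


def toy_search(query):
    """Stand-in search backend: matches index keys that appear in the query."""
    index = {
        "velvet sofa": [Result("a.example/sofa", 0.9)],
        "brass lamp": [
            Result("b.example/lamp", 0.8),
            Result("a.example/sofa", 0.3),
        ],
    }
    hits = []
    for key, results in index.items():
        if key in query:
            hits.extend(results)
    return hits


ranking = visual_fan_out(
    "maximalist bedroom", ["velvet sofa", "brass lamp"], toy_search
)
print(ranking)
```

In this toy run the sofa page is returned by two of the three sub-queries, so it outranks the lamp page. A production system would presumably use a learned ranker rather than summed scores, but the expand, query in parallel, and merge structure is the part the term "fan-out" refers to.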

Why This Matters for Tech and Developers

For the developer community, this leap underscores the growing dominance of multimodal AI in consumer applications. The visual fan-out approach showcases how large language models can evolve beyond text to interpret complex visual data, opening doors for similar innovations in AR, e-commerce APIs, and accessibility tools. However, it also raises questions about computational demands: running several background queries for every image likely multiplies the inference work per search, hinting at cloud cost implications for enterprises adopting similar tech. As visual search becomes mainstream, expect a surge in demand for skills in multimodal AI integration and ethical visual data handling, reshaping everything from UX design to backend optimization.

In an era where discovery is often fragmented, Google's update redefines search as a collaborative, visual dialogue—proving that the future of finding what we need lies not in typing, but in seeing and speaking.

Source: Google Blog
