In a landscape where AI development costs are skyrocketing, LiteAPI emerges as a game-changer, promising up to 50% savings on premium large language models through innovative credit sourcing. By offering a single gateway compatible with the OpenAI API, it simplifies multi-provider management while ensuring top-tier security and performance. Developers and tech leaders can now scale their AI projects without the financial strain, backed by real-world testimonials of dramatic cost reductions.

LiteAPI: Revolutionizing LLM Costs with Seamless Integration and Enterprise-Grade Reliability

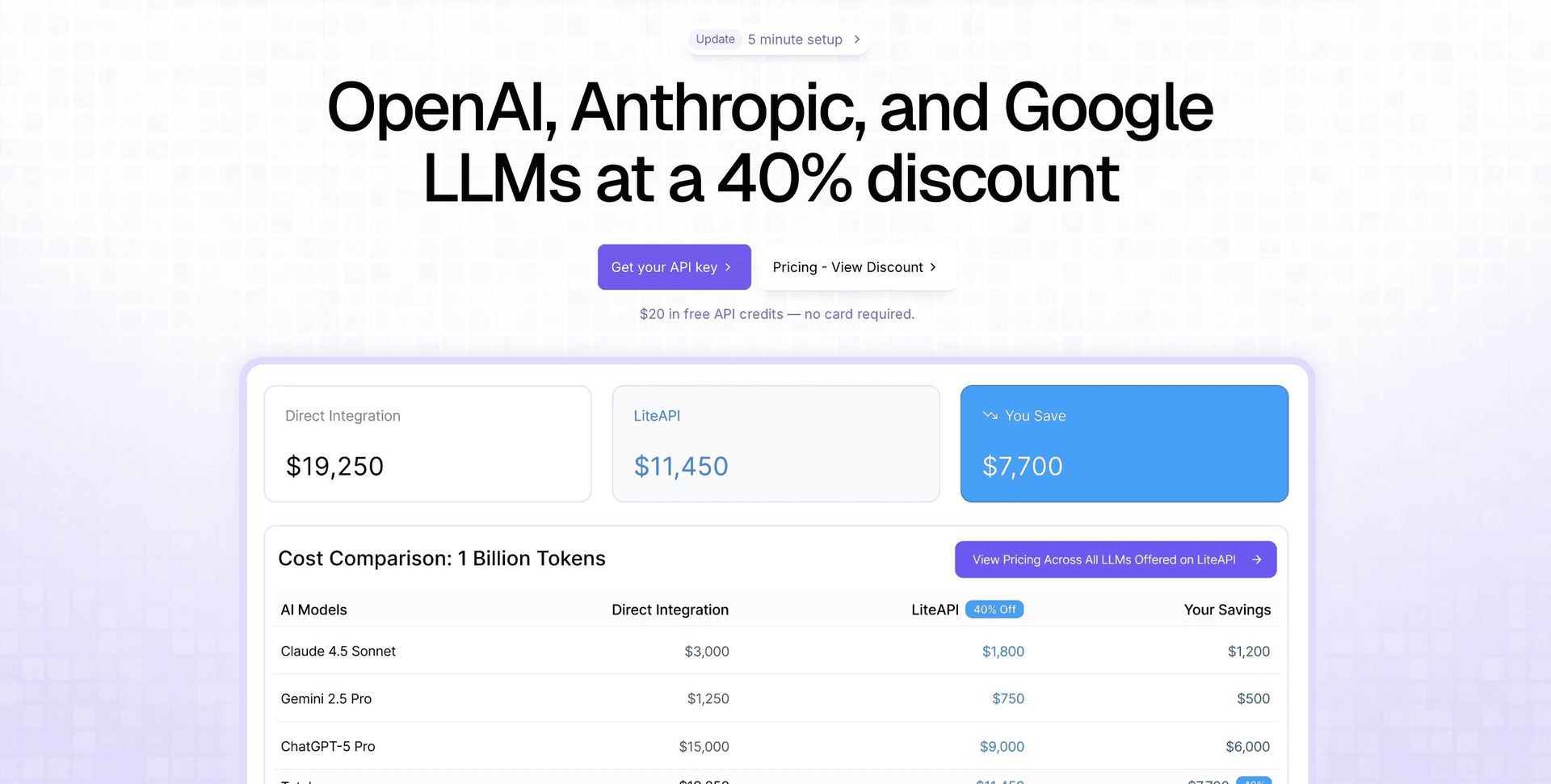

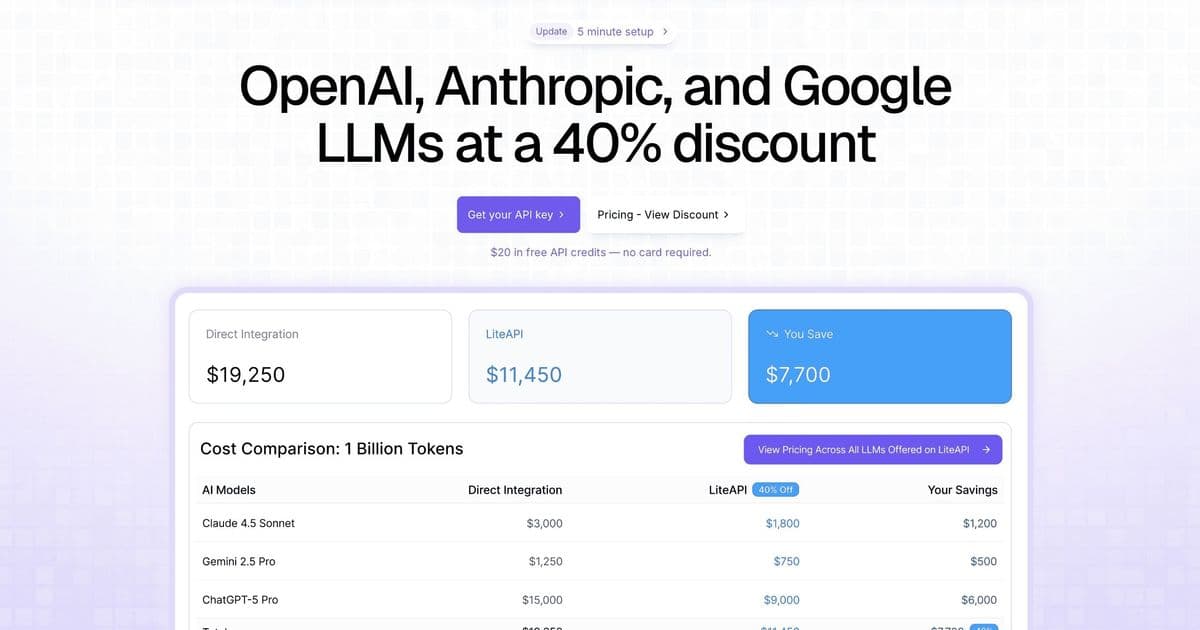

In the fast-evolving world of artificial intelligence, where large language models (LLMs) power everything from chatbots to code generation, one challenge looms large for developers and enterprises: cost. As adoption of models from OpenAI, Anthropic, and Google surges, so do the expenses. Enter LiteAPI, a new platform designed to slash LLM spending by up to 50% while maintaining enterprise-grade performance and ease of use. By acting as a unified gateway to premium providers, LiteAPI not only reduces bills but also streamlines integration, making it a must-consider tool for tech teams looking to optimize their AI workflows.

The Cost Crisis in AI Development

AI isn't cheap. Training and inference costs for LLMs can quickly spiral, especially for teams experimenting with multiple models or scaling applications. According to industry reports, LLM usage can account for a significant portion of cloud budgets, often exceeding expectations as token counts climb. LiteAPI addresses this head-on by securing discounted credits through its venture capital network and cloud partnerships, passing savings directly to users. Unlike broader marketplaces like OpenRouter, LiteAPI focuses exclusively on high-quality, enterprise models, ensuring no compromise on capability for the sake of cost.

The platform's value proposition is clear: cut your LLM spend by 40% or more without changing your codebase. Testimonials from early adopters underscore this impact. Martin Ekstrom reported switching in just five minutes and immediately reducing his team's bill by over a third. Similarly, Marilyn George called it 'the easiest win we’ve had this year,' saving tens of thousands. Cristofer Levin praised the straightforward dashboard and seamless migration, noting unexpected savings on monthly spend.

Seamless Setup and Broad Compatibility

What sets LiteAPI apart is its developer-friendly approach. Setup is as simple as updating your API endpoint—no rewriting code, no matter the language or framework. It's fully compatible with the OpenAI API format, enabling effortless migration for existing projects. This means teams can connect to various LLM providers through a single gateway, tracking requests, tokens, response times, and costs in one place.
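To make the "just update the endpoint" claim concrete, here is a minimal sketch of what an endpoint swap looks like for any OpenAI-compatible gateway. It only builds the HTTP request and sends nothing. The gateway URL, the API keys, and the model name are hypothetical placeholders, since the article does not give LiteAPI's actual endpoint, and `build_chat_request` is an illustrative helper, not part of any SDK.

```python
import json
import urllib.request

# Hypothetical placeholder: the real gateway URL is not given in the article.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-format chat completion request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    messages = [{"role": "user", "content": "Hello"}]
    # Same payload, two different base URLs (keys and model are placeholders).
    direct = build_chat_request(
        "https://api.openai.com/v1", "provider-key", "example-model", messages
    )
    via_gateway = build_chat_request(
        GATEWAY_BASE_URL, "gateway-key", "example-model", messages
    )
    print(direct.full_url)
    print(via_gateway.full_url)
    # The request body is identical; only the URL and the key change.
    print(direct.data == via_gateway.data)
```

Because the request body stays the same, the rest of an application (prompt construction, response parsing) would not need to change under this assumption.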

Key features include:

- Enterprise-Grade Performance: Edge deployment with automatic failover for best-in-class uptime.

- Team Collaboration: Shared API credits empower teams to experiment and build without individual limits.

- Comprehensive Monitoring: Detailed insights into usage across providers help optimize spending and performance.

- Full Model Support: Handles text, vision, embeddings, and function calling, mirroring provider capabilities.
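The monitoring feature above is described only at a high level. As a rough illustration of the kind of per-model accounting involved, the sketch below sums the `usage` fields that OpenAI-format chat responses carry (`prompt_tokens`, `completion_tokens`). It is not LiteAPI's dashboard logic; `summarize_usage` and the sample model names are hypothetical.

```python
from collections import defaultdict


def summarize_usage(responses):
    """Sum request and token counts per model from OpenAI-format response dicts."""
    totals = defaultdict(
        lambda: {"requests": 0, "prompt_tokens": 0, "completion_tokens": 0}
    )
    for resp in responses:
        usage = resp.get("usage", {})
        entry = totals[resp.get("model", "unknown")]
        entry["requests"] += 1
        entry["prompt_tokens"] += usage.get("prompt_tokens", 0)
        entry["completion_tokens"] += usage.get("completion_tokens", 0)
    return dict(totals)


if __name__ == "__main__":
    # Made-up sample responses, trimmed to the fields the function reads.
    sample = [
        {"model": "model-a", "usage": {"prompt_tokens": 10, "completion_tokens": 5}},
        {"model": "model-a", "usage": {"prompt_tokens": 20, "completion_tokens": 15}},
        {"model": "model-b", "usage": {"prompt_tokens": 7, "completion_tokens": 3}},
    ]
    for model, stats in summarize_usage(sample).items():
        print(model, stats)
```

Multiplying these totals by each provider's per-token price would give a cost estimate; prices are left out here because the article does not state any.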

For security-conscious enterprises, LiteAPI employs TLS 1.3 encryption for all traffic and AES-256 for key storage. Crucially, it never logs or stores prompt data, nor uses it for training or analytics, addressing privacy concerns that plague many AI services.
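Teams that want to verify the TLS 1.3 claim from their side can refuse older protocol versions in the client. The sketch below uses Python's standard `ssl` module to do that; it says nothing about how LiteAPI configures its servers, and `make_tls13_context` is a hypothetical helper name.

```python
import ssl


def make_tls13_context():
    """Return a client SSL context that rejects anything older than TLS 1.3."""
    ctx = ssl.create_default_context()  # certificate and hostname checks stay on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


if __name__ == "__main__":
    ctx = make_tls13_context()
    print(ctx.minimum_version)
```

The context can be passed to `urllib.request.urlopen(request, context=ctx)`; the handshake then fails if the server cannot negotiate TLS 1.3.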

Implications for Developers and Tech Leaders

In an era where AI is integral to software development, tools like LiteAPI could democratize access to premium models. Developers no longer need to choose between innovation and budget constraints; they can iterate faster with shared credits and reliable uptime. For tech leaders, the cost transparency and custom discounts for high-volume users (over $50,000/month) offer a scalable path to AI integration.

This isn't just about savings—it's about efficiency. By abstracting provider complexities into a single interface, LiteAPI reduces vendor lock-in risks and fosters a more agile development environment. As AI models evolve, platforms that prioritize both performance and affordability will shape the future of how we build intelligent applications.

As teams push the boundaries of what's possible with LLMs, LiteAPI stands as a pragmatic ally, turning potential cost barriers into opportunities for growth.
