Meta and Hugging Face are standardizing how AI agents see and touch the world with OpenEnv, an open hub and spec for agentic environments. It’s an ambitious bid to turn today’s brittle tool-calling demos into a coherent ecosystem where agents can be trained, evaluated, and deployed against shared, secure sandboxes.

Building the Open Agent Ecosystem: Inside OpenEnv’s Bid to Standardize Agentic Environments

For all the noise around “AI agents,” most real systems today are fragile scripts held together by bespoke glue code, ad hoc APIs, and implicit assumptions. Models hallucinate tools that don’t exist, vendors expose sprawling, un-audited capabilities, and every team quietly reinvents the same sandboxing and orchestration patterns.

Meta and Hugging Face are betting that this mess is not inevitable.

With the launch of the OpenEnv Hub and the OpenEnv 0.1 specification, the two are drawing a sharp line between models and the worlds they act in. Their vision: a shared, open standard for “agentic environments” that define—precisely and transparently—what an agent can see, what it can do, and how those interactions are mediated.

This isn’t just another framework. If it works, OpenEnv becomes the substrate on which serious open agents are trained, evaluated, and deployed.

Source: Original announcement and technical details from Meta & Hugging Face — “Building the Open Agent Ecosystem Together: Introducing OpenEnv” (Hugging Face Blog, Oct 23, 2025).

Why Agentic Environments Matter Now

The core problem OpenEnv tackles is straightforward and overdue:

Modern LLM-based agents are being asked to autonomously handle thousands of tasks across codebases, clouds, CI pipelines, SaaS stacks, and internal systems. A model alone cannot do this. It needs controlled access to tools, APIs, credentials, and execution sandboxes.

Naively exposing those capabilities is:

- Unsafe — too many tools, no isolation, unclear blast radius.

- Opaque — nobody (including the model) has a clear contract of what’s available.

- Unreliable — each team designs its own environment schema; nothing composes.

“Agentic environments” are the counterpoint: constrained, semantically clear contexts that enumerate exactly what an agent can use to complete a task—and nothing more.

Concretely, an agentic environment should provide:

- Clear semantics: a well-defined task space, action space, and observation model.

- Sandboxed execution: isolation boundaries, resource limits, and safety guarantees.

- Tool/API integration: authenticated access to real systems, but on principled, inspectable rails.

OpenEnv’s thesis is that this abstraction needs to be:

- Standardized, so tools interoperate.

- Open, so the community can audit, extend, and reuse.

- Portable, so the same environment powers both training and deployment.

The OpenEnv Hub: A Registry for Agent Worlds

OpenEnv arrives in two parts: a spec and a hub.

The OpenEnv Hub, hosted on Hugging Face, is a shared catalog of environments that conform to the OpenEnv spec. Any compatible environment you publish there automatically gains interactive and programmatic capabilities:

- Run as a human-in-the-loop playground: developers can step through tasks manually.

- Enlist a model: point an LLM-based agent at the environment and watch it attempt tasks.

- Inspect capabilities: see which tools are exposed, what observations look like, and how interactions are structured.

These environments are not marketing demos; they’re artifacts you can:

- Use in RL post-training.

- Reuse across benchmarks and research projects.

- Deploy in production as the exact same sandbox your models were trained in.

By turning environments into first-class, versioned, shareable units, OpenEnv squarely targets one of the most painful fractures in the current ecosystem: the gap between carefully curated “research environments” and the heterogeneous chaos of production.

The Spec: A Minimal, Composable Primitive

Underneath the hub is the OpenEnv 0.1 Spec (released as an RFC), which leans on a familiar but powerful abstraction: environments that implement reset(), step(), and close().

This choice is deceptively important: by echoing battle-tested RL interfaces while layering in modern agent needs, OpenEnv aims to be recognizable to RL practitioners and flexible enough for complex tool-using systems.
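To make that lifecycle concrete, here is a minimal sketch of an environment with the same three-method shape. It is not the OpenEnv API: `EchoEnvironment`, `StepResult`, `run_episode`, and the reward rule are hypothetical names invented for illustration, and only the method names `reset`, `step`, and `close` come from the description above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepResult:
    """Hypothetical container for what a single step returns."""
    observation: str
    reward: float
    done: bool


class EchoEnvironment:
    """Toy environment: echoes each action back and ends after max_steps."""

    def __init__(self, max_steps: int = 3) -> None:
        self.max_steps = max_steps
        self.steps_taken = 0
        self.closed = False

    def reset(self) -> str:
        """Start a fresh episode and return the initial observation."""
        self.steps_taken = 0
        return "ready"

    def step(self, action: str) -> StepResult:
        """Apply one action and report observation, reward, and done flag."""
        if self.closed:
            raise RuntimeError("environment is closed")
        self.steps_taken += 1
        done = self.steps_taken >= self.max_steps
        # Assumed reward rule for this toy task: reward equals message length.
        return StepResult(f"echo: {action}", float(len(action)), done)

    def close(self) -> None:
        """Release resources; a real sandbox might stop its container here."""
        self.closed = True


def run_episode(env: EchoEnvironment, actions: List[str]) -> float:
    """Drive one episode with a fixed action list and return the total reward."""
    env.reset()
    total = 0.0
    for action in actions:
        result = env.step(action)
        total += result.reward
        if result.done:
            break
    return total
```

A training loop or a human stepping through tasks would both sit where `run_episode` sits here, which is the appeal of keeping the interface this small.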

At a high level, the RFCs under review define:

RFC 001 — Core entities and relationships

- Formalizes how Environment, Agent, Task, and related concepts fit together.

- Encourages a consistent mental model across libraries and frameworks.

RFC 002 — Environment interface, packaging, and isolation

- Specifies the environment lifecycle (reset, step, close).

- Defines packaging formats and communication channels, often containerized (e.g., Docker), to ensure reproducible, portable sandboxes.

RFC 003 — MCP tools via environment boundaries

- Integrates with the Model Context Protocol (MCP) and similar tool abstractions.

- Ensures that tools are surfaced through environment-defined isolation layers, not bolted on ad hoc.
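The idea of surfacing tools only through the environment can be sketched as a small gate that owns the tool table. This is an illustration under assumptions, not RFC 003 itself: `ToolBoundary`, `ToolNotExposed`, and `read_file` are hypothetical, and no MCP client library is used.

```python
from typing import Callable, Dict, List


class ToolNotExposed(Exception):
    """Raised when an agent requests a tool the environment does not expose."""


class ToolBoundary:
    """Hypothetical gate: the environment owns the tools, the agent only sees names."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., str]] = {}

    def expose(self, name: str, fn: Callable[..., str]) -> None:
        """Register a tool under the name the agent will see."""
        self._tools[name] = fn

    def list_tools(self) -> List[str]:
        """Return the exposed tool names in a stable order."""
        return sorted(self._tools)

    def call(self, name: str, **arguments: str) -> str:
        """Invoke an exposed tool, or refuse if it was never registered."""
        if name not in self._tools:
            raise ToolNotExposed(name)
        return self._tools[name](**arguments)


def read_file(path: str) -> str:
    # Stand-in for a real tool: returns a canned string instead of touching disk.
    return f"contents of {path}"


boundary = ToolBoundary()
boundary.expose("read_file", read_file)
```

Because every call goes through `call`, a tool the model invents has nowhere to land except the refusal path.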

RFC 004 — Unified action schema for tools and CodeAct

- Normalizes how agents express actions, including classic tool calls and code-execution paradigms.

- Lets different kinds of agent architectures (tool callers, code planners, hybrid systems) operate on a common action vocabulary.
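One way to picture a common action vocabulary is a single union type that covers both a tool call and a block of code to execute. The sketch below is an assumption about what such a schema could look like, not the schema RFC 004 defines; `ToolCallAction`, `CodeAction`, and `describe` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Union


@dataclass(frozen=True)
class ToolCallAction:
    """A classic tool call: a tool name plus keyword arguments."""
    tool_name: str
    arguments: Dict[str, Any] = field(default_factory=dict)


@dataclass(frozen=True)
class CodeAction:
    """A code-execution action: source text for the sandbox to run."""
    source: str


# Both agent styles emit the same top-level type.
Action = Union[ToolCallAction, CodeAction]


def describe(action: Action) -> str:
    """Render any action as one log line, whichever variant it is."""
    if isinstance(action, ToolCallAction):
        args = ", ".join(f"{k}={v!r}" for k, v in sorted(action.arguments.items()))
        return f"tool:{action.tool_name}({args})"
    if isinstance(action, CodeAction):
        return f"code:{len(action.source.splitlines())} line(s)"
    raise TypeError(f"unsupported action: {action!r}")
```

An environment written against `Action` does not need to know whether the agent upstream is a tool caller, a code planner, or a hybrid.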

For developers, the promise is simple: implement the spec once, and:

- Your environment works locally via Docker.

- It runs on the OpenEnv Hub.

- It interoperates with supported RL and agent frameworks without bespoke adapters.

Where OpenEnv Fits in the Post-Training Stack

OpenEnv isn’t trying to replace RL libraries, orchestration frameworks, or inference servers. It’s the connective tissue between them.

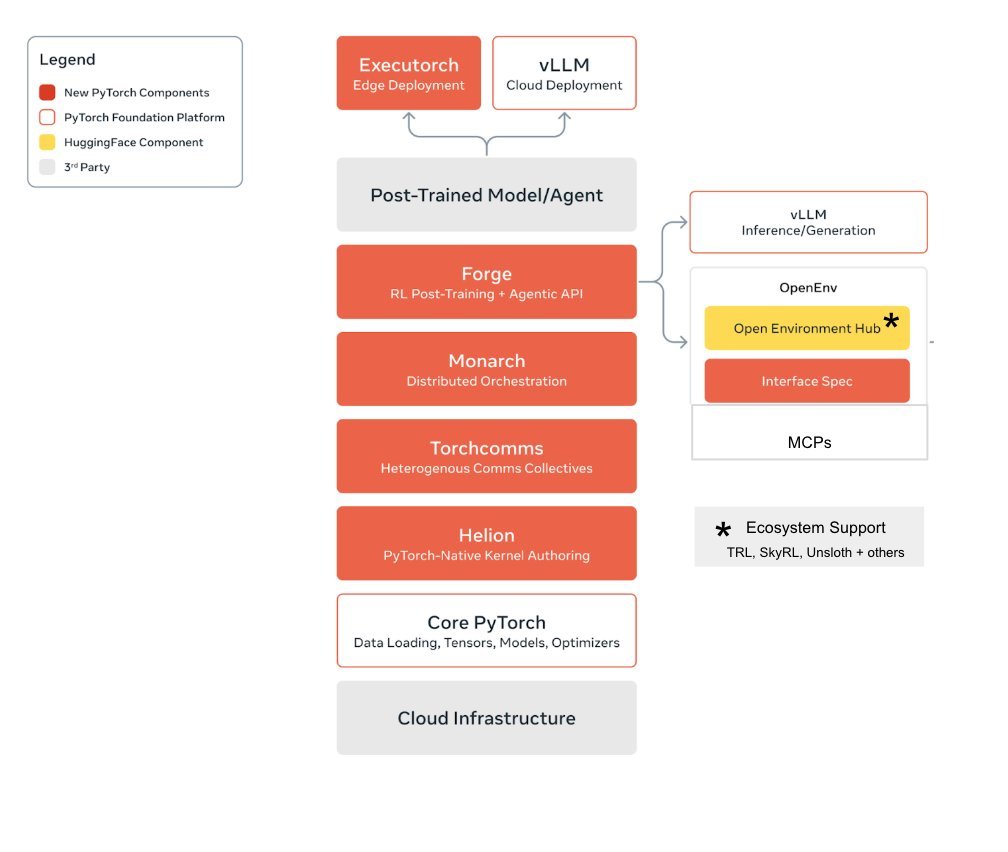

The launch slots directly into a growing open-source stack emerging around Meta-PyTorch and Hugging Face:

- TRL, TorchForge, VeRL, SkyRL, Unsloth, and others for RL post-training.

- Orchestrators and tool frameworks that manage real-world actions.

- Hosting platforms and CI/CD systems that expect reproducible, inspectable workloads.

With OpenEnv:

- RL libraries can consume standardized environments directly.

- Researchers can benchmark methods on shared, realistic environments (not contrived toys).

- Production teams can finally align training and deployment: the same environment object defines both.

This is a strong opinion about architecture: environments are the unit of agent capability, not opaque, one-off plugins.

Concrete Use Cases Developers Should Care About

The initial OpenEnv ecosystem is explicitly tuned for practitioners. Some high-value patterns:

RL Post-Training for Agents

- Pull environments from the Hub (e.g., code editing, API orchestration, document workflows).

- Train agents using TRL, TorchForge+Monarch, VeRL, SkyRL on those environments.

- Directly compare strategies because they share an underlying, open environment definition.

Environment Creation as a First-Class Artifact

- Define your internal environment that encapsulates:

  - Which repos an agent may edit.

  - Which CI endpoints it may trigger.

  - Which cloud APIs (and scopes) it may call.

- Validate it interactively on the Hub.

- Hand it to partners, customers, or the community with a clear contract and no credential leakage.
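The three kinds of limits listed above (repos, CI endpoints, cloud API scopes) could be captured in a small declarative policy object. This is a sketch under assumptions: `EnvironmentPolicy` and every repo, endpoint, and API name below are made up for illustration and are not part of the OpenEnv spec.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class EnvironmentPolicy:
    """Hypothetical capability contract for an internal environment."""
    editable_repos: FrozenSet[str]
    ci_endpoints: FrozenSet[str]
    cloud_scopes: Dict[str, FrozenSet[str]]  # API name -> allowed scopes

    def may_edit(self, repo: str) -> bool:
        return repo in self.editable_repos

    def may_trigger(self, endpoint: str) -> bool:
        return endpoint in self.ci_endpoints

    def may_call(self, api: str, scope: str) -> bool:
        # Unknown APIs fall back to an empty scope set, so they are denied.
        return scope in self.cloud_scopes.get(api, frozenset())


# Illustrative values only; none of these names refer to real systems.
policy = EnvironmentPolicy(
    editable_repos=frozenset({"acme/web-frontend"}),
    ci_endpoints=frozenset({"ci/run-unit-tests"}),
    cloud_scopes={"object-storage": frozenset({"read"})},
)
```

Note that the policy names capabilities but holds no secrets, which is what makes it safe to hand to someone outside the team.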

Reproducing SOTA Methods

- Plug in environments modeled after real evaluations like FAIR’s Code World Model or complex tool-use scenarios.

- Share exact setups so others can reproduce results, rather than chasing incomplete “we used some tools” descriptions.

Train-once, Deploy-on-same-World

- Use the exact same OpenEnv definition for training and inference.

- Reduce the class of bugs where the model was trained with one tool schema and deployed with another.

- Strengthen safety reviews by centralizing policy and capability definition in the environment.
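A simple guard against training with one tool schema and deploying with another is to fingerprint the schema on both sides and compare. The sketch below assumes the schema can be represented as a JSON-serializable dictionary; `schema_fingerprint` and `assert_same_world` are hypothetical helpers, not OpenEnv functions.

```python
import hashlib
import json
from typing import Any, Dict


def schema_fingerprint(tool_schema: Dict[str, Any]) -> str:
    """Hash a canonical JSON form so key order does not change the result."""
    canonical = json.dumps(tool_schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def assert_same_world(training_schema: Dict[str, Any],
                      serving_schema: Dict[str, Any]) -> None:
    """Raise if the deployed tool schema differs from the one used in training."""
    if schema_fingerprint(training_schema) != schema_fingerprint(serving_schema):
        raise ValueError("tool schema differs between training and deployment")
```

Storing the fingerprint next to a model checkpoint would let a deployment pipeline run this check before serving traffic.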

For teams already investing in agent frameworks, this is leverage. Instead of inventing yet another closed environment spec, you get a path that interoperates with the broader open-source ecosystem—and is likely to be integrated by default into emerging tools.

Security, Safety, and the Semantics of Trust

One of the more consequential aspects of OpenEnv is its stance on boundaries.

By construction, an OpenEnv environment is meant to:

- Encapsulate credentials instead of scattering them in prompts or orchestrator configs.

- Confine execution (e.g., via containers, restricted file systems, network policies).

- Provide explicit semantics: what counts as an allowed action, what success looks like, what observations reveal.

In a world drifting toward autonomous systems that touch source code, infrastructure, and sensitive data, this is not a nice-to-have. It’s the line between:

- “The model did something weird” and

- “The environment allowed this specific action under these reviewed constraints.”
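That difference can be made tangible with an environment that records a decision for every action and keeps its credential to itself. This is a minimal sketch under assumptions: `AuditedEnvironment`, `Decision`, and the action names are hypothetical, and the token is a placeholder that is never sent anywhere.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Decision:
    """One audit entry: what was requested and why it was allowed or denied."""
    action: str
    allowed: bool
    reason: str


class AuditedEnvironment:
    """Hypothetical environment that gates actions and logs each decision."""

    def __init__(self, allowed_actions: Set[str], api_token: str) -> None:
        self._allowed = set(allowed_actions)
        # The credential lives inside the environment and is never returned
        # in observations or written to the audit log.
        self._api_token = api_token
        self.log: List[Decision] = []

    def step(self, action: str) -> str:
        """Allow only reviewed actions and record the outcome either way."""
        if action not in self._allowed:
            self.log.append(Decision(action, False, "not in reviewed action set"))
            return "denied"
        self.log.append(Decision(action, True, "in reviewed action set"))
        # A real environment would use self._api_token here to call a backend.
        return f"ok: {action}"
```

After an episode, `env.log` answers the reviewer's question directly: which actions were attempted, and which ones the environment permitted.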

If OpenEnv gains traction, expect it to become a natural anchor for:

- Policy review and compliance sign-off.

- Red-teaming and safety evaluations.

- Marketplace-like exchanges of verified environments for specialized domains.

OpenEnv as a Community Standard, Not a Walled Garden

Crucially, OpenEnv is being launched as an open RFC, not a closed product spec.

Today, developers can:

- Explore the OpenEnv Hub on Hugging Face and interact with seeded environments.

- Install from PyPI and run through a full end-to-end example in the provided notebook (including Colab support).

- Experiment with Docker-based local environments.

- Join community channels (Discord, conferences, meetups) to shape the direction of the spec.

This open posture matters. The last thing the ecosystem needs is another proprietary agent DSL. By putting the spec, reference implementations, and hub in the open, Meta and Hugging Face are inviting:

- Framework authors to integrate natively.

- Platform vendors to support OpenEnv as a first-class deployment target.

- Researchers to treat OpenEnv environments as shareable scientific artifacts.

For an ecosystem already coalescing around tools like TRL, TorchForge, and MCP, OpenEnv is a natural next convergence point.

From Hype Agents to Hard Infrastructure

The agent story so far has largely been UI and hype: assistants that “can use tools” in ways that don’t survive contact with real infrastructure, real policies, or real teams.

OpenEnv is a pivot away from that.

By crystallizing environments as auditable, reusable, and interoperable units, Meta and Hugging Face are asking the community to treat agents the way we treat services and workloads: defined by contracts, constrained by sandboxes, and tested against shared standards.

If you’re building serious AI systems—whether internal code agents, autonomous evaluators, or complex multi-tool workflows—the question is no longer whether you’ll define an environment. It’s whether you’ll define it alone, or on top of a common, open substrate that others can plug into.

OpenEnv is an argument for the latter. And it’s an argument that, for once in the agent hype cycle, feels like infrastructure.
