OpenAI has disabled ChatGPT's 'discoverable chats' feature after users accidentally made private conversations public and searchable on Google. The incident highlights critical flaws in privacy interface design for AI applications operating at billion-user scale. It follows similar controversies at Meta AI, raising urgent questions about default settings in conversational AI platforms.

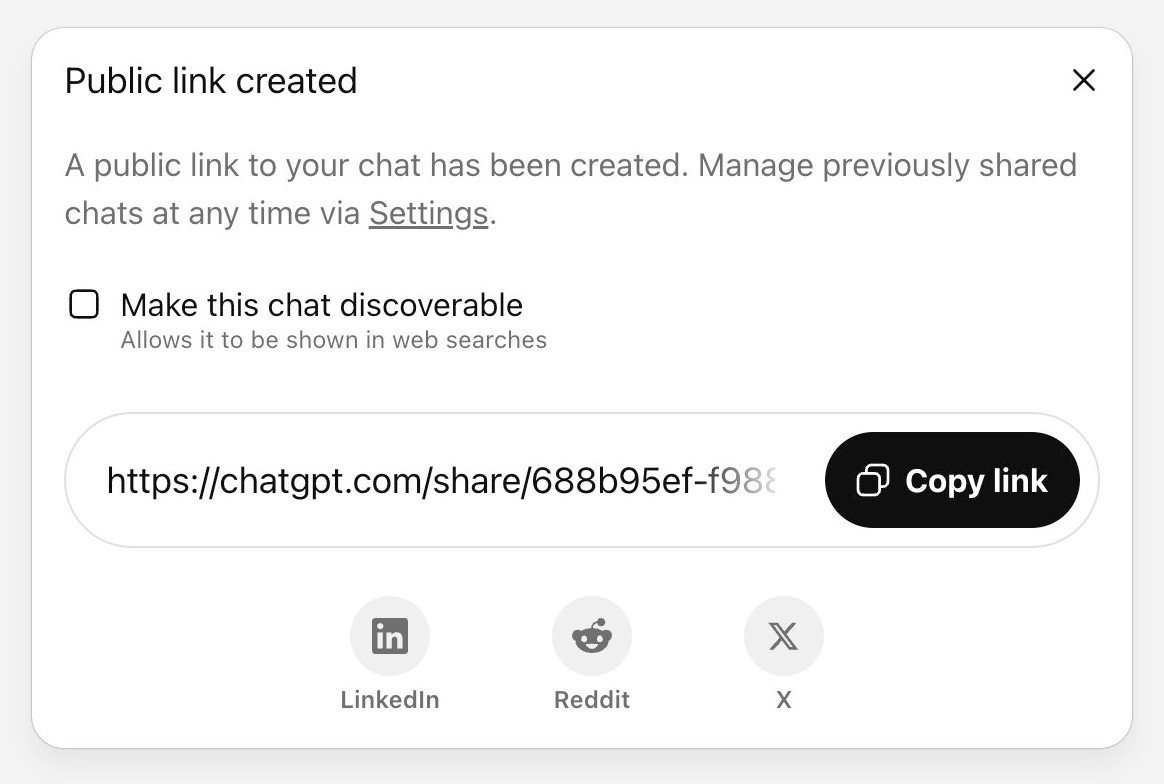

This week OpenAI urgently pulled the discoverability option from ChatGPT's conversation-sharing feature after sensitive user chats began appearing in Google search results. The "make this chat discoverable" toggle was intended to let users publicly share helpful discussions. Instead, when users misunderstood the setting, it exposed private conversations containing medical details, relationship advice, and confidential work information.

The now-removed 'discoverable' toggle in ChatGPT's interface. (Source: Simon Willison)

The fallout was swift: searches for site:chatgpt.com revealed deeply personal exchanges, prompting coverage from TechCrunch, TechRadar, and PCMag. OpenAI CISO Dane Stuckey confirmed on Twitter: "We think this feature introduced too many opportunities for folks to accidentally share things they didn’t intend to."

Why Users Got Trapped

Technical audiences might dismiss this as user error, but the interface violated fundamental UX principles:

- Jargon overload: "Discoverable" is industry jargon that means little to most of ChatGPT's billion-plus users

- Incomplete consequences: The description "Allows it to be shown in web searches" doesn't clarify who can see it or how easily

- Attention blindness: Users focus on completing their task, not on reading dialogs, especially when AI interactions feel conversational

As Simon Willison observed: "When your users number in the millions, some will randomly click things without understanding the consequences."

The Meta AI Parallel

This isn't an isolated case. Meta AI faces similar problems with its "Post to feed" button, where even clear microcopy ("Visible to anyone on Facebook") fails to prevent mistakes. Futurism reported users were "publicly blasting their humiliating secrets" despite the warning. Unlike OpenAI, Meta retains the feature, prioritizing engagement over privacy safeguards.

Developer Takeaways

- Assume zero literacy: For mass-market apps, design for users unfamiliar with web indexing or privacy models

- Test extreme cases: How would this feature break if 0.1% of users misunderstood it? With a billion-plus users, that is more than a million incidents

- Default to private: Public sharing should require deliberate multi-step consent, not a single checkbox
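The "default to private" and "multi-step consent" takeaways can be made concrete with a small model. The following is a minimal sketch under stated assumptions, not how ChatGPT or Meta AI actually implement sharing. The class `SharedChat`, the `Visibility` states, the confirmation phrase "make public", and the helper `expected_misunderstandings` are all hypothetical names chosen for illustration. The `X-Robots-Tag: noindex` HTTP header is a real mechanism that asks search engines not to index a page. Its use here is an assumed design choice.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    # Hypothetical visibility levels for a shared chat.
    PRIVATE = "private"            # only the owner can see the chat
    LINK_ONLY = "link_only"        # anyone with the URL, but not indexed
    DISCOVERABLE = "discoverable"  # may appear in web search results


@dataclass
class SharedChat:
    chat_id: str
    # Default to private: nothing is shared until the user acts.
    visibility: Visibility = Visibility.PRIVATE

    def create_link(self) -> None:
        """Step 1: share by link only. The page is still marked noindex."""
        if self.visibility is Visibility.PRIVATE:
            self.visibility = Visibility.LINK_ONLY

    def make_discoverable(self, acknowledged_search_exposure: bool,
                          confirmation_text: str) -> bool:
        """Step 2: requires an existing link, an explicit acknowledgement,
        and a typed confirmation, rather than a single checkbox."""
        if self.visibility is not Visibility.LINK_ONLY:
            return False  # cannot jump straight from private to searchable
        if not acknowledged_search_exposure:
            return False
        # Hypothetical confirmation phrase the user must type.
        if confirmation_text.strip().lower() != "make public":
            return False
        self.visibility = Visibility.DISCOVERABLE
        return True

    def response_headers(self) -> dict[str, str]:
        """HTTP headers for the shared page. Anything short of an explicit
        opt-in asks search engines not to index it."""
        if self.visibility is Visibility.DISCOVERABLE:
            return {}
        return {"X-Robots-Tag": "noindex"}


def expected_misunderstandings(users: int, error_rate: float) -> int:
    """Back-of-the-envelope count of users who misread a setting."""
    return round(users * error_rate)


if __name__ == "__main__":
    chat = SharedChat("abc123")
    print(chat.make_discoverable(True, "make public"))  # False: no link yet
    chat.create_link()
    print(chat.make_discoverable(True, "make public"))  # True after both steps
    print(expected_misunderstandings(1_000_000_000, 0.001))  # 1000000
```

In this design, searchability is a separate, later state that a user can reach only after sharing a link, and the indexing header defaults to "noindex". A single mis-click therefore cannot make a chat searchable. The last line of the usage example spells out the scale arithmetic: 0.1% of one billion users is one million people.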

While OpenAI's removal was necessary, the deeper issue remains: conversational AI interfaces create unprecedented intimacy while inheriting legacy web sharing models. As Willison notes, when users perceive chatbots as private confidants, the cognitive dissonance of suddenly encountering a "share publicly" option can be catastrophic. The industry must move beyond checkboxes toward contextual privacy that matches user expectations, before regulatory intervention forces clumsy solutions.
