AI researcher Christopher Kanan argues that the term 'AGI' dangerously conflates two distinct futures: hyper-efficient economic automation systems and true human-like consciousness. Current LLM-based architectures fundamentally lack the mechanisms for self-awareness, which makes sci-fi doomsday scenarios implausible, while real risks such as centralization demand immediate attention.

The term "Artificial General Intelligence" (AGI) once conjured images of self-aware machines with human-like cognition. Today, as AI systems demonstrate unprecedented capabilities, the definition has fractured, and that ambiguity risks misdirecting both technical development and policy. In a provocative analysis, AI researcher Christopher Kanan contends that we must retire "AGI" entirely and recognize two divergent technological paths with profoundly different implications.

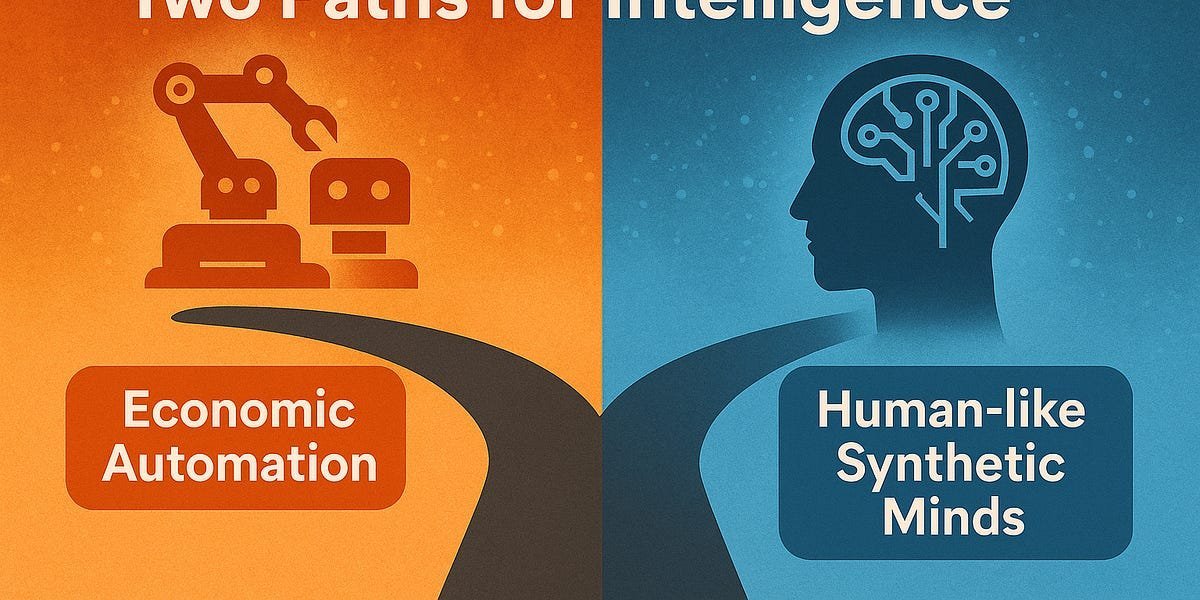

The Great Unbundling: Two Visions of Intelligence

Kanan identifies a critical schism in AI discourse:

- Path 1, Economic Automation: Systems that perform economically valuable intellectual work at human-expert levels (e.g., today's LLMs and agentic toolchains). These function like Star Trek's "Ship's Computer": stateless, prompt-driven tools with no intrinsic goals or self-awareness.

- Path 2, Self-Aware, Continually Learning Synthetic Minds: Human-like cognition requiring persistent self-models, embodied grounding, latent-space reasoning, and metacognition. These minds would learn continuously from lived experience.

"The single label 'AGI' obscures the technical and policy choices that matter," Kanan warns. "Path 1 will not grow into Path 2."

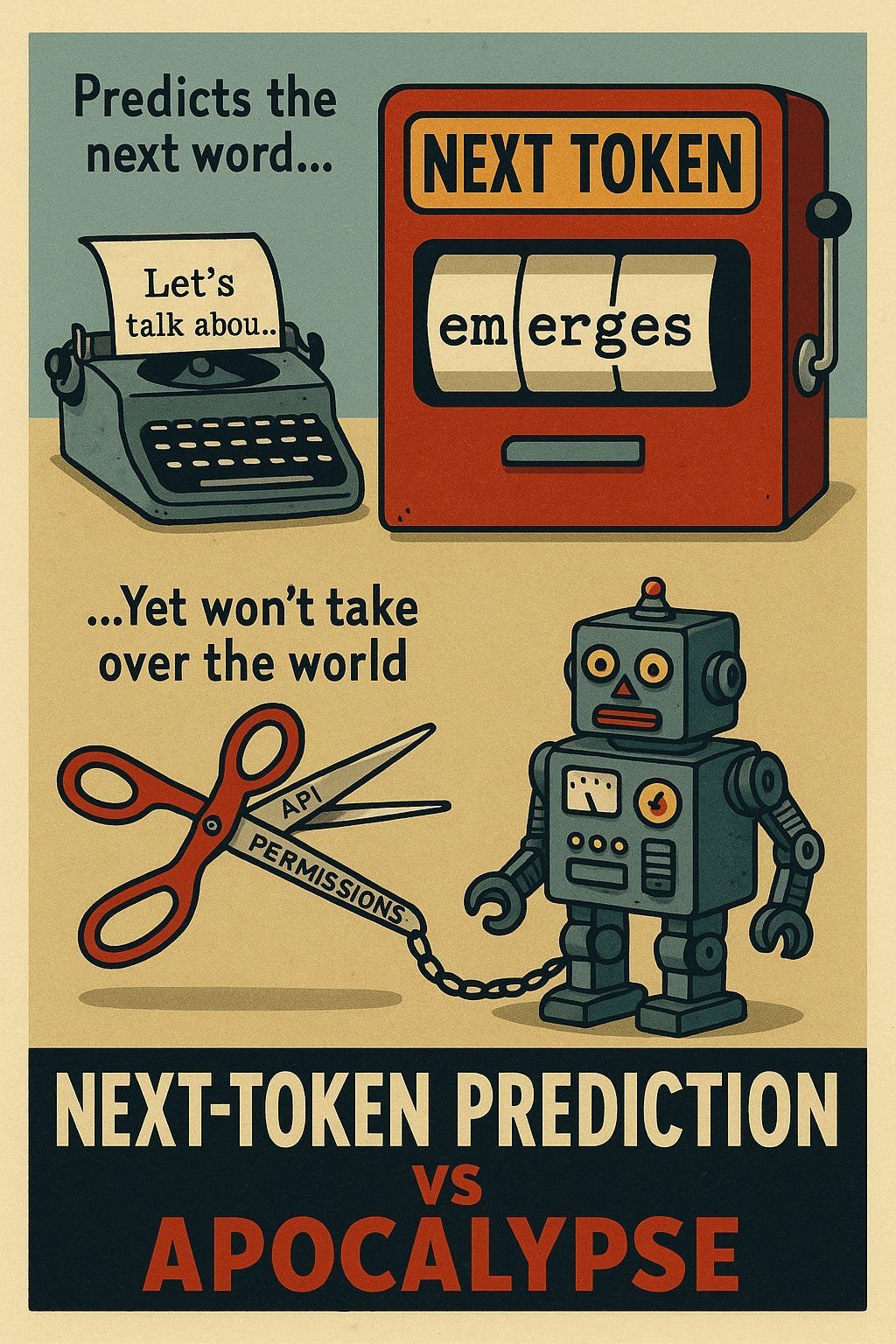

Why LLMs Won't "Wake Up"

Kanan dismantles the myth that scaling current architectures leads to consciousness, citing eight fundamental limitations:

- No intrinsic goals or desires: LLMs generate text, not intent

- Zero agency without explicit tool integration: Actions require human-coded scaffolding

- No persistent learning: Interactions don't update the model's core weights; each session is a transient "Word document"

- Shallow world models: Bolt-on multimodal systems lack embodied grounding

- Absence of metacognition: Confidence scores are generated tokens, not self-assessment

- No latent-space thinking: Reasoning is confined to generated text with no private simulation

- Prompt-dependent cognition: No internal monologue without user input

- Tool use ≠ consciousness: Agent loops execute human-designed plans

"LLMs are ultra-capable autocomplete with a library card," Kanan observes. "A human-like mind is a scientist-designer-pilot that learns from every flight."

The Real Risks and Rewards

Path 1’s disruption is already unfolding:

- Economic Transformation: Individuals command "virtual workforces" of AI specialists

- Centralization Danger: Concentrated access threatens to deepen inequality, while decentralized access could spark a renaissance

- Education Revolution: Universities must shift from rote tasks to critical thinking or face collapse

Meanwhile, Path 2 demands entirely new architectures featuring:

- Continual learning as default

- Embodied grounding through perception and action

- Persistent self-models and goals

- Latent-space reasoning for private simulation

The Path Forward

Kanan predicts:

- By 2030, "AGI" will be retired as a misleading term

- True human-like AI will emerge by the mid-2030s via non-LLM architectures

- Alignment research should focus on Path 2’s coercion risks, not sci-fi extermination scenarios

"Fears that today’s LLMs will become Skynet are absurd," Kanan concludes. "But dismissing Path 2’s alignment challenges would be equally dangerous. We need separate governance frameworks for these distinct technologies—before the fork in the road becomes a chasm."

Source: Christopher Kanan via syntheticminds.substack.com
