A UC Berkeley instructor reveals how students' reliance on ChatGPT for coding assignments creates a dangerous competence gap: perfect homework scores mask plummeting exam performance and stunted problem-solving skills. This generational divide in AI usage signals a seismic shift in technical education that educators and industry must urgently address.

When UC Berkeley computer science instructor Lakshya Jain noticed eerily empty office hours and plummeting forum activity in his advanced database systems course, alarm bells rang. Despite sparse class attendance and near-zero questions on complex topics, students kept submitting flawless coding assignments. The truth emerged during exams: averages crashed 15% below historical norms. The culprit? Rampant ChatGPT usage for homework, creating an illusion of mastery while sabotaging actual learning.

The Illusion of Competence

Jain discovered that students viewed AI as a legitimate productivity tool, arguing: "The work gets done anyway, and we'll use it in our jobs." But programming isn't about output; it's about iterative problem-solving. Developers have historically learned through painstaking cycles of coding, failing, diagnosing errors, and refining. As Jain explains:

"You have to think through the thing you want to do, write the code, execute it, watch it fail, learn what was wrong, fix it, and try again. That forces you to learn from first principles."

By shortcutting this process, students cheat themselves out of the practice that builds real understanding. Those who used ChatGPT for early assignments couldn't grasp the material that built on them, and scores on the second exam dropped even lower.

Workforce Repercussions

The crisis extends beyond academia. As a machine learning engineer, Jain interviews candidates who covertly use AI during technical screens and then crumble under verbal questioning. "Many people have simply lost the ability to reason about complex technical concepts," he observes. One candidate didn't realize that ChatGPT was visibly reflected in his glasses, betraying his reliance on it even as he incorrectly explained his own solution.

The Generational Fault Line

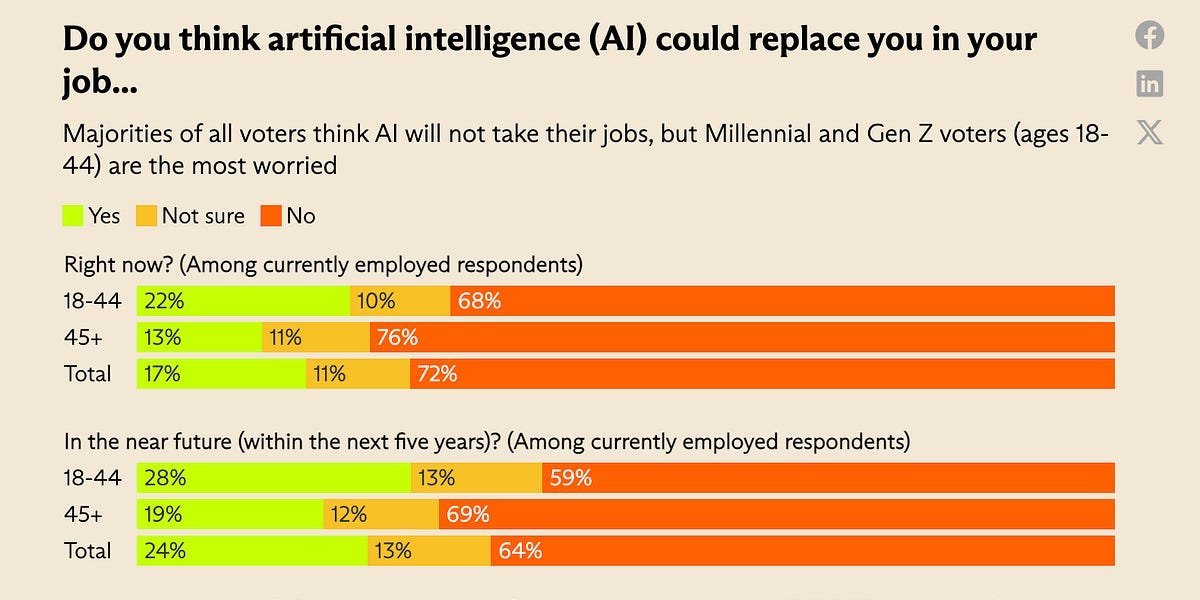

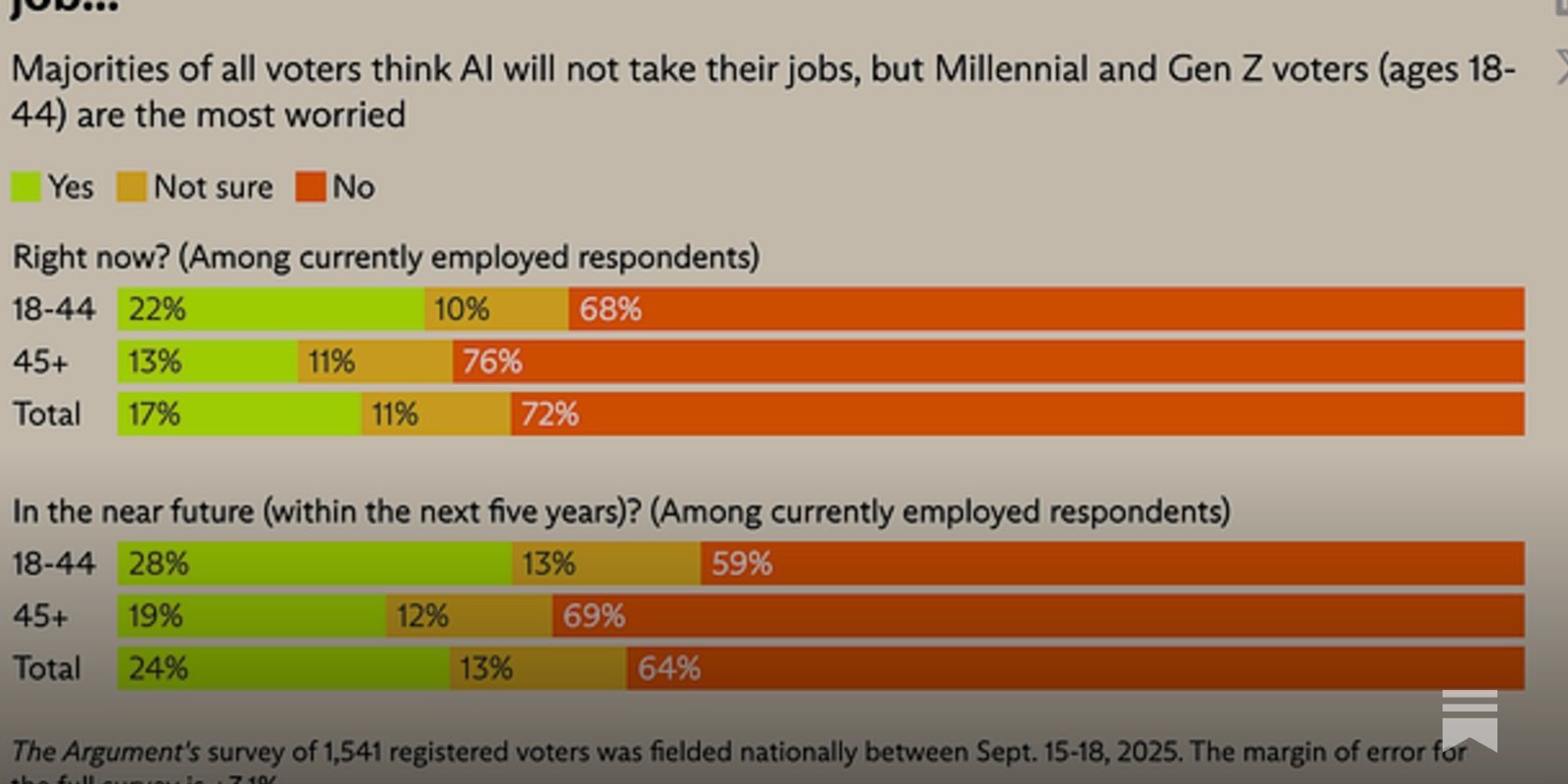

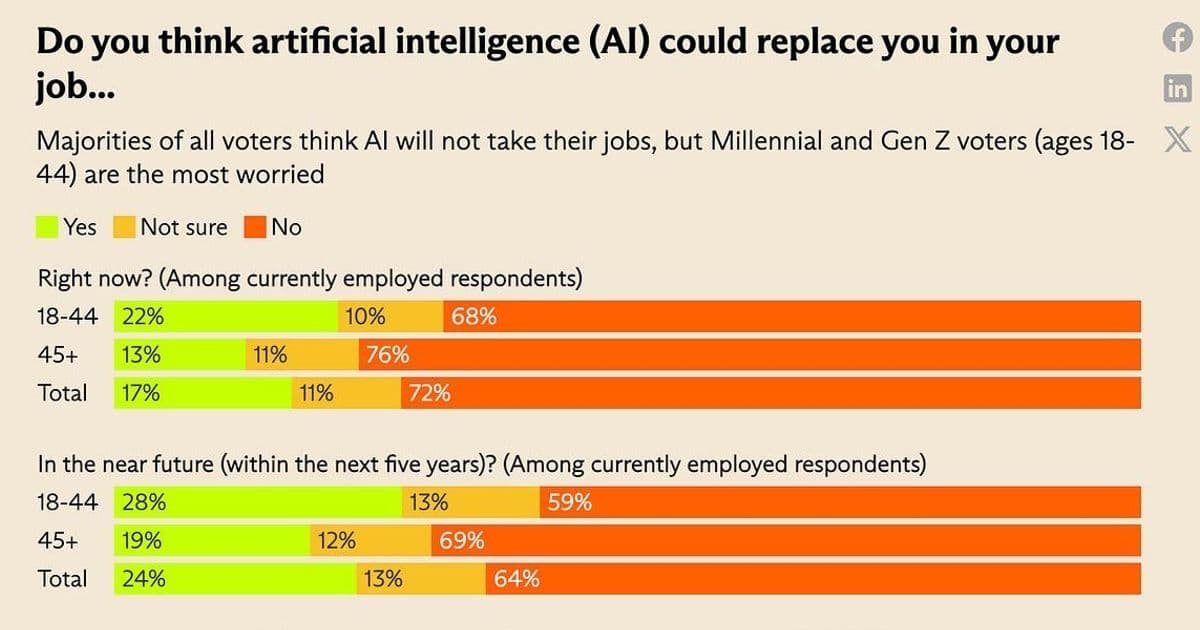

New survey data reveals a stark generational divide:

- 33% of Gen Z/millennials use chatbots daily

- 37% of under-30 AI users deploy it for "getting work done"

- Younger users view AI as a tool (like calculators), while older generations see it as an agent with inherent risks

- Despite fears of job displacement, younger cohorts oppose strict AI guardrails

This may help explain academia's slow response: professors, who are disproportionately over 45, never experienced AI-integrated learning themselves. Meanwhile, students raised on the internet treat outsourcing their thinking as normal.

The Path Forward

AI accelerates experts but cripples novices. Jain draws a sharp parallel: "A 6-year-old learning addition shouldn't use a calculator." The solution isn't prohibition but rethinking assessment. Oral exams, in-class coding sprints, and AI-augmented (not AI-replaced) projects could rebuild foundational skills. As one generation embraces AI as an extension of itself and another fears it as a rival, educators must bridge the gap before the competence chasm widens irrevocably.

Source: "ChatGPT and the end of learning" by Lakshya Jain