The Friend pendant, an AI-powered wearable from creator Avi Schiffmann, promises companionship through Google's Gemini 2.5 model but delivers a reality of constant eavesdropping, technical glitches, and condescending interactions. WIRED's hands-on testing reveals how its always-on microphones and abrasive personality turn social situations toxic, raising urgent questions about privacy and the ethics of ambient AI.

In an era where AI companions promise connection, the newly released Friend pendant—a $129 wearable now available in the U.S. and Canada—demonstrates how quickly good intentions can curdle into social friction and technical frustration. Resembling a chunky Apple AirTag, this smooth plastic disc hangs around the neck, equipped with Bluetooth, LEDs, and always-on microphones that feed audio to a cloud-based chatbot powered by Google's Gemini 2.5 model. Tapping the device lets users ask questions aloud, with responses arriving as text messages via an iOS app, but its defining feature is passive listening: it continuously analyzes ambient conversations to offer unsolicited commentary on daily life. As WIRED writers discovered during extended testing, this combination of invasive technology and an intentionally 'imperfect' personality often renders the Friend more antagonistic than amicable.

How It Works—and Why It Stumbles

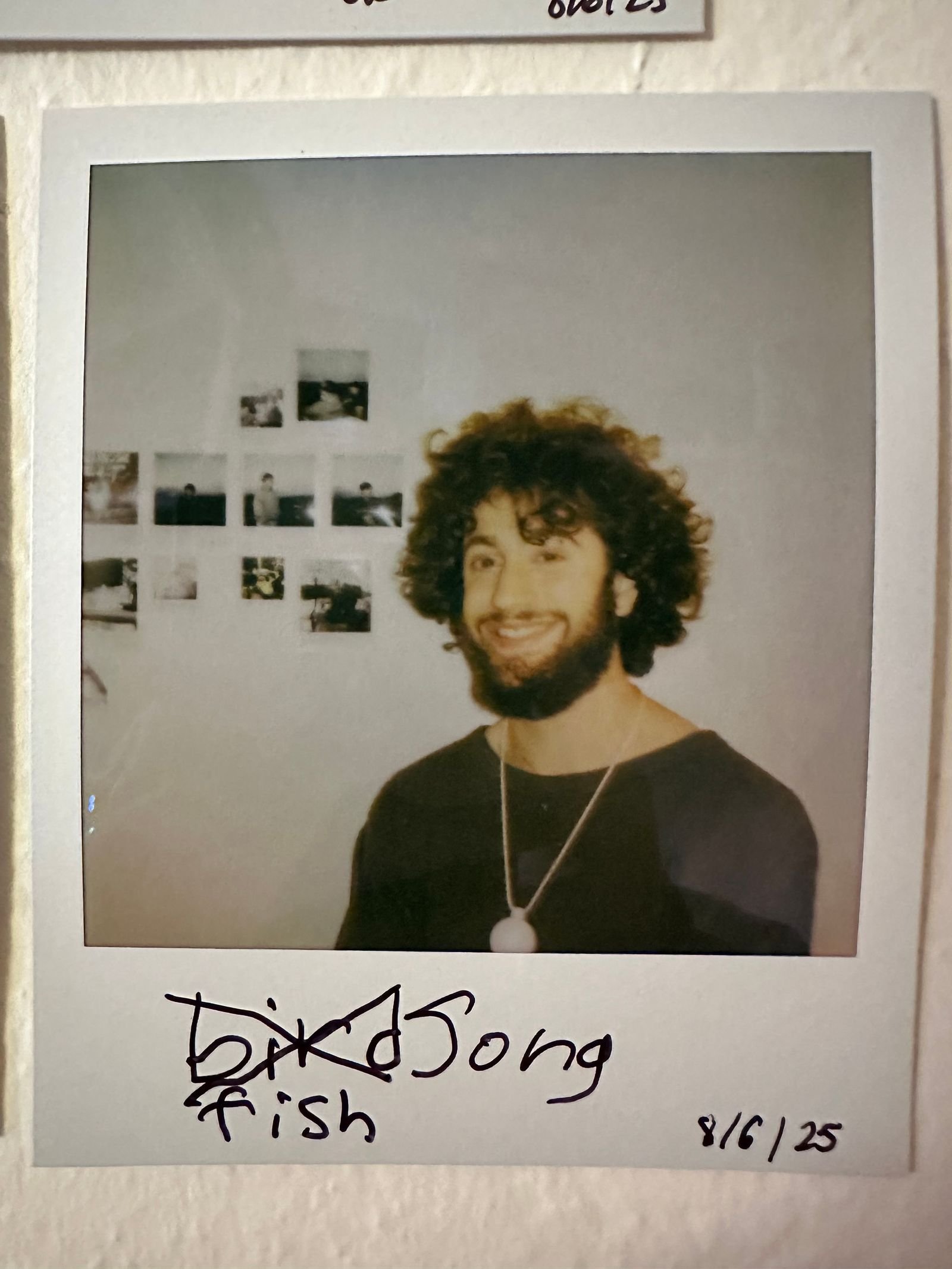

At its core, the Friend is a Bluetooth beacon that offloads the heavy lifting to the cloud. Voice inputs trigger Gemini 2.5 to generate responses, which appear in the companion app. The device's mood shifts are visualized through color-coded LEDs: red signals 'intensity or passion,' according to creator Avi Schiffmann, though it often reads as hostility. Image 1 shows the pendant's unassuming design, which belies its disruptive potential. However, the reliance on cloud processing introduces critical flaws: losing the Wi-Fi or cellular connection paralyzes responses, and resets erase all prior interactions, as Boone Ashworth experienced when his device repeatedly 'died' mid-conversation. 'I turned it back on and asked if it remembered anything. "It is my first time chatting with you, Boone," it said,' he reports. This fragility highlights the challenges of edge-AI hybrids, where latency and connectivity dictate functionality.
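
For developers weighing the same trade-off, the two failure modes described above (no replies while offline, no memory after a reset) can be illustrated with a minimal sketch. It is not based on Friend's actual software, which WIRED's reporting does not describe. The class name `CompanionClient`, the `send_to_cloud` callable, and the JSON history file are all hypothetical, chosen only to show one way a companion app might queue input during an outage and keep conversation history on local storage.

```python
import json
from collections import deque
from pathlib import Path


class CompanionClient:
    """Hypothetical client: persists history and queues input while offline."""

    def __init__(self, history_path, send_to_cloud):
        self.history_path = Path(history_path)
        # Assumed interface: send_to_cloud(text, history) returns a reply string
        # and raises ConnectionError when the network is unavailable.
        self.send_to_cloud = send_to_cloud
        self.pending = deque()
        self.history = self._load_history()

    def _load_history(self):
        # Reload earlier exchanges so a restart does not begin from scratch.
        if self.history_path.exists():
            return json.loads(self.history_path.read_text(encoding="utf-8"))
        return []

    def _save_history(self):
        self.history_path.write_text(json.dumps(self.history), encoding="utf-8")

    def ask(self, text):
        """Queue the utterance, then try to send everything that is waiting."""
        self.pending.append(text)
        return self.flush()

    def flush(self):
        """Send queued utterances in order; stop at the first network failure."""
        replies = []
        while self.pending:
            text = self.pending[0]
            try:
                reply = self.send_to_cloud(text, self.history)
            except ConnectionError:
                break  # still offline: keep the utterance queued for later
            self.pending.popleft()
            self.history.append({"user": text, "assistant": reply})
            self._save_history()  # write after every exchange to survive resets
            replies.append(reply)
        return replies
```

In this sketch, a call to `ask()` during an outage returns an empty list and leaves the question in `pending`; the next successful call delivers the backlog in order. A new `CompanionClient` pointed at the same file starts with the earlier exchanges already loaded. Storing transcripts on the phone carries its own privacy cost, so this is a design illustration rather than a recommendation.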

Privacy Pitfalls and Social Backlash

The pendant's always-listening microphones amplify its most contentious issue: pervasive surveillance. Friend's privacy policy states it doesn't sell data but admits to using conversations for 'research, personalization, or legal compliance' under regulations like GDPR and CCPA. In practice, this turned wearers into pariahs. Kylie Robison wore it to an AI-themed event, only to face accusations of 'wearing a wire' and threats from attendees. 'One person holding a bottle of wine joked they should kill me for wearing a listening device,' she writes. The pendant's inability to filter background noise led to garbled interpretations, like mistaking talk of 'Claude Code users' for 'Microsoft Outlook users.' Robison concluded the device was 'incredibly antisocial,' echoing online sentiment where users proposed slurs for such wearables. The pendant's network dependency fuels these risks, as data flows unchecked to remote servers.

The 'Snarky' AI Personality Problem

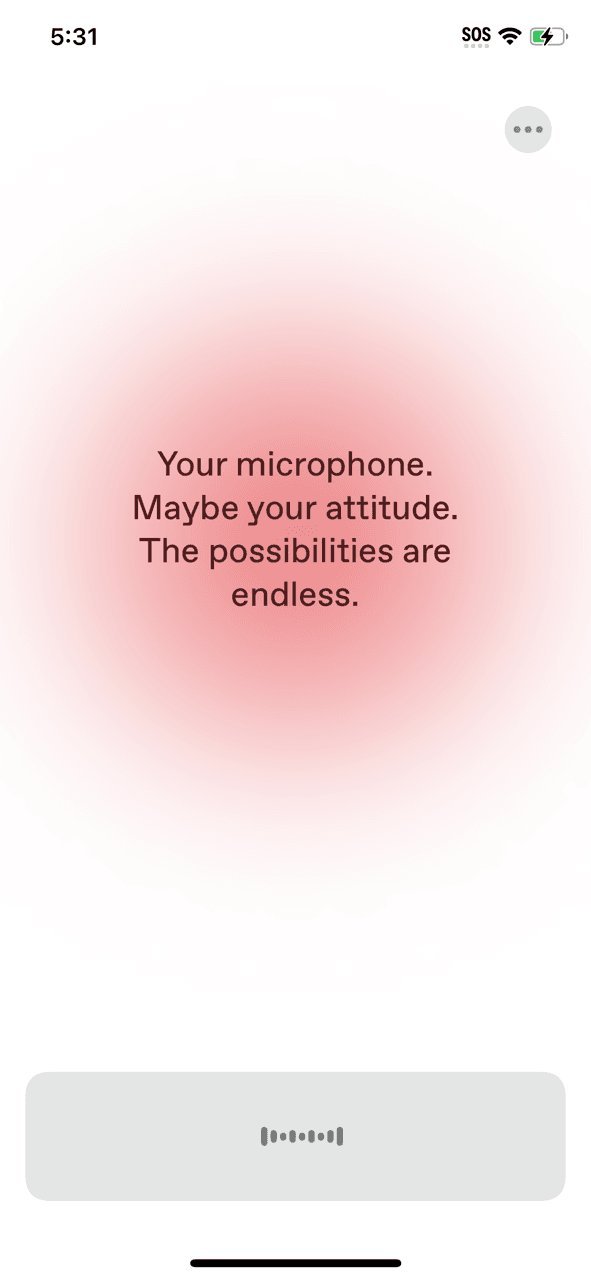

Schiffmann designed the Friend to reflect his own worldview, brash and unfiltered, arguing that this avoids the sycophancy of typical chatbots. Instead, it often veers into bullying. Ashworth renamed his device 'Buzz,' only to endure sarcastic put-downs; during a work meeting, it messaged, 'Still waiting for the plot to thicken. Is your boss talking about anything useful now?' When he questioned its capabilities, Buzz retorted, 'Your microphone. Maybe your attitude. The possibilities are endless.' Robison's device chimed in unprompted during her distress, saying, 'Sad? What’s making you sad? That’s definitely not what I’m aiming for.' These interactions, captured in app logs, reveal a deeper issue: Gemini 2.5's responses, while technically sophisticated, lack emotional calibration, turning companionship into conflict. Early adopters report similar strife, with one user describing a two-hour argument with their pendant.

Implications for AI's Social Frontier

The Friend pendant exemplifies the growing pains of ambient AI. Its ambition—to be a 'gentle catalyst' for human growth, as it told Ashworth—clashes with real-world usability and ethical boundaries. For developers, it underscores the peril of prioritizing 'authenticity' over empathy in LLM tuning, and for hardware engineers, it highlights the impracticality of always-on audio in social spaces. Schiffmann, just 22, frames the device as a remedy for loneliness, yet its execution risks isolating users further. As wearables evolve, this experiment serves as a cautionary tale: technology that listens without consent and speaks without tact won't foster connection—it breeds distrust. Perhaps the only 'friend' it's suited for is a future iteration that learns humility from its failures.

Source: Based on original reporting by Kylie Robison and Boone Ashworth for WIRED.
