Cloud-native databases are increasingly adopting disaggregated architectures to overcome limitations of traditional shared-nothing designs, enabling elastic scalability and cost efficiency. This shift allows compute and storage to scale independently, paving the way for innovations like pushdown processing and specialized hardware integration. As research advances, disaggregation promises to redefine database resilience, performance, and adaptability for modern applications.

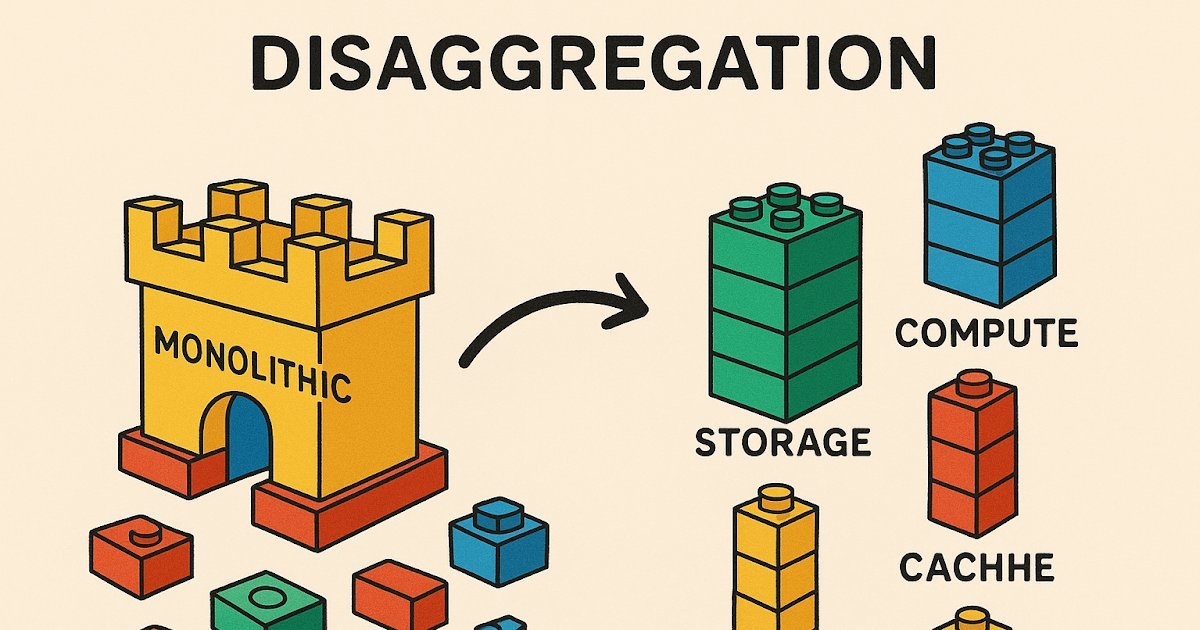

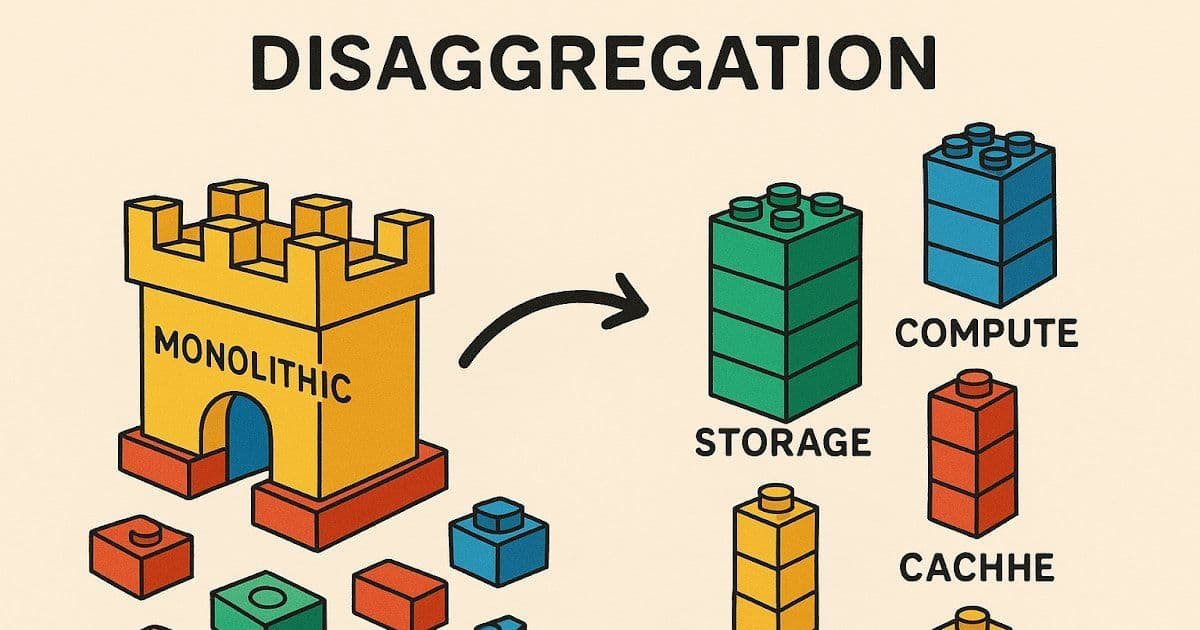

For decades, shared-nothing architectures dominated database design, but the cloud’s promise of elastic scalability, where resources flex on demand, exposed their shortcomings. Traditional systems struggle with the cloud’s inherent asymmetry: compute is expensive and volatile, while storage is cheap and stable. Enter disaggregation, an architectural paradigm rapidly gaining traction, as highlighted in a recent VLDB'25 survey paper and discussed in a blog post by Murat Demirbas. By decoupling compute and storage into independently scalable components, this approach isn’t just an incremental upgrade; it’s a fundamental rethinking of how databases harness the cloud’s power.

Why Disaggregation Matters Now

The cloud’s economics make disaggregation inevitable. Compute costs dwarf storage (often by orders of magnitude), and demand fluctuates wildly with workloads, while data grows steadily. Disaggregation addresses this by:

- Separating compute and storage: Compute nodes scale elastically, handling spikes without over-provisioning, while storage remains pooled and cost-optimized.

- Enabling granular services: Systems such as Snowflake and Amazon Aurora pioneered this approach, and later designs such as Microsoft’s Socrates decompose storage further into specialized layers (e.g., logging, caching, durable storage), each tuned for performance and cost.

- Mirroring microservices evolution: Just as apps evolved from monoliths to orchestrated services, databases are fragmenting into modular components—compute, storage, metadata—potentially managed by Kubernetes-like middleware.
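To make the economics concrete, here is a back-of-the-envelope cost model in Python. It compares a shared-nothing cluster, which must be sized for peak load and keeps every node (with its local storage) running around the clock, against a disaggregated design where compute is billed per hour of actual demand and storage only for the data held. All prices, capacities, and the load trace are hypothetical values chosen for illustration, not measurements from any provider, and the function names are invented for this sketch.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    # Hypothetical unit prices for illustration only (not real cloud rates).
    compute_per_node_hour: float = 1.00
    storage_per_tb_hour: float = 0.01


def shared_nothing_cost(load_trace, data_tb, node_capacity, tb_per_node, p=Prices()):
    """Coupled nodes: size for peak load (and for the data), run all nodes every hour."""
    nodes = max(
        math.ceil(max(load_trace) / node_capacity),
        math.ceil(data_tb / tb_per_node),
    )
    node_hour = p.compute_per_node_hour + tb_per_node * p.storage_per_tb_hour
    return nodes * len(load_trace) * node_hour


def disaggregated_cost(load_trace, data_tb, node_capacity, p=Prices()):
    """Compute tracks the load hour by hour; storage is billed for the data only."""
    node_hours = sum(max(1, math.ceil(load / node_capacity)) for load in load_trace)
    storage = data_tb * len(load_trace) * p.storage_per_tb_hour
    return node_hours * p.compute_per_node_hour + storage


# A spiky day: 20 quiet hours, then a 4-hour burst at 10x the load.
trace = [100] * 20 + [1000] * 4
print(round(shared_nothing_cost(trace, data_tb=10, node_capacity=100, tb_per_node=2), 2))  # 244.8
print(round(disaggregated_cost(trace, data_tb=10, node_capacity=100), 2))                  # 62.4
```

In this toy model the gap comes entirely from the burst: the shared-nothing cluster pays for ten nodes all day, while the disaggregated one pays for ten only during the four peak hours. With a flat load trace the two costs roughly converge, so the benefit depends on how spiky the workload is.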

This architectural shift isn’t theoretical. Redshift Spectrum and S3 Select use pushdown computation to run parts of a query, such as filters, next to the data, slashing the volume shipped over the network. FlexPushdownDB combines caching with pushdown for 2.2× speedups, showing that disaggregation enables smarter, not just separated, resource use.
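The idea behind combining caching with pushdown can be sketched in a few lines of Python. The `StorageService` class below is a hypothetical in-memory stand-in for an object store that can evaluate simple predicates (roughly what S3 Select offers); it is not a real client API. The hybrid scan serves cached segments locally and pushes the predicate down for the rest, and the `rows_shipped` counter shows how much data crosses the simulated network. How FlexPushdownDB actually decides what to cache is not modeled here; the cache contents are simply given.

```python
from dataclasses import dataclass


@dataclass
class StorageService:
    """Hypothetical in-memory stand-in for a remote store with predicate support."""
    segments: dict
    rows_shipped: int = 0  # rows that crossed the (simulated) network

    def get(self, seg_id):
        # Plain GET: the whole segment is shipped to compute.
        rows = self.segments[seg_id]
        self.rows_shipped += len(rows)
        return list(rows)

    def select(self, seg_id, predicate):
        # Pushdown: the filter runs at storage; only matching rows are shipped.
        rows = [r for r in self.segments[seg_id] if predicate(r)]
        self.rows_shipped += len(rows)
        return rows


def fetch_all_scan(storage, seg_ids, predicate):
    """Baseline: pull every segment, then filter on the compute node."""
    return [r for seg in seg_ids for r in storage.get(seg) if predicate(r)]


def hybrid_scan(storage, cache, seg_ids, predicate):
    """Cached segments are filtered locally; uncached ones use pushdown."""
    out = []
    for seg in seg_ids:
        if seg in cache:
            out.extend(r for r in cache[seg] if predicate(r))  # no network traffic
        else:
            out.extend(storage.select(seg, predicate))
    return out


def make_segments():
    # Three segments of 1,000 rows each; 10% of rows have price < 10.
    return {
        f"s{s}": [{"id": s * 1000 + i, "price": i % 100} for i in range(1000)]
        for s in range(3)
    }


def is_cheap(row):
    return row["price"] < 10


segs = ["s0", "s1", "s2"]
baseline_store = StorageService(make_segments())
baseline = fetch_all_scan(baseline_store, segs, is_cheap)

hybrid_store = StorageService(make_segments())
cache = {"s0": make_segments()["s0"]}  # pretend s0 was cached by an earlier query
hybrid = hybrid_scan(hybrid_store, cache, segs, is_cheap)

print(len(baseline), baseline_store.rows_shipped)  # 300 3000
print(len(hybrid), hybrid_store.rows_shipped)      # 300 200
```

Both scans return the same 300 rows, but the hybrid one ships 200 rows instead of 3,000: nothing for the cached segment and only the matches for the others.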

Performance Trade-offs and Breakthroughs

The elephant in the room? Network latency. Early studies showed disaggregation could incur a 10× throughput penalty versus tuned shared-nothing systems. Yet optimizations are closing the gap. For instance:

- Rethinking protocols: Cornus 2PC exploits shared storage to avoid blocking in distributed transactions. Because a failed node’s log remains accessible in storage, active nodes can read it and force a decision via atomic writes.

- Pushdown acceleration: Systems like PushdownDB use S3 Select to execute filters, and parts of joins via Bloom filters, at the storage layer, cutting query times by 6.7× and costs by 30%.

- Hardware specialization: Disaggregation eases the adoption of GPUs, RDMA, or CXL memory. A GPU-accelerated DuckDB variant, for example, achieved large speedups by offloading query execution to GPUs.
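The core of the Cornus idea can be simulated in a few lines of Python. The sketch assumes the storage layer offers an atomic write-if-absent on each node’s log (modeled here by `log_once`, a hypothetical name backed by a lock), and it leaves out the coordinator’s messages, timeouts, and the recovery details of the real protocol. In this model a transaction commits only if every participant’s log holds a YES vote; any node that is unsure of the outcome can settle it by writing ABORT into the log of a participant that never voted, for instance one that crashed.

```python
import threading
from enum import Enum


class Rec(Enum):
    VOTE_YES = "VOTE-YES"
    ABORT = "ABORT"
    COMMIT = "COMMIT"  # used here only as the returned outcome


class SharedLogStore:
    """Simulated disaggregated storage holding every node's log records."""

    def __init__(self):
        self._logs = {}
        self._lock = threading.Lock()

    def log_once(self, node, txn, rec):
        """Atomically write rec for (node, txn) unless a record already exists.
        Returns whichever record ends up stored (ours or the earlier one)."""
        with self._lock:
            return self._logs.setdefault((node, txn), rec)


def vote(store, node, txn, can_commit):
    # A participant logs its own vote; if another node already aborted on
    # its behalf, the returned record tells it so.
    return store.log_once(node, txn, Rec.VOTE_YES if can_commit else Rec.ABORT)


def terminate(store, txn, participants):
    """Decide the outcome by inspecting (and, if needed, filling) every log."""
    for node in participants:
        # No-op for nodes that already voted; forces ABORT on silent ones.
        if store.log_once(node, txn, Rec.ABORT) is Rec.ABORT:
            return Rec.ABORT
    return Rec.COMMIT


store = SharedLogStore()
nodes = ["A", "B", "C"]

# t1: A and B vote YES, C crashes before voting.
vote(store, "A", "t1", True)
vote(store, "B", "t1", True)
print(terminate(store, "t1", nodes))  # Rec.ABORT
print(vote(store, "C", "t1", True))   # Rec.ABORT (C learns the outcome on recovery)

# t2: everyone votes YES.
for n in nodes:
    vote(store, n, "t2", True)
print(terminate(store, "t2", nodes))  # Rec.COMMIT
```

Because the atomic write-if-absent lets exactly one record win per log slot, a surviving node and a recovering node can never disagree about whether the crashed participant voted, which is what removes the need to wait for it.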

Enabling the Next Frontier

Disaggregation isn’t just about efficiency—it unlocks transformative capabilities:

- Real-time analytics: Hermes (VLDB'25) intercepts transactional logs and analytical queries, merging live updates with stable data for hybrid transactional/analytical processing (HTAP) without engine migrations.

- Resilience and scalability: Shared storage simplifies replication and failover, and because compute nodes are stateless, a failed node can be replaced quickly without rebuilding its data.

- Future-proofing: As hardware diversifies, components can adopt bespoke optimizations (e.g., NVMe for logging, cold storage for archives).
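Merging live updates with stable data can be illustrated with a generic merge-on-read sketch in Python: an analytical query reads a stable snapshot and overlays the transactional log records written after it, up to a chosen log sequence number (LSN). This is a textbook technique shown under simplifying assumptions, not Hermes’s actual design; how Hermes intercepts logs and queries is not modeled, and `Snapshot`, `LogRecord`, and `merged_view` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    lsn: int    # last log record already folded into the stable data
    rows: dict  # primary key -> row


@dataclass(frozen=True)
class LogRecord:
    lsn: int
    op: str                     # "upsert" or "delete"
    key: int
    row: Optional[dict] = None


def merged_view(stable, log, as_of_lsn):
    """Overlay log records with LSN in (stable.lsn, as_of_lsn] on the snapshot."""
    view = dict(stable.rows)  # copy; the snapshot itself is never modified
    for rec in sorted(log, key=lambda r: r.lsn):
        if rec.lsn <= stable.lsn or rec.lsn > as_of_lsn:
            continue
        if rec.op == "delete":
            view.pop(rec.key, None)
        else:
            view[rec.key] = rec.row
    return view


def revenue_by_region(view):
    # A toy analytical query over the merged rows.
    totals = {}
    for row in view.values():
        totals[row["region"]] = totals.get(row["region"], 0) + row["amount"]
    return totals


stable = Snapshot(lsn=100, rows={1: {"region": "eu", "amount": 10},
                                 2: {"region": "us", "amount": 20}})
log = [
    LogRecord(101, "upsert", 3, {"region": "eu", "amount": 5}),
    LogRecord(102, "upsert", 2, {"region": "us", "amount": 25}),
    LogRecord(103, "delete", 1),
]
print(revenue_by_region(merged_view(stable, log, as_of_lsn=102)))  # {'eu': 15, 'us': 25}
print(revenue_by_region(merged_view(stable, log, as_of_lsn=103)))  # {'us': 25, 'eu': 5}
```

Since the snapshot is never modified, different queries can read at different LSNs at the same time; in a design like this, a background process would periodically fold the log into a new snapshot and advance its `lsn`.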

The Road Ahead for Researchers and Engineers

For academia, the paper flags urgent opportunities: transforming monolithic databases (e.g., Postgres, MySQL) into disaggregated systems and studying the engineering trade-offs and metastability risks involved. Distributed protocol designers must reimagine consensus, caching, and replication in this new context. Industry practitioners, meanwhile, should evaluate disaggregated solutions for cost predictability and scalability, especially as cloud bills soar.

As disaggregation matures, it could spawn a middleware ecosystem akin to service meshes, orchestrating a new era of database agility. The cloud’s true potential is finally within reach, not through brute force, but through elegant disintegration.
