Unikernels offer a radical departure from traditional operating systems by merging applications with minimal OS components into single lightweight executables. We explore their performance advantages, deployment on AWS with Nanos, and whether they're ready for mainstream adoption despite ecosystem limitations.

Imagine renting a private villa where every resource—pool, beach, staff—is exclusively yours. No shared spaces, no noisy neighbors. Now apply that concept to application deployment: unikernels provide this isolated, resource-dedicated environment for software. Unlike bloated traditional OSes that juggle multiple processes, unikernels strip away unnecessary layers, merging application and kernel into a single lightweight executable that boots in milliseconds and runs with minimal overhead.

Why Traditional OSes Fall Short

Traditional operating systems like Linux or Windows manage CPU, memory, and I/O for countless simultaneous processes—a design that introduces significant overhead. Background tasks, context switching, and unused drivers consume resources your application doesn't need. For cloud-native workloads, this inefficiency translates to:

- Higher memory footprints

- Slower boot times

- Larger attack surfaces

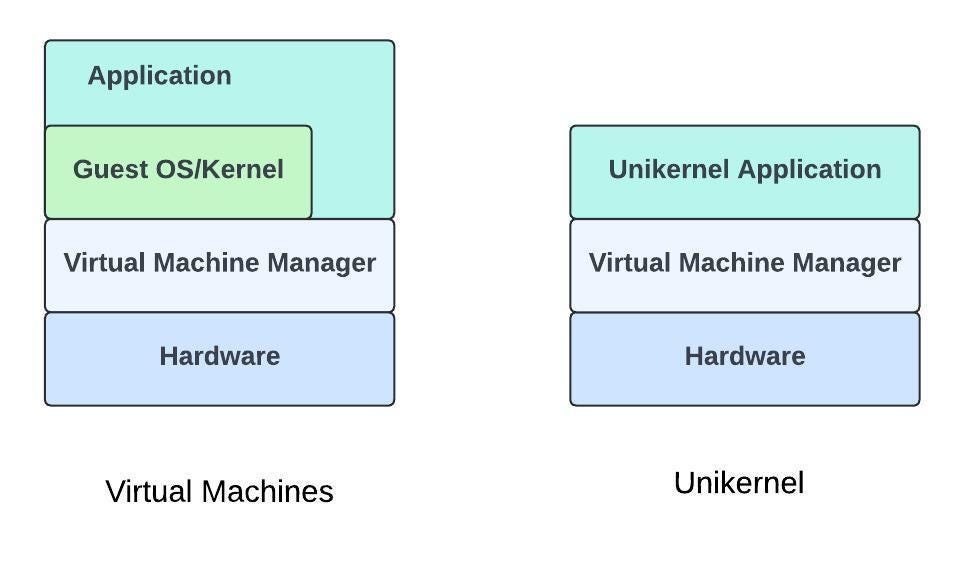

Unikernels solve this by running the application directly on a hypervisor (Xen, KVM, AWS Nitro) with no general-purpose OS in between. The result is a stripped-down, purpose-built VM containing only the libraries and drivers your app requires.

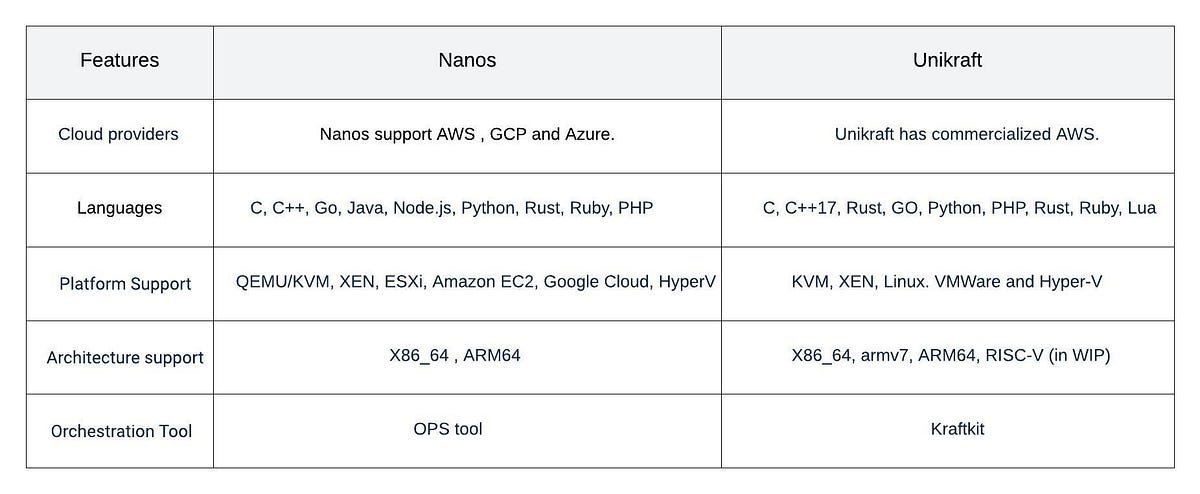

Nanos vs. Unikraft: The Contenders

While multiple unikernel frameworks exist, two stand out for production readiness:

| Feature | Nanos | Unikraft |
|---|---|---|
| Deployment | Cross-cloud (AWS, GCP, Azure) | Unikraft Cloud (bare-metal focus) |
| Tooling | OPS CLI | Custom toolchain |
| Network Stack | Lightweight IP (lwIP) | lwIP or custom |

Nanos' broader cloud support makes it the more accessible option for mainstream use, so it is the one we tested firsthand.

Building and Deploying on AWS: A Reality Check

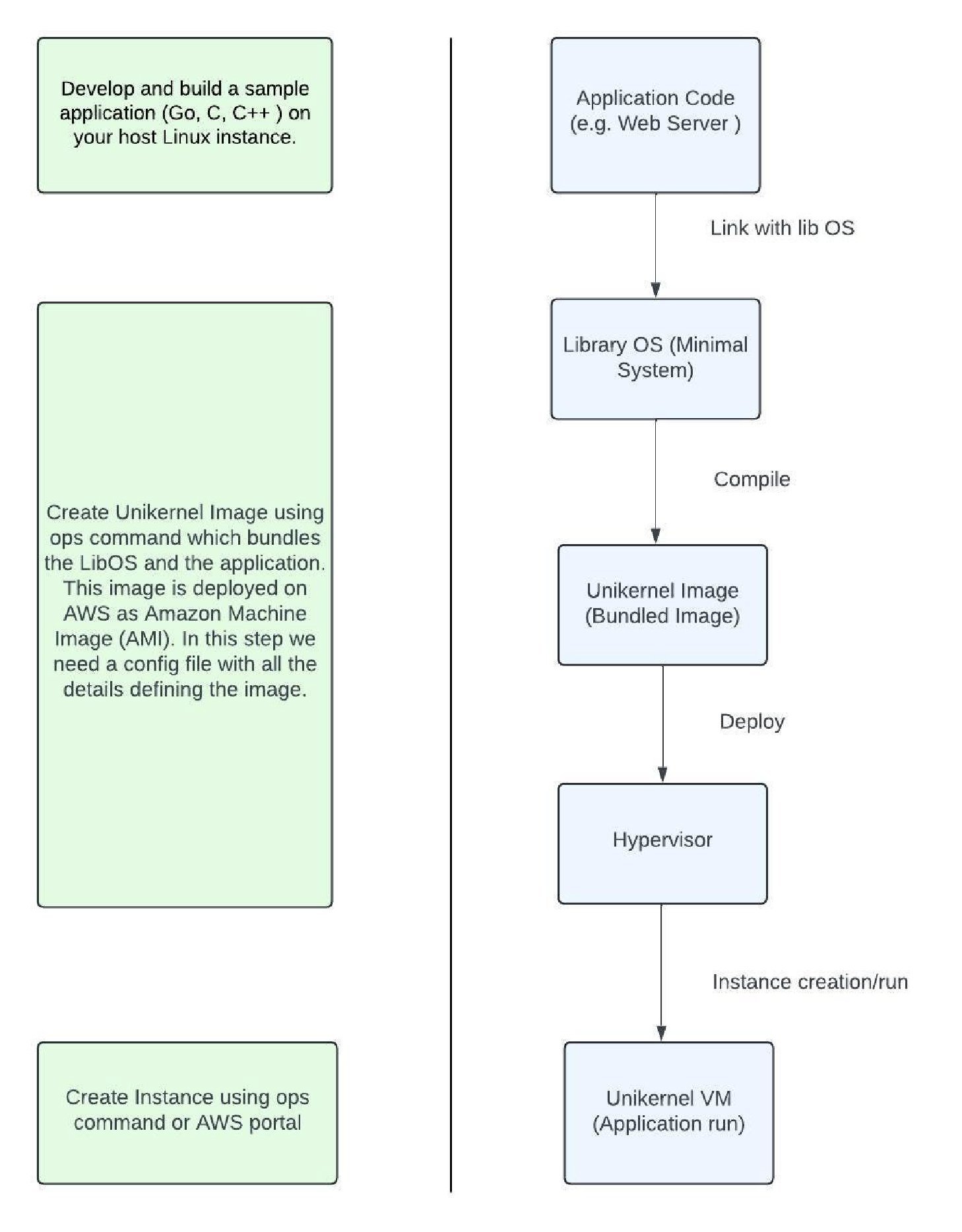

Deploying a Nanos unikernel involves:


- Compiling from source (Go toolchain, GCC, OPS CLI)

- AWS configuration (credentials, S3 bucket for images)

- Image creation via JSON manifests defining resources

- Instance deployment specifying ports, VPCs, and regions
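The JSON manifest from the third step can be generated rather than hand-written. The following is a minimal sketch, not the exact file we used: the field names (`CloudConfig`, `Platform`, `Zone`, `BucketName`, `Flavor`, `RunConfig`, `Ports`) are assumptions based on how OPS configs are commonly laid out, and the bucket, zone, and instance type are placeholders. Check the OPS documentation before relying on any of them.

```python
import json


def build_ops_config(bucket, zone, ports, flavor="t2.micro"):
    """Return a dict shaped like an OPS config.json.

    All key names here are assumptions; verify them against the OPS docs.
    """
    return {
        "CloudConfig": {
            "Platform": "aws",      # assumed key: target cloud
            "Zone": zone,           # assumed key: AWS availability zone
            "BucketName": bucket,   # assumed key: S3 bucket that holds images
            "Flavor": flavor,       # assumed key: EC2 instance type
        },
        "RunConfig": {
            # Ports are written as strings, one entry per exposed port.
            "Ports": [str(p) for p in ports],
        },
    }


def write_config(path, config):
    """Write the manifest to disk as indented JSON."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(config, fh, indent=2)
        fh.write("\n")


if __name__ == "__main__":
    # Placeholder values for illustration only.
    cfg = build_ops_config("my-unikernel-images", "us-west-2a", [8080])
    print(json.dumps(cfg, indent=2))
```

Generating the file keeps ports, zone, and bucket in one reviewable place, which helps when the same application is built for more than one region.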

While functional, the process reveals limitations. The two core commands are:

```bash
# Sample Nanos OPS commands
ops image create -c config.json -t aws -i my_app
ops instance create -i my_instance my_app -t aws
```

The toolchain feels nascent—no multi-instance deployment flags, inconsistent I/O performance, and sparse debugging utilities.
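One way to work around the missing multi-instance flag is a small wrapper that repeats the instance command. This is a sketch under assumptions: it reuses the argument order of the sample `ops instance create` command above, and the `prefix` naming scheme and the `dry_run` switch are hypothetical additions, not OPS features. By default it only prints the commands.

```python
import subprocess


def instance_commands(image, count, target="aws", prefix="my_instance"):
    """Build one `ops instance create` argv per instance.

    Mirrors the sample command; the numbered instance names are a
    hypothetical convention.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    return [
        ["ops", "instance", "create", "-i", f"{prefix}-{n}", image, "-t", target]
        for n in range(1, count + 1)
    ]


def deploy(commands, dry_run=True):
    """Print each command, or run it when dry_run is False."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            # check=True stops at the first failed deployment.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    deploy(instance_commands("my_app", 3))
```

Running it as a dry run first makes it easy to confirm the generated names before anything is created on AWS.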

Performance: Raw Numbers Don't Lie

We compared Nanos against Linux using CPU and I/O benchmarks:


- CPU-bound task (prime number calculation):
  - Nanos: ~4.2 seconds (consistent)
  - Linux: ~5.8 seconds (variable)
- 2MB file I/O operations:
  - Nanos: 0.15 ms avg/op (high variance)
  - Linux: 0.22 ms avg/op (stable)

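Results show promise but also inconsistency, especially under I/O load. We suspect lwIP stack limitations, though lwIP is a network stack and file I/O runs through the filesystem and block driver, so the storage path is an equally plausible culprit.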
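Because variance matters as much as the average here, a benchmark of this kind should report spread alongside the mean. The harness below is an illustrative sketch, not the code behind the numbers above: the sieve, the 2 MB write-then-read cycle, the run counts, and all function names are assumptions chosen to resemble the two workloads described.

```python
import os
import statistics
import tempfile
import time


def count_primes(limit):
    """Count primes below `limit` with a sieve of Eratosthenes."""
    if limit < 3:
        return 0
    sieve = bytearray([1]) * limit
    sieve[0] = sieve[1] = 0
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            # Zero out every multiple of i starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, limit, i)))
    return sum(sieve)


def file_io_once(path, payload):
    """Write the payload, force it to disk, then read it back."""
    with open(path, "wb") as fh:
        fh.write(payload)
        fh.flush()
        os.fsync(fh.fileno())
    with open(path, "rb") as fh:
        return len(fh.read())


def time_runs(fn, runs):
    """Return wall-clock durations in seconds for `runs` calls of fn."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples):
    """Report mean and spread so unstable results are visible."""
    return {
        "mean_s": statistics.mean(samples),
        "stdev_s": statistics.stdev(samples) if len(samples) > 1 else 0.0,
        "min_s": min(samples),
        "max_s": max(samples),
    }


if __name__ == "__main__":
    cpu = summarize(time_runs(lambda: count_primes(2_000_000), runs=5))
    payload = os.urandom(2 * 1024 * 1024)  # 2 MB, generated outside the timed region
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "bench.bin")
        io = summarize(time_runs(lambda: file_io_once(target, payload), runs=20))
    print("cpu:", cpu)
    print("io: ", io)
```

Running the same script on both platforms and comparing `stdev_s` alongside `mean_s` gives a direct view of the "consistent" versus "variable" behavior noted above.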

Use Cases vs. Challenges

Ideal fits:

- Lightweight microservices

- Ephemeral functions (e.g., serverless backends)

- High-security workloads (reduced attack surface)

Critical hurdles:

- Debugging nightmares: No gdb/strace equivalents

- Ecosystem gaps: Limited libraries and community support

- Network bottlenecks: lwIP struggles with high concurrency

- Operational rigidity: Manual scaling, minimal cloud integration

The Verdict

Unikernels aren't just theoretical—they slash resource use and boot times, proving invaluable for niche deployments. But tooling immaturity and debugging complexity hinder widespread adoption. As frameworks like Nanos evolve, they could reshape how we think about cloud efficiency. For now, they remain a compelling—if demanding—option for performance-critical, single-purpose workloads.
