New work from Tohoku University shows that cortical circuits don’t respond the same way throughout the day—even to identical inputs. For technologists building adaptive systems, neuromorphic chips, or closed-loop brain interfaces, this is more than biology trivia: it’s a design constraint, and an opportunity.

Our industry loves the metaphor of the brain as hardware: dense wiring, electrical spikes, layered abstractions. But that metaphor quietly misleads us. Real neural tissue doesn’t behave like a stable circuit under constant power—it behaves like a codebase under continuous deployment, modulated by an internal scheduler we rarely model.

A new study from Tohoku University makes that point with unusual clarity. By optogenetically probing visual cortex in rats across the 24-hour cycle, the researchers show that cortical excitability and plasticity oscillate in a structured, time-of-day–dependent way. For developers of AI systems, neuromorphic hardware, and brain-computer interfaces (BCIs), this isn’t just neuroscience color—it’s a live signal that our abstractions of “the brain as static compute” are leaving performance and reliability on the table.

Source: Tohoku University press release, “The Flexible Brain: How Circuit Excitability and Plasticity Shift Across the Day” (Nov 11, 2025), summarizing Donen, Ikoma, and Matsui, "Diurnal modulation of optogenetically evoked neural signals," Neuroscience Research, 2025. DOI: https://doi.org/10.1016/j.neures.2025.104981

What the researchers actually measured

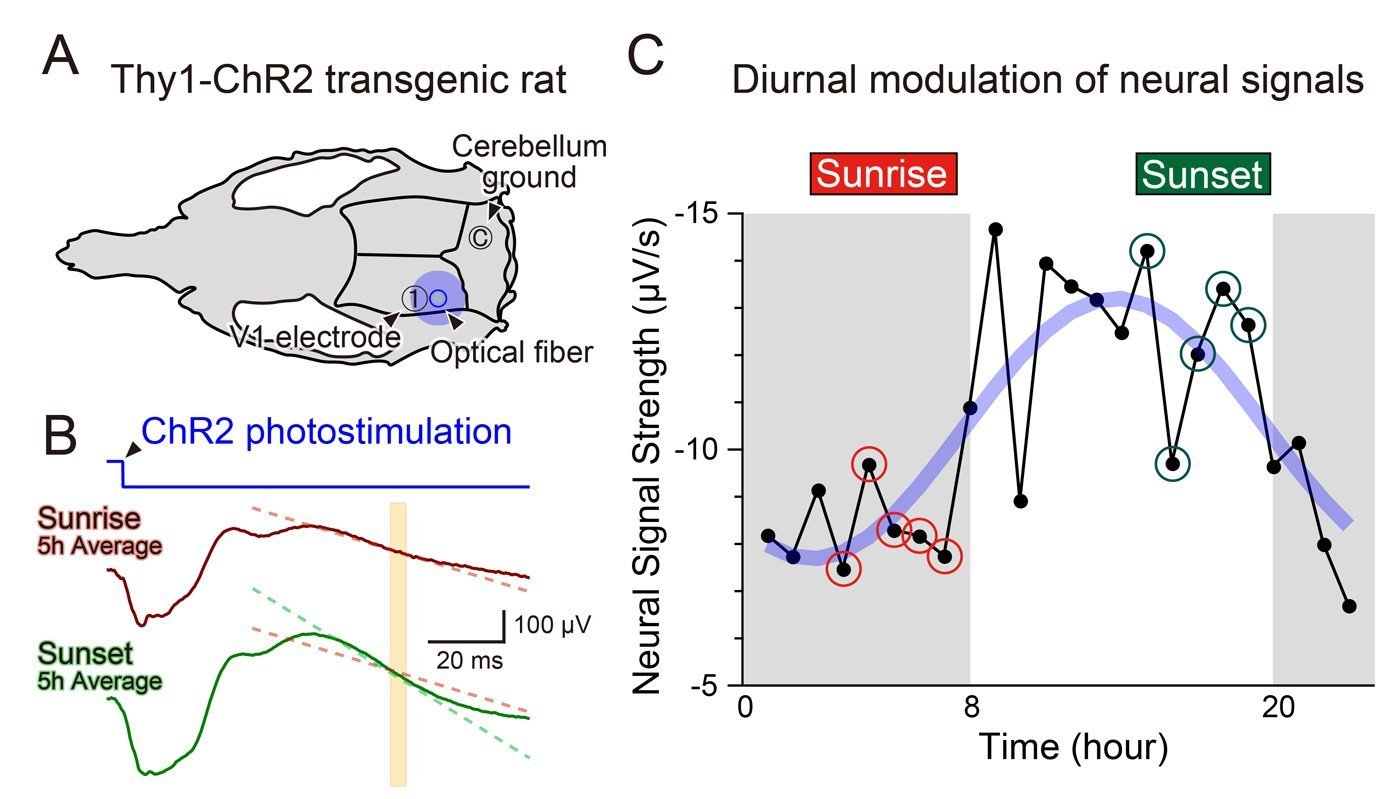

The team (Yuki Donen, Yoko Ikoma, and Ko Matsui) used a clean, engineer-friendly setup:

- Transgenic Thy1-ChR2 rats, whose cortical neurons could be driven with light.

- Precise optogenetic stimulation of visual cortex.

- Simultaneous local field potential (LFP) recordings to quantify population responses.

- Measurements repeated across multiple days under a regular light–dark cycle.

This is essentially black-box testing with high temporal control: apply identical inputs, at different times of day, and measure how the system’s response profile shifts.

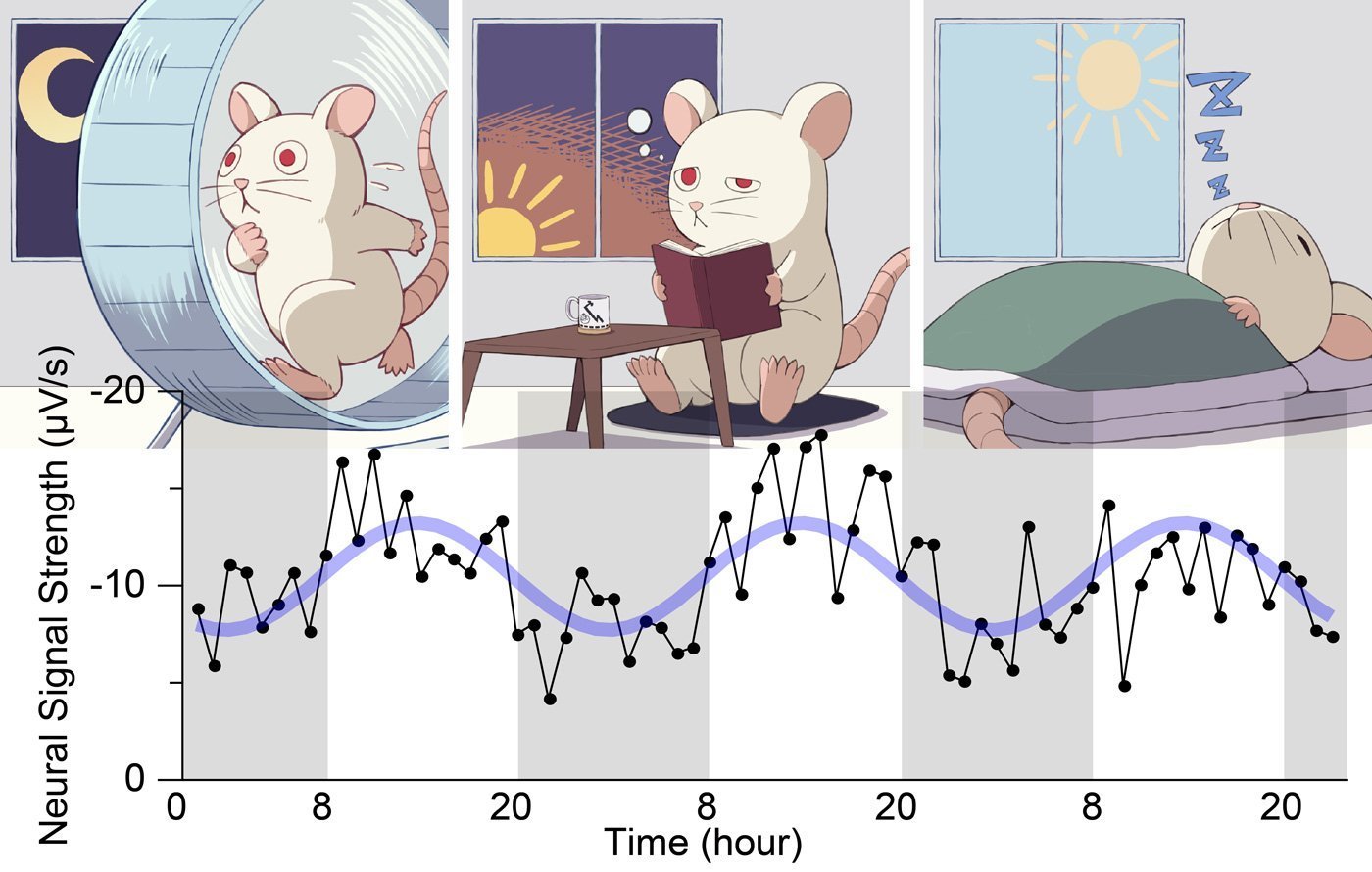

The results were striking:

- Identical optical stimuli produced weaker responses before sunrise and stronger responses before sunset.

- Averaged over three days, the neural response followed a roughly 24-hour sinusoidal pattern, phase-locked to the light–dark cycle.
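A "roughly 24-hour sinusoidal pattern" is commonly quantified with a cosinor fit. The response is modeled as r(t) = M + A·cos(2π(t − φ)/24), which expands into an ordinary linear regression on cosine and sine terms. The sketch below does that fit in plain Python. It rests on several assumptions: `fit_cosinor` is a hypothetical name, and time is in zeitgeber hours with ZT0 at lights-on in an assumed 12:12 cycle, so a peak near ZT11 sits just before "sunset". The synthetic numbers are illustrative and are not the study's data. The press release does not describe the authors' own analysis pipeline.

```python
import math


def fit_cosinor(times_h, values, period_h=24.0):
    """Fit r(t) = M + A*cos(2*pi*(t - phi)/period) by linear least squares.

    Uses A*cos(w*t - w*phi) = b*cos(w*t) + c*sin(w*t),
    with b = A*cos(w*phi) and c = A*sin(w*phi).
    Returns (mesor M, amplitude A, acrophase phi in hours).
    """
    w = 2 * math.pi / period_h
    # Design-matrix rows: [1, cos(wt), sin(wt)]
    rows = [(1.0, math.cos(w * t), math.sin(w * t)) for t in times_h]
    # Normal equations (X^T X) beta = X^T y, a 3x3 system
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, values)) for i in range(3)]
    m, b, c = _solve3(xtx, xty)
    amplitude = math.hypot(b, c)
    # atan2 recovers w*phi; convert to hours in [0, period)
    acrophase = (math.atan2(c, b) / w) % period_h
    return m, amplitude, acrophase


def _solve3(a, y):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    aug = [row[:] + [y[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


# Synthetic, noise-free example (NOT data from the study):
# mesor 1.0, amplitude 0.3, peak at ZT11, trough 12 h later at ZT23.
ts = [2.0 * i for i in range(36)]  # every 2 h for 3 days
ys = [1.0 + 0.3 * math.cos(2 * math.pi * (t - 11.0) / 24) for t in ts]
print(fit_cosinor(ts, ys))  # approximately (1.0, 0.3, 11.0)
```

With real recordings, noise is added to the picture and the fitted amplitude and acrophase come with uncertainty. The linear form still applies, and it is why cosinor fits are a common first pass for rhythm detection.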

In nocturnal rats, “before sunrise” falls at the end of the active phase, when fatigue and sleep pressure are high. That is roughly the rodent equivalent of a human developer’s 3 a.m. pre-crash haze. The cortex is less responsive at that point, but not randomly so: it is systematically modulated.

Adenosine: the built-in rate limiter

To go beyond hand-wavy explanations, the team probed the underlying mechanism.

Adenosine—a well-known neuromodulator that accumulates during wakefulness and contributes to sleep pressure—became the key variable. When they pharmacologically blocked adenosine signaling, something important happened:

- The dampened sunrise responses were “disinhibited” and enhanced.

- In other words, adenosine acted as a dynamic brake on cortical excitability, tuning how strongly the same input drove the network at different times.

Professor Ko Matsui summarized it succinctly: neural excitability is not constant; it depends on the brain's internal state. Molecules like adenosine link metabolism, sleep, and neuronal signaling into a time-varying systems profile.

For engineers, adenosine is effectively a biologically encoded traffic shaper—governing throughput and sensitivity based on accumulated load.
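The traffic-shaper analogy can be made concrete with a toy model. This is not the study's model. A leaky accumulator `level` builds up with activity and clears over time. The gain applied to an identical input is divided by (1 + k·level). Setting `blocked=True` mimics pharmacological blockade of adenosine signaling by removing the brake. All names and constants here (`AdenosineBrake`, `rise`, `decay`, `k`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AdenosineBrake:
    """Toy activity-dependent gain control (all parameters hypothetical)."""
    rise: float = 0.1      # accumulation per unit of activity
    decay: float = 0.05    # fraction of the load cleared per step
    k: float = 2.0         # brake strength
    level: float = 0.0     # accumulated "adenosine-like" load
    blocked: bool = False  # True mimics receptor blockade

    def step(self, activity: float) -> None:
        # Leaky integration: load builds with activity and clears over time.
        self.level += self.rise * activity - self.decay * self.level

    def gain(self) -> float:
        # The brake only acts while the signaling pathway is not blocked.
        return 1.0 if self.blocked else 1.0 / (1.0 + self.k * self.level)

    def respond(self, stimulus: float) -> float:
        return stimulus * self.gain()


brake = AdenosineBrake()
fresh = brake.respond(1.0)      # rested: full gain
for _ in range(100):            # a long "active phase"
    brake.step(activity=1.0)
tired = brake.respond(1.0)      # same input, damped response
brake.blocked = True
unblocked = brake.respond(1.0)  # blockade removes the brake
print(fresh, round(tired, 3), unblocked)
```

The design choice worth noting is that the brake depends on accumulated history, not on the current input. That is what makes identical stimuli produce different outputs at different times.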

A twist: when learning is easiest isn’t when you’d expect

Beyond immediate responses, the researchers examined long-term potentiation (LTP)—a core cellular mechanism for learning and memory—and, critically, its metaplasticity: how the ease of inducing plastic changes itself changes.

Using repetitive optical stimulation as an LTP-inducing protocol, they found:

- At sunrise (high fatigue, high sleep pressure), LTP-like enhancement was robust.

- At sunset (when evoked responses were stronger), the same protocol did not yield comparable LTP.

So the brain’s “instant response strength” and its “capacity to rewire” are not synchronized. In these nocturnal animals:

- Evening: higher evoked responsiveness.

- Morning (sunrise): higher plastic potential.

The authors extrapolate cautiously: in diurnal humans, analogous windows may shift such that learning and memory formation are optimized around pre-sleep evening hours. Whether or not that precise mapping holds, the core architectural insight stands—plasticity has temporal structure.

Why this matters to the builders of intelligent systems

This is where the story crosses from biology into the domains our readers own—AI/ML, neuromorphic design, human–machine interfaces, and cognitive tooling.

1. Neuromorphic hardware: bake in circadian dynamics

Most neuromorphic architectures model:

- Spiking dynamics

- Synaptic plasticity (e.g., STDP)

- Stochasticity at various levels

Very few model structured diurnal modulation as a first-class concern.

The Tohoku study is a reminder that:

- Excitability and plasticity are not only state-dependent but also coupled to a global temporal controller (circadian and sleep-pressure mechanisms).

- Learning rules in silicon that operate identically 24/7 are biologically unrealistic—and potentially suboptimal.

Design implications:

- Introduce a "circadian control signal" into neuromorphic cores to modulate:

  - Neuron thresholds (excitability)

  - Learning rates and consolidation gates (plasticity windows)

- Use adenosine-like variables as cumulative activity meters that throttle learning after prolonged high-activity phases, then reopen high-plasticity windows in rest-equivalent phases.

This would align online learning hardware more closely with the energy-efficient, stability-preserving strategies evolution actually shipped.
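Here is a minimal sketch of those design implications. It assumes a single global phase signal shared by all neurons in a core. The phase lowers firing thresholds around an excitability peak. In antiphase, it opens a plasticity gate, loosely mirroring the sunset/sunrise split reported in rats. An adenosine-like activity meter then throttles learning after sustained high activity, as proposed above. The function names, the 24-unit "day", and every constant are illustrative assumptions. None of this is taken from an existing neuromorphic platform.

```python
import math

PERIOD = 24.0  # hypothetical "day" length in controller time units


def circadian_signal(t: float, peak: float) -> float:
    """Cosine control signal in [0, 1] that peaks at time `peak`."""
    return 0.5 * (1.0 + math.cos(2 * math.pi * (t - peak) / PERIOD))


def modulated_params(t: float, activity_meter: float,
                     base_threshold: float = 1.0, base_lr: float = 0.01,
                     excitability_peak: float = 11.0) -> tuple[float, float]:
    """Return (firing threshold, learning rate) at controller time t.

    - Threshold is lowest (excitability highest) at `excitability_peak`.
    - The plasticity window peaks half a period later (antiphase).
    - `activity_meter` in [0, 1] is an adenosine-like load that
      scales learning down after sustained high activity.
    """
    excite = circadian_signal(t, excitability_peak)
    plastic = circadian_signal(t, excitability_peak + PERIOD / 2)
    threshold = base_threshold * (1.2 - 0.4 * excite)  # ranges 0.8x to 1.2x
    load = min(max(activity_meter, 0.0), 1.0)
    lr = base_lr * plastic * (1.0 - load)
    return threshold, lr


for t in (11.0, 23.0):
    thr, lr = modulated_params(t, activity_meter=0.2)
    print(t, round(thr, 3), lr)
```

In hardware, `circadian_signal` would be a slow global oscillator or a host-driven register rather than a per-neuron computation. The point is that a single shared variable can gate both excitability and learning.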

2. AI training curricula: time-aware plasticity schedules

Modern deep learning already uses time-structured tricks: cosine learning rate schedules, warmup phases, curriculum learning.

What this work suggests is a more biological framing that many teams are already circling:

- Separate phases for "high responsiveness" (inference-like, robust behavior) and "high malleability" (structural update, consolidation-like behavior).

- Maintain system stability by constraining large representational changes to designated "LTP windows"—for instance, in batched or offline periods—rather than allowing constant, uniform plasticity.

This resonates with:

- Offline RL and experience replay

- Periodic fine-tuning windows in production models

- Eventual consistency in distributed learning systems

The difference is conceptual discipline: instead of treating these purely as engineering hacks, we can tune them against well-characterized biological design patterns.
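One way to express that discipline in a training loop is sketched below under stated assumptions. A hypothetical `phase_gated_lr` schedule keeps the learning rate at zero during "serving" stretches of each cycle. Inside a designated consolidation window, it applies linear warmup followed by the familiar cosine decay. The cycle length and the fraction of each cycle left plastic are illustrative choices, not something the study prescribes.

```python
import math


def phase_gated_lr(step: int, cycle_len: int, window_len: int,
                   peak_lr: float, warmup: int = 0) -> float:
    """Learning rate that is nonzero only inside a consolidation window.

    Each cycle of `cycle_len` steps starts with a serving phase
    (lr = 0, weights effectively frozen), followed by a window of
    `window_len` steps with linear warmup, then cosine decay.
    """
    if not 0 < window_len <= cycle_len:
        raise ValueError("window_len must be in (0, cycle_len]")
    pos = step % cycle_len
    serve_len = cycle_len - window_len
    if pos < serve_len:
        return 0.0  # responsive phase: no structural updates
    k = pos - serve_len  # step index within the consolidation window
    if k < warmup:
        return peak_lr * (k + 1) / warmup
    decay_steps = window_len - warmup
    progress = (k - warmup) / max(decay_steps, 1)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * progress))


# 100-step cycles: 80 serving steps, then a 20-step plastic window.
print([round(phase_gated_lr(s, 100, 20, 1e-3, warmup=5), 6) for s in (0, 79, 80, 85, 99)])
```

In a real trainer, this value would be passed to the optimizer at every step. Keeping the gate outside the optimizer makes it easy to move the window, for example into low-traffic hours, without touching the update rule.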

3. BCIs and neural therapeutics: stop ignoring local time

If you build:

- Non-invasive stimulation systems

- Closed-loop neuromodulation for epilepsy, depression, or movement disorders

- Cognitive enhancement or rehabilitation platforms

then this paper is a quiet warning: the assumption that "a given stimulus has a predictable effect" is incomplete without time-of-day in the model.

Key operational consequences:

- Efficacy of stimulation likely varies across the 24-hour cycle, not only because of circadian arousal but also because of circuit-level shifts in excitability and plasticity.

- Safety margins and dose–response curves may be time-dependent.

- Optimal therapy windows might intentionally target high-plasticity phases for rehabilitation protocols (e.g., motor cortex retraining after stroke).
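For a closed-loop system, the smallest change that respects these consequences is to make the dose–response model a function of local time. The sketch below assumes, hypothetically, that responsiveness follows a per-patient cosinor curve (mesor, amplitude, acrophase). It scales stimulation amplitude to reach a target response while enforcing a fixed safety ceiling. `TimeAwareDoser` and all of its parameters are illustrative, not a validated dose model.

```python
import math
from dataclasses import dataclass


@dataclass
class TimeAwareDoser:
    """Toy time-of-day-dependent dose selection (hypothetical model)."""
    mesor: float          # mean responsiveness (response per unit amplitude)
    amplitude: float      # daily swing in responsiveness
    acrophase_h: float    # local hour of peak responsiveness
    max_amplitude: float  # hard safety ceiling on stimulus amplitude

    def responsiveness(self, hour: float) -> float:
        w = 2 * math.pi / 24.0
        return self.mesor + self.amplitude * math.cos(w * (hour - self.acrophase_h))

    def dose_for(self, target_response: float, hour: float) -> float:
        """Amplitude needed for `target_response` at `hour`, capped for safety."""
        gain = self.responsiveness(hour)
        if gain <= 0:
            return 0.0  # model predicts no useful effect, so do not stimulate
        return min(target_response / gain, self.max_amplitude)


doser = TimeAwareDoser(mesor=1.0, amplitude=0.25, acrophase_h=17.0, max_amplitude=1.5)
print(doser.dose_for(1.0, hour=17.0))  # peak responsiveness: smaller dose
print(doser.dose_for(1.0, hour=5.0))   # trough: larger dose
print(doser.dose_for(2.0, hour=5.0))   # would exceed the ceiling, so capped
```

The ceiling is applied after the time-dependent calculation, so a wrong circadian estimate can lower efficacy but cannot push the stimulus past the fixed limit.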

Regulatory-grade systems will, eventually, need to justify how they incorporate—or consciously bracket—these dynamics.

4. Human performance tooling: beyond productivity folklore

We’ve long had pop-science claims: "study in the morning," "creativity at night," etc. This study doesn’t settle those debates, especially given species differences. But it does push serious tooling in a clear direction:

- Learning platforms and LLM-powered tutors could adapt schedules based on individual chronotypes and physiological indicators (sleep data, light exposure) rather than fixed folklore.

- Cognitive load, difficulty, and spaced repetition intervals might be aligned with personalized "plasticity windows" inferred from behavior and wearables.

For builders of such systems, the Tohoku findings are a strong rationale for:

- Integrating circadian and sleep metrics as first-class features.

- Running A/B tests on time-of-day–adaptive learning strategies, grounded in mechanistic hypotheses rather than generic “engagement” curves.
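As a sketch of time-of-day–adaptive scheduling: take the due time produced by any spaced-repetition algorithm and shift it to the next opening of a per-user learning window. Here the window is assumed to be a fixed block before the user's habitual bedtime, echoing the authors' speculation about evening learning in humans. In practice, it would have to be inferred from wearables or behavior and validated with A/B tests. `snap_to_window`, `bedtime_h`, and `window_h` are hypothetical names.

```python
from datetime import datetime, timedelta


def snap_to_window(due: datetime, bedtime_h: float, window_h: float) -> datetime:
    """Return the earliest time >= `due` inside the daily window
    [bedtime - window_h, bedtime). Uses naive local datetimes and
    assumes 0 < bedtime_h <= 24 and 0 < window_h < 24."""
    midnight = due.replace(hour=0, minute=0, second=0, microsecond=0)
    # Checking today's and tomorrow's windows is enough: tomorrow's
    # window always ends after `due`.
    for day_offset in range(2):
        end = midnight + timedelta(days=day_offset, hours=bedtime_h)
        start = end - timedelta(hours=window_h)
        if due < end:
            return max(due, start)
    raise ValueError("no window found; check bedtime_h and window_h")


due = datetime(2025, 11, 12, 9, 30)  # the base algorithm says 09:30
print(snap_to_window(due, bedtime_h=23.0, window_h=3.0))  # 2025-11-12 20:00:00
```

Keeping the snapping step separate from the base spacing algorithm makes it easy to A/B test: the control arm simply skips `snap_to_window`.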

A more honest metaphor for the next generation of systems

The most important contribution of this research to the tech community may be conceptual. We keep designing systems—silicon or software—that assume:

- Uniform responsiveness

- Uniform learning capability

- Statelessness with respect to biological time

Meanwhile, the substrate we’re trying to emulate and interface with is:

- Phase-locked to environmental cycles

- Chemically rate-limited (adenosine and beyond)

- Strategically asymmetric: sometimes good at reacting, sometimes good at changing

The Tohoku team has effectively captured a high-resolution trace of that asymmetry in action.

If we take that seriously, the next wave of AI and neuromorphic design starts to look less like 24/7 always-on optimization and more like a living system with:

- Scheduled sensitivity

- Protected windows for structural change

- Built-in brakes that tie energy use to adaptability

We don’t need to romanticize biology to borrow its best tricks. We just have to stop pretending the brain is a stable circuit, and start designing our machines—and our interventions—around the fact that intelligence, natural or artificial, may work best when it respects its own clock.
