Amazon has unveiled prototype smart glasses designed specifically for delivery drivers, using computer vision to enable hands-free package scanning, navigation, and safety alerts. This enterprise-focused launch offers a revealing glimpse into Amazon's broader ambitions in wearable tech, hinting at an upcoming consumer product codenamed Jayhawk.

In a strategic move that blends augmented reality with real-world logistics, Amazon has moved into display-equipped smart glasses, but with a twist. Unlike consumer-focused rivals from Meta or Apple, Amazon's monochrome-display glasses are built exclusively for its delivery workforce. The aim? To streamline the chaotic last mile of package handling by keeping drivers' hands free. As an early example of computer vision worn directly by delivery workers, the initiative shows how wearables are evolving from gadgets into essential industrial tools.

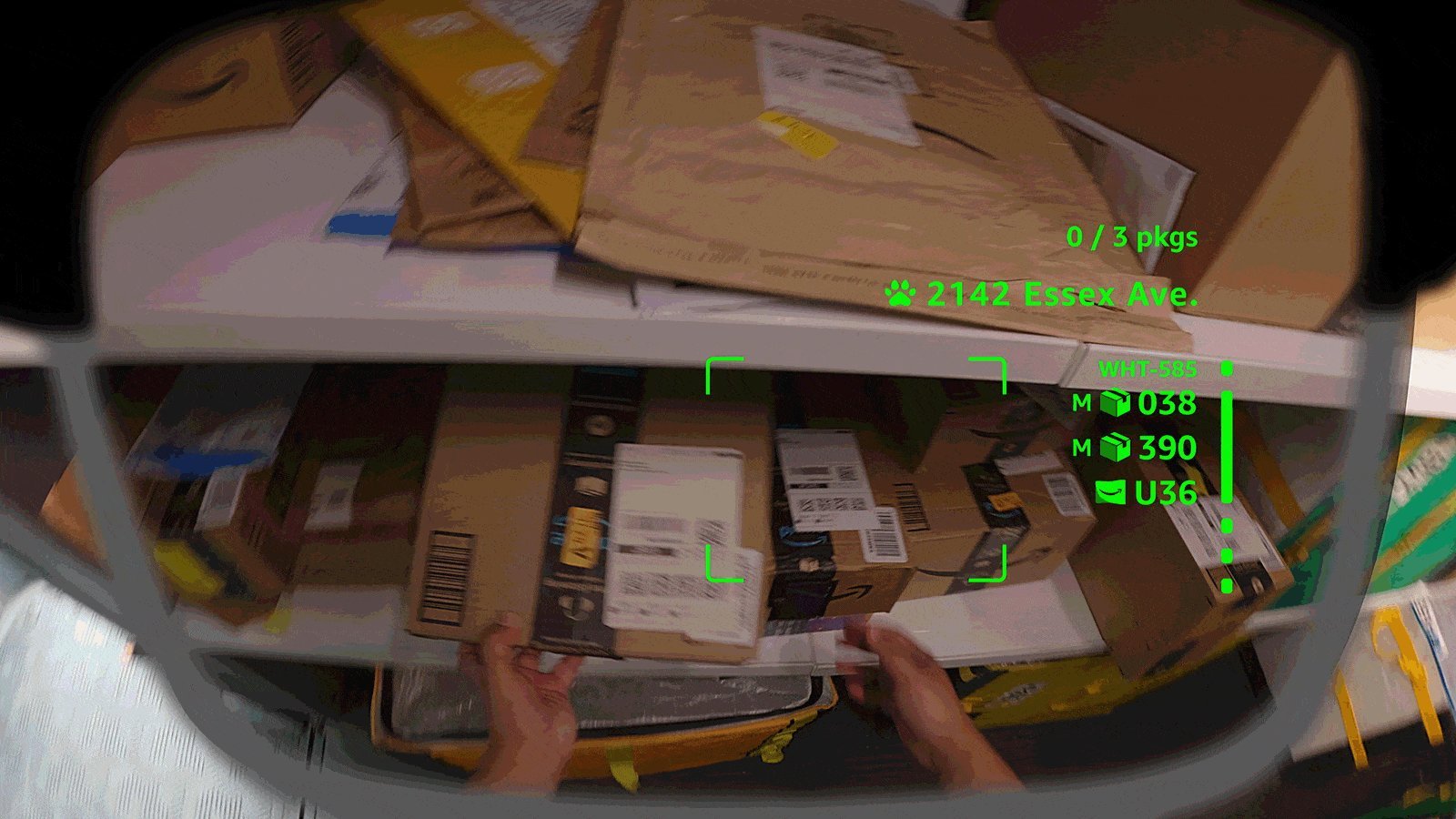

At its core, the glasses function as a digital co-pilot for drivers. Embedded displays overlay turn-by-turn walking directions, while cameras scan package barcodes and capture proof-of-delivery photos, all without requiring drivers to fumble with phones or handheld scanners. As Amazon puts it, this addresses a critical pain point: "doing all of that would be a lot easier if you could do it hands-free." The more notable capability, however, is computer vision. The glasses can identify packages in sorting facilities by reading the numbers on them, and they can alert wearers to environmental hazards such as low light or nearby pets. Amazon also suggests the lenses could adjust their brightness dynamically, though details remain sparse. Skeptics might ask whether anyone needs glasses to spot a barking dog, but the feature points to a broader push toward proactive, AI-driven workplace safety.

Yet significant questions remain, particularly around use while driving. Amazon explicitly says navigation is for walking only, but it has not described any safeguards against using the glasses while operating the van, echoing Meta's hands-off approach with its Ray-Ban smart glasses. That ambiguity raises concerns about distracted driving. More intriguing, though, is what the project reveals about Amazon's long-term strategy. Industry reports, including one from The Information, indicate these delivery glasses are a precursor to "Jayhawk," a consumer smart glasses project. Elements of the enterprise model, such as navigation, computer vision, and the center-aligned camera visible in the prototypes, could carry over readily to everyday use. As one source caption wryly notes:

"It’s all fun and games until you realize your employer is watching everything you do all day."

Privacy implications are unavoidable, especially given Amazon's history of data-driven operations. On the hardware side, Amazon did not detail any Alexa integration, but the glasses pair with a wearable controller for inputs and emergencies, reminiscent of Meta's approach. As described:

"The glasses feature a small controller worn in the delivery vest that contains operational controls, a swappable battery ensuring all-day use, and a dedicated emergency button."

This two-piece design suggests Amazon is betting on a future where wearables augment human capabilities beyond handheld screens, whether for logistics or lifestyle. With competitors like Meta accelerating their own smart glasses ecosystems, Amazon's cautious timeline (a consumer launch is rumored for 2025 or 2026) looks increasingly precarious. In the race to dominate mixed reality, enterprise applications like these delivery glasses aren't just a trial run; they're the lens through which Amazon is focusing its next big consumer play. If Amazon waits too long as the market heats up, today's innovative edge could become tomorrow's missed opportunity.

Source: Gizmodo