A Harvard study shows AI tutoring doubled learning gains compared to expert-led active learning in physics, with median improvements of 0.73–1.3 standard deviations. Yet uncontrolled AI use risks eroding critical thinking through cognitive offloading, forcing a reckoning with whether teaching is fundamentally algorithmic. As models advance exponentially, educators must decide if efficiency will redefine or diminish learning.

In a landmark 2023 randomized controlled trial at Harvard University, an AI tutor built on GPT-4 delivered median learning gains more than double those of highly rated human instructors using research-backed active learning methods. The study, involving 194 undergraduate physics students, revealed more than efficiency, although 70% of AI-tutored students completed the material in under 60 minutes, compared with a full classroom session for their peers. It also revealed startling effectiveness. This challenges a core assumption in education: that human teaching is an irreducibly complex art, immune to algorithmic replication. Just as physicist Roger Penrose asked whether consciousness can be reduced to computation, this evidence raises a parallel question: if learning obeys physical laws, can teaching be reduced to code?

The Data That Demands Attention

The Harvard experiment pitted the AI tutor against in-class active learning, a gold-standard pedagogy proven superior to traditional lectures. Students using the AI system showed gains of 0.73 to 1.3 standard deviations, among the highest ever recorded for educational AI. Crucially, the tutor was engineered to scaffold learning, not solve problems outright: it provided step-by-step guidance, withheld answers to prompt retrieval, and adapted pacing. As the authors noted, this wasn’t about replacing teachers but augmenting them—handling foundational concepts so classrooms could focus on advanced collaboration.
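To make the scaffolding idea concrete, here is a minimal Python sketch of a hint ladder that withholds the answer until the student has made several attempts. It is not the Harvard tutor's implementation. The class name `ScaffoldedProblem`, the hint texts, the feedback wording, and the exact-match answer check are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScaffoldedProblem:
    """A practice problem with graduated hints (hypothetical structure)."""

    prompt: str
    hints: list[str]  # ordered from least to most specific
    answer: str
    attempts: int = 0

    def respond(self, student_answer: str) -> str:
        """Check an attempt and return feedback without revealing the answer early."""
        self.attempts += 1
        if student_answer.strip().lower() == self.answer.lower():
            # Ask for an explanation so the student retrieves the reasoning too.
            return "Correct. Explain in one sentence why that works."
        if self.attempts <= len(self.hints):
            # Offer the next, slightly more specific hint and invite another try.
            return f"Not yet. Hint {self.attempts}: {self.hints[self.attempts - 1]}"
        # Only after every hint has been used does the tutor show the answer.
        return f"Worked answer: {self.answer}. Try a similar problem next."


if __name__ == "__main__":
    problem = ScaffoldedProblem(
        prompt="A 2 kg cart accelerates at 3 m/s^2. What net force acts on it?",
        hints=[
            "Which law relates force, mass, and acceleration?",
            "Use F = m * a.",
        ],
        answer="6 N",
    )
    for attempt in ["5 N", "12 N", "6 N"]:
        print(problem.respond(attempt))
```

The point of the sketch is the ordering: each wrong attempt earns a more specific hint rather than the solution, so the student keeps doing the retrieval work. A real tutor would need far more robust answer checking and pacing logic than this.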

"The AI tutor produced median learning gains more than double those of the classroom group... with what might be described as overwhelming statistical significance." — Carl Hendrick, summarizing the Harvard findings.

This isn’t an isolated case. Pre-GPT-4 systems like ASSISTments (effect sizes of 0.18–0.29 SD in math) and Carnegie Learning’s MATHia (0.21–0.38 SD) demonstrated scalable benefits, particularly for struggling students. Kurt VanLehn’s meta-analysis found that intelligent tutoring systems trailed human tutors by only 0.21 SD, a small gap. Tutor CoPilot, in which AI assists human tutors, also boosted mastery rates, especially among novices. The pattern is clear: when designed around pedagogical principles such as immediate feedback, spaced practice, and adaptive personalization, AI tutors excel.

The Double-Edged Algorithm

However, the same technology that amplifies learning can also corrode it. Unconstrained tools like ChatGPT, optimized for frictionless task completion, encourage cognitive offloading. A 2025 study by Michael Gerlich found frequent AI use correlated with declining critical thinking, as users mistook generated outputs for personal understanding. Similarly, University of Pennsylvania researchers observed high school math students with unrestricted AI access performing worse on assessments than unaided peers. The mechanism is simple: bypassing struggle prevents knowledge consolidation.

"LLMs are engineered for efficiency, not education. They minimize friction and deliver immediate answers—virtues in engineering, but vices in learning." — Laak and Aru, cognitive scientists.

The distinction lies in design. The Harvard tutor intentionally increased cognitive load at key moments—what learning science calls "desirable difficulties." Off-the-shelf AI, however, defaults to harmful helpfulness. This creates a crisis for educators: students already use tools that complete essays and solve problems, often without oversight. As Dan Dinsmore and Luke Fryer argue in their analysis, generic AI promotes fluency over understanding, ignoring stages of expertise development.

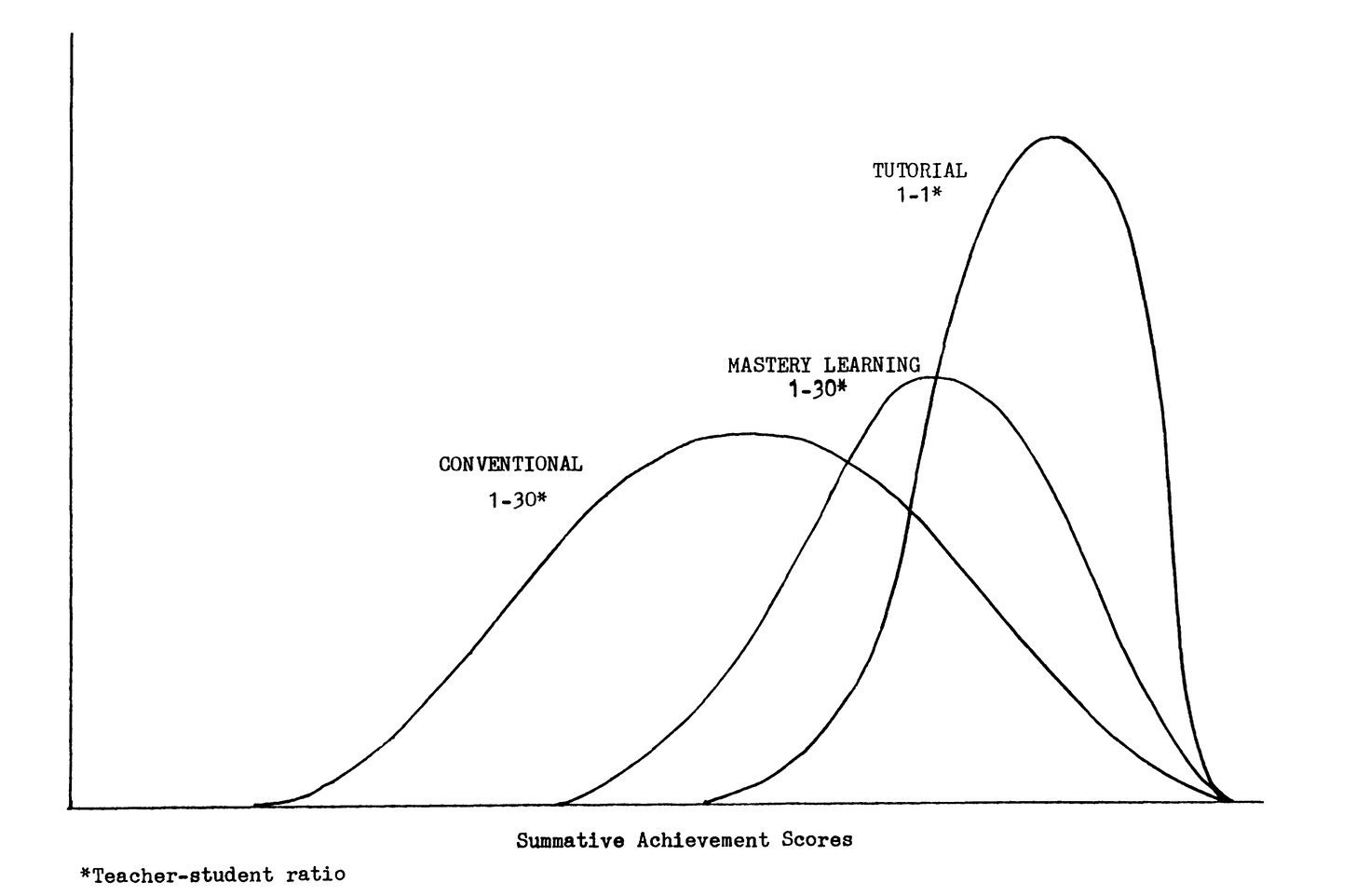

Bloom’s Ghost and the Scalability Paradox

Benjamin Bloom’s 1984 "2 Sigma Problem" looms large: one-on-one tutoring improved outcomes by two standard deviations over classrooms, but remained unscalable due to cost. AI now approaches this ideal—the Harvard study’s gains neared Bloom’s benchmark, while systems like ASSISTments achieved cost-effective 0.29 SD lifts at scale. Yet modern replications suggest Bloom’s original claim was inflated; rigorous studies show tutoring typically yields 0.30–0.40 SD effects, still meaningful but less revolutionary.
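Effect sizes in standard deviations are easier to compare once converted to percentiles. The short Python sketch below does that for the figures quoted above. It assumes test scores are normally distributed with equal spread in both groups, which is a simplification, and the function name is a hypothetical one chosen for this example.

```python
from statistics import NormalDist


def percentile_of_average_treated_student(effect_size_sd: float) -> float:
    """Percentile of the control group reached by the average treated student.

    Assumes normally distributed scores with equal spread in both groups.
    """
    return 100 * NormalDist().cdf(effect_size_sd)


# Effect sizes quoted in the article, in standard deviations.
quoted_effects = [
    ("Bloom's 2 sigma claim", 2.0),
    ("Harvard AI tutor, upper figure", 1.3),
    ("Harvard AI tutor, lower figure", 0.73),
    ("Typical tutoring, upper figure", 0.40),
    ("Typical tutoring, lower figure", 0.30),
    ("ASSISTments at scale", 0.29),
]

for label, d in quoted_effects:
    pct = percentile_of_average_treated_student(d)
    print(f"{label}: {d:.2f} SD -> about the {pct:.0f}th percentile")
```

Under these assumptions, a 2 SD effect puts the average tutored student at roughly the 98th percentile of a conventional class, while a 0.30–0.40 SD effect corresponds to roughly the 62nd to 66th percentile. That gap is why the revised estimates read as meaningful but less revolutionary.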

The tension is economic and existential. If AI tutors deliver superior results cheaply, policymakers may prioritize them over human labor. History warns that efficiency often displaces rather than augments, as when Jacquard looms replaced master weavers, and EdTech’s legacy of overhyped failures gives further reason for caution. As Hendrick notes, "What we’ve called 'good teaching' may be the skilled application of principles that can be formalized." But teaching’s human dimensions (motivation, emotional support, cultural context) resist codification.

The Path Forward: Design Over Default

AI’s exponential growth amplifies the stakes. Each tutoring interaction refines models, creating Kurzweil-esque feedback loops where improvements compound. We’re nearing the "knee in the curve," where AI could surpass human tutors in diagnostic precision and adaptability. However, without pedagogical guardrails, this risks prioritizing speed over depth. As Hendrick warns, "Efficiency is not understanding."

The solution lies in evidence-aligned design: AI must be strategically unhelpful, embedding cognitive science principles like retrieval practice and metacognitive prompts. This demands collaboration between educators and engineers—a fusion rarely seen in EdTech’s history of wasteful missteps. Ultimately, the question isn’t whether AI will transform education (it already has), but whether we’ll steer it toward human flourishing or let it erode the very cognition it seeks to enhance.

"We stand at an inflection point... The question is whether we have the courage to design AI around evidence and human flourishing, or whether we’ll simply let it happen to us." — Carl Hendrick.

Source content derived from Carl Hendrick's analysis at carlhendrick.substack.com. Studies cited include Harvard's GPT-4 trial (Scientific Reports, 2025), Gerlich's cognitive offloading research (Societies, 2025), and VanLehn's meta-analysis.
