OpenAI is set to launch its GPT-5.1 model family—including base, Reasoning, and a $200/month Pro version—within weeks, promising faster performance and better health safeguards. Simultaneously, the new GPT-5-Codex-Mini offers developers 50% higher rate limits and quadrupled usage capacity, signaling a push for cost-effective AI scaling. This update arrives amid intensifying competition from Google's Gemini 3 Pro and Anthropic's Claude, accelerating the generative AI arms race.

OpenAI is gearing up for the public release of its GPT-5.1 model series, comprising three variants: a base model, a specialized Reasoning edition, and a high-end GPT-5.1 Pro tier priced at $200 per month. According to sources cited by BleepingComputer, the models are expected to debut on Microsoft Azure imminently, in line with the three-to-four-month update cadence OpenAI has kept since GPT-5 launched in August. GPT-5.1 is not a revolutionary leap; it is expected to deliver incremental improvements, particularly in health-related guardrails for safer interactions and in raw speed, making it a strategic refinement for enterprise and developer workflows.

Codex Evolves with Efficiency Focus

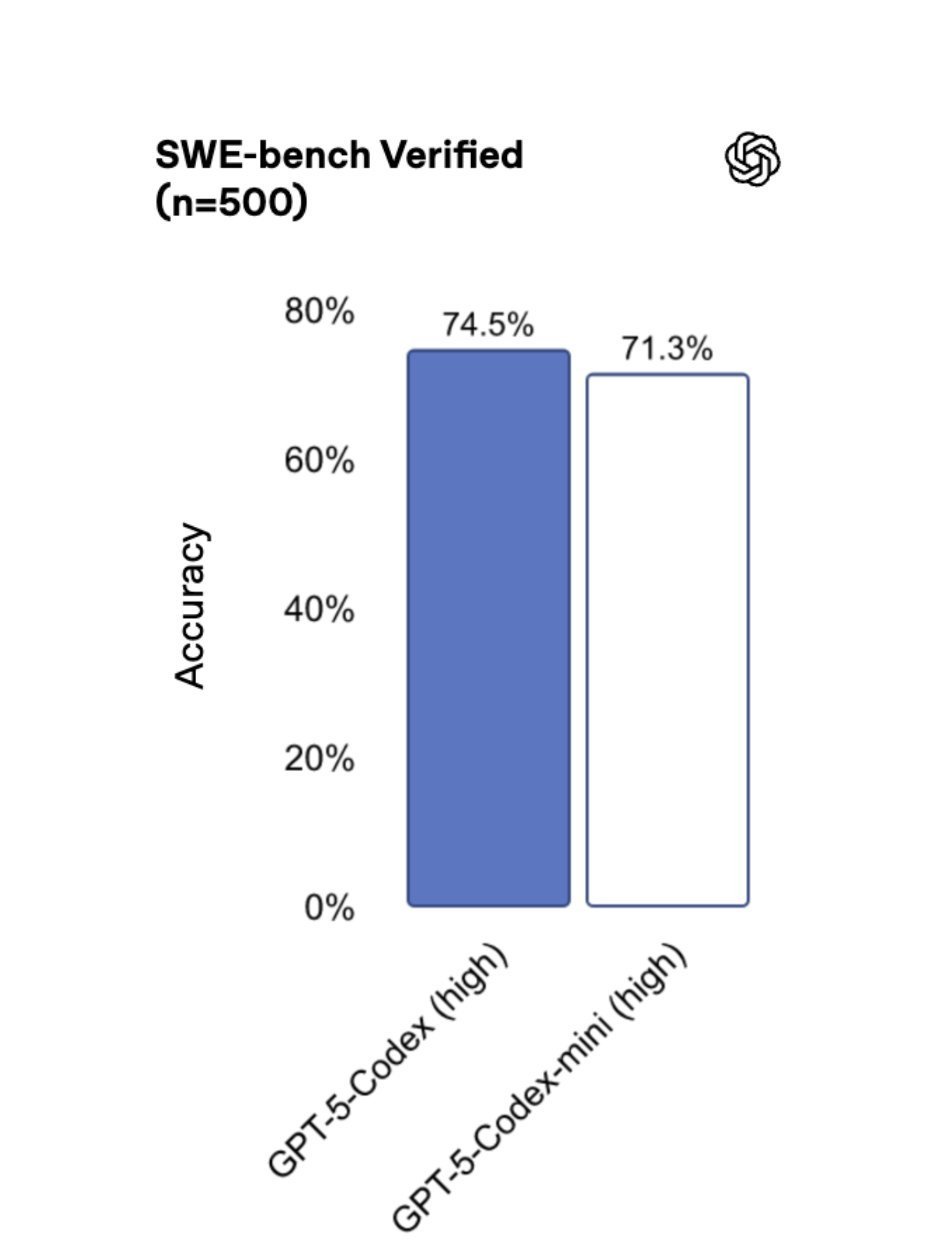

Simultaneously, OpenAI has rolled out GPT-5-Codex-Mini, a streamlined version of its code-generation model designed for cost-sensitive or high-volume tasks. Dubbed 'codex-mini-high,' this iteration provides 50% higher rate limits compared to its predecessor and allows roughly four times more usage per session at a slight capability trade-off. As OpenAI stated: "GPT-5-Codex-Mini enables extended productivity without interruptions, especially when users approach rate limits." Codex now proactively suggests switching to Codex-Mini once a user reaches 90% of their rate limit, giving ChatGPT Plus, Business, and Edu subscribers longer, uninterrupted coding sessions. Pro and Enterprise users retain priority processing for latency-sensitive applications.

Caption: Codex improvements highlight OpenAI's focus on scalable, efficient AI for developers.
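For developers who want to mirror the 90% switch suggestion in their own tooling, the rule can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI's implementation: only the 90% threshold comes from the reporting above, while `UsageWindow`, `suggest_model`, and the `"codex"` / `"codex-mini"` labels are hypothetical names chosen for the example and are not confirmed API identifiers.

```python
from dataclasses import dataclass

# The 90% figure comes from the article. Everything else here (the names,
# the units, and the idea of tracking "used" against a "limit") is a
# hypothetical stand-in for whatever accounting a real client keeps.
SWITCH_THRESHOLD = 0.90


@dataclass
class UsageWindow:
    used: float   # usage consumed in the current rate-limit window
    limit: float  # total allowance for that window

    @property
    def utilization(self) -> float:
        # Treat a missing or zero allowance as fully used.
        return self.used / self.limit if self.limit > 0 else 1.0


def suggest_model(window: UsageWindow, current: str = "codex") -> str:
    """Return the label of the model to suggest (labels are illustrative)."""
    if current == "codex" and window.utilization >= SWITCH_THRESHOLD:
        return "codex-mini"
    return current
```

Under these assumptions, `suggest_model(UsageWindow(used=95, limit=100))` suggests the smaller model, while a session at 50% utilization stays on the current one.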

Broader AI Landscape Heats Up

This release positions OpenAI to maintain momentum against rivals like Google, which is testing Gemini 3 Pro, and Anthropic, which is reportedly developing new Claude models. The rapid iteration from GPT-5 to GPT-5.1 in just months underscores the industry's breakneck pace, where marginal gains in reasoning, efficiency, and safety are becoming key battlegrounds. For developers, these updates translate to tangible advantages: reduced operational costs via Codex-Mini, more reliable tools through stronger safety guardrails, and new Pro-tier resources for demanding applications. Yet they also highlight the growing pressure to adapt as AI evolves from monolithic releases to a fluid ecosystem of specialized, interconnected models.

Source: BleepingComputer
