Jeff Bezos revolutionized retail with Amazon's infinite digital shelf space, but what happens when large language models make inventory infinite too? Scott Werner's Latent Library project turns AI hallucinations into a navigable universe of on-demand books, transforming generation into genuine discovery. This article looks at how such interfaces could change the way developers and creators work with generative models.

From Infinite Shelves to Latent Libraries: Redefining Discovery in the Age of AI-Generated Content


In 1994, Jeff Bezos glimpsed a future where the internet obliterated the constraints of physical retail. No longer bound by square footage, bookstores could offer infinite shelf space—a concept that birthed Amazon and the 'Long Tail' economy. But as Scott Werner argues in his recent WorksonMyMachine.ai post, true infinity demands not just endless space, but endless inventory. Enter large language models (LLMs), capable of generating any text imaginable, solving the supply side of the equation. Yet, Werner poses a deeper question: with infinite books at our fingertips, why are we still shouting prompts into a void?

The Warehouse Without Aisles

Bezos's vision worked because publishers and authors kept the supply chain humming. Translate that to AI: LLMs can now 'publish' on demand, sampling from a probability distribution over possible text sequences. As Werner vividly puts it, it's like having a warehouse robot that's patient but literal: feed it an ISBN (or prompt), and it delivers. But without aisles, categories, or recommendations, this infinite library feels more like static noise than a browsable collection.

Werner draws from personal experience, recalling an undergrad project where he attempted to generate every possible 100x100 black-and-white image. The combinatorial explosion (2^10,000 possibilities, far more than the roughly 10^80 atoms commonly estimated for the observable universe) yielded nothing but endless static. LLMs sidestep this brute-force nightmare by learning the structure of text, compressing infinity into something navigable. You won't get gibberish; you'll get plausible narratives, like a guide to competitive vegetable gardening from an alternate timeline.
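To get a feel for the scale of that image space, here is a small back-of-the-envelope check in Python. It assumes one bit per pixel and uses the commonly cited order-of-magnitude estimate of 10^80 atoms in the observable universe; that figure is an assumption added for illustration, not a number from Werner's post.

```python
import math

PIXELS = 100 * 100            # one bit per pixel in a black-and-white image
total_images = 2 ** PIXELS    # Python integers have arbitrary precision

# Commonly cited order-of-magnitude estimate (an assumption, not an exact count).
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80

digits = len(str(total_images))
print(f"2^{PIXELS} has {digits} decimal digits")          # 3011 digits
print(f"log10(2^{PIXELS}) = {PIXELS * math.log10(2):.1f}")  # 3010.3

# Even the atom estimate raised to the 37th power (10^2960) is smaller.
print(total_images > ATOMS_IN_OBSERVABLE_UNIVERSE ** 37)   # True
```

Enumerating that space one image at a time is hopeless at any hardware speed, which is why a learned model of structure matters more than raw capacity.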

"Infinite possibility and useful possibility are not the same thing." — Scott Werner

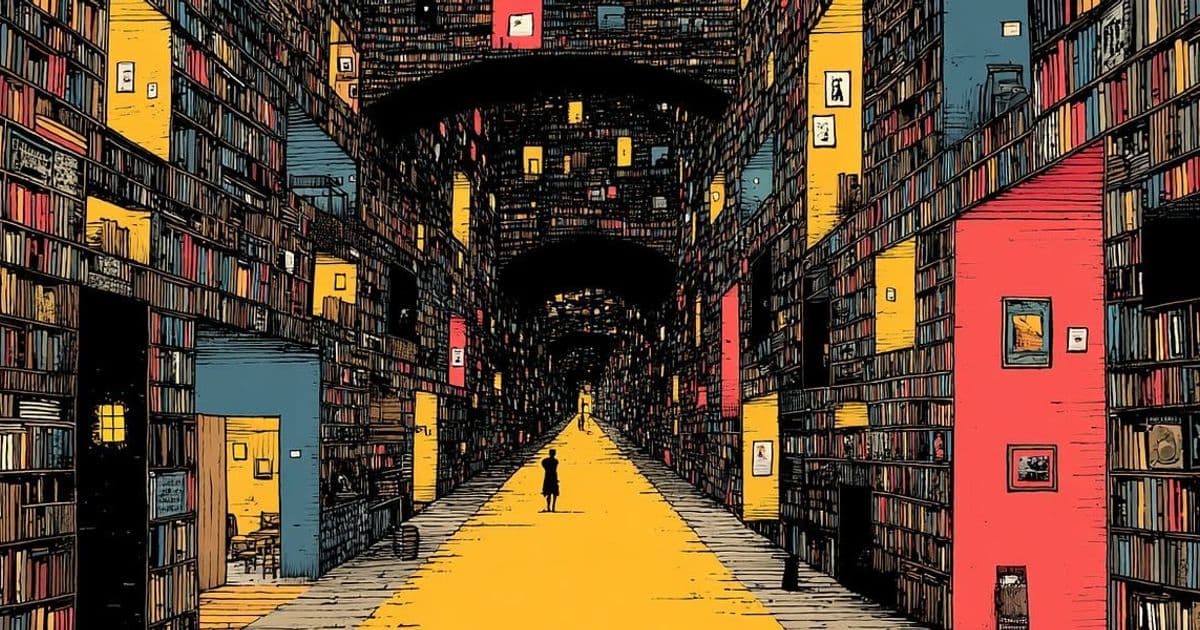

This insight echoes Jorge Luis Borges' Library of Babel, a universe-sized archive of every possible book, mostly nonsense. LLMs act as intuitive librarians, curating the readable from the chaos without exhaustive search.

Embracing Hallucinations as Inventory

Traditional AI interfaces treat 'hallucinations'—fabricated facts or content—as bugs to disclaim. Werner flips the script: in a world of generated fiction, what's a hallucination? If an LLM conjures The Recursive Raven, a tale of a bird in an infinite loop, is that error or artistry? His project, Latent Library, leans into this, making hallucinations the core feature.

In Latent Library, nothing pre-exists. Categories like 'Programming Fables' spawn titles such as The Fox Who Compiled Himself or grep Dreams of Electric Strings. Click one, and the book materializes—chapters, citations, even cross-references to other unrealized works. The library expands organically, like a city growing where paths are trodden. Users collaborate on content, turning passive generation into active exploration.
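One way to picture this "nothing pre-exists" behavior is a catalog that lists titles before their books exist and generates a book only when someone opens it. The sketch below is a hypothetical illustration, not Latent Library's actual implementation: `LazyLibrary`, `Book`, and `stub_generator` are invented names, and the stub stands in for what would presumably be a language model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Book:
    title: str
    text: str
    references: List[str] = field(default_factory=list)


# Hypothetical generator type: takes a title, returns a Book.
# A real system would call a language model here.
Generator = Callable[[str], Book]


def stub_generator(title: str) -> Book:
    """Deterministic placeholder standing in for an LLM call."""
    return Book(
        title=title,
        text=f"Chapter 1 of '{title}' (placeholder text).",
        references=[f"A Companion to {title}"],
    )


class LazyLibrary:
    """Catalog in which a title can be listed before its book exists."""

    def __init__(self, generate: Generator) -> None:
        self._generate = generate
        self._shelf: Dict[str, Optional[Book]] = {}

    def list_title(self, title: str) -> None:
        # Listing creates only a catalog entry, never content.
        self._shelf.setdefault(title, None)

    def open_book(self, title: str) -> Book:
        book = self._shelf.get(title)
        if book is None:
            # First visit: generate the book and remember it.
            book = self._generate(title)
            self._shelf[title] = book
            # Cited works become new, still-unwritten catalog entries.
            for ref in book.references:
                self.list_title(ref)
        return book

    def unrealized(self) -> List[str]:
        return [t for t, b in self._shelf.items() if b is None]


library = LazyLibrary(stub_generator)
library.list_title("The Fox Who Compiled Himself")
book = library.open_book("The Fox Who Compiled Himself")
print(book.references)
print(library.unrealized())
```

Opening the same title twice returns the cached book, so the catalog only grows along the paths readers actually follow.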

For developers and AI researchers, this isn't just whimsy. It prototypes new paradigms for interacting with generative models. Instead of rigid prompts, imagine browsing latent spaces in code generation tools or design software—discovering solutions through serendipity rather than specification.

The Interface Revolution

The magic lies in the interface. Ditch the linear prompt-response loop for a discovery-driven flow:

- Browse categories (e.g., 'Physics for Felines').

- Spot intriguing titles (e.g., The Cat’s Guide to Superposition).

- Dive in: chapters reference phantom books, pulling them into existence.

This mirrors real-world serendipity—stumbling upon a book that reshapes your thinking. As Werner notes, "Discovery is just generation with better PR." For tech leaders, it raises questions: How might such navigable infinities apply to codebases, datasets, or simulation environments? In an era where AI can simulate realities, interfaces that foster exploration could unlock creativity in ways direct commands cannot.

Even emergent quirks, like the prolific fictional author 'Elara Voss' (an LLM invention, as detailed in Max Read's newsletter), highlight the system's charm. It's not perfection; it's potential: a reminder that all books, in Borges' terms, exist in potentia, waiting for us to collapse the wave function.

Navigating Infinity's True Potential

Amazon solved shelf space; LLMs solved inventory. Latent Library tackles navigation, proving that infinite possibility thrives on smart interfaces. For developers weary of prompt engineering's trial-and-error, this hints at future tools: AI-assisted IDEs with 'latent browsing' for algorithms, or collaborative platforms where ideas materialize on demand.

Try it at Latent Library. Wander its aisles. You might not need it daily, but in revealing how we can browse the unbuilt, Werner's creation nudges us toward richer engagements with AI's boundless output. After all, the best innovations often start as a hunch in a garage—or a prompt in a text box—evolving into something far more discoverable.
