Google’s new lawsuit against the Chinese-run ‘Lighthouse’ platform marks one of the most aggressive legal strikes yet against phishing-as-a-service infrastructure powering global toll and delivery scams. Beyond brand protection, it signals a maturing strategy: treat criminal PhaaS ecosystems like transnational enterprises—and dismantle them accordingly.

On paper, it looks like another lawsuit. In practice, it’s a blueprint for how big tech intends to wage war on phishing-as-a-service.

Google has filed a sweeping legal action to dismantle “Lighthouse,” a China-based phishing-as-a-service (PhaaS) platform allegedly enabling global SMS toll and delivery scams. The operation, linked to threat actor "Wang Duo Yu" and associated crews, industrialized smishing campaigns that impersonated E‑ZPass, USPS, and other trusted brands—leveraging templates, automation, and cloud infrastructure in a way that looks uncomfortably close to mainstream SaaS.

For developers, security engineers, and tech leaders, this is more than a headline about scams. It’s a case study in how cybercrime has productized, how platform operators are professionalizing, and how defenders—corporate and governmental—are starting to respond with coordinated legal, technical, and policy offensives.

PhaaS grows up: Lighthouse as a criminal SaaS business

Lighthouse didn’t just run a few phishing pages. It sold:

- Ready-made phishing templates mimicking toll agencies, postal services, and major brands (including Google itself).

- Infrastructure and tooling to distribute SMS-based lures at scale.

- Customizable flows that harvested payment card data, credentials, and even two-factor codes.

- Support and updates via Telegram, with subscription tiers ranging from $88/week to $1,588/year.

This is the PhaaS model: vertically integrated cybercrime-as-a-service.

Instead of needing in-house expertise in:

- Web dev for spoofed portals

- SMS delivery pipelines

- Evasion of URL filtering and carrier-level defenses

…buyers simply pay Lighthouse to get a turnkey fraud stack.

Reporting from Cisco Talos and Netcraft ties Lighthouse to prior operations known as "Smishing Triad," which rebranded as Lighthouse in March 2025. The operation has been observed using thousands of typosquatted domains and reusable, polished templates, some shared with or resembling those in other Chinese PhaaS ecosystems like Darcula and Lucid. That overlap, including the shared ‘LOAFING OUT LOUD’ fake shop template, suggests either tight collaboration or shared tooling supply chains.

This is supply-chain thinking, applied to cybercrime.

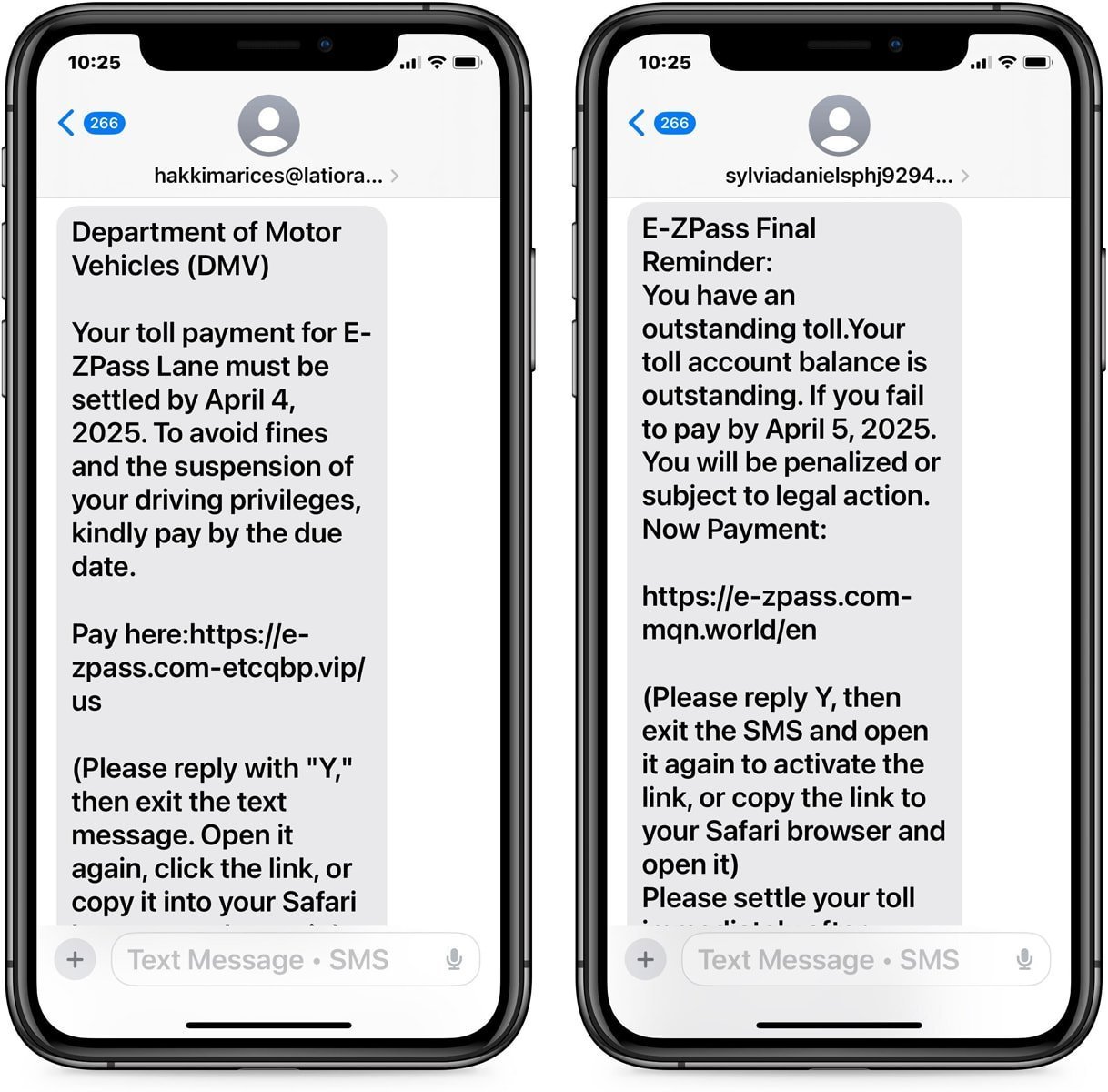

The scam mechanics: familiar lures, industrial execution

The Lighthouse-enabled campaigns are technically simple—but ruthlessly optimized.

- A victim receives a “toll due” or “delivery issue” SMS, often geo-tailored to U.S. states and local toll systems.

- The message includes a link to a typosquatted domain impersonating E‑ZPass or a postal service.

- The fake site requests payment details and personal information, sometimes layered with spoofed login pages.

- Stolen data is reused for broader financial fraud and account takeovers.
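
The lure pattern above is simple enough to sketch as a rule-based scorer. This is a minimal illustration that assumes a hypothetical keyword list, a hypothetical allowlist of trusted hosts, and one point per signal. It is not how Google Messages or any carrier filter actually works. Because it scores the message text rather than the transport, the same check applies whether the lure arrives by SMS, iMessage, or RCS.

```python
import re
from urllib.parse import urlparse

# Hypothetical signal lists, chosen for illustration only.
PRETEXT_TERMS = ("toll", "e-zpass", "ezpass", "package", "delivery", "usps")
URGENCY_TERMS = ("final notice", "within 24 hours", "avoid penalty", "suspended", "immediately")
# Hypothetical allowlist: hostnames the recipient's real providers actually use.
TRUSTED_HOSTS = {"usps.com", "www.usps.com"}

URL_RE = re.compile(r"https?://\S+", re.IGNORECASE)


def score_message(text: str) -> int:
    """Return a 0-3 risk score: one point each for a pretext, urgency, and an untrusted link."""
    lowered = text.lower()
    score = 0
    if any(term in lowered for term in PRETEXT_TERMS):
        score += 1
    if any(term in lowered for term in URGENCY_TERMS):
        score += 1
    for url in URL_RE.findall(text):
        host = (urlparse(url).hostname or "").lower()
        if host not in TRUSTED_HOSTS:
            score += 1
            break  # count the untrusted-link signal at most once
    return score


if __name__ == "__main__":
    lure = ("E-ZPass: You have an unpaid toll. Pay within 24 hours "
            "to avoid penalty: https://ezpass-toll-pay.example/pay")
    print(score_message(lure))  # 3
```

In practice a score like this would be one feature among many. Fixed keyword lists are exactly what rapidly refreshed templates are built to get around.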

{{IMAGE:4}}

What elevates Lighthouse from low-tier phishing are its delivery channels and defenses:

- Use of iMessage and RCS (Android) for message delivery, which can:

  - Circumvent traditional SMS-only filtering pipelines.

  - Exploit user trust in “richer” messaging ecosystems.

- Continuous domain churn and brand impersonation at scale, including at least 107 templates featuring Google-branded sign-in flows.

- Built-in support to capture two-factor authentication, directly targeting the industry’s standard defense mechanism.

From an engineering lens, Lighthouse behaves like any modern SaaS platform:

- Subscription model.

- Customer success/support (via Telegram).

- Feature updates.

- Built-in integrations (e.g., messaging channels).

The difference is the product is fraud.

Why Google’s lawsuit is structurally different

Google’s suit doesn’t treat Lighthouse as a loose collection of bad domains. It treats it like an enterprise-scale criminal platform—and that framing matters.

Filed under:

- Racketeer Influenced and Corrupt Organizations Act (RICO)

- Lanham Act (trademark infringement and brand abuse)

- Computer Fraud and Abuse Act (CFAA)

…Google is effectively arguing that Lighthouse is:

- A coordinated criminal organization.

- Commercially exploiting U.S. brands and infrastructure.

- Facilitating unauthorized access and fraud at scale.

For the security community, this strategy has notable implications:

Infrastructure mapping becomes evidence-grade.

- Enumerating domains, templates, and hosting relationships is no longer just telemetry—it’s part of a racketeering narrative.

Brand impersonation is elevated from nuisance to strategic lever.

- The misuse of Google’s branding, along with others, becomes legal ammunition for takedowns.

PhaaS operators face enterprise-style legal exposure.

- Instead of chasing individual phishers, this targets the platform builders, much like actions against botnet operators or exploit kit authors.
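
Infrastructure mapping can be illustrated with a small clustering sketch. Any domains that share an observed attribute, such as a hosting IP, name server, or template hash, are merged into one candidate campaign using union-find. The attribute labels and sample records are hypothetical. Shared hosting can also link unrelated sites, so evidence-grade attribution needs far more corroboration than one shared IP.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set forest with path compression."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        if self.parent[x] != x:
            self.parent[x] = self.find(self.parent[x])  # path compression
        return self.parent[x]

    def union(self, a: str, b: str) -> None:
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def cluster_domains(records: dict[str, set[str]]) -> list[set[str]]:
    """Merge domains that share any attribute, directly or through a chain of shared attributes."""
    uf = UnionFind()
    first_seen: dict[str, str] = {}  # attribute -> first domain observed with it
    for domain, attrs in records.items():
        uf.find(domain)  # register domains with no shared attributes as singletons
        for attr in attrs:
            if attr in first_seen:
                uf.union(first_seen[attr], domain)
            else:
                first_seen[attr] = domain
    groups: dict[str, set[str]] = defaultdict(set)
    for domain in records:
        groups[uf.find(domain)].add(domain)
    return list(groups.values())


# Hypothetical observations: reserved .example names, documentation-range IPs, made-up hashes.
SAMPLE_RECORDS = {
    "toll-pay-a.example": {"ip:203.0.113.10", "tpl:3f9a"},
    "redeliver-b.example": {"ip:203.0.113.10"},
    "toll-notice-c.example": {"tpl:3f9a", "ns:ns1.example.net"},
    "unrelated.example": {"ip:198.51.100.7"},
}

if __name__ == "__main__":
    for cluster in cluster_domains(SAMPLE_RECORDS):
        print(sorted(cluster))
```

Transitivity is the point here. The second and third domains share nothing directly, but both connect to the first, so they land in the same cluster.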

We’re watching legal tooling catch up to technical reality.

A pattern emerges: ecosystem-level disruption as a strategy

Google’s Lighthouse action fits a broader pattern across the industry:

- Microsoft and partners disrupting large-scale phishing services targeting Microsoft 365.

- Cloudflare and others collaborating on targeted infrastructure takedowns.

- Law enforcement and vendors jointly targeting botnets, loaders, and MaaS/PhaaS ecosystems.

We’re moving from:

- "Block that domain" to "Dismantle that platform."

For defenders, that shift is crucial. PhaaS has reduced the barrier to entry so far that:

- Sophisticated campaigns can be run by low-skilled actors.

- Detection signatures often lag behind the rapidly refreshed templates.

- Traditional, isolated takedowns feel performative unless the upstream kit/service is hit.

By treating Lighthouse as a platform to be removed, not just a noisy set of IPs, Google is signaling that purely reactive blocklists are no longer enough.

Policy alignment: why Google is tying this to U.S. legislation

Google paired the lawsuit announcement with support for several U.S. policy initiatives:

- GUARD Act: Focused on protecting aging retirees from fraud.

- Foreign Robocall Elimination Act: Targeting overseas-origin robocalls.

- SCAM Act: Building a national framework to counter scam compounds and sanction operators.

On the surface, this is public-interest signaling. Strategically, it’s more interesting:

- It acknowledges that major scam infrastructure is now foreign-hosted, multi-tenant, and industrial.

- It validates that vendors can’t solve this with filters alone; they need legal hooks to act across borders and in coordination with law enforcement.

- It suggests a merging of threat intel, compliance, and policy—the same triad many enterprises are now forced to navigate.

For CISOs and platform owners, the message is clear: legal frameworks are becoming part of your security architecture.

AI-powered defenses: useful, but not a silver bullet

Google also highlighted expanded use of AI and product features:

- Enhanced scam detection in Google Messages.

- Improved account recovery (e.g., Recovery Contacts).

- Continued user education campaigns.

AI is legitimately useful here:

- Detecting smishing patterns across massive message volumes.

- Identifying phishing kits and templates based on HTML/CSS/JS fingerprints.

- Clustering domains and infrastructure into attributed campaigns.
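
Fingerprinting kits by their markup can be sketched with only the standard library. The approach reduces each page to a set of structural tokens (tag names, `class` and `id` values) and compares pages using Jaccard similarity. This is a simplified stand-in chosen for illustration, not a description of Google's detection models, and the sample pages are invented.

```python
from html.parser import HTMLParser


class StructureTokenizer(HTMLParser):
    """Collect tag, class, and id tokens; visible text is ignored on purpose."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: set[str] = set()

    def handle_starttag(self, tag, attrs):
        self.tokens.add(f"tag:{tag}")
        for name, value in attrs:
            if name == "class" and value:
                self.tokens.update(f"class:{cls}" for cls in value.split())
            elif name == "id" and value:
                self.tokens.add(f"id:{value}")


def fingerprint(html: str) -> set[str]:
    parser = StructureTokenizer()
    parser.feed(html)
    parser.close()
    return parser.tokens


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection over size of the union; 1.0 when both sets are empty."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Invented sample pages: two brand skins of one kit, plus an unrelated page.
TOLL_SKIN = ('<form id="pay" class="card-form step1"><input class="cc-num">'
             '<button class="btn-pay">Pay your toll</button></form>')
POSTAL_SKIN = ('<form id="pay" class="card-form step1"><input class="cc-num">'
               '<button class="btn-pay">Pay redelivery fee</button></form>')
UNRELATED = '<div class="news"><p>Hello</p></div>'

if __name__ == "__main__":
    print(jaccard(fingerprint(TOLL_SKIN), fingerprint(POSTAL_SKIN)))  # 1.0
    print(jaccard(fingerprint(TOLL_SKIN), fingerprint(UNRELATED)))    # 0.0
```

Ignoring visible text is deliberate. A kit re-skinned for a different brand often keeps its form structure, so structural tokens tend to survive rebranding better than the copy does.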

But technical readers know the tradeoff:

- PhaaS platforms iterate quickly and can A/B test around AI defenses.

- Models are only as effective as their training data, feature engineering, and integration into real-time enforcement.

- Attackers can, in turn, use AI for content generation, domain selection, and evasion.

The Lighthouse case underlines an uncomfortable truth: you can't purely “ML your way out” of a well-funded criminal ecosystem. You need:

- Platform-level intelligence sharing.

- Coordinated takedowns.

- Legislative and civil action pressure.

AI is a tool in that stack—not the stack itself.

What this means for builders and defenders

If you build or secure systems that handle payments, identity, or messaging, Lighthouse should read like an architecture review of your threat model.

Key takeaways:

Assume phishing-as-a-service will target your brand.

- Monitor for:

  - Typosquatted domains.

  - Unauthorized brand use in login/checkout flows.

  - Lookalike SMS and RCS campaigns.

- Automate this via threat intel feeds and brand protection APIs where possible.
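
Here is a minimal sketch of the typosquat check. It assumes you already have a feed of newly observed domain names, and the brand and official domain are hypothetical placeholders. The check flags a candidate when any label (other than the TLD) embeds the brand, as in a hyphenated payment lure, or sits within a small edit distance of it.

```python
# Hypothetical protected brand and the registrable domain it legitimately uses.
BRAND = "exampletoll"
OFFICIAL_BASE = "exampletoll.com"


def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def is_suspicious(domain: str, max_distance: int = 2) -> bool:
    """Flag lookalikes of BRAND; ignores the official domain and its subdomains."""
    domain = domain.lower().rstrip(".")
    if domain == OFFICIAL_BASE or domain.endswith("." + OFFICIAL_BASE):
        return False
    # Check every label except the TLD, so brand-in-subdomain tricks are caught too.
    for label in domain.split(".")[:-1]:
        if BRAND in label.replace("-", ""):
            return True  # brand embedded in a longer name, e.g. exampletoll-pay
        if levenshtein(label, BRAND) <= max_distance:
            return True  # near-miss spelling, e.g. examp1etoll
    return False
```

This is deliberately naive. A production version would use the Public Suffix List to find registrable domains, normalize internationalized (punycode) names to catch homoglyphs, and tune `max_distance` per brand, because short brand names produce many false positives at distance 2.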

Shift left on user journey hardening.

- Add opinionated UX for payment and recovery flows:

  - Clear indicators when communication comes from official channels.

  - Consistent URLs and domains; reduce domain sprawl.

  - Standardized language users can learn to expect, so that off-script messages stand out.
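
Consistent URLs and domains can be enforced mechanically with a pre-send lint in whatever system generates customer messages. Every link must use HTTPS and point at a short list of canonical hostnames. The hostnames below are hypothetical placeholders. The idea is that if your own messages never deviate, users and filters get a stable baseline that makes lookalikes easier to spot.

```python
import re
from urllib.parse import urlparse

# Hypothetical canonical hostnames your organization commits to using in messages.
CANONICAL_HOSTS = {"example.com", "pay.example.com"}

LINK_RE = re.compile(r"https?://\S+", re.IGNORECASE)


def lint_outbound(message: str) -> list[str]:
    """Return a list of problems; an empty list means the message passes."""
    problems = []
    for url in LINK_RE.findall(message):
        parsed = urlparse(url)
        host = (parsed.hostname or "").lower()
        if parsed.scheme.lower() != "https":
            problems.append(f"non-https link: {url}")
        if host not in CANONICAL_HOSTS:
            problems.append(f"off-domain link: {url}")
    return problems


if __name__ == "__main__":
    print(lint_outbound("Your bill is ready: https://pay.example.com/bill"))  # []
    print(lint_outbound("Pay now: http://example-pay.net/x"))
```

Running this as a blocking check in CI or in the send pipeline keeps marketing and support teams from gradually reintroducing domain sprawl.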

Build resilient authentication.

- Assume OTP-over-SMS can be phished: kits like Lighthouse capture one-time codes alongside credentials and card data.

- Encourage FIDO2/WebAuthn and phishing-resistant MFA across consumer and enterprise surfaces.
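
Phishing-resistant MFA works because the credential is bound to the site. In WebAuthn, the browser only exercises a credential for a relying party ID that matches the page's own domain. It also records the requesting origin in `clientDataJSON`, and the authenticator's signature covers a hash of that JSON. As a result, a page on a typosquatted domain cannot produce an assertion the real site accepts, unlike an SMS code that a victim can simply type in. The sketch below shows only the `clientDataJSON` checks a relying party performs (type, challenge, origin). It deliberately skips signature and `authenticatorData` verification, which should come from a maintained WebAuthn library. The origin and the `sample_client_data` helper are hypothetical.

```python
import base64
import hmac
import json

# Hypothetical relying-party origin.
EXPECTED_ORIGIN = "https://accounts.example.com"


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Decode unpadded base64url, the encoding WebAuthn uses for challenges."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def check_client_data(client_data_json: bytes, expected_challenge: bytes) -> bool:
    """Partial assertion check covering type, challenge, and origin only.

    A real relying party must also verify the RP ID hash and flags in
    authenticatorData, and the signature over authenticatorData || SHA-256(clientDataJSON).
    """
    data = json.loads(client_data_json)
    if data.get("type") != "webauthn.get":
        return False
    received = b64url_decode(data.get("challenge", ""))
    if not hmac.compare_digest(received, expected_challenge):
        return False
    return data.get("origin") == EXPECTED_ORIGIN


def sample_client_data(origin: str, challenge: bytes) -> bytes:
    """Hypothetical helper that mimics the JSON a browser would produce."""
    return json.dumps({
        "type": "webauthn.get",
        "challenge": b64url_encode(challenge),
        "origin": origin,
    }).encode("utf-8")
```

A lookalike origin such as `https://accounts-example.example` fails the origin check even if every other field is correct. In a real browser, the ceremony would not get that far for the genuine site's credential.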

Instrument your abuse response like a product.

- A modern abuse team needs:

  - Data engineers for signal collection.

  - Applied ML for detection.

  - Counsel familiar with RICO/CFAA/Lanham, not just privacy law.

  - Playbooks for working with external platforms and law enforcement.

Treat crimeware ecosystems as first-class dependencies.

- Just as you model dependencies on open-source libraries or cloud regions, account for:

  - Active PhaaS kits targeting your vertical.

  - Their typical infrastructure patterns.

  - How quickly you can verify, attribute, and act.
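
How quickly you can verify, attribute, and act is a number you can track. The minimal sketch below assumes your abuse tooling logs when a lookalike domain was detected and when a takedown request went out. The log format and sample timestamps are hypothetical. It computes the median time-to-action so the figure can be watched like any other service-level objective.

```python
from datetime import datetime, timedelta
from statistics import median


def median_time_to_action(events: list[tuple[str, str]]) -> timedelta:
    """Median gap between detection and takedown request.

    events: (detected_at, actioned_at) pairs as ISO-8601 strings.
    """
    if not events:
        raise ValueError("no events to measure")
    gaps = [
        (datetime.fromisoformat(done) - datetime.fromisoformat(seen)).total_seconds()
        for seen, done in events
    ]
    return timedelta(seconds=median(gaps))


# Hypothetical log entries for three lookalike domains.
SAMPLE_EVENTS = [
    ("2025-03-01T10:00:00", "2025-03-01T14:00:00"),  # 4 h
    ("2025-03-02T09:00:00", "2025-03-02T10:30:00"),  # 1.5 h
    ("2025-03-03T08:00:00", "2025-03-04T08:00:00"),  # 24 h
]

if __name__ == "__main__":
    print(median_time_to_action(SAMPLE_EVENTS))  # 4:00:00
```

The median is used instead of the mean so that a single slow-to-respond hosting provider does not dominate the metric. Tracking the tail separately, for example the slowest decile, shows where escalation playbooks are needed.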

Lighthouse is a reminder: if criminals can run like SaaS companies, your security and legal responses must run like a platform business.

When the platforms fight back

The Lighthouse lawsuit won’t end smishing. Another kit will rise; another brand will be cloned. But something important is happening here.

Security has long been framed as a sequence of tactical patches—block a domain, educate a user, update a filter. Google’s move reframes it as structural: identify the operators, use civil and criminal statutes that match their actual operating model, and push back on the ecosystem, not just the symptoms.

For the technical community, that’s the real story. Phishing-as-a-service has grown into a mature, revenue-optimized software industry. Now the platforms it feeds on are starting to treat it like one—and take it apart with the same mix of intelligence, automation, and ruthless scalability that made it possible in the first place.

Source: BleepingComputer — "Google sues to dismantle Chinese platform behind global toll scams" (https://www.bleepingcomputer.com/news/security/google-sues-to-dismantle-chinese-platform-behind-global-toll-scams/)
