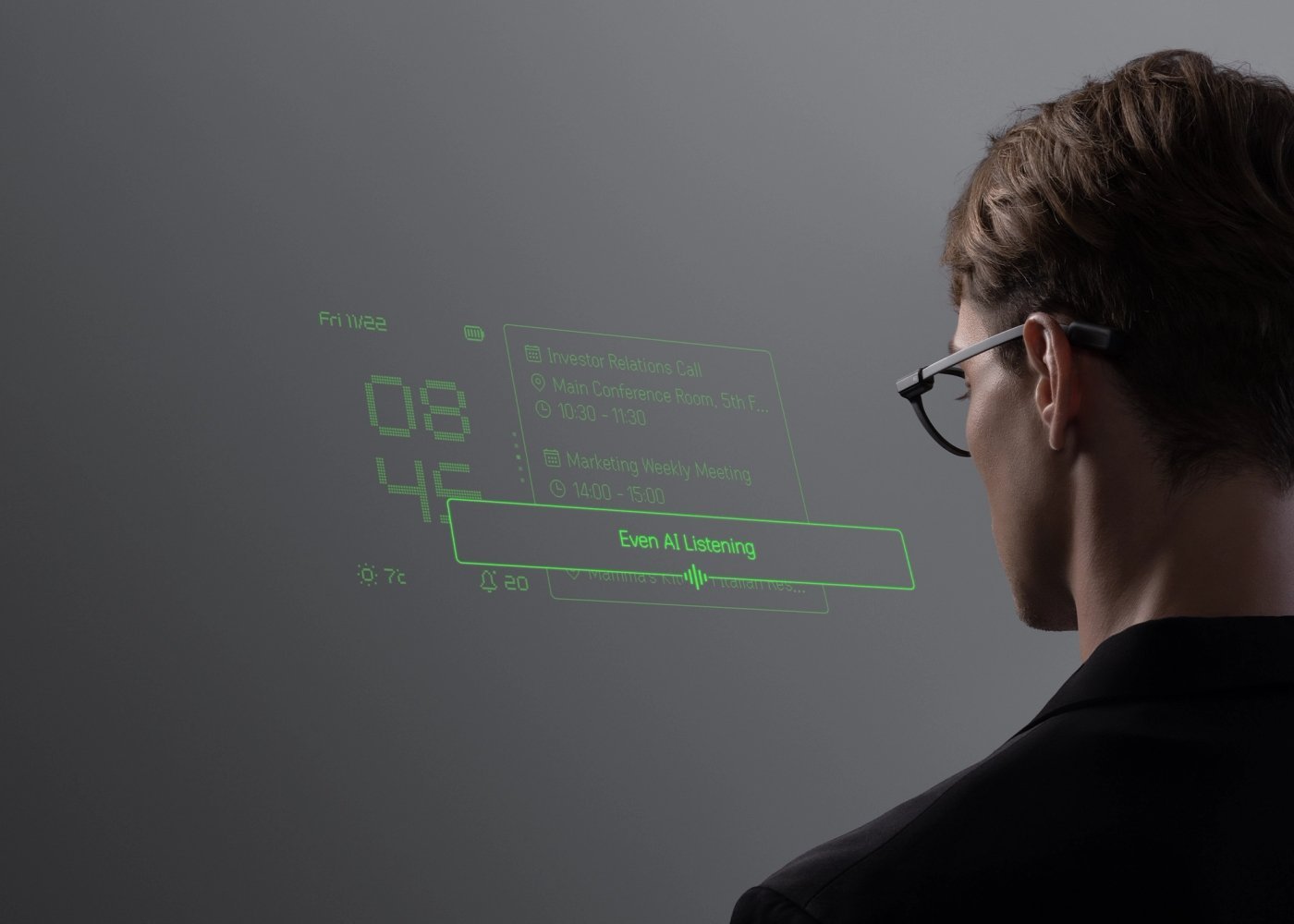

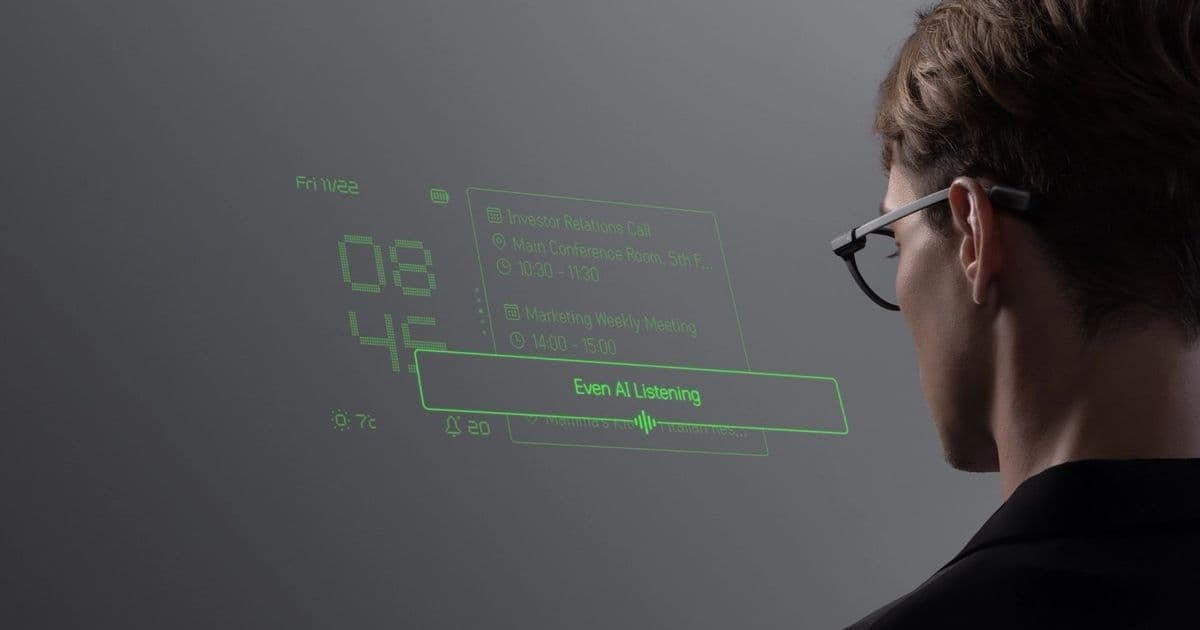

Even Realities’ new G2 smart glasses quietly fuse prescription-grade optics, contextual AI, and a companion smart ring into a discreet heads-up display for real life—not just demos. For developers and tech leaders, they signal a serious bid to turn ambient, privacy-conscious AI into a platform, not a gimmick.

Even G2 Smart Glasses Aim to Make Ambient AI Actually Wearable

Smart glasses have spent a decade oscillating between sci‑fi promise and social failure. Bulky frames, awkward cameras, patchy apps, dead batteries, and justifiable privacy backlash have kept most attempts in the "interesting prototype" bucket.

Even Realities' new Even G2 is the latest challenger to that curse—only this time, the ambition is sharper: a prescription-grade, camera-free, MicroLED display in everyday frames, driven by contextual AI and paired with a smart ring. It isn’t pitched as a toy. It’s positioned as infrastructure for ambient computing.

Source: Even Realities – Even G2 Smart Glasses

A Heads-Up Display That Hides in Plain Sight

Unlike most mixed reality headsets, Even G2 is built to pass as regular eyewear.

Key technical choices stand out:

- MicroLED, 640×350 resolution, 60 Hz, 1200 nits

- Waveguide-based binocular display with 27.5° FoV

- 98% optical passthrough for the real world

- Notifications projected at roughly 2 m distance to reduce eye strain

- Green monochrome display for legibility and efficiency

It’s a deliberate rejection of cinematic AR theatrics in favor of a narrow, utilitarian HUD: texts, prompts, directions, transcriptions, and AI hints living just at the edge of awareness.

Even claims the new lenses offer:

- 75% bigger and sharper display area vs. their previous generation

- Edge-to-edge clarity with no wobble/blur

- 20% thinner optical stack, 53% thinner temples, and reduced frame bulk

For developers, that constraint is interesting. This is not about volumetric dragons in your living room. It’s about latency, legibility, and cognitive ergonomics: how much timely, contextual information can you surface without hijacking a user’s attention or their social presence?

{{IMAGE:4}}

Contextual AI on Your Face: Conversate and Even AI

The core software story is Conversate, billed as "contextual AI" that listens (via microphones), interprets conversations, and surfaces:

- Explanations of unfamiliar terminology

- Public bios of people being discussed

- On-the-spot factual answers

- Suggested follow-up questions

- Live subtitles and translations (31 languages)

- Meeting and conversation summaries

On top of that sits "Even AI," which integrates large language models such as ChatGPT, Perplexity, and Even’s proprietary LLM for voice commands and dialog-style assistance.

A few technical implications matter for this audience:

Always-listening, selectively responding

- Wake phrase: “Hey, Even.”

- The feature set implies multi-mic beamforming and some degree of environmental awareness.

- The system must continuously capture and segment audio, run ASR, then pipe text into LLM-powered pipelines—all under tight latency constraints.
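To make the latency point concrete, here is a minimal sketch of that capture, ASR, then LLM chain with a hard time budget. Everything in it is an assumption for illustration: `run_pipeline`, the 1500 ms budget, and the stand-in `fake_asr` and `fake_llm` stages are hypothetical and say nothing about how Even actually implements Conversate.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Hint:
    text: str
    latency_ms: float


def run_pipeline(
    audio_segment: bytes,
    transcribe: Callable[[bytes], str],
    generate_hint: Callable[[str], str],
    budget_ms: float = 1500.0,  # illustrative budget, not a published figure
    clock: Callable[[], float] = time.monotonic,
) -> Optional[Hint]:
    """Run ASR, then an LLM step; drop the hint if it arrives too late."""
    start = clock()
    transcript = transcribe(audio_segment)
    if not transcript.strip():
        return None  # silence or noise: nothing to send downstream
    hint_text = generate_hint(transcript)
    elapsed_ms = (clock() - start) * 1000.0
    if elapsed_ms > budget_ms:
        return None  # a stale hint on a HUD is worse than no hint
    return Hint(text=hint_text, latency_ms=elapsed_ms)


# Stand-in stages; a real system would call an ASR engine and an LLM here.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(transcript: str) -> str:
    return f"Define: {transcript.split()[-1]}"


hint = run_pipeline(b"what is a waveguide", fake_asr, fake_llm)
```

The design choice worth noticing is the drop-on-timeout rule: for a glanceable display, discarding a late answer is arguably better than interrupting the wearer after the conversation has moved on.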

Context as a first-class primitive

- Conversate’s pitch isn’t just Q&A; it’s about tracking ongoing context across prompts and conversations.

- This suggests:

  - Session-based context windows

  - Entity extraction (names, companies, topics)

  - Task memory for follow-ups and summaries

- For developers, this hints at APIs where "ambient context" (what the user is hearing/doing) becomes an input dimension—far richer than a one-off voice command.
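As a rough illustration of what "context as a primitive" could look like in code, the sketch below keeps a rolling window of conversation turns, a set of extracted entities, and a list of follow-up tasks. The `SessionContext` class, its method names, and the regex-based entity extraction are all assumptions made for this example; a production system would likely use a proper NER model, and nothing here reflects a published Even API.

```python
import re
from collections import deque
from dataclasses import dataclass, field

# Naive stand-in for entity extraction: runs of capitalised words.
ENTITY_PATTERN = re.compile(r"\b[A-Z][A-Za-z0-9]+(?:\s+[A-Z][A-Za-z0-9]+)*")
COMMON_WORDS = {"We", "The", "This", "That", "It", "They", "Can", "Let"}


@dataclass
class SessionContext:
    max_turns: int = 20
    turns: deque = field(default_factory=deque)
    entities: set = field(default_factory=set)
    follow_ups: list = field(default_factory=list)

    def add_turn(self, speaker: str, text: str) -> None:
        """Append a turn, trim the window, and record any entities seen."""
        self.turns.append((speaker, text))
        while len(self.turns) > self.max_turns:
            self.turns.popleft()
        for match in ENTITY_PATTERN.findall(text):
            if match not in COMMON_WORDS:
                self.entities.add(match)

    def remember_follow_up(self, task: str) -> None:
        self.follow_ups.append(task)

    def prompt_window(self) -> str:
        """Render the recent turns as text an LLM prompt could include."""
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


ctx = SessionContext(max_turns=2)
ctx.add_turn("alice", "We met Even Realities about the Hub")
ctx.add_turn("bob", "send them the latency numbers")
ctx.add_turn("alice", "agreed")
ctx.remember_follow_up("Send latency numbers")
```

Note that entities and follow-ups outlive the turn window here: the oldest turn has been trimmed, but "Even Realities" is still remembered, which is the behavior a summary or follow-up feature would need.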

Assistive, not performative

- The UI runs silently to the wearer: hints during negotiations, definitions in technical meetings, or suggested questions in investor calls.

- That’s powerful—and ethically loaded. You’re effectively outsourcing parts of your cognition, live.

If Even executes this well, G2 becomes less of a gadget and more of a low-friction control plane for LLM-native workflows.

A Smart Ring as the Quiet Input Layer

The companion device, Even R1, is a clever design decision.

Instead of forcing mid-air gestures or constant temple tapping, the R1 ring offers:

- Tactile input: tap, scroll, and control the HUD discreetly

- Direct interaction with both the glasses and phone via Bluetooth (BLE 5.2)

- Continuous vital sign monitoring for wellness insights

For human–computer interaction, this division of labor is smart:

- Glasses: ambient display and audio capture

- Ring: intentional input and biometrics

It reduces the "Glasshole" signaling problem and gives developers another input modality to build around—think:

- Ring gestures as triggers for AI workflows

- Health and motion as contextual signals for notifications or assistance
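Both ideas can be sketched in a few lines. The gesture names, the workflow identifiers, and the heart-rate threshold below are hypothetical placeholders chosen for illustration; Even has not published a gesture or biometrics API that this is based on.

```python
from typing import Callable, Dict, Optional


class GestureRouter:
    """Maps ring gestures to workflow callbacks. Gesture names are made up."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def on(self, gesture: str, handler: Callable[[], str]) -> None:
        self._handlers[gesture] = handler

    def dispatch(self, gesture: str) -> Optional[str]:
        handler = self._handlers.get(gesture)
        return handler() if handler else None  # unknown gestures are ignored


def should_show(priority: int, heart_rate_bpm: int, moving: bool) -> bool:
    """Gate notifications on biometric context; thresholds are illustrative."""
    if priority >= 2:
        return True  # urgent items always get through
    busy = moving or heart_rate_bpm > 120
    return not busy


router = GestureRouter()
router.on("double_tap", lambda: "summarize_last_5_minutes")
router.on("long_press", lambda: "translate_current_speaker")
```

With this setup, `router.dispatch("double_tap")` returns the name of the workflow to start, and `should_show(priority=1, heart_rate_bpm=150, moving=False)` holds back a routine notification while the wearer appears to be exerting themselves.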

Serious About Optics: Prescription-First, Not Afterthought

Most "smart" eyewear treats prescription support as a begrudging add-on. Even goes the opposite way.

Highlights:

- Supports prescriptions from -12.00 to +12.00, including astigmatism

- Digitally surfaced lenses, each custom-etched per eye

- Multiple high-index options (1.60, 1.67, 1.74) tuned to prescription strength

- Anti-reflective and anti-fingerprint coatings

Combined with waveguides and MicroLED, the promise is that Even G2 can be your actual daily pair, not your "gadget glasses." That distinction is crucial: adoption at scale requires something people are willing to wear 12 hours a day, not 12 minutes.

No Camera, And Why That Matters

In a world now comfortable with CCTV but still allergic to face-mounted cameras, Even G2 makes a strong bet: no camera.

Instead, it leans on:

- Microphones for input

- On-device and encrypted processing pathways

- An explicit, consent-based approach to cloud usage

From a privacy and security viewpoint, that’s not just branding:

- Having no camera simplifies regulatory and social acceptance.

- It reduces the attack surface for visual data exfiltration.

- It forces more inventive approaches to context (audio, sensors, user input, LLM inference) instead of naive screen-scraping of reality.

For enterprises or regulated industries considering wearable AI, "no camera" is a feature, not a limitation.

Battery, Durability, and the Boring Stuff That Makes or Breaks Platforms

Even G2’s hardware stack is deliberately conservative where it counts:

- IP67: dust-tight and water-resistant (rated for immersion in up to 1 m of water for 30 minutes)

- Materials: titanium temples, magnesium frame

- Battery: up to 2 days on a charge, with a case that holds 7 full recharges

- Auto-brightness control to balance visibility and power

These details are unglamorous, but they anchor the claim that this is "everyday" hardware. For developers, the difference between “cool demo” and “real platform” is whether users actually keep the device on them. IP67 and multi-day effective runtime are the right answers.

{{IMAGE:5}}

Even OS, The Upcoming Hub, And Why Developers Should Care

Buried toward the end of Even’s pitch is the most strategic line: "The Even Hub. Coming soon. Here, developers can create powerful new tools, powered by Even OS." That’s where this product steps from gadget toward ecosystem.

If Even delivers a credible developer story, here’s what it could unlock:

Micro-interfaces for ambient workflows

- Ultra-lightweight HUD apps for:

  - Real-time code review hints in standups

  - Incident response runbooks during on-call

  - Discreet SRE metrics overlays while presenting


AI-native, context-rich plug-ins

- Access to:

  - Transcribed speech streams (with consent)

  - User tasks, schedules, location (with permissions)

  - Physiological signals from the ring

- Combined, this enables adaptive systems: adjusting prompts, nudging breaks, or tailoring information density.
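The consent and permission caveats in that list suggest a scoped access model. The following is a minimal sketch of one way a plug-in might only ever see events the user has granted; the `Scope` flags, `ContextEvent` type, and `visible_events` function are invented for this example and are not part of any announced Even Hub SDK.

```python
from dataclasses import dataclass
from enum import Flag, auto
from typing import Iterable, List


class Scope(Flag):
    """Hypothetical permission scopes a user could grant to a plug-in."""
    TRANSCRIPT = auto()
    SCHEDULE = auto()
    LOCATION = auto()
    BIOMETRICS = auto()


@dataclass(frozen=True)
class ContextEvent:
    scope: Scope
    payload: str


def visible_events(events: Iterable[ContextEvent], granted: Scope) -> List[ContextEvent]:
    """Return only the events whose scope is covered by the granted flags."""
    return [event for event in events if event.scope in granted]


events = [
    ContextEvent(Scope.TRANSCRIPT, "let's ship on Friday"),
    ContextEvent(Scope.BIOMETRICS, "heart_rate=88"),
    ContextEvent(Scope.LOCATION, "office"),
]
granted = Scope.TRANSCRIPT | Scope.BIOMETRICS
allowed = visible_events(events, granted)
```

In this example the plug-in receives the transcript and heart-rate events but never the location event, because filtering happens before anything reaches plug-in code.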


Enterprise & vertical integrations

- Healthcare: live terminology expansion, drug interaction checks

- Finance: regulatory hints, structured summary generation during calls

- Engineering: architecture references, ticket context, deployment status overlays

The challenge for Even will be balancing three competing forces:

- Developer access to rich context

- Hard privacy guarantees, especially around always-on audio

- Latency and reliability constraints on a device that must "just work"

If Even Hub exposes clear SDKs, event streams, and LLM-native primitives while keeping processing secure and scoped, G2 could become a reference model for ambient AI platforms.

The Stakes: From Novelty Glasses to Cognitive Co-Pilot

Even G2 is not the first attempt at smart glasses, nor the loudest. But it is one of the more opinionated:

- No camera

- Real prescriptions

- HUD, not holograms

- Contextual AI as the core experience

- Companion ring as the primary input channel

For developers, engineers, and tech leaders, the interesting question isn’t whether this will replace your phone. It’s whether this kind of device becomes the default interface for situational intelligence: surfacing the right hint, translation, metric, name, or next step at the exact moment you need it—without demanding a new ritual.

If Even Realities follows through with a robust Even OS and Hub, and if they uphold their privacy promises while enabling rich contextual apps, G2 won’t just be another entry in the smart glasses graveyard. It could be the first everyday wearable where ambient AI feels less like a spectacle and more like a quietly competent colleague, sitting just at the edge of your vision.
