Tor's ingenious onion routing architecture enables anonymous communication through layered encryption and distributed relays, but its design reveals fundamental tradeoffs between anonymity, usability, and vulnerability to sophisticated attacks. This technical deep-dive explores Tor's operational mechanics and inherent limitations.

For developers and cybersecurity professionals, Tor represents one of the most fascinating yet misunderstood privacy systems. Originally developed by the U.S. Naval Research Laboratory to protect intelligence communications, Tor (short for The Onion Router) has evolved into a globally distributed anonymity network. Its core innovation, onion routing, boils down to a clever cryptographic relay system that balances anonymity with practical usability, though not without significant tradeoffs.

The Onion Routing Principle

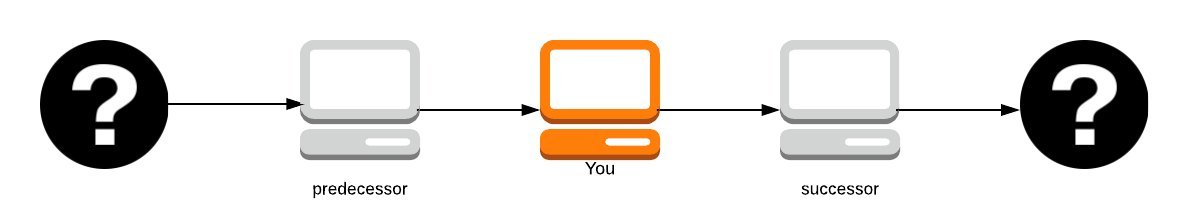

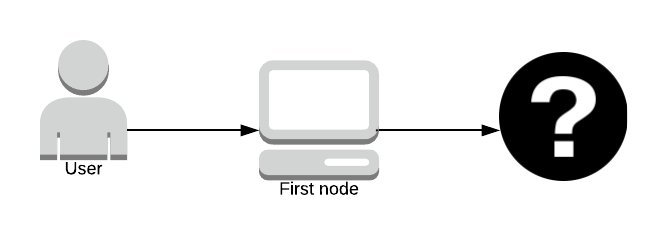

At Tor's heart lies onion routing: a technique where messages are wrapped in multiple layers of encryption, resembling an onion. Each layer corresponds to a hop in a predetermined circuit of three Tor nodes (relays). As data traverses this path:

- The entry node (guard node) peels off the outer encryption layer, revealing only the address of the next relay

- The middle relay decrypts its own layer and forwards the still-encrypted payload

- The exit node removes the final layer and delivers the request to the destination (in plaintext unless the application adds its own encryption, such as HTTPS)

Visualization of Tor's multi-layered encryption (Source: skerritt.blog)


Critically, no single node knows both the message origin and content. The guard node sees the user's IP but not the decrypted message. The exit node sees the message as it leaves the network but not the source. The middle relay sees neither. This compartmentalization creates anonymity through distributed trust.
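The layering described above can be sketched in a few lines. This is a toy model only: the keystream built from SHA-256 stands in for a real cipher (Tor itself uses AES in counter mode with keys negotiated per hop), and the `hop_keys` values are hypothetical placeholders rather than negotiated session keys.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 in counter mode (illustrative, not Tor's cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

def wrap(message: bytes, hop_keys: list[bytes]) -> bytes:
    """Client side: apply the exit layer first so the guard layer is outermost."""
    for key in reversed(hop_keys):
        message = xor_layer(key, message)
    return message

# Hypothetical per-hop keys, in path order: guard, middle, exit.
hop_keys = [b"guard-key", b"middle-key", b"exit-key"]
cell = wrap(b"GET / HTTP/1.1", hop_keys)

# Each relay strips only its own layer as the cell moves along the circuit.
for key in hop_keys:
    cell = xor_layer(key, cell)

print(cell)  # the original request, readable only after all three layers are gone
```

Because XOR layers commute, this toy does not enforce the peel order; it only shows that the payload stays unreadable until every hop's key has been applied.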

Circuit Construction: Security Through Constraints

When initiating a connection, Tor clients build circuits with strict relay selection rules:

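```python
# Simplified relay selection pseudocode.
# choose_exit, random_relay, same_family and same_slash16_subnet are
# illustrative helpers, not functions from the Tor codebase.
def select_relays(guard_node, allow_ports, capacity):
    # The exit is chosen first because it must permit the destination port.
    exit_node = choose_exit(allow_ports, capacity)
    existing_nodes = [guard_node, exit_node]
    middle_node = None
    # A circuit has three hops, so only one middle relay is needed.
    while middle_node is None:
        candidate = random_relay()
        if (candidate not in existing_nodes and
                not same_family(candidate, existing_nodes) and
                not same_slash16_subnet(candidate, existing_nodes) and
                candidate.is_valid):
            middle_node = candidate
    return [guard_node, middle_node, exit_node]
```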

Key constraints include:

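- Guard node persistence: Clients keep the same guard for a period of months (currently around 120 days by default), which limits how often they are exposed to a potentially malicious entry relay

- Subnet diversity: No two relays in a circuit may come from the same /16 IPv4 subnet

- Family avoidance: Excludes relays that declare themselves part of the same operator's family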

- Capacity weighting: Prefers nodes with available bandwidth
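The subnet and family constraints are easy to check in isolation. The sketch below is a minimal illustration, assuming a hypothetical `Relay` record and made-up documentation-range addresses; it treats two relays as family only when each lists the other, and it ignores capacity weighting entirely.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Relay:
    nickname: str
    ip: str
    family: frozenset = frozenset()  # nicknames this relay declares as family

def same_slash16(a: Relay, b: Relay) -> bool:
    """True if both IPv4 addresses fall inside the same /16 network."""
    net_a = ipaddress.ip_network(f"{a.ip}/16", strict=False)
    return ipaddress.ip_address(b.ip) in net_a

def same_family(a: Relay, b: Relay) -> bool:
    """Count relays as family only when the declaration is mutual."""
    return b.nickname in a.family and a.nickname in b.family

def compatible(candidate: Relay, chosen: list[Relay]) -> bool:
    """A candidate may join the circuit only if it clashes with no chosen relay."""
    return all(
        candidate != r
        and not same_slash16(candidate, r)
        and not same_family(candidate, r)
        for r in chosen
    )

# Hypothetical relays for illustration.
guard = Relay("guardA", "198.51.100.7")
exit_ = Relay("exitB", "203.0.113.9", frozenset({"midC"}))
mid_c = Relay("midC", "192.0.2.44", frozenset({"exitB"}))  # same family as exitB
mid_d = Relay("midD", "198.51.7.1")                        # same /16 as guardA
mid_e = Relay("midE", "192.0.2.80")

usable = [r.nickname for r in (mid_c, mid_d, mid_e) if compatible(r, [guard, exit_])]
print(usable)  # ['midE']
```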

These rules mitigate (but don't eliminate) end-to-end attacks where adversaries control both guard and exit nodes—a vulnerability inherent to Tor's design.
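A back-of-envelope model shows why guard pinning matters here. It assumes an adversary who controls a fraction `g` of guard selection probability and a fraction `e` of exit selection probability, and it treats every circuit as an independent draw; both the model and the numbers are illustrative assumptions, not measurements of the real network.

```python
def p_compromised_unpinned(g: float, e: float, circuits: int) -> float:
    """Fresh guard per circuit: each circuit is compromised with probability g * e."""
    return 1 - (1 - g * e) ** circuits

def p_compromised_pinned(g: float, e: float, circuits: int) -> float:
    """One pinned guard: exposure requires that guard to be malicious,
    plus at least one malicious exit across all circuits."""
    return g * (1 - (1 - e) ** circuits)

g, e, circuits = 0.05, 0.05, 1000  # hypothetical adversary share and usage
print(round(p_compromised_unpinned(g, e, circuits), 3))
print(round(p_compromised_pinned(g, e, circuits), 3))
```

With these made-up inputs the unpinned figure lands near 0.92 while the pinned one stays near 0.05: pinning caps a user's long-run exposure at roughly the chance that their single guard is malicious, at the cost that an unlucky user stays exposed for the whole guard lifetime.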

Critical Network Services

Tor relies on specialized infrastructure for coordination:

Directory Authorities (9 trusted servers):

- Publish hourly consensus documents listing all active relays

- Mitigate Sybil attacks by requiring agreement among independently operated authorities before a relay is listed
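The idea behind the consensus can be illustrated with a plain majority vote. This is a deliberately simplified sketch: the authority and relay names are hypothetical, and the real voting rules also cover relay flags, bandwidth weights, and signatures, none of which are modelled here.

```python
from collections import Counter

def build_consensus(votes: dict[str, set[str]]) -> set[str]:
    """Keep only relays listed by a strict majority of authorities."""
    threshold = len(votes) // 2 + 1
    counts = Counter(relay for listed in votes.values() for relay in listed)
    return {relay for relay, n in counts.items() if n >= threshold}

# Nine hypothetical authorities; every one of them lists relayA.
votes = {f"auth{i}": {"relayA"} for i in range(1, 10)}
for i in range(1, 6):
    votes[f"auth{i}"].add("relayB")   # seen by 5 of 9 authorities
for i in range(1, 5):
    votes[f"auth{i}"].add("relayC")   # seen by only 4 of 9 authorities

print(sorted(build_consensus(votes)))  # ['relayA', 'relayB']
```

An attacker who compromises a minority of authorities cannot insert relays on their own under this rule, which is the sense in which trust is distributed.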

Bridge Nodes:

- Unlisted relays that bypass censorship

- Use pluggable transports to disguise Tor traffic (e.g., mimicking HTTPS)

Hidden Services (now called onion services):

- Enable anonymous servers via .onion addresses

- Use introduction points and rendezvous routing to decouple client/server identities

- Publish service descriptors to a distributed hash ring of directory relays (HSDirs), similar to a DHT, rather than to a central server
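For current (version 3) onion services, the .onion address is derived directly from the service's public key, which is why no registry is needed. The sketch below follows the published address format (public key, two-byte SHA3-256 checksum, version byte, base32-encoded); the key used here is random placeholder bytes, not a real Ed25519 key, so the resulting address is not reachable.

```python
import base64
import hashlib
import os

def onion_address(pubkey: bytes) -> str:
    """Encode a 32-byte public key as a version 3 .onion address."""
    if len(pubkey) != 32:
        raise ValueError("expected a 32-byte public key")
    version = b"\x03"
    checksum = hashlib.sha3_256(b".onion checksum" + pubkey + version).digest()[:2]
    encoded = base64.b32encode(pubkey + checksum + version).decode("ascii").lower()
    return encoded + ".onion"

placeholder_key = os.urandom(32)  # stand-in bytes, NOT a real Ed25519 public key
addr = onion_address(placeholder_key)
print(addr)
print(len(addr))  # 56 base32 characters plus ".onion"
```

Since the address commits to the key, a client that reaches the service can verify it is talking to the holder of the matching private key without trusting any naming authority.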

Attack Vectors and Limitations

Despite its ingenuity, Tor faces persistent threats:

| Attack Type | Mechanism | Mitigation Status |
|---|---|---|
| End-to-end correlation | Adversary controls guard + exit | Theoretically possible |
| Traffic fingerprinting | Statistical analysis of packet timing/size | Padding improvements underway |
| Sybil attacks | Flooding network with malicious relays | Guard pinning + consensus monitoring |
| Application leaks | Protocols like BitTorrent reveal IP | Application-layer fixes needed |
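To see why end-to-end correlation is hard to rule out, consider an observer who records packet counts per time window at both ends of the network. The sketch below uses a plain Pearson correlation on made-up traffic counts; real attacks use more sophisticated statistics, so treat this as an intuition aid rather than an attack implementation.

```python
import math

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical packets-per-second counts observed in the same time windows.
entry_flow = [12, 0, 45, 3, 30, 0, 22, 8]        # seen at a guard
exit_flow_same = [11, 1, 43, 4, 29, 0, 21, 9]    # same user's traffic at an exit
exit_flow_other = [5, 20, 7, 31, 2, 18, 9, 25]   # an unrelated user's traffic

print(pearson(entry_flow, exit_flow_same))   # close to 1
print(pearson(entry_flow, exit_flow_other))  # far from 1
```

Encryption hides content but not the shape of the flow, which is why padding and traffic shaping, rather than stronger ciphers, are the relevant defenses.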

As security researcher Dan Egerstad noted:

"If you look at where Tor nodes are hosted... some cost thousands monthly. Who pays this anonymously?"

Most user compromises stem from operational errors, not protocol flaws. The Harvard student caught after sending bomb threats via Tor and Guerrilla Mail learned this harshly: the email service embedded the originating IP address in the message headers, which pointed to a Tor exit node, and authorities then checked campus network logs to see who had been connected to Tor at that time.

The Usability-Security Tension

Tor's architecture embodies critical tradeoffs:

- Anonymity through volume: More users = harder attacks, necessitating easy adoption

- Performance sacrifices: Multi-hop routing introduces latency, mainly from the extra network round trips and relay congestion, with per-hop AES processing adding a smaller cost

- Centralization necessities: Directory authorities create trust dependencies

- Protocol limitations: Cannot prevent confirmation attacks ("Is Alice talking to Bob?")

Tor remains a remarkable feat of privacy engineering—not perfect anonymity, but the best practical implementation of distributed trust we have. Its ongoing evolution (like next-generation onion services and improved traffic obfuscation) continues the cat-and-mouse game between privacy advocates and well-resourced adversaries. For developers, understanding these mechanics reveals both the power and peril of building systems where anonymity is the prime directive.

Source: Technical analysis adapted from skerritt.blog
