Learn how to implement sophisticated traffic splitting for AI APIs using Apache APISIX, enabling seamless version migrations and canary testing. This step-by-step guide covers security hardening, weighted distribution, and observability for modern AI workloads.

The API Gateway Evolution: Taming AI Traffic with Apache APISIX

As AI adoption grows, developers have to manage API traffic across models that change quickly. Apache APISIX, an open-source API gateway, can route requests between API versions by weight, which makes it a practical tool for orchestrating AI workloads. This walkthrough shows how to set up weighted traffic splitting for AI services, from securing the gateway to validating the split.

Why Traffic Splitting Matters in AI Ecosystems

Modern AI deployments demand more than simple round-robin distribution:

- Version Rollouts: Safely migrate between GPT-4 and newer models

- A/B Testing: Validate performance of Claude 3 vs. Mistral endpoints

- Zero-Downtime Updates: Shift traffic during model retraining cycles

- Failure Containment: Isolate problematic API versions instantly

"Traffic splitting transforms API gateways into AI orchestration engines. APISIX's dynamic routing allows real-time experimentation that's crucial for ML ops." - API Infrastructure Engineer

Implementation Blueprint: From Security to Validation

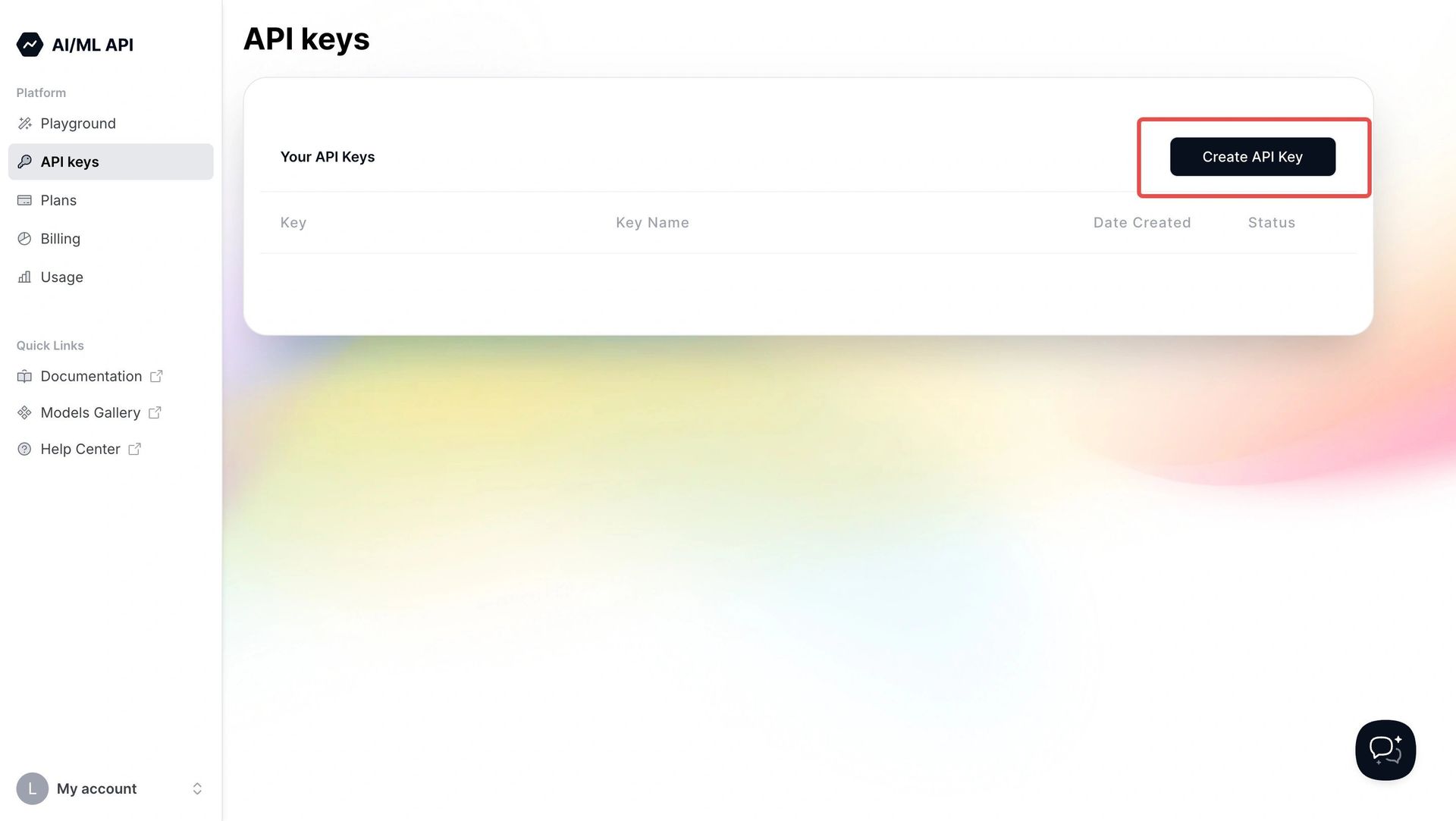

1. Fortress Foundations: Securing the Control Plane

Before routing traffic, lock down APISIX's Admin API:

    # config.yaml: critical security settings (APISIX 3.x layout)
    deployment:
      admin:
        admin_key_required: true
        admin_key:
          - name: admin
            key: "7x!A2Df5*9z$C1vBnM@q8t#wE"  # example only; use your own secret of 32+ characters
            role: admin
        allow_admin:
          - 192.168.1.0/24  # Production management CIDR
          - 10.10.0.2/32    # CI/CD pipeline IP

Security Tip: Rotate keys quarterly and never list 0.0.0.0/0 under allow_admin in production.
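
The tip above can be turned into a pre-deployment check. The following is a minimal Python sketch and not part of APISIX: `audit_admin_settings` is a hypothetical helper, and the 32-character minimum is taken from the note in the config, not from any APISIX requirement.

```python
import ipaddress


def audit_admin_settings(admin_key: str, allow_admin: list[str], min_key_len: int = 32) -> list[str]:
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    if len(admin_key) < min_key_len:
        problems.append(
            f"admin key has {len(admin_key)} characters, expected at least {min_key_len}"
        )
    for cidr in allow_admin:
        try:
            network = ipaddress.ip_network(cidr, strict=False)
        except ValueError:
            problems.append(f"{cidr!r} is not a valid IP address or CIDR range")
            continue
        # A prefix length of 0 (0.0.0.0/0 or ::/0) matches every address.
        if network.prefixlen == 0:
            problems.append(f"{cidr} allows every address")
    return problems


if __name__ == "__main__":
    sample_key = "7x!A2Df5*9z$C1vBnM@q8t#wE"
    for problem in audit_admin_settings(sample_key, ["192.168.1.0/24", "10.10.0.2/32"]):
        print(problem)
```

Run against the sample values, the check accepts both CIDR entries and flags the example key, which is 25 characters long.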

2. Traffic Surgery: The Split Plugin in Action

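APISIX's traffic-split plugin enables weighted distribution. Each rule lists its targets under weighted_upstreams; chat-v1 and chat-v2 below are placeholder IDs for pre-created upstreams backing the v1 and v2 chat completion APIs:

    {
      "plugins": {
        "traffic-split": {
          "rules": [
            {
              "weighted_upstreams": [
                {"upstream_id": "chat-v1", "weight": 50},
                {"upstream_id": "chat-v2", "weight": 50}
              ]
            }
          ]
        }
      }
    }

The plugin picks an upstream per request. It does not rewrite the URI, so a path difference such as /v1/chat/completions versus /v2/chat/completions has to be handled by the upstream itself or by a separate rewrite step.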

Real-World Use: Adjust weights dynamically during canary releases via the Admin API.
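
A canary rollout usually means re-sending the plugin config with a new weight at each step. The sketch below is one way to script that in Python, under stated assumptions: the helper names, the route ID `aimlapi-split`, and the upstream ID `canary-v2` are illustrative, the Admin API is assumed to listen on its APISIX 3.x default port 9180, and the request is only prepared, never sent. How a PATCH merges with a route's existing plugin config should be checked against the Admin API documentation for your version.

```python
import json
import urllib.request


def traffic_split_plugin(canary_percent: int, canary_upstream_id: str) -> dict:
    """Build a traffic-split config that sends canary_percent of requests to the canary."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "traffic-split": {
            "rules": [
                {
                    "weighted_upstreams": [
                        {"upstream_id": canary_upstream_id, "weight": canary_percent},
                        # An entry with only a weight falls through to the route's own upstream.
                        {"weight": 100 - canary_percent},
                    ]
                }
            ]
        }
    }


def build_patch_request(admin_url: str, route_id: str, admin_key: str, plugins: dict) -> urllib.request.Request:
    """Prepare (but do not send) an Admin API PATCH carrying the new plugin config."""
    body = json.dumps({"plugins": plugins}).encode("utf-8")
    return urllib.request.Request(
        url=f"{admin_url}/apisix/admin/routes/{route_id}",
        data=body,
        method="PATCH",
        headers={"X-API-KEY": admin_key, "Content-Type": "application/json"},
    )


if __name__ == "__main__":
    request = build_patch_request(
        "http://127.0.0.1:9180", "aimlapi-split", "REPLACE_ME", traffic_split_plugin(10, "canary-v2")
    )
    print(request.get_method(), request.full_url)
    # urllib.request.urlopen(request) would apply the change against a live gateway.
```

Stepping the canary from 10 to 25 to 50 percent then amounts to calling `traffic_split_plugin` with a new value and sending the resulting request.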

3. Validation & Observability

Confirm distribution accuracy with load testing:

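    # Send 1000 test requests, 20 at a time (AIML_KEY must be set in the environment).
    # -n0 stops parallel from appending each number to the command;
    # -q keeps the quoted header as a single argument.
    seq 1000 | parallel -j 20 -n0 -q curl -s -o /dev/null \
      -H "Authorization: Bearer $AIML_KEY" \
      -X POST http://localhost:9080/chat/completions

With the prometheus plugin enabled on the route, APISIX exports request counts as apisix_http_status, labeled by route and by upstream node. Grouping by node shows how the requests were distributed:

    # Monitor upstream distribution (PromQL)
    sum by (node) (apisix_http_status{route="aimlapi-split"})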

Pro Tip: Integrate Prometheus metrics for real-time dashboards instead of log scraping.
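
Once per-upstream counts are in hand, the comparison against the configured weights can be automated. This is a minimal Python sketch, assuming the counts have already been read from Prometheus or from logs; `split_shares`, `within_tolerance`, and the 5-point default tolerance are illustrative choices, not APISIX features.

```python
def split_shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert per-upstream request counts into fractions of the total."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no requests recorded")
    return {name: n / total for name, n in counts.items()}


def within_tolerance(counts: dict[str, int], weights: dict[str, int], tolerance: float = 0.05) -> bool:
    """True if every upstream's observed share is within `tolerance` of its configured share."""
    weight_total = sum(weights.values())
    if weight_total == 0:
        raise ValueError("weights must not all be zero")
    shares = split_shares(counts)
    return all(
        abs(shares.get(name, 0.0) - weight / weight_total) <= tolerance
        for name, weight in weights.items()
    )


if __name__ == "__main__":
    observed = {"v1": 512, "v2": 488}  # hypothetical counts from a 1000-request run
    print(within_tolerance(observed, {"v1": 50, "v2": 50}))
```

An upstream that is configured but never appears in the counts is treated as having a share of zero, so a branch that silently receives no traffic fails the check.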

Beyond Basic Splitting: Production-Grade Enhancements

Scale your implementation with:

- Rate Limiting - Protect AI backends from traffic spikes

- Circuit Breaking - Automatically quarantine failing endpoints

- Authentication - Layer security with the jwt-auth or key-auth plugin

- Infrastructure as Code - Manage via Kubernetes CRDs for reproducibility
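
To make the first three items concrete, the sketch below assembles a route-level `plugins` object combining the `limit-count`, `api-breaker`, and `key-auth` plugins. It is an illustration under stated assumptions: `hardening_plugins` is a hypothetical helper, the thresholds are arbitrary, and the field names follow the plugin documentation as recalled here, so they should be verified against the APISIX version in use.

```python
def hardening_plugins(requests_per_minute: int, breaker_failures: int = 3) -> dict:
    """Assemble a route-level `plugins` object with rate limiting, circuit breaking, and key auth."""
    if requests_per_minute <= 0 or breaker_failures <= 0:
        raise ValueError("limits must be positive")
    return {
        # Rate limiting: cap requests per client IP within a 60-second window.
        "limit-count": {
            "count": requests_per_minute,
            "time_window": 60,
            "key_type": "var",
            "key": "remote_addr",
            "rejected_code": 429,
        },
        # Circuit breaking: stop forwarding after repeated upstream errors.
        "api-breaker": {
            "break_response_code": 502,
            "max_breaker_sec": 60,
            "unhealthy": {"http_statuses": [500, 502, 503], "failures": breaker_failures},
            "healthy": {"http_statuses": [200], "successes": 1},
        },
        # Authentication: the key itself is configured on a consumer, not on the route.
        "key-auth": {},
    }


if __name__ == "__main__":
    import json

    print(json.dumps(hardening_plugins(120), indent=2))
```

The resulting object can be merged with a traffic-split config under the same `plugins` key, so splitting and protection live on one route.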

The API Gateway as AI Orchestrator

This configuration transforms APISIX from a simple proxy to an intelligent traffic conductor. By mastering these techniques, teams gain:

- Lower-risk experimentation with next-gen AI models

- Quantifiable performance comparisons between providers

- Graceful degradation during model updates

- Unified control plane for multi-vendor AI services

The future of AI deployment isn't just about building models; it's about architecting resilient delivery systems. APISIX provides the traffic management backbone that turns experimental AI into production-ready services.

This article is based on the original guide from the Apache APISIX blog.
