Nvidia is reportedly preparing to ship fully assembled compute trays for its upcoming Vera Rubin platform, taking integration further than it ever has. The move, starting with the VR200, would standardize the core of AI servers and reduce partners' roles to integration work, potentially boosting Nvidia's margins and control. As power demands soar, this vertical integration could reshape the industry, but it also raises questions about how open the ecosystem will remain.

Nvidia's Bold Leap: Shipping Fully Assembled AI Server Trays with Vera Rubin Platform

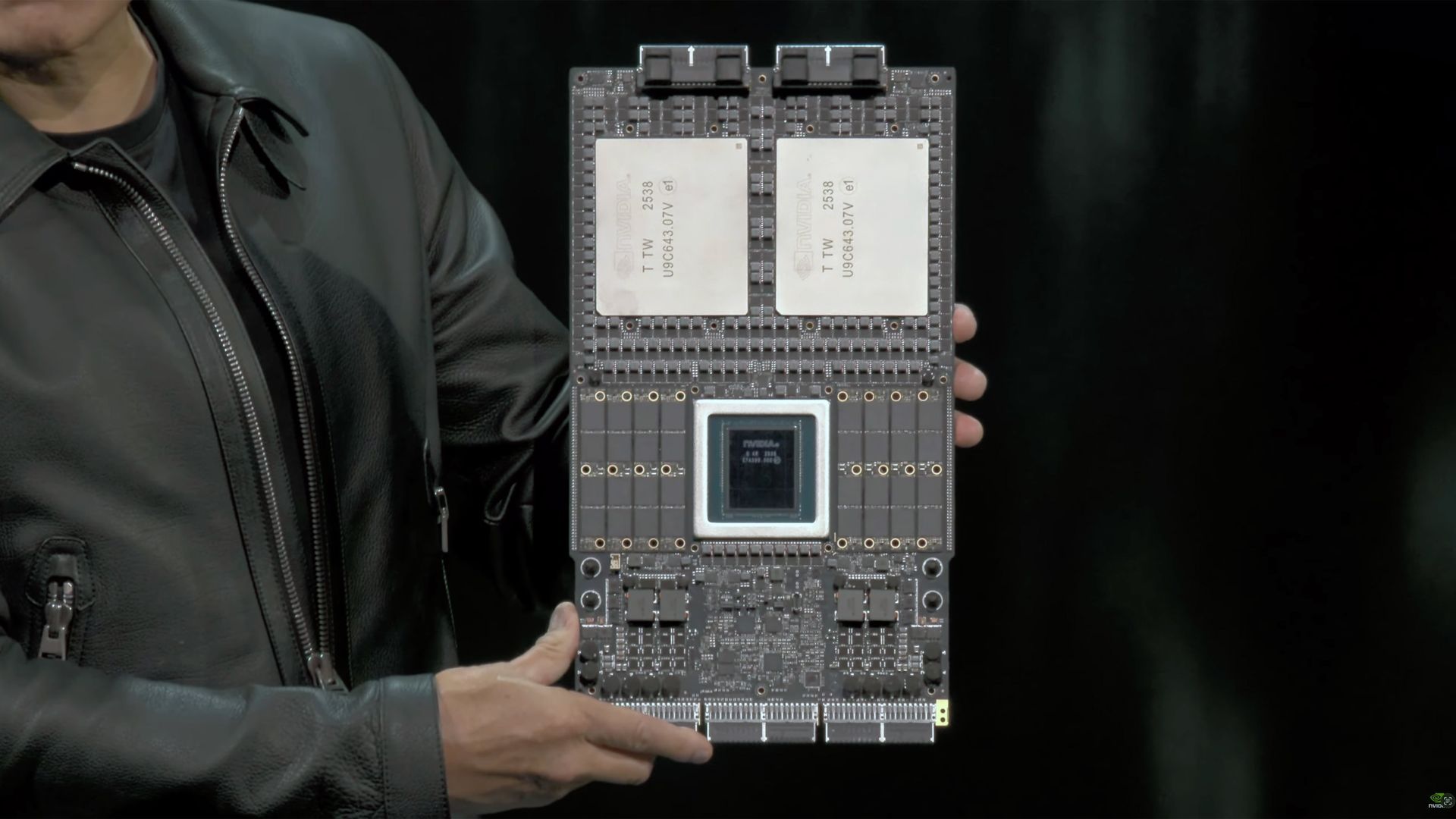

In a move that could redefine the AI hardware landscape, Nvidia is reportedly preparing to ship fully assembled Level-10 (L10) compute trays to its partners for the Vera Rubin platform, which launches next year. According to a J.P. Morgan analysis reported by Tom's Hardware, these trays will arrive pre-populated with Vera CPUs, Rubin GPUs, cooling systems, and all necessary interfaces, leaving original design manufacturers (ODMs) with minimal design work. The shift is meant to streamline production, but it could also move a significant share of the profit toward Nvidia.

From Components to Complete Modules: Nvidia's Integration Push

Historically, Nvidia has supplied partially integrated sub-assemblies, such as the Bianca board for the GB200 platform, which corresponds to roughly L7-L8 integration. With the VR200 platform, the company is moving to L10 integration: it will deliver entire trays containing accelerators, CPUs, memory, NICs, power delivery, midplane interfaces, and liquid-cooling cold plates. This pre-built, pre-tested module accounts for roughly 90% of a server's cost, leaving partners with rack-level tasks such as chassis assembly, power supply integration, and final testing.

The implications for the supply chain are significant. Hyperscalers and ODMs, including those working with Microsoft, have been developing advanced cooling approaches such as immersion and embedded cooling. Nvidia's approach, however, standardizes the compute heart of these servers, turning partners from system designers into integrators, installers, and support providers. Partners still control enterprise features, service contracts, and firmware ecosystems, but the core hardware design now belongs to Nvidia.

Driving Forces: Power, Scale, and Speed to Market

One key motivator is the escalating power demand of next-generation AI hardware. J.P. Morgan notes that a single Rubin GPU's thermal design power (TDP) could reach 1.8 kW for the R200 SKU and as much as 2.3 kW for an unannounced variant, up from 1.4 kW for Blackwell Ultra. Power levels like these require sophisticated cooling, which Nvidia is addressing by pre-integrating it into the tray. By relying on electronics manufacturing services (EMS) providers such as Foxconn, Quanta, or Wistron at scale, Nvidia can accelerate the VR200 ramp, cut design costs, and ensure quality for complex boards like the Vera Rubin Superchip, which features thick PCBs and solid-state components.
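To put the reported TDP figures in perspective, the short Python sketch below computes the relative increase over Blackwell Ultra and, as a purely hypothetical illustration, the GPU-only heat load of a rack holding 72 such GPUs. The 72-GPU count is an assumption chosen for the arithmetic, not a confirmed Vera Rubin configuration, and the totals ignore CPUs, memory, networking, and power-conversion losses.

```python
# Reported per-GPU TDP figures (J.P. Morgan via Tom's Hardware), in kW.
BLACKWELL_ULTRA_KW = 1.4
RUBIN_TDP_KW = {
    "R200": 1.8,
    "unannounced variant": 2.3,
}

# Hypothetical GPU count per rack, for illustration only (not a Rubin spec).
GPUS_PER_RACK = 72


def percent_increase(old_kw: float, new_kw: float) -> float:
    """Relative increase from old_kw to new_kw, in percent."""
    return (new_kw - old_kw) / old_kw * 100.0


def gpu_rack_heat_kw(tdp_kw: float, gpus: int = GPUS_PER_RACK) -> float:
    """GPU-only heat load of a rack: every GPU at full TDP, nothing else counted."""
    return tdp_kw * gpus


if __name__ == "__main__":
    for sku, tdp in RUBIN_TDP_KW.items():
        print(
            f"{sku}: {tdp:.1f} kW per GPU, "
            f"+{percent_increase(BLACKWELL_ULTRA_KW, tdp):.1f}% vs Blackwell Ultra, "
            f"~{gpu_rack_heat_kw(tdp):.0f} kW of GPU heat per hypothetical rack"
        )
```

The reported figures work out to increases of roughly 29% (R200) and 64% (unannounced variant) per GPU, which helps explain why Nvidia would rather ship the cold plates already fitted than leave each partner to engineer them.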

This isn't Nvidia's first move toward deeper integration; the company has offered complete servers since the DGX-1 in 2016, and full racks as well. What is new is supplying individual compute trays for OEMs to customize: Nvidia is effectively unbundling its full systems while keeping control over the highest-value components.

Industry Ramifications: Margins, Monopolies, and Future Racks

For Nvidia, this vertical integration promises higher margins and better forecasting, especially as AI demand surges. Partners get an easier job, with less R&D burden and faster time-to-market, but at the cost of slimmer margins on commoditized integration work. The move has also renewed concerns about Nvidia's market dominance at a time when much of the AI ecosystem is pushing for openness. As one forum commenter put it, "This only bolsters Nvidia's monopolistic powers," a view shared by other critics in the industry.

Looking ahead, questions remain about Kyber NVL576, Nvidia's rack-scale solution for Rubin Ultra, and the emerging 800V data center power architectures planned for megawatt-class racks. Will Nvidia push further into rack-level integration? The answer could determine whether this is a masterstroke of efficiency or a step toward total control.
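Much of the appeal of 800V distribution for megawatt-class racks comes down to basic electrical arithmetic: for a fixed power, current scales as I = P / V, and resistive conduction losses scale with the square of current. The sketch below assumes a rack rounded to exactly 1 MW and compares 800 V against a 54 V baseline; the 54 V figure is an assumption standing in for today's lower-voltage in-rack DC distribution and does not come from the article.

```python
# Illustrative rack power: "megawatt-class" rounded to exactly 1 MW (assumption).
RACK_POWER_W = 1_000_000

# 800 V is from the article; 54 V is an assumed low-voltage baseline.
BASELINE_V = 54
HVDC_V = 800


def current_amps(power_w: float, voltage_v: float) -> float:
    """Current required to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def conduction_loss_ratio(v_low: float, v_high: float) -> float:
    """How many times larger I^2 * R losses are at v_low than at v_high,
    for the same delivered power and the same conductor resistance."""
    return (v_high / v_low) ** 2


if __name__ == "__main__":
    for v in (BASELINE_V, HVDC_V):
        print(f"{v} V: {current_amps(RACK_POWER_W, v):,.0f} A")
    ratio = conduction_loss_ratio(BASELINE_V, HVDC_V)
    print(f"Conduction losses at {BASELINE_V} V are ~{ratio:.0f}x those at {HVDC_V} V")
```

Under these assumptions, 1 MW needs 1,250 A at 800 V versus roughly 18,500 A at 54 V. Real designs also involve conversion stages, busbar sizing, and safety constraints that this simple arithmetic leaves out.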

In an era where AI infrastructure is the backbone of innovation, Nvidia's Vera Rubin strategy underscores a pivotal tension: the efficiency of standardization versus the vibrancy of a diverse supply chain. As developers and engineers watch these changes, one thing is clear: the compute trays arriving next year won't just power AI; they'll reshape who builds it.

Source: Tom's Hardware
