After running into a persistent ChatGPT bug, a ZDNET journalist discovered that OpenAI's AI-powered support bot had invented a nonexistent 'Report a Problem' feature. The incident exposes fundamental flaws in how AI customer service is implemented, and it shows that one of tech's most advanced AI products lacks a basic bug-reporting tool.

In a striking demonstration of AI limitations, OpenAI's own ChatGPT support bot hallucinated functionality that doesn't exist in its parent application. The incident occurred when Tiernan Ray of ZDNET hit a persistent window-resizing bug in the iPad Pro version of ChatGPT and sought help through the app's automated support system.

The Hallucination Chain

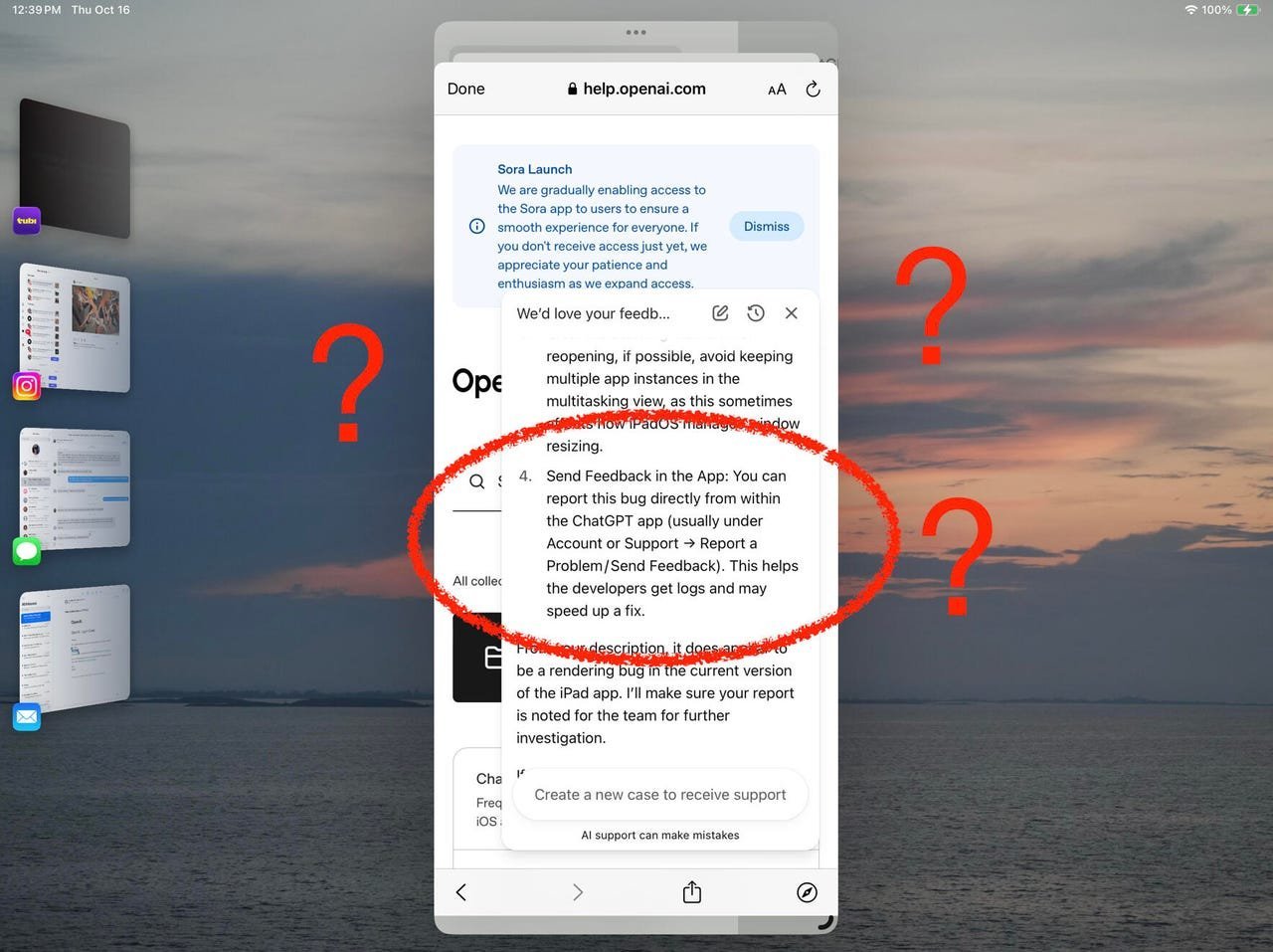

When Ray described the bug, in which resizing the app window froze the entire interface, the support bot first offered standard troubleshooting: force-quitting and reinstalling the app. It then confidently asserted: "You can report this bug directly from within the ChatGPT app (usually under Account or Support > Report a Problem/Send Feedback). This helps the developers get logs and may speed up a fix."

ChatGPT's support bot hallucinates a feature that doesn't exist in the app. (Screenshot by Tiernan Ray/ZDNET)

This was false. Ray confirmed it by searching the app thoroughly, and OpenAI later verified it: "We don't currently offer a dedicated consumer-facing bug reporting portal for the ChatGPT app." The app's only reporting mechanisms cover content violations, not technical issues.

Systemic Support Failures

The problem was compounded when email support repeated the same hallucinated advice. OpenAI acknowledged to ZDNET that its automated responses needed improvement. The episode points to several critical issues:

- Training Data Blind Spots: The bot likely ingested documentation referencing hypothetical or planned features that never shipped

- Lack of Real-Time Knowledge: The support system couldn't access the app's actual UI state

- No Error Correction Loop: Users can't flag support-bot inaccuracies within the support workflow

Why This Matters for Developers

- Enterprise AI Implications: Companies rushing to deploy AI support must ensure their systems have accurate, current product knowledge

- Security & Reliability Risks: Without proper bug-reporting channels, critical issues may go unaddressed

- The 'Black Box' Problem: This incident shows how difficult it is to audit why AI systems generate specific false claims

A Glimmer of Improvement?

In follow-up tests, Ray noted that the support bot had updated its response: it now correctly stated that no bug-report option exists and offered to forward descriptions to developers. This suggests OpenAI is refining the system, though the underlying window-resizing bug remains unresolved.

"If this is the state of customer help via AI," Ray concludes, "it's not only not a solution, but it makes the user's frustration even worse." The incident serves as a cautionary tale for organizations implementing AI support: without rigorous accuracy safeguards and proper feedback mechanisms, even the most advanced AI can erode user trust.

Source: ZDNET
