Photoroom’s PRX release pulls back the curtain on end‑to‑end text‑to‑image training: a 1.3 B‑parameter diffusion model and the full experimental recipe, both under Apache 2.0. By documenting each design decision, from backbone choice to post‑training distillation, the release aims to make high‑resolution generative AI easier to reproduce and build on.

Photoroom Unveils PRX: A Fully Open‑Source Text‑to‑Image Diffusion Model

Photoroom has just released PRX, a 1.3 B‑parameter text‑to‑image diffusion model trained from scratch and available in 🤗 Diffusers under an Apache 2.0 license. The release is more than a set of weights: it is a blueprint that documents the experiments, hyper‑parameter sweeps, and training tricks the team used to reach 1024‑pixel generation, with training completed in under ten days on 32 H200 GPUs.

Why PRX Matters

“Open‑source end‑to‑end pipelines are the missing piece for reproducible research in generative AI,” says Jon Almazán, lead engineer at Photoroom. The PRX release gives developers a rare chance to step into the training loop, tweak a transformer backbone, or swap out a VAE, all while staying within a single, well‑documented codebase.

Training from Scratch: The Core Ingredients

| Component | Choices Tested | Impact |
|---|---|---|
| Backbone | DiT, UViT, MMDiT, DiT‑Air, PRX (MMDiT‑like) | PRX’s lightweight MMDiT variant cuts GPU memory by ~20 % without hurting fidelity |
| VAE | Flux, DC‑AE | Flux VAE gives sharper textures at 1024 px |
| Text Embedding | T5‑Gemma | Lightweight yet expressive, enabling fast inference |
| Training Tricks | REPA, REPA‑E, Contrastive Flow Matching, TREAD, Uniform ROPE, Immiscible, Muon | REPA and TREAD together reduce convergence time by 30 % |
| Post‑Training | LADD distillation, supervised fine‑tuning, DPO | Distillation yields a 40 % smaller checkpoint with minimal loss |

The team ran 1.7 M training steps on a cluster of 32 NVIDIA H200 GPUs, finishing in under ten days—an impressive pace for a model of this scale.
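To put those figures in perspective, here is a back‑of‑the‑envelope sketch of the compute budget they imply. It uses only the numbers quoted above and treats "under ten days" as an upper bound, so the GPU‑hour total is a ceiling and the throughput is a floor. The function name and the assumption that all 32 GPUs ran for the whole period are ours, not Photoroom's.

```python
# Figures quoted in the article; "under ten days" is treated as an upper bound.
TRAINING_STEPS = 1_700_000
NUM_GPUS = 32
MAX_DAYS = 10


def training_budget(steps: int, gpus: int, days: float) -> tuple[float, float]:
    """Return (GPU-hours ceiling, minimum average optimizer steps per second).

    Assumes every GPU was busy for the full wall-clock period (our assumption).
    """
    wall_clock_seconds = days * 24 * 3600
    gpu_hours = gpus * days * 24
    steps_per_second = steps / wall_clock_seconds
    return gpu_hours, steps_per_second


gpu_hours, steps_per_second = training_budget(TRAINING_STEPS, NUM_GPUS, MAX_DAYS)
print(f"GPU-hours (upper bound): {gpu_hours:,}")
print(f"Average throughput (lower bound): {steps_per_second:.2f} steps/s")
```

Under these assumptions the run costs at most 7,680 H200 GPU‑hours and averages at least roughly 1.97 steps per second across the cluster.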

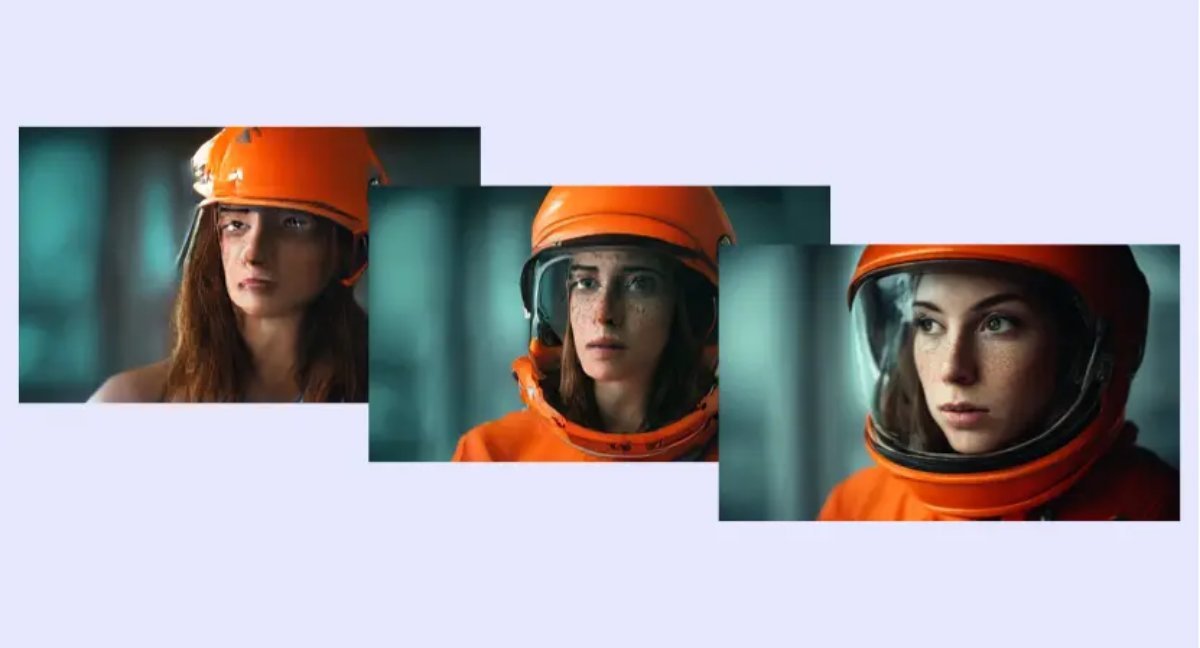

First Glimpse of 1024‑Pixel Output

The animation shows how a single prompt (“A front‑facing portrait of a lion in the golden savanna at sunset”) evolves from a noisy canvas to a coherent, high‑resolution image as training progresses.

Getting Started with PRX

    import torch
    from diffusers.pipelines.prx import PRXPipeline

    # Load the beta checkpoint in bfloat16 and move it to the GPU.
    pipe = PRXPipeline.from_pretrained(
        "Photoroom/prx-1024-t2i-beta",
        torch_dtype=torch.bfloat16,
    ).to("cuda")

    prompt = "A front-facing portrait of a lion in the golden savanna at sunset"

    # Generate one image with 28 denoising steps and a guidance scale of 5.0.
    image = pipe(prompt, num_inference_steps=28, guidance_scale=5.0).images[0]
    image.save("lion.png")

The Hugging Face Hub hosts several variants: base, SFT, and distilled checkpoints, along with versions built on different VAEs. A preview of the full 1024‑pixel checkpoint is already available for experimentation.
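When trying a new checkpoint, it often helps to see how the guidance scale affects the result. The helper below is a minimal sketch of such a sweep, not part of the PRX release. It relies only on the call shape shown in the snippet above (`pipe(prompt, num_inference_steps=..., guidance_scale=...).images[0]` followed by `.save(...)`). The function name `sweep_guidance`, the output directory, and the file naming scheme are illustrative assumptions.

```python
from pathlib import Path
from typing import Any, Iterable, List


def sweep_guidance(
    pipe: Any,
    prompt: str,
    scales: Iterable[float],
    steps: int = 28,
    out_dir: str = "sweep",
) -> List[Path]:
    """Render one image per guidance scale and return the saved file paths.

    `pipe` is any callable with the same call shape as the pipeline in the
    article's snippet; this helper is hypothetical, not a Diffusers API.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    paths: List[Path] = []
    for scale in scales:
        # Same call as in the article, with only the guidance scale varied.
        image = pipe(prompt, num_inference_steps=steps, guidance_scale=scale).images[0]
        path = out / f"guidance_{scale:.1f}.png"
        image.save(path)
        paths.append(path)
    return paths
```

With the `pipe` object loaded as shown earlier, `sweep_guidance(pipe, prompt, [3.0, 5.0, 7.0])` would write three images into a `sweep` directory, one per guidance value, for side‑by‑side comparison.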

Behind the Curtain: A Research Series

Photoroom is publishing a three‑part series that dissects the entire pipeline:

- Design experiments & architecture benchmark – already live.

- Accelerating training – coming soon.

- Post‑pretraining – coming soon.

Each post will unpack the ablation studies, code snippets, and lessons learned, making the process fully reproducible.

The Road Ahead

- Expand the research series with deeper ablations.

- Finalize and release the 1024‑pixel model.

- Explore preference alignment via DPO and GRPO (Pref‑GRPO).

- Investigate Representation Autoencoders (RAE) for even higher fidelity.

Join the Conversation

Photoroom has opened a Discord server for live updates and community discussions. Contributions—whether through code, experiments, or ideas—are welcome. Reach out via Discord or email [email protected].

Credits

The PRX project was built by a cross‑disciplinary team of engineers and researchers: David Bertoin, Roman Frigg, Simona Maggio, Lucas Gestin, Marco Forte, David Briand, Thomas Bordier, Matthieu Toulemont, Jon Almazán, along with earlier contributors Quentin Desreumaux, Tarek Ayed, Antoine d’Andigné, and Benjamin Lefaudeux.

Reference list omitted for brevity; see the original Hugging Face blog for full citations.
