Discover battle-tested strategies for effective agentic development, from context engineering to feedback loops, distilled from real-world application building. Learn why frustrating agent behaviors often point to system design flaws rather than model limitations.

As AI agents transition from research prototypes to production systems, developers face a critical knowledge gap in reliable implementation patterns. Drawing from extensive work on app.build, engineer Herrington Darkholme shares six empirical principles that address common pain points in agentic development—moving beyond prompt hacking to holistic system design.

1. System Prompts: Clarity Over Cleverness

"Modern LLMs need direct detailed context, no tricks—just clarity."

Early prompt engineering resembled "shaman rituals" with psychological tricks, but Darkholme advocates for straightforward, detailed instructions aligned with model providers' best practices (Anthropic, Google). The key insight: Ambiguity causes more failures than insufficient model capability. For production systems:

- Use LLMs to bootstrap initial prompts (e.g., Deep Research techniques)

- Keep large system prompts static so provider-side prompt caching can reuse them across calls

- Keep user-specific context dynamic and minimal
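The sketch below shows one way to split a prompt into a static part and a dynamic part. It assumes a chat-style API that takes a list of role/content messages, and a provider that caches identical prompt prefixes. The names build_messages and STATIC_SYSTEM_PROMPT are hypothetical and do not come from any SDK.

```python
# Illustrative only: the message format mirrors common chat APIs, but no real
# client library is called here, and the prompt text is a placeholder.
STATIC_SYSTEM_PROMPT = (
    "You are a coding agent working on a web application.\n"
    "Follow the repository's conventions and run the tests before reporting success.\n"
    "(Long, detailed, unchanging instructions would continue here.)\n"
)


def build_messages(user_request: str, user_context: dict[str, str]) -> list[dict[str, str]]:
    """Return a chat-style message list with a static prefix and a small dynamic tail."""
    # Sort keys so the same context always renders to the same text.
    dynamic = "\n".join(f"{key}: {value}" for key, value in sorted(user_context.items()))
    return [
        # Byte-identical on every call, so a prefix cache can reuse it.
        {"role": "system", "content": STATIC_SYSTEM_PROMPT},
        # The only part that changes per user or per request.
        {"role": "user", "content": f"Context:\n{dynamic}\n\nRequest:\n{user_request}"},
    ]


first = build_messages("Add a login page", {"framework": "React"})
second = build_messages("Fix the failing test", {"framework": "Vue"})
assert first[0] == second[0]  # shared, cacheable prefix
```

All the detail lives in the prefix, and that prefix never changes between calls. Only the short user message varies, so a cache hit covers most of the prompt.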

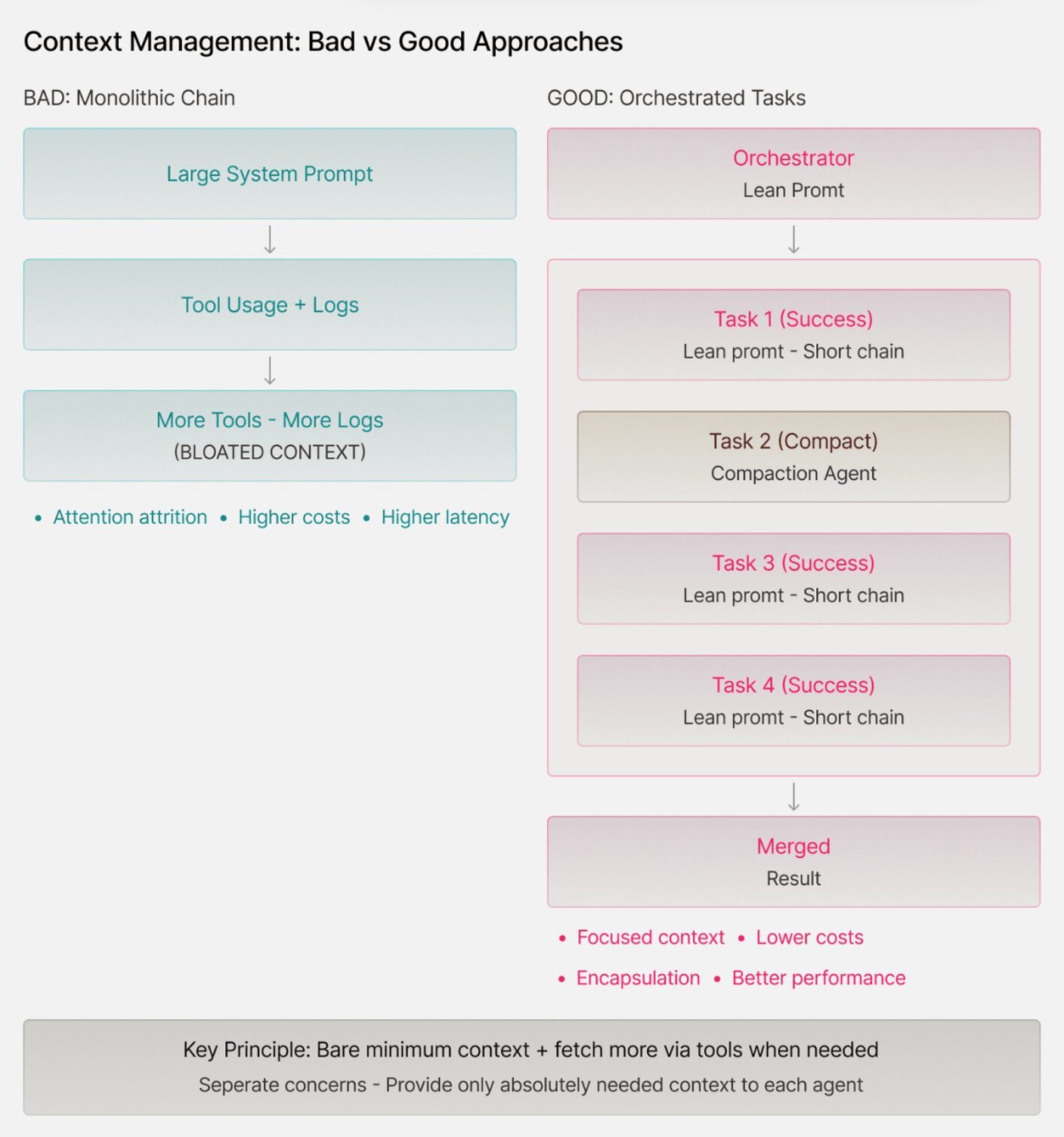

2. Context Management: Less Is More

Context bloat remains a silent killer—causing hallucinations, attention attrition, and soaring costs. The solution? Strategic minimalism:

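```python
# Hypothetical tool registry: provide_tool is illustrative, not a real library API
TOOLS: dict[str, list[str]] = {}

def provide_tool(name, params):
    # Register a tool the agent can call to fetch more context on demand
    TOOLS[name] = params

def context_strategy():
    # Provide only essential context upfront
    essential_files = ['core.py', 'config.yaml']
    # Implement tools for on-demand retrieval instead of preloading everything
    provide_tool('read_file', params=['filename'])
    return essential_files
```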

Treat context like OOP encapsulation: Each agent component gets only what it absolutely needs. Automate context compaction for logs/artifacts to prevent bloat.
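A very simple form of compaction keeps only the head and tail of a long log. Those are usually where the command and the final error appear. The sketch below assumes that heuristic. The name compact_log and its defaults are hypothetical, and summarizing the middle with an LLM is a common alternative.

```python
def compact_log(log: str, head: int = 5, tail: int = 5) -> str:
    """Keep the first `head` and last `tail` lines of a log and elide the middle."""
    lines = log.splitlines()
    if len(lines) <= head + tail:
        return log  # already small enough to pass through unchanged
    omitted = len(lines) - head - tail
    marker = f"[... {omitted} lines omitted ...]"
    # Slice from len(lines) - tail so that tail=0 correctly keeps nothing.
    return "\n".join(lines[:head] + [marker] + lines[len(lines) - tail:])
```

The marker line tells the agent that content was dropped. If the agent needs the missing part, it can fetch the full log through a retrieval tool such as read_file.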

3. Tool Design: Rigorous Simplicity

Agent tools demand stricter design than human-facing APIs. Key characteristics:

- Idempotency: Critical for state management, since a retried call with the same input must leave the same state

- Limited parameters: 1-3 strictly typed inputs

- Minimal surface area: 5-10 multifunctional tools max
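Here is a minimal sketch of a tool that follows these rules. It assumes a local workspace directory and has three properties:

- Two strictly typed parameters.
- Overwrite semantics, so repeated calls are idempotent.
- A path check, so the agent cannot write outside the workspace.

The names write_file and WriteFileArgs are hypothetical and are not app.build's actual API. Path.is_relative_to requires Python 3.9 or later.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class WriteFileArgs:
    """Exactly two required, typed parameters; nothing optional to misuse."""
    path: str
    content: str

    def __post_init__(self) -> None:
        # Dataclasses don't check types at runtime, so enforce them here.
        if not isinstance(self.path, str) or not isinstance(self.content, str):
            raise TypeError("path and content must both be strings")


def write_file(args: WriteFileArgs, root: Path) -> str:
    """Overwrite a file under root. Same arguments twice -> same final state."""
    workspace = root.resolve()
    target = (workspace / args.path).resolve()
    # Close the obvious loophole: paths like "../x" that escape the workspace.
    if not target.is_relative_to(workspace):
        raise ValueError(f"path escapes workspace: {args.path}")
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(args.content)  # overwrite, never append
    return f"wrote {len(args.content)} characters to {args.path}"
```

Overwriting instead of appending is the design choice that makes retries safe. If the agent repeats a call after a timeout, the file ends up identical either way.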

"Design tools for a smart but distractible junior developer—no loopholes allowed."

Examples like edit_file (app.build) and execute (opencode) demonstrate focused functionality. Alternatively, consider DSL-based approaches (smolagents) for complex workflows.

4. Feedback Loops: The Actor-Critic Framework

Effective agents combine LLM creativity with deterministic validation:

| Component | Role | Example |
|---|---|---|
| Actor | Generative freedom | Code creation, file edits |
| Critic | Strict validation | Compilation, tests, linters |

This mirrors reinforcement learning's actor-critic paradigm. Domain-specific invariants are non-negotiable:

- Software: Compilable code passing tests

- Travel: Valid flight connections

- Finance: Double-entry bookkeeping compliance
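For the finance example, the critic can be a plain function that returns a list of violations rather than a yes/no answer. That way the actor gets feedback it can act on. This minimal sketch makes simplifying assumptions: each line is an account with a debit amount and a credit amount, and only two rules are checked. Real bookkeeping validation goes much further.

```python
from decimal import Decimal


def check_double_entry(lines: list[tuple[str, Decimal, Decimal]]) -> list[str]:
    """Return a list of invariant violations; an empty list means the entry passes.

    Each line is (account, debit, credit).
    """
    problems = []
    for account, debit, credit in lines:
        if debit < 0 or credit < 0:
            problems.append(f"{account}: negative amount")
    total_debit = sum((debit for _, debit, _ in lines), Decimal("0"))
    total_credit = sum((credit for _, _, credit in lines), Decimal("0"))
    # Core double-entry invariant: debits and credits must balance.
    if total_debit != total_credit:
        problems.append(f"unbalanced: debits {total_debit} != credits {total_credit}")
    return problems
```

Decimal is used instead of float so the balance comparison is exact for currency amounts.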

Guardrails should enable Monte Carlo-style branching: sample several candidate paths, prune the dead ends that fail validation, and keep expanding the promising ones.
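The branching idea can be sketched as a sample-and-prune loop:

- The actor proposes several continuations of each surviving path.
- The critic discards the ones that fail.
- Only a few survivors are expanded in the next round.

This is a simplified illustration, not full Monte Carlo tree search. The toy actor appends random snippets in place of an LLM. The critic only checks that the Python compiles, standing in for real builds and tests. All names here are hypothetical.

```python
import random
from typing import Callable


def compiles(source: str) -> bool:
    """Deterministic critic: accept only code that Python can compile."""
    try:
        compile(source, "<candidate>", "exec")
        return True
    except SyntaxError:
        return False


def make_toy_actor(rng: random.Random) -> Callable[[str], str]:
    """Stand-in for an LLM: appends a random snippet, some of them invalid."""
    snippets = ["x = 1\n", "y = x + 1\n", "def f(:\n", "print(y)\n", "return = 3\n"]
    return lambda code: code + rng.choice(snippets)


def sample_and_prune(
    actor: Callable[[str], str],
    critic: Callable[[str], bool],
    seed: str,
    width: int = 4,
    keep: int = 2,
    rounds: int = 3,
) -> list[str]:
    """Grow candidate paths round by round, keeping only ones the critic accepts."""
    frontier = [seed]
    for _ in range(rounds):
        # Branch: sample several candidate continuations of every surviving path.
        children = [actor(path) for path in frontier for _ in range(width)]
        # Prune: drop every candidate that fails validation.
        survivors = [child for child in children if critic(child)]
        if not survivors:
            break  # all branches were dead ends; keep the last valid frontier
        frontier = survivors[:keep]
    return frontier


best = sample_and_prune(make_toy_actor(random.Random(0)), compiles, seed="")
```

The two limits control the cost of the search: width sets how many samples are drawn per path, and keep caps how many survivors move on to the next round.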

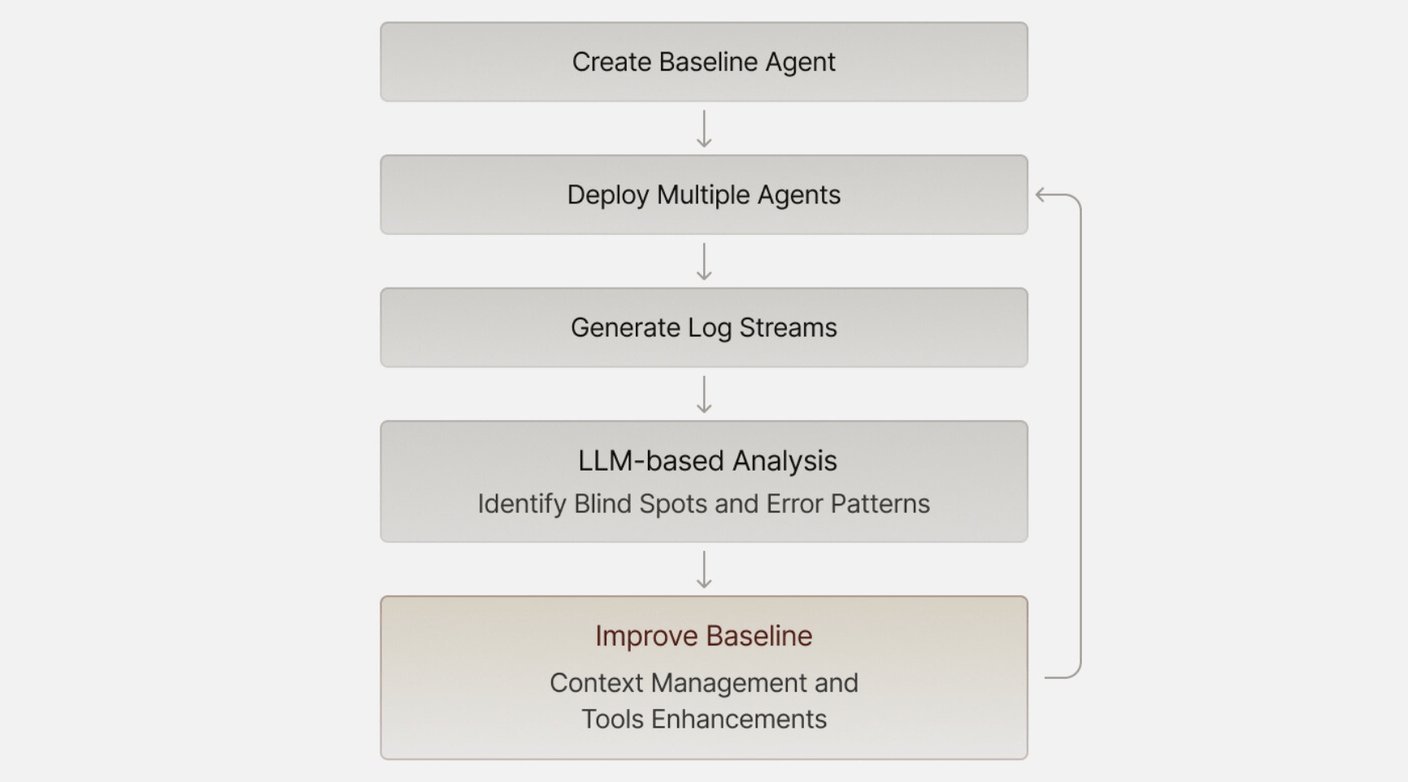

5. LLM-Powered Error Analysis

With agents generating large volumes of logs, manual analysis becomes impossible. Implement a meta-feedback loop:

- Run baseline agents

- Feed trajectories to a long-context LLM (e.g., Gemini with its 1M-token context window)

- Identify systemic weaknesses

- Iterate
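The first two steps of this loop might look like the sketch below, under three assumptions:

- call_llm is a placeholder for whichever long-context model client you use.
- The instruction text is illustrative.
- The character budget is a rough stand-in for a token limit. It relies on the common heuristic of about four characters per token for English text, which is an estimate, not a rule.

```python
from typing import Callable

ANALYSIS_INSTRUCTIONS = (
    "Below are transcripts of agent runs. Identify recurring failure patterns, "
    "and for each, say whether it points to missing context, a missing or "
    "poorly designed tool, or contradictory instructions."
)


def build_analysis_prompt(trajectories: list[str], max_chars: int = 3_000_000) -> str:
    """Pack as many whole trajectories as fit into a rough character budget.

    3M characters is roughly 750k tokens at ~4 chars/token, under a 1M-token window.
    """
    parts = [ANALYSIS_INSTRUCTIONS]
    used = len(ANALYSIS_INSTRUCTIONS)
    for i, trajectory in enumerate(trajectories):
        block = f"\n\n=== Run {i} ===\n{trajectory}"
        if used + len(block) > max_chars:
            break  # stop rather than cut a trajectory in half
        parts.append(block)
        used += len(block)
    return "".join(parts)


def analyze(trajectories: list[str], call_llm: Callable[[str], str]) -> str:
    """call_llm is a hypothetical placeholder: any function from prompt to reply."""
    return call_llm(build_analysis_prompt(trajectories))
```

Keeping each trajectory whole matters because a run cut off halfway can hide the very failure the analysis is meant to find.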

This regularly surfaces context gaps or tooling deficiencies that were invisible during initial development.

6. Frustration as a Debugging Compass

When agents behave inexplicably, first examine the system—not the model. Darkholme recounts:

"I cursed an agent for using mock data... until realizing I forgot to provide API keys."

Recurring failure patterns often indicate:

- Missing tools

- Insufficient context access

- Contradictory instructions
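Darkholme's API-key story points to a cheap safeguard: check the environment before the agent runs. Missing configuration then shows up as an explicit error instead of as mock data. The sketch covers the first two causes above. The function preflight and the variable and tool names in the example are hypothetical. Contradictory instructions still need a human or LLM review of the prompt itself.

```python
import os
from collections.abc import Mapping


def preflight(
    env_vars: list[str],
    needed_tools: list[str],
    registered_tools: Mapping[str, object],
    environ: Mapping[str, str] = os.environ,
) -> list[str]:
    """Return human-readable problems; an empty list means the agent can start."""
    problems = [f"missing environment variable: {name}" for name in env_vars if not environ.get(name)]
    problems += [f"missing tool: {name}" for name in needed_tools if name not in registered_tools]
    return problems


issues = preflight(["PAYMENTS_API_KEY"], ["read_file"], {"read_file": print}, environ={})
```

Passing environ explicitly keeps the check easy to test. In production, the default reads the real process environment.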

Treat these moments as diagnostic opportunities rather than model failures.

The Path to Production Resilience

Building reliable agents isn't about finding silver bullets—it's rigorous software engineering. Prioritize clear interfaces, context discipline, and validation loops. When failures occur (and they will), leverage meta-agents to transform errors into improvement vectors. As Darkholme concludes:

"The goal isn't perfect agents—it's reliable, recoverable ones that fail gracefully."

Source: Six Principles for Production AI Agents by Herrington Darkholme