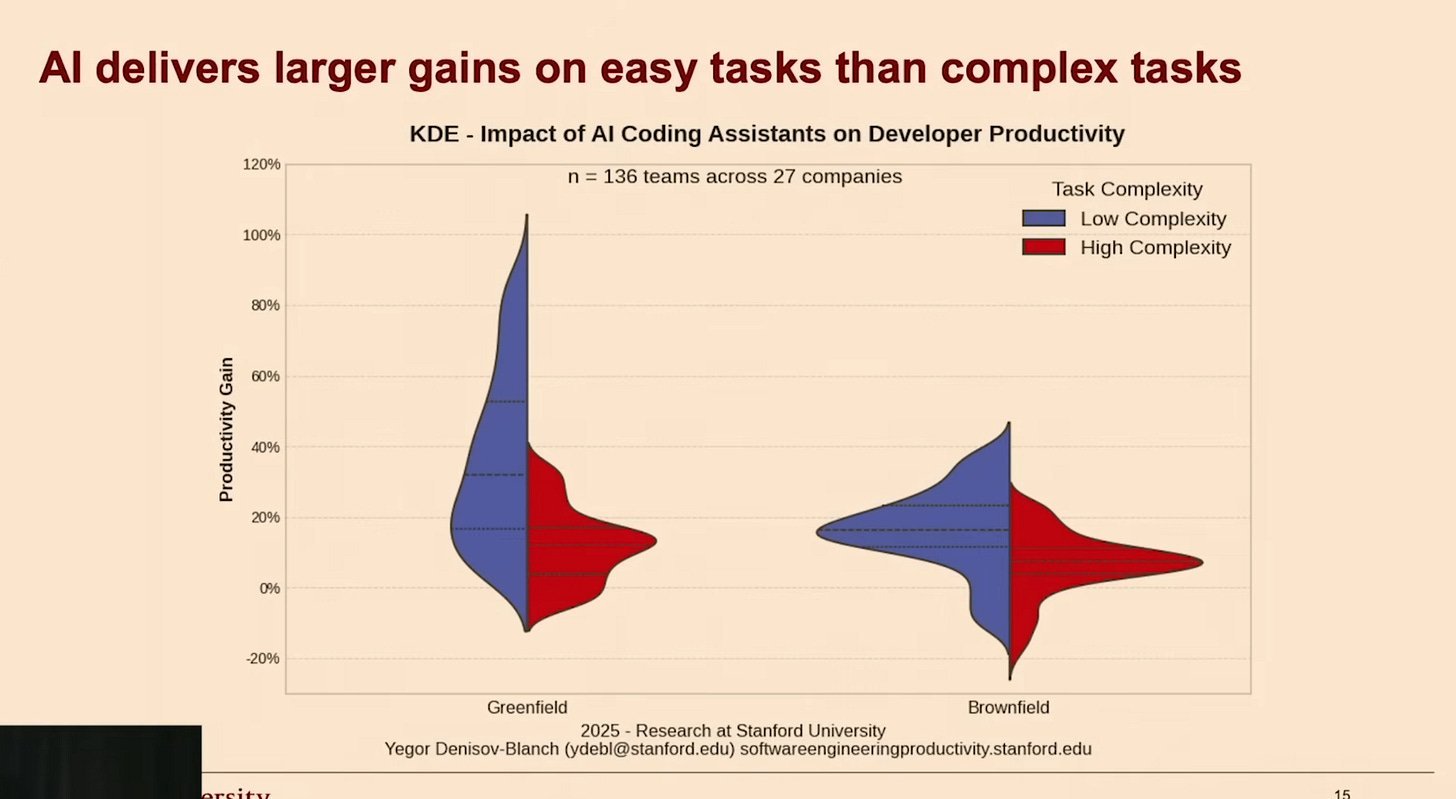

A Stanford study of 100,000 developers finds that AI can boost efficiency on simple tasks by up to 60% but yields only about 5% on complex work, while increasing the risk of rework. Real-world SaaS experience shows how this feeds the "just ship it" fallacy: faster iteration does nothing to relieve validation bottlenecks or system-integration challenges. Tech leaders must balance AI adoption with strategic focus to avoid commoditization and wasted effort.

In the rush to harness artificial intelligence for supercharged productivity, a dangerous narrative has taken hold: that AI can replace human ingenuity and accelerate development to mythical speeds. But a Stanford University study of 100,000 developers, presented in a video, shows that the reality is far more nuanced: for complex software systems, the gains are startlingly modest. This disconnect isn't just about code; it's a cautionary tale for any tech leader tempted to equate faster shipping with better outcomes.

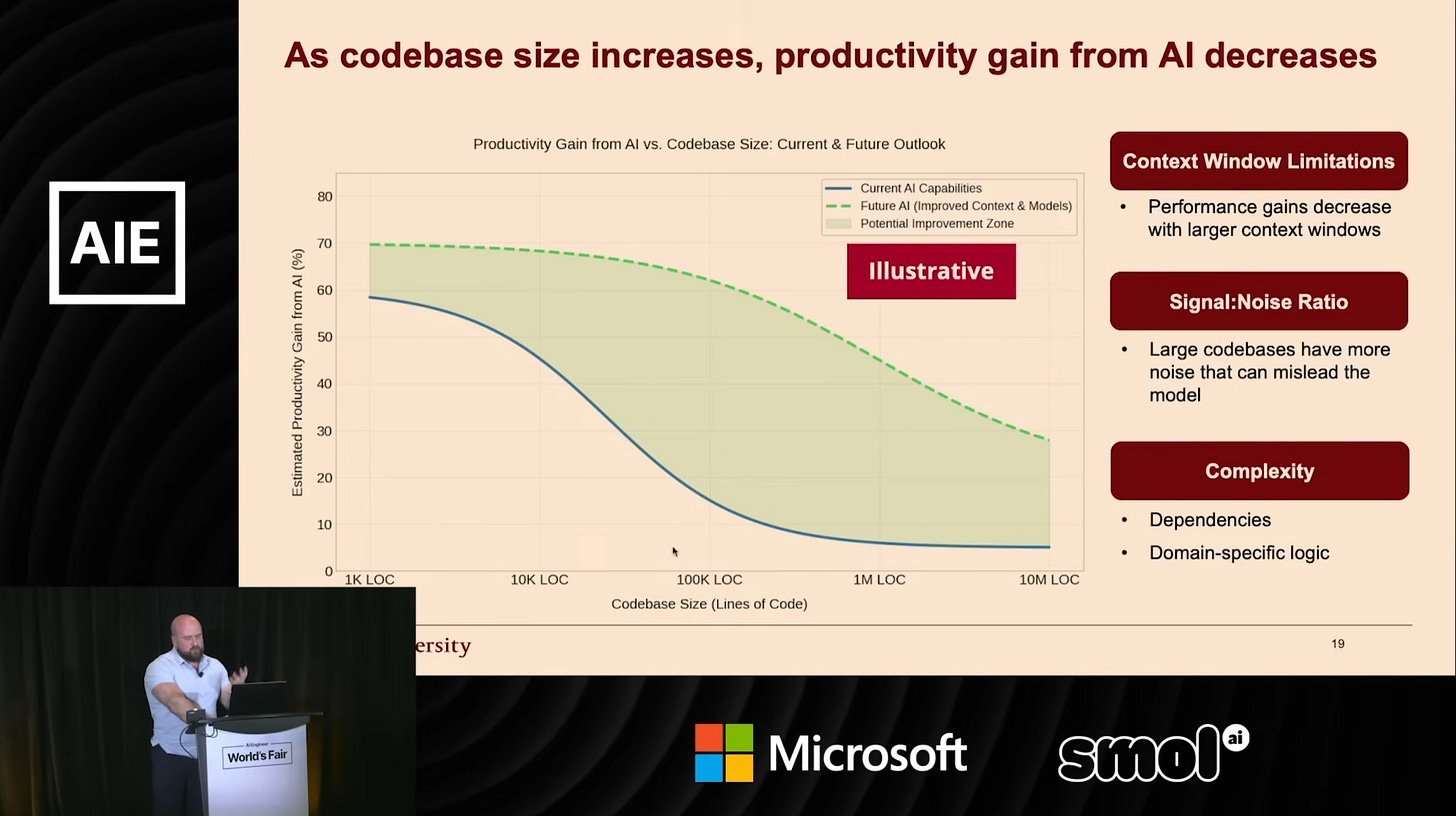

The Stanford research, titled "Does AI Actually Boost Developer Productivity?", provides hard data on AI's impact. For simple tasks, such as boilerplate code or minor fixes, AI tools can improve efficiency by up to 60%. As complexity rises, for example when integrating legacy systems or architecting novel solutions, the benefits plummet to just 5%. As the study notes: "As codebase size increases, productivity gain from AI decreases." The data show most real-world projects clustering around a 20% improvement.

More alarmingly, the study found that AI adoption often increases rework. Developers ship more code, but without the contextual understanding needed to ensure its quality, which leads to integration nightmares and technical debt. As Leah Tharin, a product veteran who worked at the hypergrowth SaaS company Smallpdf, observes in her article: "You're delivering more and shipping more. Not all of it is useful... it usually requires rework that AI can’t solve by itself."

Tharin's time at Smallpdf exemplifies this. The company, which grew to 50 million monthly users with a lean team, mastered the "spaghetti effect": throwing ideas at the wall rapidly to see what sticks. Yet despite massive traffic, they hit invisible walls: "The bottleneck was waiting for statistical significance for experiments," Tharin writes. Simple changes were validated quickly, but complex ones took weeks or months, underscoring that AI can't speed up validation. Her CEO, Dennis Just, warned early on: "We’re really good at throwing spaghetti at the wall... but we need to grow out from that; it’s become unmanageable."

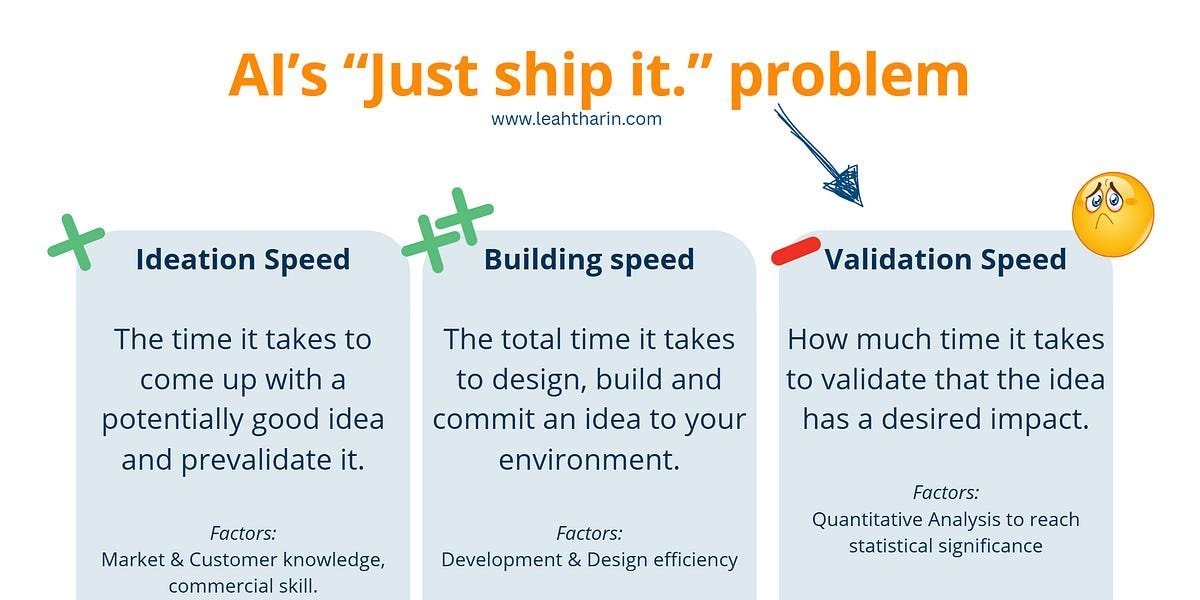

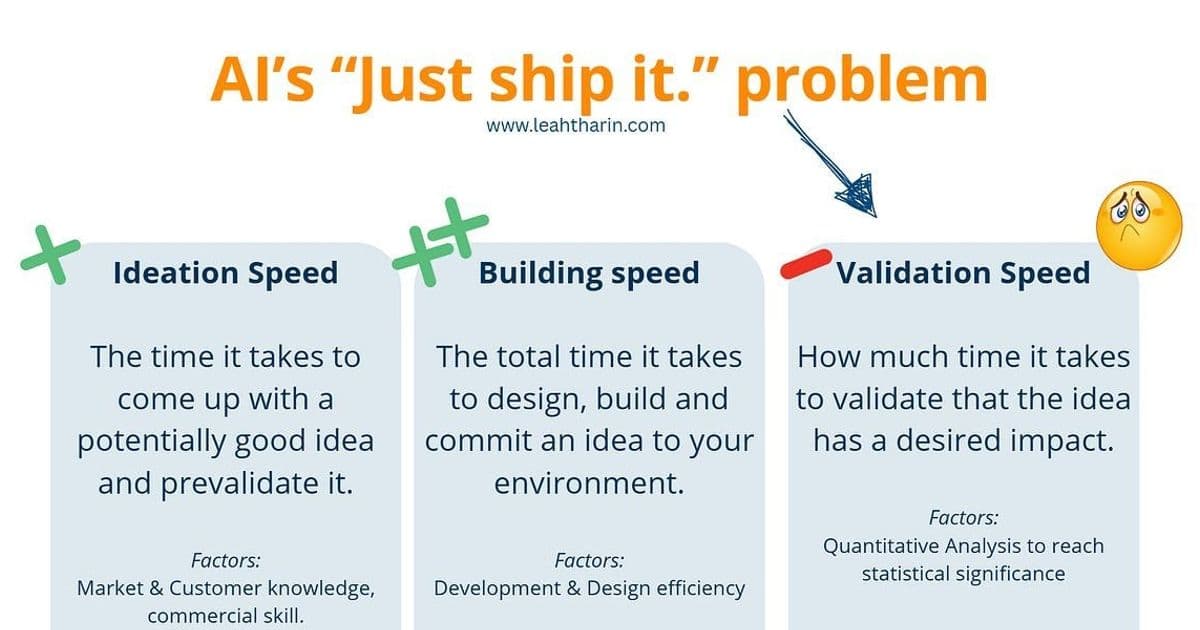

This exposes the core flaw in the "just ship it" mentality. As Tharin argues, shipping isn't just about development; it's a three-part cycle:

- Ideation: Generating valuable ideas (AI offers little help here).

- Development: Building the solution (where AI shines for simple tasks).

- Validation: Testing impact with users (constrained by data scale, not AI speed).
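The arithmetic behind this argument is essentially Amdahl's law applied to a workflow: speeding up one stage cannot shrink the stages it doesn't touch. The minimal Python sketch below assumes that an AI gain applies only to development, and it treats a "60% gain" as 60% more output per unit time (so development takes 1/1.6 as long). The `Cycle` class, the helper names, and every duration are hypothetical, chosen for illustration rather than taken from the study or from Smallpdf.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cycle:
    """One ship cycle, with each stage's duration in days (hypothetical values)."""
    ideation: float
    development: float
    validation: float

    def total(self) -> float:
        return self.ideation + self.development + self.validation


def with_ai(cycle: Cycle, dev_gain: float) -> Cycle:
    """Return the cycle after an AI gain that speeds up development only.

    Assumption: dev_gain is a throughput gain (0.60 means 60% more output
    per unit time), so development time is divided by (1 + dev_gain).
    Ideation and validation are left unchanged.
    """
    return Cycle(cycle.ideation, cycle.development / (1 + dev_gain), cycle.validation)


def end_to_end_speedup(cycle: Cycle, dev_gain: float) -> float:
    """Ratio of the original cycle time to the AI-assisted cycle time."""
    return cycle.total() / with_ai(cycle, dev_gain).total()


def speedup_ceiling(cycle: Cycle) -> float:
    """Best case: development takes zero time (an unbounded dev_gain)."""
    return cycle.total() / (cycle.ideation + cycle.validation)


# Hypothetical scenarios: a quick copy tweak vs. a complex change for key customers.
simple = Cycle(ideation=0.5, development=1.0, validation=0.25)
complex_change = Cycle(ideation=5.0, development=10.0, validation=30.0)

print(f"simple, 60% dev gain: {end_to_end_speedup(simple, 0.60):.2f}x")          # 1.27x
print(f"complex, 5% dev gain: {end_to_end_speedup(complex_change, 0.05):.2f}x")  # 1.01x
print(f"complex, ceiling:     {speedup_ceiling(complex_change):.2f}x")           # 1.29x
```

Even in the best case, where development becomes instantaneous, the hypothetical complex change gets less than 1.3x faster end to end, because 35 of its 45 days sit in ideation and validation.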

"If you changed a copy of our main website, experiments were significant within hours. A complex change for high-value customers? Weeks, sometimes months," Tharin recalls, highlighting how faster AI-assisted development only piles more work onto the validation bottleneck without relieving it.
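Why validation time is set by traffic and effect size rather than by coding speed can be sketched with the standard sample-size formula for an A/B test comparing two conversion rates (a two-sided, two-proportion z-test using the normal approximation). This is a minimal sketch, not Smallpdf's method: the function names are hypothetical, α = 0.05 and 80% power are conventional defaults, and the baseline rates, lifts, and daily traffic figures are illustrative assumptions rather than real data.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in each variant to detect the lift with a two-sided
    two-proportion z-test (normal approximation):

        n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_to_significance(baseline: float, relative_lift: float,
                         daily_users_per_arm: float) -> float:
    """Days of traffic needed to collect the required sample in each arm."""
    return sample_size_per_arm(baseline, relative_lift) / daily_users_per_arm


# Hypothetical inputs, not Smallpdf data.
# Copy change on a high-traffic page: 5% baseline, 10% relative lift, 500k users/arm/day.
copy_days = days_to_significance(0.05, 0.10, daily_users_per_arm=500_000)
# Complex change for high-value customers: 2% baseline, 5% lift, 2k users/arm/day.
complex_days = days_to_significance(0.02, 0.05, daily_users_per_arm=2_000)

print(f"copy change:    {copy_days * 24:.1f} hours")
print(f"complex change: {complex_days:.0f} days")
```

Under these assumptions the copy test collects its sample in under two hours, while the complex test needs roughly five months of traffic. A faster coding assistant changes neither number; only more traffic, larger effects, or a different validation strategy would.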

The implications are stark. Companies that cut staff on the strength of AI hype risk drowning in rework or sliding into commoditization; after all, 75% of shipped ideas fail to drive meaningful impact. As codebases grow, AI's shrinking marginal gains could even stall innovation. Yet this isn’t a call to abandon AI; it’s a plea for wisdom. Integrate tools to handle mundane work, but double down on customer insights and strategic validation. In the end, building what matters will always trump building faster.

Source: Analysis inspired by "AI's 'Just Ship it.' problem" by Leah Tharin (leahtharin.com) and findings from Stanford's developer productivity study.
