Discover how the seemingly simple task of selecting books to maximize foreign vocabulary acquisition reveals an NP-hard optimization problem. We explore why finding the optimal k books requires exponential time, how submodular approximation algorithms provide practical solutions, and the Python tools enabling efficient implementations.

Imagine trying to learn Spanish efficiently by reading books that maximize exposure to common vocabulary. While selecting a single high-impact book is computationally straightforward, choosing the optimal set of books for comprehensive coverage reveals a profound computational challenge—one that lands squarely in the realm of NP-hard problems.

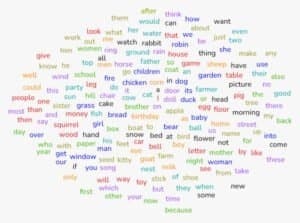

{{IMAGE:2}} Visualizing word frequency distributions across texts (Credit: John D. Cook)

The Vocabulary Optimization Problem

At its core, the challenge involves selecting k books from a corpus of m titles (each averaging n words) to maximize "vocabulary impact." This metric weights each unique word by its frequency in the language—prioritizing common terms over rare ones. Filtering stop words (like "the" or "and") simplifies the model, but the combinatorial nature of multi-book selection quickly escalates complexity.

For k=1, solutions are efficient (O(mn)): hash word frequencies across all books, then compute each book's weighted sum. But for k≥2? Optimal selection becomes NP-hard. The search space explodes combinatorially—finding the absolute best set requires evaluating all possible combinations, which grows exponentially with k.

Submodularity to the Rescue

Fortunately, vocabulary impact exhibits submodularity—a diminishing returns property where adding a book to a larger set yields incrementally less value. This allows approximation algorithms with rigorous guarantees:

# Greedy approximation in Python using submodlib

from submodlib import FacilityLocationFunction

# (Pseudocode: Define book-word matrix)

data = [[freq of word j in book i] for i in books]

obj = FacilityLocationFunction(n=len(books), data=data, mode="dense")

greedy_result = obj.maximize(k=k, optimizer='NaiveGreedy')

The greedy approach, which iteratively adds the highest-impact remaining book, achieves at least (1-1/e)≈63% of the optimal score [6]. Libraries like submodlib implement these methods efficiently.

Beyond Greedy: Trading Compute for Accuracy

While greedy suffices for many cases, enhanced strategies exist:

- Blocking: Evaluate pairs/triples at each step (O(m²) or O(m³))

- Look-ahead: Temporarily add multiple books before finalizing choices

- Pruning: Discard books offering negligible unique vocabulary

As researcher Rishabh Iyer notes, these leverage submodularity's curvature properties to tighten bounds without breaking polynomial time.

Why Developers Should Care

This isn't just about language learning. Similar optimization problems appear in:

- Recommender systems (diverse content selection)

- Sensor placement (maximizing coverage)

- Feature selection for ML models

- Cybersecurity log analysis (identifying critical event subsets)

The tension between optimality and computational feasibility—solved via submodular approximations—exemplifies how theoretical computer science shapes practical tools. As John D. Cook observes, sometimes "good enough" algorithms unlock solutions where perfection is provably unattainable.

Source: Adapted from John D. Cook's analysis of vocabulary optimization and submodular maximization.

Comments

Please log in or register to join the discussion