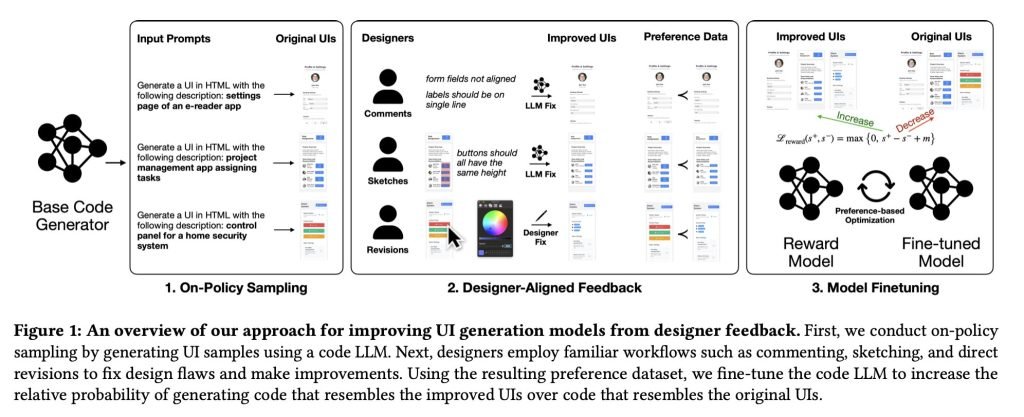

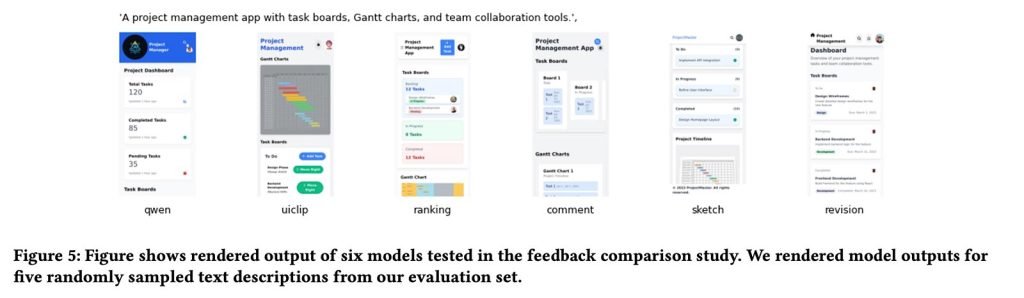

Apple's latest research paper demonstrates how professional designer feedback can train AI models to generate higher-quality user interfaces, outperforming larger models with minimal training data.

Apple researchers have published a study showing how professional designer feedback can improve AI-generated user interfaces. The paper, titled "Improving User Interface Generation Models from Designer Feedback", builds on Apple's previous work with UICoder and addresses core challenges in assessing design quality.

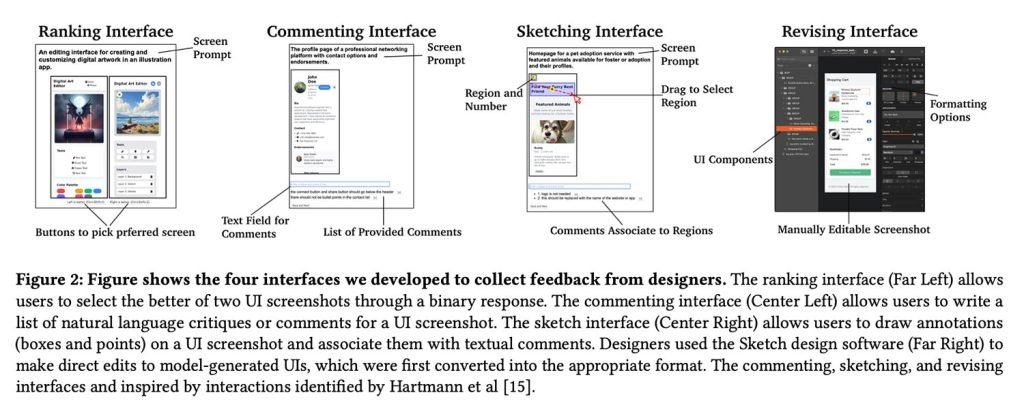

The research team developed a novel training framework that captures designer feedback through three primary methods:

- Text comments explaining design improvements

- Hand-drawn sketches illustrating layout changes

- Direct edits to the generated interfaces

Twenty-one professional designers with 2 to 30 years of experience participated in the study, producing 1,460 annotations that were converted into training data. The system uses these designer-modified interfaces to train a reward model that evaluates:

- Visual hierarchy

- Component spacing

- Information density

- Accessibility considerations
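One common way to train a reward model from designer-modified interfaces is to treat each modification as a preference pair, with the designer's version preferred over the model's original. The sketch below assumes that framing and a Bradley-Terry style pairwise loss; the exact objective used in the paper is not stated here, and the `PreferencePair` type, its field names, and the scores are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    # Hypothetical record: both fields are identifiers for rendered interfaces.
    preferred: str  # interface after the designer's sketch or edit
    rejected: str   # original model-generated interface


def pairwise_loss(score_preferred: float, score_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(score_preferred - score_rejected))."""
    margin = score_preferred - score_rejected
    # Branch on the sign so math.exp never receives a large positive argument.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


pair = PreferencePair(preferred="ui_042_edited", rejected="ui_042_original")

# Illustrative scores only; a real reward model would compute them from screenshots.
loss_when_correct = pairwise_loss(2.0, 0.0)  # preferred UI scored higher
loss_when_wrong = pairwise_loss(0.0, 2.0)    # preferred UI scored lower
```

The loss is small when the reward model already ranks the designer's version higher and grows when it ranks the original higher, which is what pushes the model toward designer preferences during training.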

The implementation follows a four-step pipeline:

- Base model (Qwen2.5-Coder) generates initial UI code

- Automated rendering converts code to screenshots

- Reward model evaluates screenshot against design target

- Feedback loop updates generation preferences
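The four steps above can be sketched as a single function that generates candidates, renders them, scores the renders, and returns a best/worst pair for a later preference update. This is a minimal sketch under stated assumptions, not the paper's implementation: `preference_step` and the `toy_*` stand-ins are hypothetical, the prompt is used as a stand-in for the design target, and a real system would call a code model, a UI renderer, and a trained reward model instead.

```python
from typing import Callable, List, Tuple


def preference_step(
    prompt: str,
    generate: Callable[[str], List[str]],
    render: Callable[[str], bytes],
    score: Callable[[bytes, str], float],
) -> Tuple[str, str]:
    """Return (best_code, worst_code) among the candidates for one prompt."""
    candidates = generate(prompt)               # step 1: base model emits UI code
    if len(candidates) < 2:
        raise ValueError("need at least two candidates to form a preference pair")
    scored = [
        (score(render(code), prompt), code)     # steps 2 and 3: render, then score
        for code in candidates
    ]
    best = max(scored, key=lambda item: item[0])[1]
    worst = min(scored, key=lambda item: item[0])[1]
    return best, worst                          # step 4 would train on this pair


# Toy stand-ins so the sketch runs without a model or a renderer.
def toy_generate(prompt: str) -> List[str]:
    return [
        'Text("Hi")',
        'VStack { Text("Hi") }',
        'VStack { Text("Hi"); Button("OK") {} }',
    ]


def toy_render(code: str) -> bytes:
    return code.encode("utf-8")  # placeholder for a screenshot


def toy_score(screenshot: bytes, prompt: str) -> float:
    return float(len(screenshot))  # placeholder for a reward model


best, worst = preference_step("login screen", toy_generate, toy_render, toy_score)
```

Passing the generator, renderer, and scorer as callables keeps the loop independent of any particular model or rendering stack.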

The results showed particular promise with sketch-based feedback, where:

- Small models outperformed GPT-5 in UI generation

- Just 181 sketch annotations produced measurable improvements

- Researcher-designer alignment improved from 49% to 76%
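How the alignment figure was computed is not described here. Assuming it is a simple agreement rate, where two raters each pick the better of two interfaces and agreement is the share of comparisons on which they pick the same one, it could be calculated as follows. The function name and the labels are illustrative, not data from the study.

```python
from typing import Sequence


def agreement_rate(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Fraction of comparisons on which two raters chose the same option."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both raters must label the same comparisons")
    if not labels_a:
        raise ValueError("need at least one comparison")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


# Made-up choices for four pairwise comparisons ("A" or "B" is the preferred UI).
researcher = ["A", "B", "A", "A"]
designer = ["A", "B", "B", "A"]
rate = agreement_rate(researcher, designer)
```

With two options per comparison, chance-level agreement is about 50%, which puts the reported 49% starting point in context.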

Key technical insights from the paper include:

- Subjectivity challenges: Design preferences varied significantly between professionals

- Feedback quality: Concrete visual feedback (sketches/edits) proved more effective than text

- Model scaling: Smaller fine-tuned models outperformed larger base models

The full research paper provides detailed metrics on:

- Training dataset composition

- Reward model architecture

- Cross-model performance comparisons

- Real-world interface examples

This research could have implications for:

- Xcode development: Potential future integration with Interface Builder

- Cross-platform tools: Unified design systems for SwiftUI and UIKit

- Accessibility: Automated WCAG compliance checking

The work reflects Apple's focus on practical AI applications and real-world usability rather than pure model scale. These findings may influence future versions of Xcode and SwiftUI as Apple refines its AI-assisted development tools.
