A developer rebuilt Azure's foundational Replicated State Library in Rust using AI coding agents, writing roughly 100K lines in about four weeks while addressing critical performance gaps. The project shows how AI-generated code contracts and lightweight spec-driven development kept the pace high without sacrificing correctness. Throughput rose from 23K to 300K operations/sec, showcasing AI's potential for high-stakes systems programming.

When Microsoft engineer Zihao Huang set out to rebuild Azure's Replicated State Library (RSL)—the multi-Paxos consensus engine underpinning critical Azure services—he didn't start with a keyboard. Instead, he deployed an arsenal of AI coding agents: GitHub Copilot, Claude Code, Codex CLI, and others. The result? A Rust-based reimplementation matching RSL's capabilities while addressing modern hardware limitations—completed in roughly three months with 130K+ lines of Rust code.

Why Modernize Azure's Consensus Engine?

RSL has reliably powered Azure services for over a decade, but its age shows in three critical areas:

- No pipelining: Sequential voting stalls new requests, inflating latency

- No NVM support: Can't leverage non-volatile memory now common in Azure datacenters

- Limited hardware awareness: Lacks optimizations for RDMA networks

"Removing these limitations could unlock significantly lower latency and higher throughput—critical for modern cloud workloads and AI-driven services," Huang noted. His implementation specifically targeted these gaps while maintaining RSL's robustness.

The AI Development Workflow

Huang's process delivered startling productivity:

- 100K lines of Rust written in ~4 weeks

- Performance optimization from 23K ops/sec to 300K ops/sec in ~3 weeks

- 1,300+ tests covering unit, integration, and failure scenarios

His secret? A CLI-centric workflow combining Claude Code and Codex CLI, with subscriptions split across days to bypass rate limits. He admits even psychological incentives played a role: "Paying $100/month for Anthropic's max plan became a forcing function—if I didn't kick off coding tasks before bed, I felt I was wasting money."

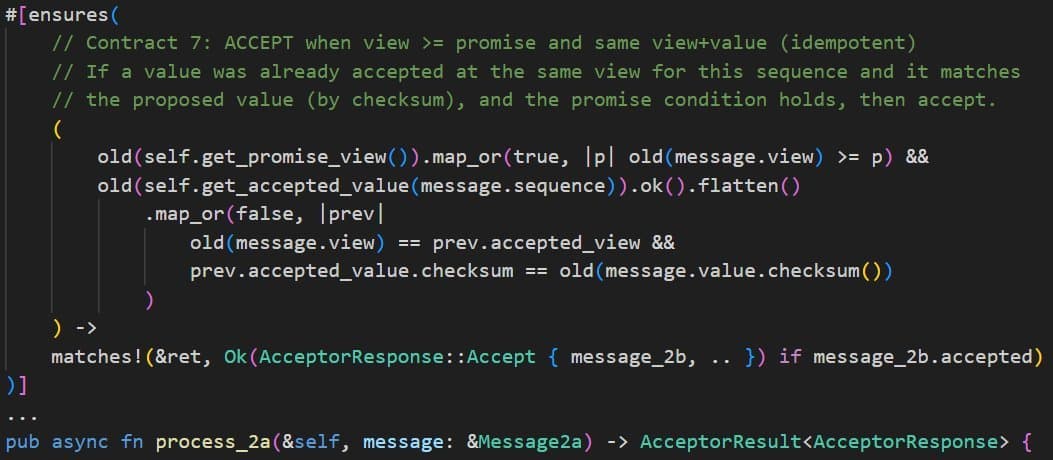

Code Contracts: AI's Safety Net

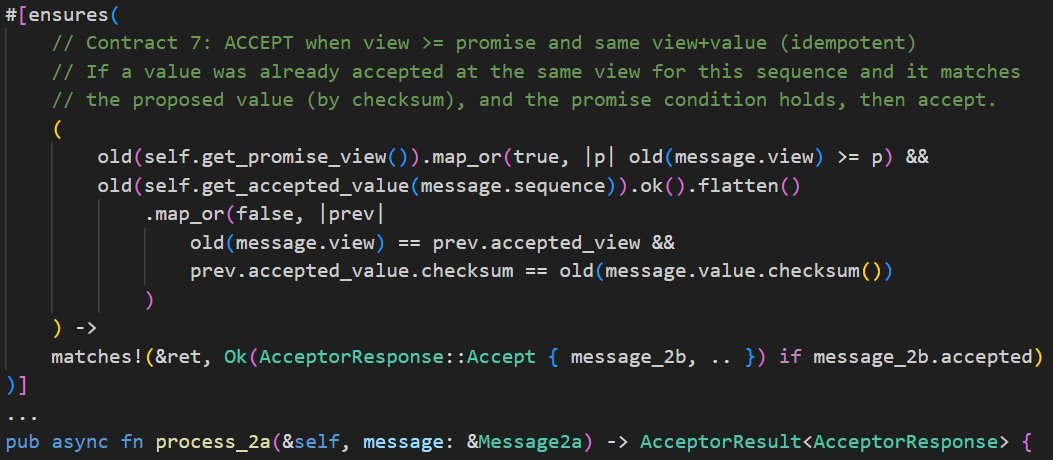

The biggest skepticism around AI-assisted systems programming centers on correctness. Huang countered this with AI-generated code contracts—preconditions, postconditions, and invariants converted to runtime assertions during testing:

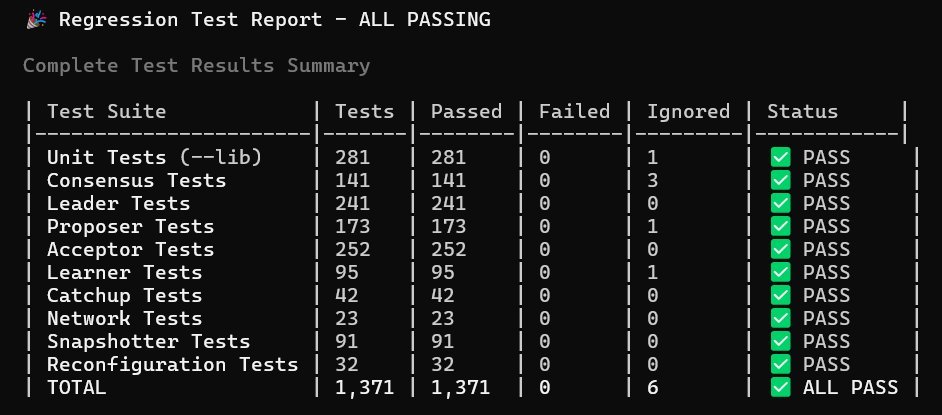

Example of AI-generated contracts for Paxos message handling
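To make the idea concrete, here is a minimal sketch of contracts expressed as runtime assertions on a Paxos acceptor. The `Acceptor` type, its fields, and the specific checks are illustrative assumptions, not code from Huang's project. `debug_assert!` is compiled out of release builds by default, so the checks run during testing without adding cost in production.

```rust
/// Hypothetical acceptor state for a single Paxos slot.
/// Names and fields are illustrative assumptions, not the project's types.
#[derive(Debug, Clone, PartialEq)]
pub struct Acceptor {
    /// Highest ballot this acceptor has promised to honor.
    pub promised: u64,
    /// Ballot and value of the most recently accepted proposal, if any.
    pub accepted: Option<(u64, String)>,
}

impl Acceptor {
    pub fn new() -> Self {
        Acceptor { promised: 0, accepted: None }
    }

    /// Invariant: an accepted ballot never exceeds the promised ballot.
    fn invariant(&self) -> bool {
        match &self.accepted {
            Some((ballot, _)) => *ballot <= self.promised,
            None => true,
        }
    }

    /// Phase 1: promise to ignore proposals below `ballot`.
    /// Returns true if the promise was granted.
    pub fn on_prepare(&mut self, ballot: u64) -> bool {
        // Preconditions.
        debug_assert!(ballot > 0, "precondition: ballots start at 1");
        debug_assert!(self.invariant(), "invariant must hold on entry");
        let old_promised = self.promised;

        let granted = ballot > self.promised;
        if granted {
            self.promised = ballot;
        }

        // Postconditions.
        debug_assert!(self.promised >= old_promised, "promise must never regress");
        debug_assert!(self.invariant(), "invariant must hold on exit");
        granted
    }

    /// Phase 2: accept `value` unless a higher ballot was already promised.
    /// Returns true if the proposal was accepted.
    pub fn on_accept(&mut self, ballot: u64, value: &str) -> bool {
        // Preconditions.
        debug_assert!(ballot > 0, "precondition: ballots start at 1");
        debug_assert!(self.invariant(), "invariant must hold on entry");
        let old_promised = self.promised;

        let ok = ballot >= self.promised;
        if ok {
            self.promised = ballot;
            self.accepted = Some((ballot, value.to_string()));
        }

        // Postconditions.
        debug_assert!(self.promised >= old_promised, "promise must never regress");
        debug_assert!(
            !ok || self.accepted == Some((ballot, value.to_string())),
            "an accepted proposal must be recorded"
        );
        debug_assert!(self.invariant(), "invariant must hold on exit");
        ok
    }
}
```

A stale proposal, such as `on_accept(3, ...)` after `on_prepare(5)`, is rejected and leaves the state untouched. A bug that let it through would trip the invariant check in a debug build.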


This approach enabled three layers of verification:

- AI writes initial contracts (GPT-5 High produced the most reliable ones)

- Contracts generate targeted test cases

- Property-based tests explore randomized inputs to expose edge cases
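The third layer can be sketched without any external crates. The example below is an assumption-laden illustration, not the project's test suite: `majority_acked` is a hypothetical helper that returns the highest slot acknowledged by a majority of replicas, and `check_majority_property` feeds it randomized inputs from a small hand-rolled xorshift generator. A real project would more likely use a dedicated property-testing library.

```rust
/// Highest slot acknowledged by a majority of replicas (hypothetical helper).
/// `acked[i]` is the highest slot replica `i` has acknowledged.
pub fn majority_acked(acked: &[u64]) -> u64 {
    assert!(!acked.is_empty(), "need at least one replica");
    let mut sorted = acked.to_vec();
    sorted.sort_unstable_by(|a, b| b.cmp(a)); // descending order
    // The (n/2 + 1)-th largest value is reached by a majority.
    sorted[acked.len() / 2]
}

/// Tiny xorshift generator so the sketch needs no external crates.
pub struct XorShift(u64);

impl XorShift {
    pub fn new(seed: u64) -> Self {
        XorShift(seed.max(1)) // the state must be non-zero
    }

    pub fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
}

/// Checks two properties on `cases` random inputs:
/// 1. a majority has acknowledged the returned slot;
/// 2. no majority has acknowledged any higher slot.
pub fn check_majority_property(seed: u64, cases: usize) -> Result<(), String> {
    let mut rng = XorShift::new(seed);
    for case in 0..cases {
        let n = (rng.next_u64() % 7 + 1) as usize; // 1..=7 replicas
        let acked: Vec<u64> = (0..n).map(|_| rng.next_u64() % 100).collect();
        let majority = n / 2 + 1;
        let slot = majority_acked(&acked);
        let at_least = |s: u64| acked.iter().filter(|&&a| a >= s).count();

        if at_least(slot) < majority {
            return Err(format!("case {case}: slot {slot} lacks a majority in {acked:?}"));
        }
        if at_least(slot + 1) >= majority {
            return Err(format!("case {case}: slot {slot} is not maximal in {acked:?}"));
        }
    }
    Ok(())
}
```

Calling `check_majority_property(42, 1000)` runs a thousand random cases. On failure, the error string carries the offending input so the case can be replayed as a regular unit test.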

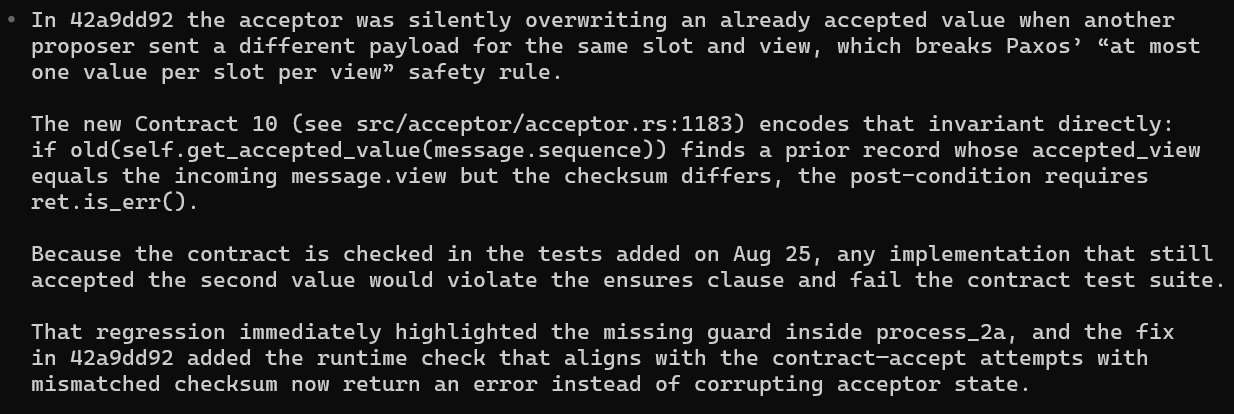

One contract alone caught a subtle Paxos safety violation:

Contract detecting a replication consistency issue


"That single contract saved what could have been a serious replication consistency issue—well before it ever hit production," Huang emphasized.

Lightweight Spec-Driven Development

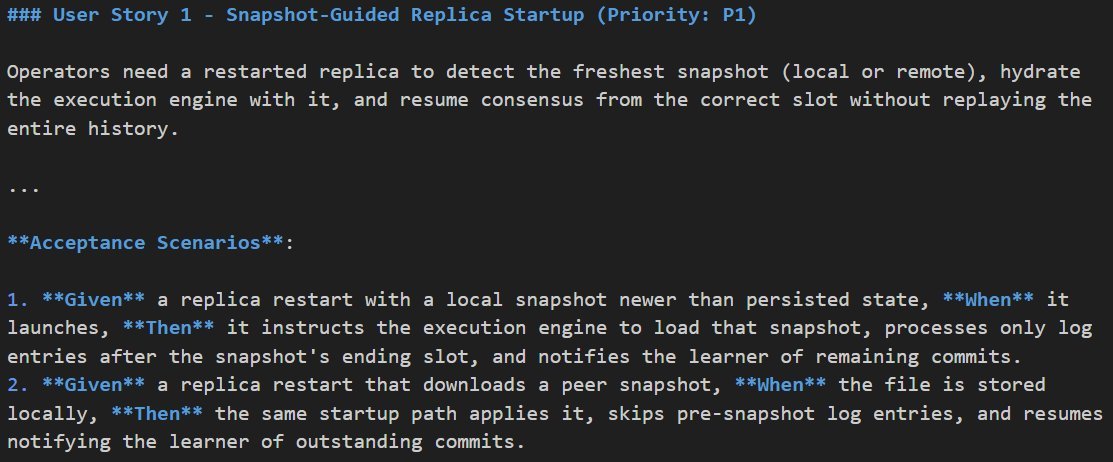

Early rigid specification documents gave way to agile AI collaboration:

- Generate user stories via the /specify command

- Refine with AI self-critique using /clarify

- Implement per-story with AI handling execution

User story refinement for configuration changes


This approach maintained flexibility while ensuring critical decisions were documented—like choosing sequential slot assignment for configuration changes to prevent orphaned slots.

Performance Tuning at Warp Speed

Optimization became an AI-powered feedback loop:

- Instrument latency metrics

- Run performance tests

- Let AI analyze traces and propose fixes

- Implement one optimization and repeat
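The first two steps of this loop can be sketched as a small latency recorder. This is a minimal illustration under assumed names (`LatencyRecorder`, `ops_per_sec`), not the instrumentation used in the project. It times individual operations and reports nearest-rank percentiles, which is the kind of summary an AI agent or a human could compare between runs.

```rust
use std::time::{Duration, Instant};

/// Collects per-operation latencies (hypothetical helper for illustration).
#[derive(Default)]
pub struct LatencyRecorder {
    samples: Vec<Duration>,
}

impl LatencyRecorder {
    pub fn new() -> Self {
        Self::default()
    }

    /// Record a latency measured elsewhere.
    pub fn record(&mut self, latency: Duration) {
        self.samples.push(latency);
    }

    /// Run `op`, record how long it took, and pass its result through.
    pub fn time<T>(&mut self, op: impl FnOnce() -> T) -> T {
        let start = Instant::now();
        let out = op();
        self.samples.push(start.elapsed());
        out
    }

    /// Number of samples recorded so far.
    pub fn len(&self) -> usize {
        self.samples.len()
    }

    /// Nearest-rank percentile for `p` in 0..=100.
    /// Returns None when there are no samples or `p` is out of range.
    pub fn percentile(&self, p: f64) -> Option<Duration> {
        if self.samples.is_empty() || !(0.0..=100.0).contains(&p) {
            return None;
        }
        let mut sorted = self.samples.clone();
        sorted.sort_unstable();
        let n = sorted.len();
        let rank = (p * n as f64 / 100.0).ceil() as usize;
        Some(sorted[rank.clamp(1, n) - 1])
    }
}

/// Throughput in operations per second over a measured interval.
pub fn ops_per_sec(ops: u64, elapsed: Duration) -> f64 {
    ops as f64 / elapsed.as_secs_f64()
}
```

Wrapping a request handler in `recorder.time(|| ...)` and printing `percentile(50.0)` and `percentile(99.0)` after each test run gives a before-and-after comparison for every optimization attempt.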

Rust's safety enabled aggressive changes: removing allocations, applying zero-copy techniques, and eliminating lock contention. The result? A 13x throughput improvement on consumer hardware.

The Next Frontier for AI Coding

Huang envisions three evolutionary leaps:

- End-to-end user story execution: Reduced human steering during implementation

- Automated contract workflows: AI generating tests and fixing violations

- Autonomous optimization: AI running performance experiments across entire systems

"Performance tuning seems ripe for more automation," he noted, citing projects like AlphaEvolve as early indicators.

Project Status and Legacy

Based on Microsoft Research's simplified multi-Paxos design, Huang's engine already addresses RSL's pipelining and NVM limitations using techniques from the "PoWER Never Corrupts" paper. RDMA support remains in development.

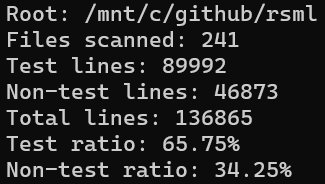

Code volume and test coverage metrics


With 65% test coverage and contract-based verification, this experiment demonstrates AI's readiness for mission-critical systems work, provided developers back it with rigorous safety nets like AI-generated contracts.

Source: Building a Modern RSL Equivalent in Rust with AI by Zihao Huang
