Benchmarks spanning coding, reasoning, and language tasks show GPT-4 beating the performance that scaling curves fitted to earlier models would predict, and reversing an accuracy decline observed in its predecessors. The data also highlights GPT-4's strength in multilingual understanding and complex problem-solving.

The latest performance analysis of OpenAI's models shows a marked shift in capabilities: GPT-4 exceeds the predictions of earlier scaling curves and reverses a performance decline observed in its predecessors. Across domains ranging from code generation to academic exams, the model posts gains large enough to challenge conventional assumptions about neural scaling laws.

Coding Prowess Redefined

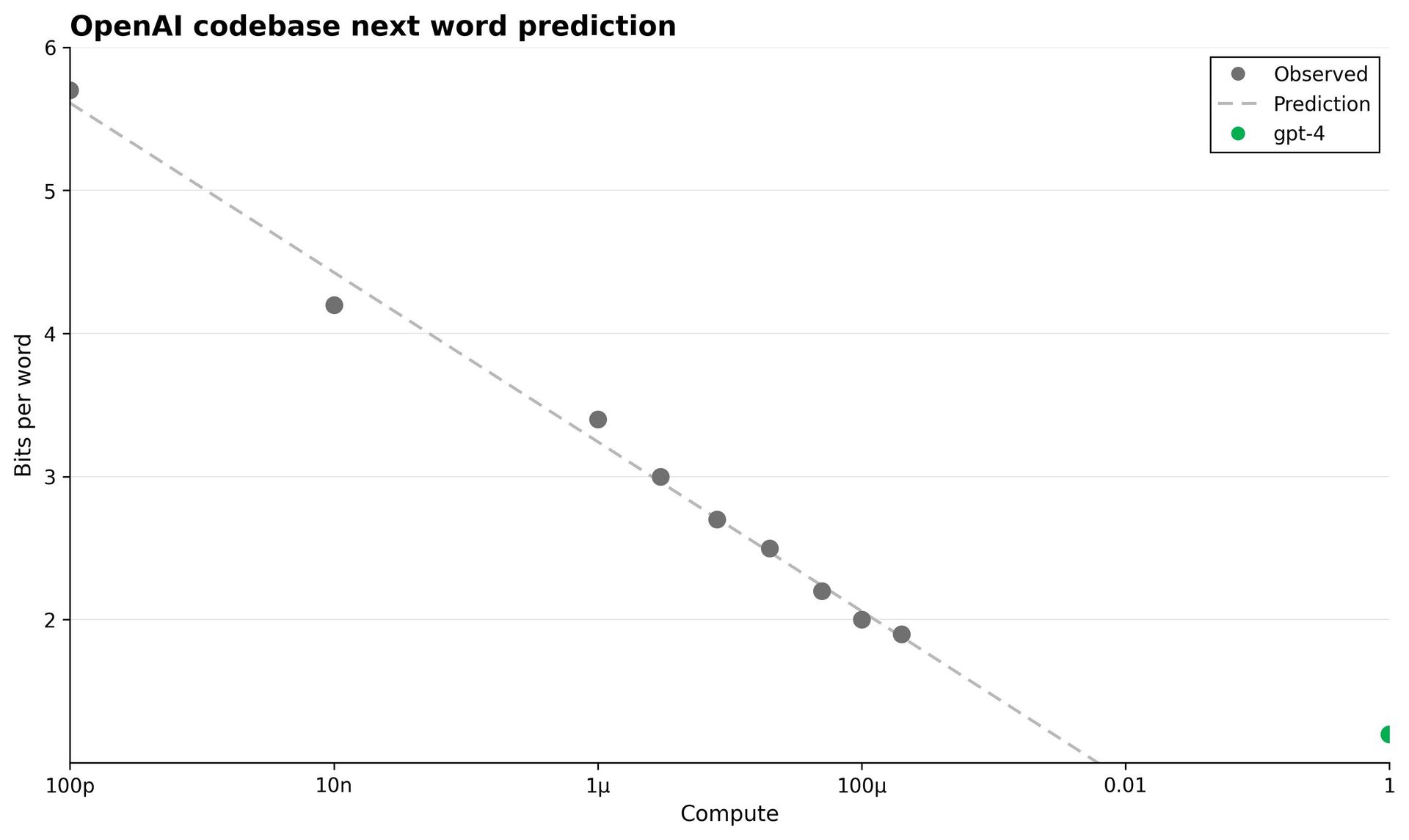

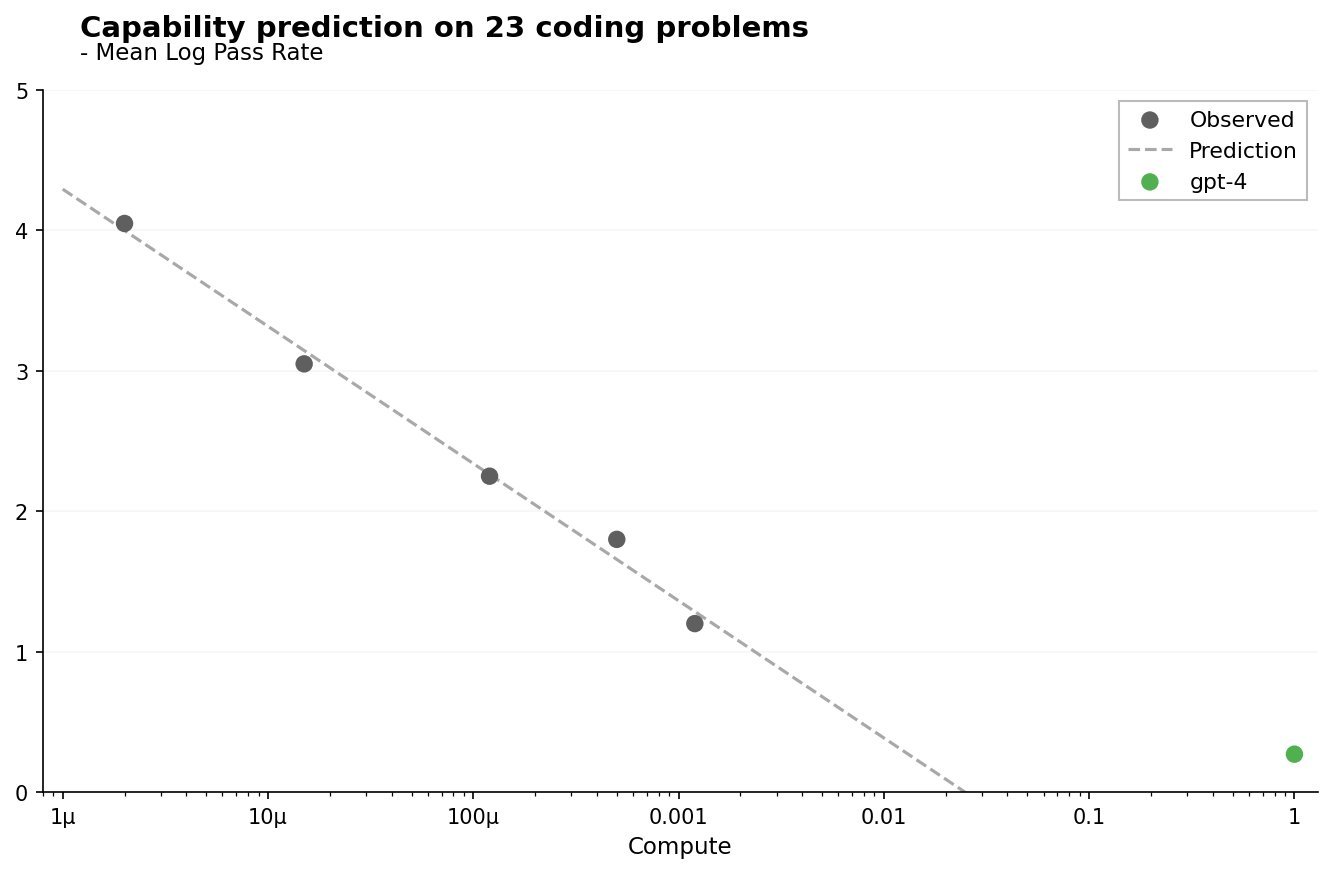

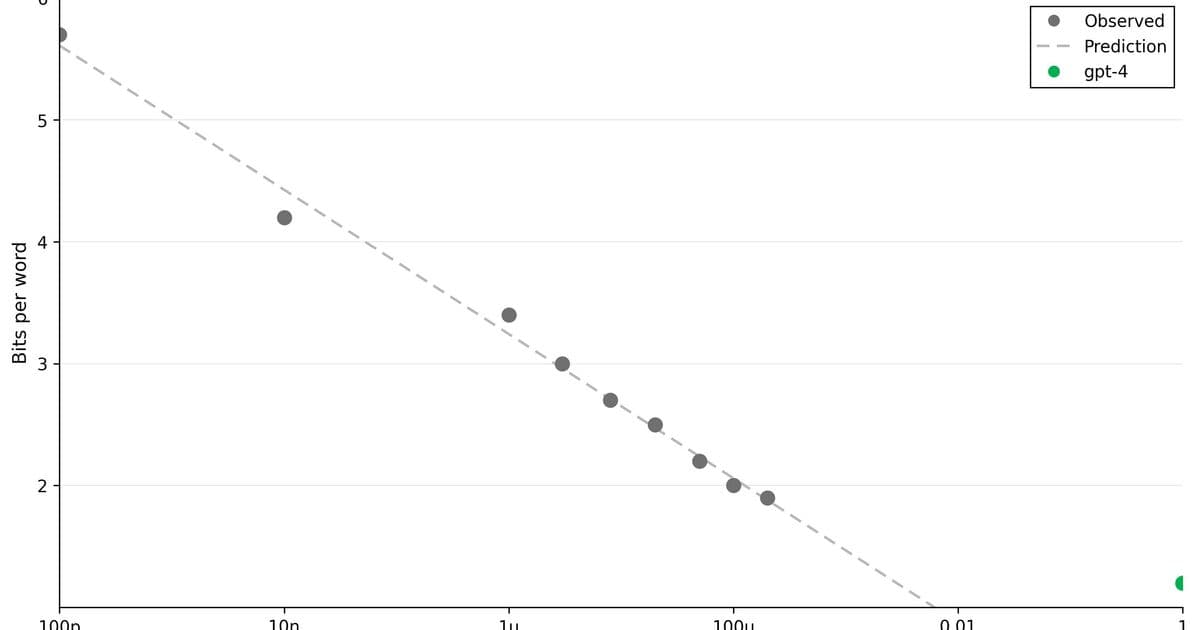

The OpenAI codebase next-word prediction benchmark (above) shows GPT-4 (green dot) outperforming the scaling curve's prediction. Earlier models followed a predictable compute-performance curve, whereas GPT-4 achieves lower bits per word than that curve anticipates at its compute scale. The same pattern appears in functional coding ability, measured as the mean log pass rate on a set of 23 coding problems, where GPT-4 again beats the prediction.
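To make the idea of a "scaling curve prediction" concrete, here is a minimal sketch of how such a curve can be fitted to small models and extrapolated to a larger one. It assumes a pure power law, loss = a * C^b, fitted by least squares in log-log space; a fuller fit would usually add a constant irreducible-loss term. The function names and every data point are hypothetical placeholders, not values from the benchmark, and compute is assumed to be normalized so that the large model sits at 1.0.

```python
import math

def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**b in log-log space."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                        # slope in log-log space = exponent
    a = math.exp(mean_y - b * mean_x)    # intercept in log-log space = log(a)
    return a, b

def predict(a, b, compute):
    """Evaluate the fitted curve at a given compute budget."""
    return a * compute ** b

# Synthetic placeholder points generated from loss = 2.0 * C**-0.05.
# These are NOT measurements from any real model.
small_compute = [1e-6, 1e-5, 1e-4, 1e-3, 1e-2]
small_loss = [2.0 * c ** -0.05 for c in small_compute]

a, b = fit_power_law(small_compute, small_loss)

# Extrapolate to the large model's (normalized) compute of 1.0.
predicted_large_loss = predict(a, b, 1.0)
print(f"a={a:.3f}, b={b:.3f}, predicted loss at C=1: {predicted_large_loss:.3f}")
```

A measured loss below `predicted_large_loss` would correspond to a point sitting under the extrapolated curve, which is the situation the paragraph above describes.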

The Inverse Scaling Anomaly

Perhaps most surprisingly, GPT-4 reverses the accuracy decline that earlier models showed on the hindsight neglect task. Where GPT-3.5 scored lower than the smaller ada, babbage, and curie models, GPT-4 not only recovers but significantly surpasses all of them, which suggests qualitative improvements beyond scale alone.
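The pattern described here, accuracy falling as models grow and then recovering at the largest size, can be checked mechanically. The sketch below is illustrative only: `classify_scaling_trend` is a hypothetical helper, and the accuracy values are made-up placeholders rather than figures read from the chart.

```python
def classify_scaling_trend(accuracies):
    """Classify accuracies ordered from the smallest to the largest model."""
    diffs = [later - earlier for earlier, later in zip(accuracies, accuracies[1:])]
    if all(d >= 0 for d in diffs):
        return "standard scaling"
    if all(d <= 0 for d in diffs):
        return "inverse scaling"
    # Not monotonic: check for a decline down to a single low point,
    # followed by a recovery from that point onward.
    low = accuracies.index(min(accuracies))
    declines_before = all(d <= 0 for d in diffs[:low])
    rises_after = all(d >= 0 for d in diffs[low:])
    if declines_before and rises_after:
        return "reversal"
    return "mixed"

# Placeholder accuracies, smallest model first. NOT values from the benchmark.
example = [0.80, 0.70, 0.60, 0.50, 1.00]
print(classify_scaling_trend(example))
```

On this placeholder series the first four points alone would be classified as inverse scaling; adding the final point turns the classification into a reversal.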

Academic Dominance

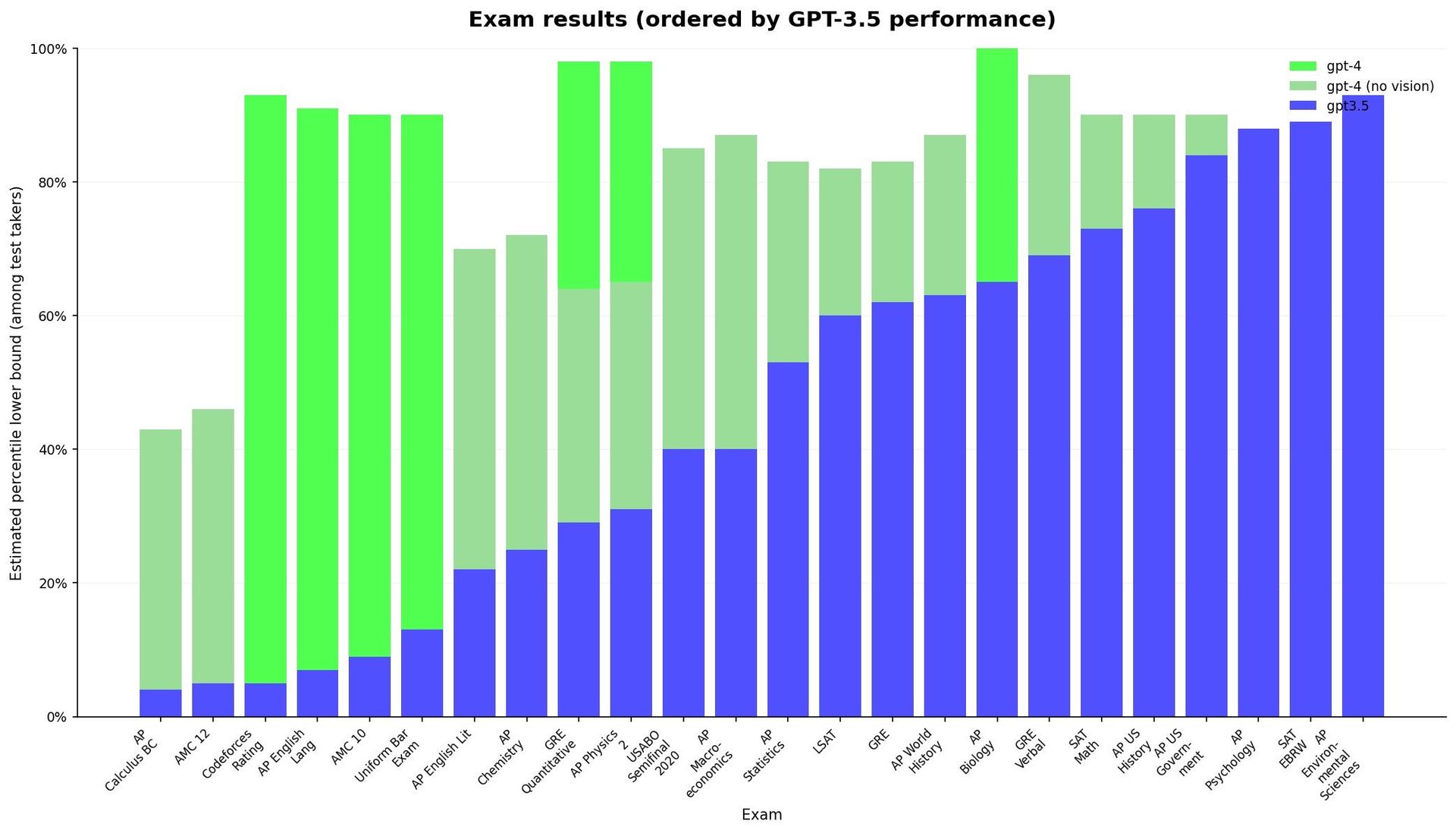

When evaluated on academic and professional exams, including the bar exam, medical assessments, and Advanced Placement tests, GPT-4 consistently outperforms GPT-3.5 by substantial margins. The stacked bars show particularly strong gains in quantitative and specialized domains, with the vision-enabled variant unlocking additional capabilities.

Multilingual Mastery

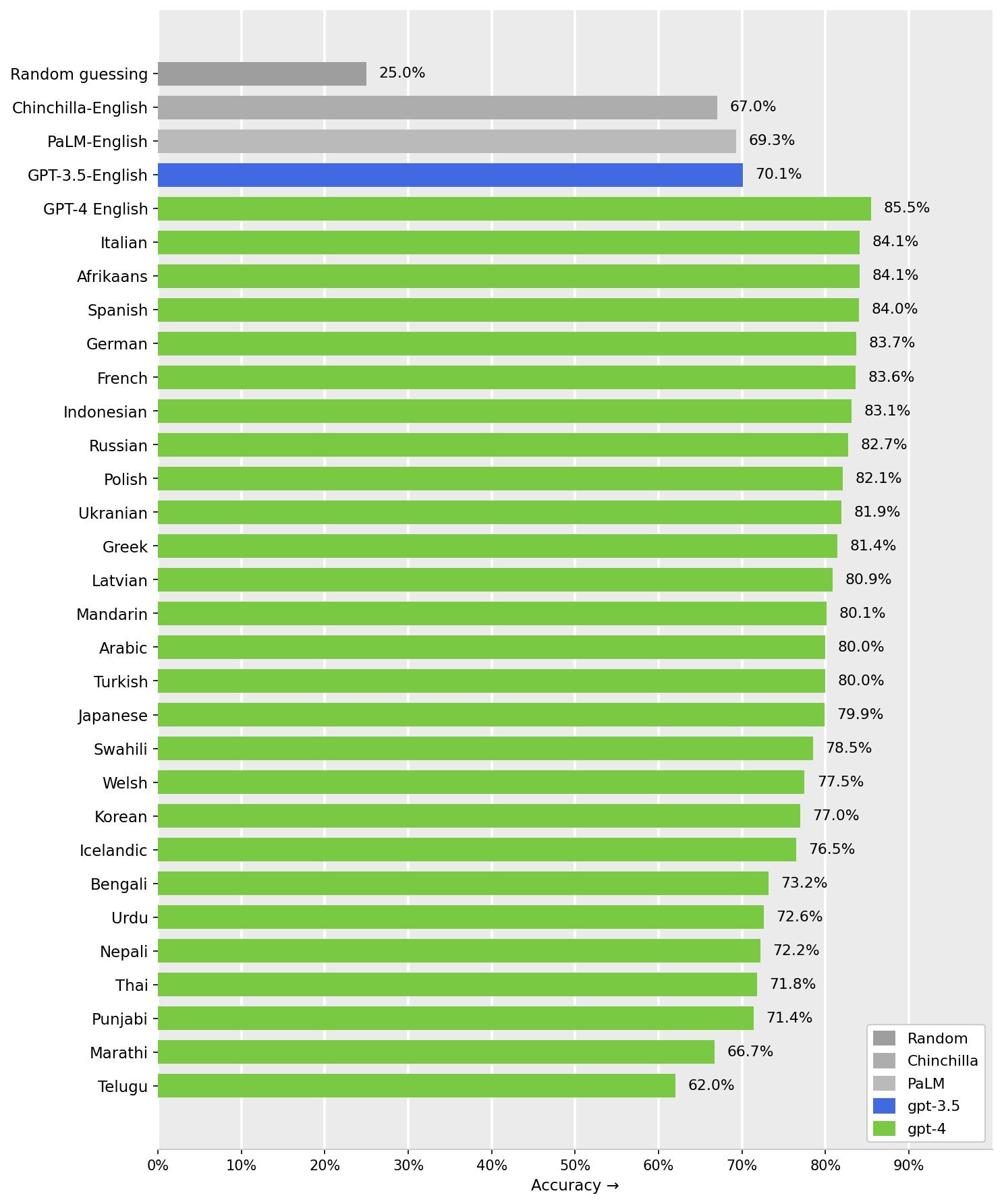

GPT-4's language understanding surpasses that of previous models and significantly narrows the gap with human-level performance across diverse languages. The horizontal bar comparison shows it outperforming other large language models such as Chinchilla and PaLM in non-English contexts, a critical advancement for global applications.

Implications for AI Development

These findings suggest we have reached an inflection point where architectural innovations and training methodology breakthroughs enable discontinuous jumps beyond predictable scaling curves. Mixture-of-experts is one frequently suggested candidate, although OpenAI has not disclosed GPT-4's architecture. The reversal of inverse scaling indicates that larger models may now overcome earlier limitations in reasoning and contextual understanding. However, the data also shows lingering challenges in sensitive content handling and adversarial robustness that will define the next frontier.

Source: Analysis of OpenAI benchmarking data from ML Builder
