We dissect Lamport's seminal solution to the Byzantine Generals Problem, implementing it with Python and Flask to demonstrate how distributed systems achieve consensus despite malicious actors. The code reveals both the elegance of the algorithm and its impractical exponential message complexity at scale.

When Traitors Threaten Consensus: The Byzantine Generals Problem

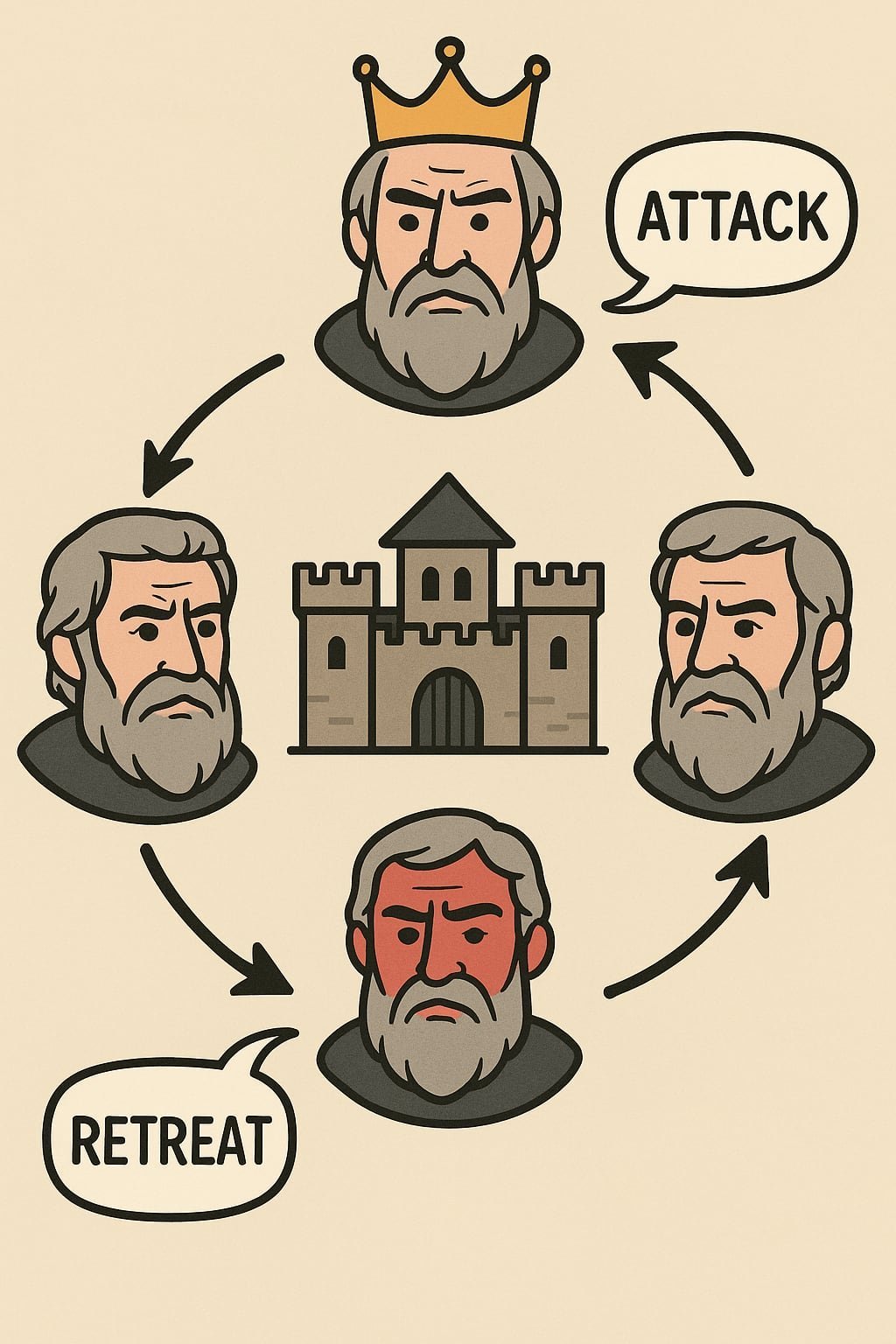

In distributed systems, achieving consensus when nodes can fail arbitrarily—not just crash but actively sabotage—remains a fundamental challenge. Leslie Lamport's 1982 paper framed this as the Byzantine Generals Problem: How can N generals agree on a battle plan when M of them are traitors sending conflicting messages? The solution requires N ≥ 3M + 1 nodes and unfolds through recursive message passing to isolate malicious actors.

Visualization of message paths in a 7-node system with 2 traitors (Credit: Bytepawn)

Visualization of message paths in a 7-node system with 2 traitors (Credit: Bytepawn)

How the Algorithm Outsmarts Traitors

- The King Proposes: One node (king) broadcasts an initial value (attack/retreat). Traitors may send conflicting values.

- Recursive Verification: Each node shares received values with others, extending message paths (e.g., [0] → [0,1]).

- Majority Voting: Nodes apply a recursive

OM()function to reconcile values:

def OM(path):

k = 1 + m - len(path)

if k == 0:

return received_values[path]

values = [received_values[path]]

for i in nodes_not_in_path:

values.append(OM(path + [i])) # Recursive verification

return majority(values) # "attack" if counts > "retreat"

- Exponential Cost: Each traitor adds a communication round. For M=4, nodes process 9,032 messages.

Python Implementation Insights

- Flask HTTP Servers: Each general runs as a process with REST endpoints (

/start,/order,/decide). - Traitor Handling: Malicious nodes flip values mid-cascade but avoid spoofing paths (engineered constraint).

- Deterministic Paths: Messages include routing history (e.g.,

[0,3,1]) to prevent ambiguity.

Sample Run: M=2 Traitors Among N=7 Nodes

Traitors: {0,5}

General 1 decided retreat

General 2 decided retreat

...

SUCCESS: All non-traitors agreed on "retreat"!

Why LLMs Struggle with Byzantine Logic

Despite GPT-4's proficiency in routine coding, it repeatedly failed to implement this algorithm. The recursion depth, path-tracking, and fault-injection requirements exceed its training data for sparse academic problems. Yet once debugged, the solution proves robust—non-traitors always converge identically.

The Scaling Paradox

Lamport's solution works perfectly at small scale but becomes impractical beyond M=4 due to O(Nᴹ) messages. Modern alternatives like PBFT optimize this, but the core insight remains: trust emerges from structured skepticism.

Source: Lamport Byzantine Generals Problem (Bytepawn)

Comments

Please log in or register to join the discussion