The santa-lang Workshop demonstrates how LLM agents can autonomously implement programming language interpreters through structured, test-driven workflows. By breaking development into gated stages and enforcing strict CLI contracts, this experiment reveals how AI approaches complex engineering tasks across Python, Rust, and other languages. The project offers unprecedented insights into agentic reasoning and cross-language implementation patterns.

What happens when you task AI agents with building programming languages—not just writing code, but architecting complete interpreters from scratch? Developer Edd Mann's whimsically named santa-lang Workshop provides a fascinating answer, demonstrating how structured LLM workflows can tackle one of software engineering's most complex challenges.

At its core, the workshop deploys "elves"—LLM agents like Claude and GPT—to implement elf-lang, a functional subset of the Christmas-themed santa-lang designed for Advent of Code-style problems. These agents don't just generate snippets; they systematically build lexers, parsers, and evaluators through a rigorous five-stage process:

- Lexing: Token recognition

- Parsing: Abstract Syntax Tree (AST) construction

- Basic evaluation: Runtime execution

- Collections: List/set/dict operations

- Higher-order functions: Map/filter/fold and composition

Each stage must pass a comprehensive suite of .santat tests (inspired by PHP's PHPT format) before progression, preventing regressions and ensuring consistency. The test suite validates behavior through a strict CLI interface:

# Run program

<bin> <file>

# Print AST

<bin> ast <file>

# Print tokens

<bin> tokens <file>

This implementation-agnostic contract allows direct comparison between languages. As Mann notes:

"The test runner doesn’t care whether you’re using recursive descent parsing or a Pratt parser—it only cares that

1 + 2evaluates to3."

The architecture leverages four custom tools:

santa-bootstrap: Scaffolds new implementations with festive elf personassanta-test: Executes PHPT-style validationsanta-journal: Maintains decision logs across agent sessionssanta-site: Generates progress dashboards

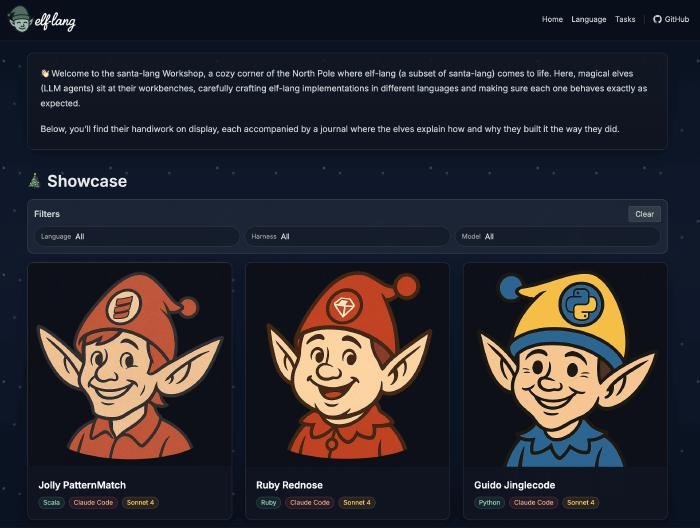

The automatically generated workshop site tracks implementation progress across languages

The automatically generated workshop site tracks implementation progress across languages

Critical to reproducibility, each language runs in isolated Docker containers, with CI pipelines automatically validating all implementations on every commit. This reveals intriguing patterns: Python agents leverage dynamic typing, Rust implementations emphasize memory safety, and Haskell versions embrace pure functional patterns—all converging on identical behavior thanks to the test suite.

Beyond technical achievement, the project offers profound insights:

- Structure is non-negotiable: Agents thrive with phased goals and anti-regression gates

- Journals enable continuity: Decision logs allow seamless context transfer between sessions

- Contracts beat conventions: CLI-focused testing bypasses language-specific biases

As AI-assisted development evolves, the workshop demonstrates how meticulously designed constraints transform LLMs from code generators into architectural partners. The elves might be festive, but their output—a growing collection of language implementations—delivers serious lessons in agentic problem-solving.

Source: Edd Mann's blog

Comments

Please log in or register to join the discussion