Sabrina Ramonov unveils a rigorous framework for integrating Claude AI into production coding workflows, emphasizing disciplined rules to prevent technical debt and ensure maintainability. Her CLAUDE.md guidelines and structured shortcuts like qcode and qcheck offer a blueprint for developers to harness AI's speed while maintaining senior-level code quality. This approach tackles real-world challenges in complex codebases, balancing automation with critical human oversight.

As AI coding assistants like Claude become ubiquitous, developers face a critical dilemma: How to leverage their velocity without sacrificing production-grade code quality. Sabrina Ramonov, a seasoned engineer, cuts through the hype with a tactical blueprint refined for real-world complexity. Her methodology—centered on a customizable CLAUDE.md rulebook and disciplined workflows—transforms AI from a chaotic copilot into a scalable engineering asset.

The Core Challenge: Speed vs. Sustainability

Ramonov opens with a stark reality check: "In 2025, no AI tool performs at a senior engineer level." Blind acceptance of AI-generated code risks architectural drift, hidden bugs, and mounting technical debt. Her solution? Enforceable guardrails. The CLAUDE.md file codifies best practices across seven pillars—from implementation ("Prefer branded types for IDs") to testing ("ALWAYS separate pure-logic unit tests from DB-touching integration tests"). These aren't suggestions; they're CI-enforced mandates.
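The branded-type rule ("Prefer branded types for IDs") can be sketched in a few lines. The article does not show how `Brand` is defined, so this sketch assumes one common encoding: an intersection with a phantom `__brand` property that exists only at the type level. `OrderId`, `toUserId`, `describeUser`, and the `usr_` id format are hypothetical.

```typescript
// Assumed Brand encoding: a phantom, type-only tag intersected with the base type.
// Nothing is added at runtime; structurally identical strings just stop being interchangeable.
type Brand<T, B extends string> = T & { readonly __brand: B };

type UserId = Brand<string, "UserId">;
type OrderId = Brand<string, "OrderId">;

// Hypothetical smart constructor: the only place a raw string becomes a UserId.
function toUserId(raw: string): UserId {
  if (!/^usr_[a-z0-9]+$/.test(raw)) {
    throw new Error(`invalid user id: ${raw}`);
  }
  return raw as UserId;
}

function describeUser(id: UserId): string {
  return `user ${id}`;
}

const userId = toUserId("usr_42");
const orderId = "ord_7" as OrderId;

console.log(describeUser(userId)); // user usr_42
console.log(orderId.length); // 5: branded values still behave like strings at runtime
// describeUser(orderId);  // compile error: OrderId is not assignable to UserId
// describeUser("usr_42"); // compile error: a plain string is not assignable to UserId
```

The cast is confined to `toUserId`, so every `UserId` has passed the same validation. Swapping an `OrderId` for a `UserId` becomes a compile error rather than a silent runtime bug.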

Key Excerpts from CLAUDE.md:

```typescript
// MUST: Use branded types for domain safety
type UserId = Brand<string, 'UserId'>; // ✅ Good
type UserId = string;                  // ❌ Bad

// SHOULD: Default to `type` over `interface` unless merging is needed

// SHOULD NOT: Extract functions without reuse or testability justification

// Testing non-negotiable: Assert holistically
expect(result).toEqual([value]); // ✅ Good: toEqual compares structure (toBe([value]) compares references and could never pass)
expect(result).toHaveLength(1);  // ❌ Bad
expect(result[0]).toBe(value);   // ❌ Bad
```
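The testing rule is easiest to appreciate with a failing case. In Jest, `toBe` compares with `Object.is` (reference identity for arrays), while `toEqual` compares structure recursively. The dependency-free sketch below uses a hypothetical, simplified `deepEqual` as a stand-in for `toEqual`. It shows why one whole-value assertion catches a bug that the piecemeal checks miss.

```typescript
// Simplified structural equality (hypothetical stand-in for Jest's toEqual):
// handles primitives, arrays, and plain objects, nothing fancier.
function deepEqual(a: unknown, b: unknown): boolean {
  if (Object.is(a, b)) return true;
  if (Array.isArray(a) || Array.isArray(b)) {
    return (
      Array.isArray(a) &&
      Array.isArray(b) &&
      a.length === b.length &&
      a.every((x, i) => deepEqual(x, b[i]))
    );
  }
  if (typeof a === "object" && typeof b === "object" && a !== null && b !== null) {
    const ra = a as Record<string, unknown>;
    const rb = b as Record<string, unknown>;
    const keys = Object.keys(ra);
    return (
      keys.length === Object.keys(rb).length &&
      keys.every((k) => Object.prototype.hasOwnProperty.call(rb, k) && deepEqual(ra[k], rb[k]))
    );
  }
  return false;
}

const value = "a";
const result = ["a", "b"]; // buggy output: one element too many

// ❌ Piecemeal: the index check passes although the output is wrong.
console.log(result[0] === value); // true

// ❌ Reference identity (what toBe uses) fails even for a correct result.
console.log(Object.is(["a"], [value])); // false

// ✅ Holistic: one comparison covers both length and contents.
console.log(deepEqual(result, [value])); // false, so the bug is caught
console.log(deepEqual(["a"], [value])); // true
```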

The AI Coding Workflow: Shortcuts for Precision

Ramonov’s process turns ambiguity into repeatability. Developers start with qnew to load the rulebook, then use qplan to check that the proposed plan is consistent with the existing code. The central qcode command triggers implementation with embedded quality checks: auto-running Prettier, type checks, and tests. Crucially, qcheckf and qcheckt force the AI to self-audit against strict function and test checklists, such as checking cyclomatic complexity or banning trivial asserts.
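The qcode step behaves like a short-circuiting quality gate. It formats, type-checks, and tests in order, stopping at the first failure so that problem is fixed before anything else. The sketch below models the gate with injected step functions instead of real shell commands. The `Step` shape, `runGate`, and the step names are hypothetical. In a real repository each `run` would shell out to tools such as Prettier, `tsc --noEmit`, and the test runner.

```typescript
// Hypothetical gate types; `type` aliases per the rulebook's preference.
type StepResult = { ok: true } | { ok: false; message: string };
type Step = { name: string; run: () => StepResult };
type GateReport = { passed: string[]; failedAt?: string; message?: string };

// Run the steps in order and stop at the first failure, so a type error is
// fixed before the tests even run.
function runGate(steps: Step[]): GateReport {
  const passed: string[] = [];
  for (const step of steps) {
    const result = step.run();
    if (!result.ok) {
      return { passed, failedAt: step.name, message: result.message };
    }
    passed.push(step.name);
  }
  return { passed };
}

// Stubbed steps for illustration; real ones would invoke Prettier, `tsc --noEmit`,
// and the project's test runner.
let testsRan = false;
const report = runGate([
  { name: "format", run: () => ({ ok: true }) },
  { name: "typecheck", run: () => ({ ok: false, message: "type error in user.ts" }) },
  {
    name: "test",
    run: () => {
      testsRan = true;
      return { ok: true };
    },
  },
]);

console.log(report.passed); // [ 'format' ]
console.log(report.failedAt); // typecheck
console.log(testsRan); // false
```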

"I rarely accept AI’s first draft. Without qcheck, it’s too easy to accumulate debt that slows velocity," Ramonov warns. "AI drifts, misinterprets, and gaslights. You must watch closely."
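One checklist item named above for qcheckf is cyclomatic complexity. The code below is a rough sketch of automating it: it approximates McCabe complexity as one plus the number of branching tokens in a function's source text. This is a deliberately crude, regex-based heuristic. It does not parse code, so keywords inside strings or comments are miscounted. `estimateComplexity`, `flagComplexFunction`, the token list, and the threshold of 10 are all assumptions for illustration. A real setup would more likely rely on an AST-based check such as ESLint's `complexity` rule.

```typescript
// Decision points counted by this crude, text-based approximation of McCabe
// complexity. Regexes do not understand strings or comments, so matches there
// are miscounted; ternaries are ignored to avoid confusing them with `?.` and `?:`.
const DECISION_PATTERNS: RegExp[] = [
  /\bif\b/g,
  /\bfor\b/g,
  /\bwhile\b/g,
  /\bcase\b/g,
  /\bcatch\b/g,
  /&&/g,
  /\|\|/g,
  /\?\?/g,
];

// Hypothetical helper: 1 + number of decision points found in the source text.
function estimateComplexity(source: string): number {
  let decisions = 0;
  for (const pattern of DECISION_PATTERNS) {
    decisions += (source.match(pattern) ?? []).length;
  }
  return decisions + 1;
}

// Hypothetical qcheckf-style check with an assumed threshold of 10.
function flagComplexFunction(source: string, max = 10): string | null {
  const score = estimateComplexity(source);
  return score > max ? `complexity ${score} exceeds ${max}; consider splitting` : null;
}

const sample = `
function classify(n: number): string {
  if (n < 0) return "negative";
  if (n === 0 || Number.isNaN(n)) return "zero-ish";
  for (let i = 2; i < n; i++) {
    if (n % i === 0) return "composite";
  }
  return "prime-ish";
}`;

console.log(estimateComplexity(sample)); // 6
console.log(flagComplexFunction(sample)); // null
```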

Why This Matters for Engineering Teams

This isn’t about automating junior tasks; it’s about augmenting senior judgment. Ramonov’s rules address endemic AI pitfalls:

- Over-Abstraction: Blocking unnecessary class proliferation (C-3).

- Testing Theater: Enforcing property-based tests with libraries like fast-check for invariants.

- Context Collapse: Mandating domain-aligned naming (C-2) and conventional commits (GH-1).
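Property-based testing replaces hand-picked examples with an invariant checked against many generated inputs. fast-check provides this through `fc.assert(fc.property(...))` with arbitraries such as `fc.array(fc.integer())`, and it shrinks failing inputs to minimal counterexamples. To stay dependency-free, the sketch below hand-rolls only the core loop with a small seeded generator. `checkProperty`, `randomIntArray`, the seed, and the run count are hypothetical. There is no shrinking, so treat it as an illustration of the idea, not a substitute for the library.

```typescript
// mulberry32: a tiny seeded PRNG, so any failing input can be reproduced from the seed.
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical generator: arrays of 0 to 20 integers in [-100, 100].
function randomIntArray(rand: () => number, maxLen = 20): number[] {
  const len = Math.floor(rand() * (maxLen + 1));
  return Array.from({ length: len }, () => Math.floor(rand() * 201) - 100);
}

type PropertyResult = { ok: true } | { ok: false; counterexample: number[] };

// Hypothetical runner: check the invariant on `runs` generated inputs (no shrinking).
function checkProperty(property: (xs: number[]) => boolean, runs = 200, seed = 42): PropertyResult {
  const rand = mulberry32(seed);
  for (let i = 0; i < runs; i++) {
    const input = randomIntArray(rand);
    if (!property(input)) return { ok: false, counterexample: input };
  }
  return { ok: true };
}

// Function under test: numeric ascending sort that leaves its input untouched.
const sortAsc = (xs: number[]): number[] => [...xs].sort((a, b) => a - b);

const isAscending = (xs: number[]): boolean => xs.every((x, i) => i === 0 || xs[i - 1] <= x);

// Invariants: same length, ascending order, and sorting again changes nothing.
const sortInvariants = (xs: number[]): boolean => {
  const once = sortAsc(xs);
  const twice = sortAsc(once);
  return once.length === xs.length && isAscending(once) && twice.every((x, i) => x === once[i]);
};

console.log(checkProperty(sortInvariants).ok); // true

// A classic bug these invariants surface: the default sort compares numbers as strings.
const lexicalSort = (xs: number[]): number[] => [...xs].sort();
const lexical = checkProperty((xs) => isAscending(lexicalSort(xs)));
if (!lexical.ok) console.log("counterexample:", lexical.counterexample);
```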

For teams scaling AI adoption, this framework offers something rare: auditability. Every AI action is traceable to a rule, making code reviews tangible rather than theological.
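Rules like GH-1 make the auditability claim concrete because they can be checked mechanically. As a sketch, the validator below tests a commit header against the general Conventional Commits shape `type(scope)!: description`. The allowed type list is an assumption. The specification itself only mandates `feat` and `fix`, while common tooling adds types such as `docs` and `refactor`. `isConventionalHeader` is a hypothetical name. Projects usually enforce this with an existing tool such as commitlint rather than a hand-written regex.

```typescript
// Assumed type list: `feat` and `fix` come from the spec; the rest are common extras.
const COMMIT_TYPES = ["feat", "fix", "docs", "refactor", "test", "perf", "build", "ci", "chore", "style"];

// type, optional (scope), optional "!" for a breaking change, then ": " and a non-empty description.
const HEADER_PATTERN = new RegExp(`^(?:${COMMIT_TYPES.join("|")})(?:\\([^()\\s]+\\))?!?: \\S.*$`);

// Hypothetical helper: validate only the first line (the header) of a commit message.
function isConventionalHeader(message: string): boolean {
  const header = message.split("\n", 1)[0] ?? "";
  return HEADER_PATTERN.test(header);
}

const samples = [
  "feat(auth): add branded UserId type", // valid
  "fix!: reject malformed ids", // valid, marked as breaking
  "Fixed stuff", // invalid: no type prefix
  "feat: ", // invalid: empty description
];
for (const s of samples) {
  console.log(isConventionalHeader(s), JSON.stringify(s));
}
```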

The Inescapable Human Factor

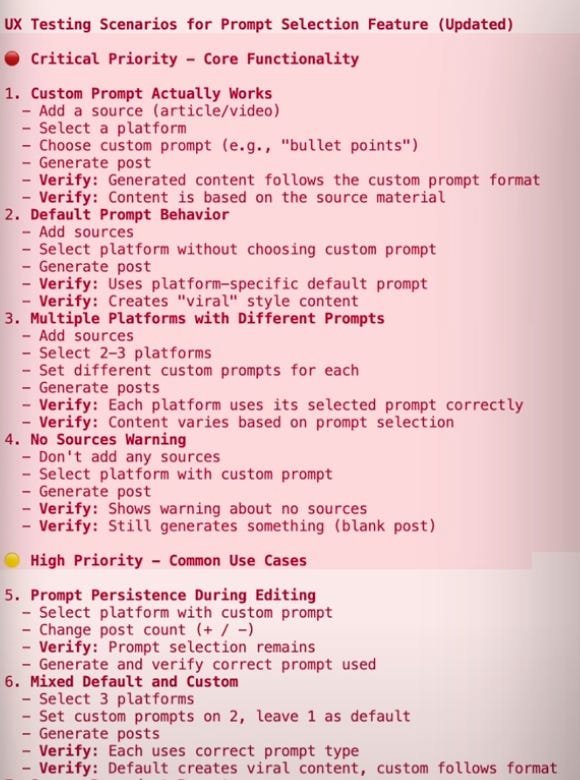

Ramonov’s closing insight resonates: AI accelerates output but can't replace scrutiny. The qux command generates UX test scenarios, but humans must execute them. Her caveats are blunt: "If you don’t question sketchy solutions, you’ll waste hours fixing silent breaks." The future belongs to engineers who wield AI as a disciplined tool—not a crutch.

Source: Sabrina Ramonov, sabrina.dev
