OpenAI is preparing to launch open-source model weights for 'gpt-oss-20b' and 'gpt-oss-120b,' signaling a shift toward greater transparency as GPT-5 development advances. The models, already spotted on HuggingFace, could democratize AI access for developers and researchers, fostering innovation while addressing long-standing criticism of the company's closed approach.

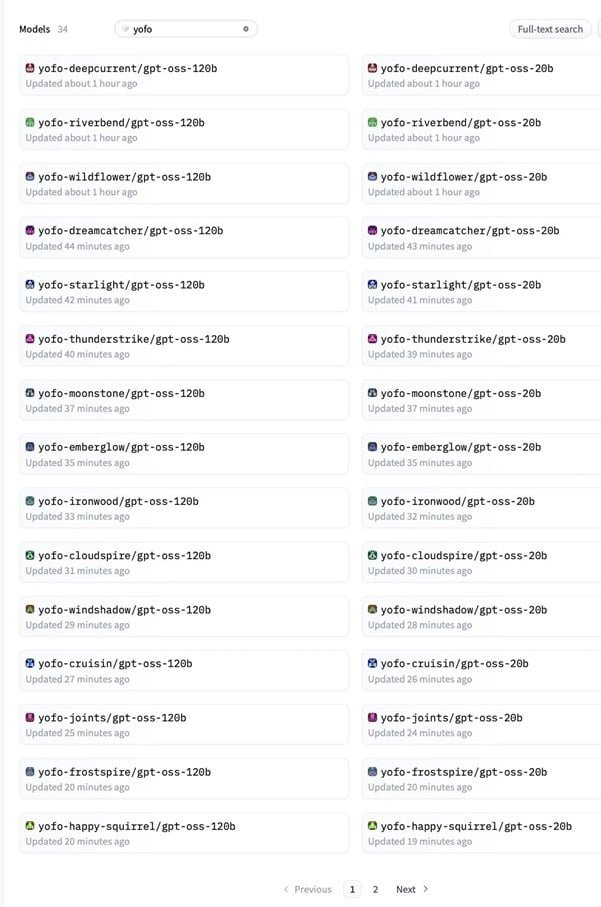

In a quiet yet significant move, OpenAI appears to be rekindling its founding ethos of openness. Recent activity on HuggingFace—a hub for AI collaboration—has revealed two new open-weight models: 'gpt-oss-20b' and 'gpt-oss-120b.' This development, occurring alongside the highly anticipated GPT-5 rollout, suggests a strategic pivot for the AI giant, one that could reshape how developers and enterprises interact with cutting-edge language models.

The Discovery and What It Means

As reported by BleepingComputer, these models surfaced on HuggingFace ahead of any official announcement, likely shared with partners for pre-release testing. The 'weights' are the learned parameters of a neural network: the numerical values, set during training, that determine how the model turns input into output. By releasing them, OpenAI would let developers download, inspect, modify, and build upon these models on their own hardware, rather than reaching them only through a closed, hosted API, as is the case with GPT-4. For context, '20b' and '120b' denote approximate parameter counts (20 billion and 120 billion), which points to substantial models that likely still sit below GPT-5's expected scale.
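To make those parameter counts concrete, the short sketch below estimates how much memory is needed just to hold the weights at a few common numeric precisions. It is back-of-the-envelope arithmetic, not a statement about how these models are actually packaged: the parameter counts are taken at face value from the model names, dense storage is assumed, and the function name `weight_memory_gb` is made up for this illustration.

```python
# Rough memory needed to hold model weights, ignoring activations and caches.
# Assumptions: nominal parameter counts from the model names, dense storage.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("gpt-oss-20b", 20e9), ("gpt-oss-120b", 120e9)]:
    for fmt, width in BYTES_PER_PARAM.items():
        print(f"{name} @ {fmt}: ~{weight_memory_gb(params, width):.0f} GB")
```

Under these assumptions, a 20-billion-parameter model needs roughly 40 GB at 16-bit precision, while a 120-billion-parameter model needs roughly 240 GB. That is why the precision the weights ship in matters so much for who can realistically run them.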

Image: Conceptual representation of AI model weights, symbolizing OpenAI's shift.


Why This Shift Matters Now

OpenAI's journey from open-source pioneer to guarded innovator has been contentious. The weights for early models such as GPT-2 were eventually published in full after a staged release, but access to later models became more restricted, with the company citing safety concerns. This new move aligns with growing industry pressure. Competitors like Meta have gained traction with open models such as Llama, while developers clamor for transparency so they can debug biases, enhance security, and reduce API dependency. Releasing weights lets researchers fine-tune models for niche applications, for example in healthcare or finance, without paying for proprietary access.

Implications for the AI Ecosystem

For developers, this is a double-edged sword. On one hand, open weights enable deeper experimentation: imagine optimizing 'gpt-oss-120b' for low-resource environments or integrating it into custom workflows. On the other, they raise security questions: malicious actors could use these models for phishing or disinformation, so robust safeguards will be needed. Strategically, OpenAI might be preempting regulatory scrutiny by fostering goodwill, or using this as a testing ground for GPT-5's architecture. Either way, it signals that walled-garden AI is giving way to more collaborative, community-driven innovation.
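One common way to adapt a model to low-resource environments is quantization, which stores each weight in fewer bits. The article does not say which optimizations these models support, so the following is only a toy illustration of the general idea, using symmetric 8-bit quantization on a handful of made-up values. The helper names `quantize_int8` and `dequantize` are hypothetical and do not come from any OpenAI or HuggingFace library.

```python
# Toy symmetric int8 quantization on a tiny list of made-up weights.
# Real toolchains work on large tensors and use more refined schemes.


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("max round-trip error:", max_error)
```

Each value now fits in one byte instead of four, at the cost of a rounding error of at most about half the scale. Inspecting and managing that trade-off is only possible when the weights themselves are available.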

As GPT-5 looms, these open-weight releases aren't just a nod to OpenAI's roots; they're a tactical evolution. By balancing proprietary advancement with measured transparency, the company could catalyze a new wave of AI development—one where accessibility fuels progress without compromising on ambition.

Source: BleepingComputer
