OpenAI is testing a 'Thinking effort' picker that lets users control how deeply ChatGPT reasons about a query, with four levels ranging from 'light' to 'max'. The feature ties reasoning depth to an internal 'juice' budget and aims to fit the response to the task, from casual chats to complex analyses like econometrics. The trade-offs are slower responses at the higher levels and a top tier limited to the most expensive subscription. The feature could change how developers and professionals balance AI speed against depth.

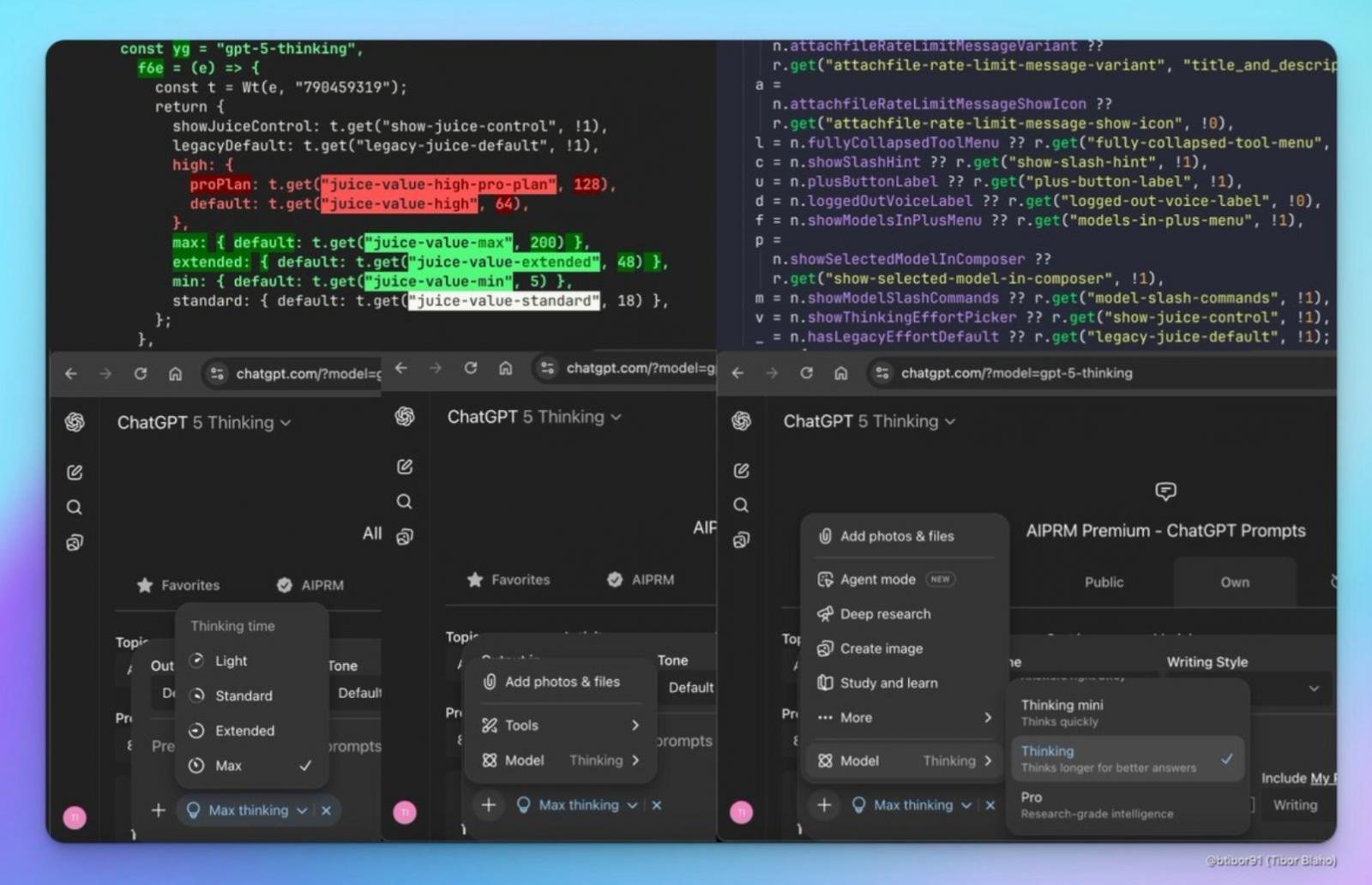

OpenAI is quietly testing a ChatGPT feature that gives users direct control over how much the model reasons before answering: a "Thinking effort" picker. As first spotted on social media and reported by BleepingComputer, the tool offers four intensity levels (light, standard, extended, and max), each tied to an internal "juice" budget that determines how much computation ChatGPT spends on a response. For developers and tech leaders, this is more than a usability tweak. It is a step toward customizable AI efficiency that could reshape workflows in fields like data science, finance, and healthcare.

How the Thinking Effort Picker Works

At its core, the feature assigns a numerical "juice" value to each thinking level:

- Light (5): Designed for quick, low-stakes queries, like brainstorming weekend plans, where speed trumps depth.

- Standard (18): The default for everyday use, balancing responsiveness with reasonable analysis.

- Extended (48): Ideal for moderately complex tasks, such as debugging code or summarizing technical documents, where deeper reasoning is beneficial.

- Max (200): Intended for intensive scenarios like econometric modeling or bond valuation, which call for careful, step-by-step reasoning, at the cost of noticeably slower responses.
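
The four tiers amount to a small lookup table. The Python sketch below records the reported juice values and computes how each budget compares with the default level. The names `THINKING_EFFORT_JUICE` and `relative_budget` are illustrative only and are not part of any OpenAI API. Because the units of "juice" are not documented, the ratios compare budgets only, not response times.

```python
# Juice values as reported for the tested "Thinking effort" picker.
# "Juice" is an internal budget whose units are not publicly documented,
# so these numbers are only meaningful relative to one another.
THINKING_EFFORT_JUICE = {
    "light": 5,
    "standard": 18,
    "extended": 48,
    "max": 200,
}


def relative_budget(level: str, baseline: str = "standard") -> float:
    """Return how many times larger `level`'s juice budget is than `baseline`'s."""
    return THINKING_EFFORT_JUICE[level] / THINKING_EFFORT_JUICE[baseline]


if __name__ == "__main__":
    # Print each level with its budget expressed as a multiple of "standard".
    for level, juice in THINKING_EFFORT_JUICE.items():
        print(f"{level:>8}: juice={juice:>3}  ({relative_budget(level):.2f}x standard)")
```

Run as a script, it shows light at about 0.28x the standard budget, extended at about 2.67x, and max at about 11.11x. By the same arithmetic, max carries 40 times the budget of light.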

Crucially, the "max" tier is gated behind OpenAI's $200/month Pro plan, so the deepest reasoning is available only to the highest-paying subscribers. As one insider noted, "More juice means the model takes more steps and usually gives a deeper answer, but it responds slower." This trade-off underscores a key point: harder thinking doesn't always yield better results, so the goal is to match the effort level to the task.
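
The Pro gating can be modeled as a filter over the ordered levels. This is a minimal sketch under stated assumptions. Only the rule that "max" requires Pro comes from the report. The assumption that every other level is open to all users, the fallback to the highest permitted level, and the names `allowed_levels` and `resolve_level` are hypothetical.

```python
# Levels ordered from least to most effort, as in the reported picker.
LEVEL_ORDER = ["light", "standard", "extended", "max"]

# Reported: "max" requires the $200/month Pro plan.
# Assumption: every other level is available without Pro.
PRO_ONLY_LEVELS = {"max"}


def allowed_levels(has_pro: bool) -> list[str]:
    """Return the levels a user could select under the gating assumption above."""
    return [lvl for lvl in LEVEL_ORDER if has_pro or lvl not in PRO_ONLY_LEVELS]


def resolve_level(requested: str, has_pro: bool) -> str:
    """Return `requested` if permitted, otherwise the highest permitted level.

    The fallback rule is a hypothetical design choice, not documented behavior.
    """
    if requested not in LEVEL_ORDER:
        raise ValueError(f"unknown thinking level: {requested!r}")
    permitted = allowed_levels(has_pro)
    if requested in permitted:
        return requested
    return permitted[-1]


if __name__ == "__main__":
    print(resolve_level("max", has_pro=True))   # max
    print(resolve_level("max", has_pro=False))  # extended
```

Falling back to the nearest permitted level, rather than raising an error, is one plausible choice for a UI. A real product might instead hide the gated option entirely.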

Why This Matters for the Tech Community

For developers, this feature addresses a long-standing pain point: mismatched effort, where the model spends too little reasoning on hard problems or too much on easy ones. In high-stakes environments like healthcare diagnostics or financial forecasting, a "light" response could be dangerously superficial, while "max" would be overkill for a simple query, wasting compute and time. By letting users calibrate effort, OpenAI encourages more deliberate AI use, which could reduce computational costs and latency. However, it also raises questions about equitable access, since gating the top tier behind a premium plan could widen the gap between casual users and enterprises.
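
Calibrating effort comes down to matching a task's traits to a level. The sketch below is a hypothetical rule of thumb, not anything OpenAI has described. The `Task` fields and the `pick_effort` rules are illustrative assumptions, chosen so that the article's own examples (weekend brainstorming, debugging, bond valuation) land on the levels it suggests.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of what a query demands."""
    description: str
    multi_step: bool     # needs chained reasoning (debugging, modeling)
    high_stakes: bool    # mistakes are costly (finance, healthcare)
    speed_matters: bool  # the user mainly wants a fast answer


def pick_effort(task: Task) -> str:
    """Map task traits to a thinking level; depth needs are checked before speed."""
    if task.multi_step and task.high_stakes:
        return "max"
    if task.multi_step or task.high_stakes:
        return "extended"
    if task.speed_matters:
        return "light"
    return "standard"


if __name__ == "__main__":
    examples = [
        Task("brainstorm weekend plans", False, False, True),
        Task("answer an everyday question", False, False, False),
        Task("debug a failing unit test", True, False, False),
        Task("value a corporate bond", True, True, False),
    ]
    for t in examples:
        print(f"{t.description}: {pick_effort(t)}")
```

The order of the checks is the key design choice. Because depth requirements are tested before speed, a high-stakes query is never downgraded to "light" just because the user wants a quick answer, which reflects the concern that a light response could be dangerously superficial.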

The industry implications could be significant. This move signals a shift toward adaptive AI models that let users tune the balance between speed and depth instead of receiving one-size-fits-all responses. If similar controls were exposed to automated workflows, "extended" effort could strengthen code reviews in CI/CD pipelines, and "max" might speed up hypothesis testing in research. Yet challenges remain: How will OpenAI prevent misuse in critical systems? And could variable thinking levels make output quality inconsistent?

As AI evolves, features like the thinking effort picker are a reminder that smarter tools require smarter usage: balancing power with pragmatism to get the most out of them.

Source: BleepingComputer
