Samsung announces the first commercial HBM4 shipments, positioning itself ahead of rivals SK Hynix and Micron in the high-bandwidth memory race for AI accelerators such as Nvidia's.

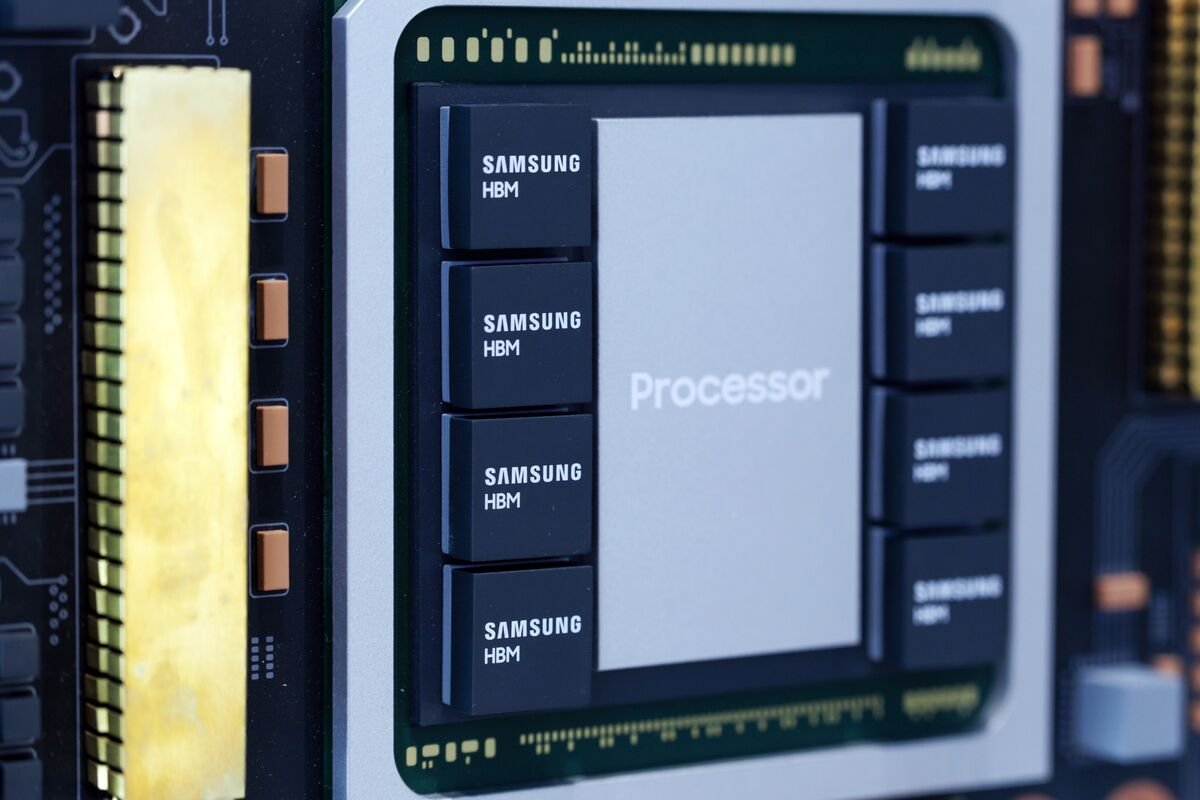

Samsung Electronics has claimed an early lead in the race to supply advanced high-bandwidth memory (HBM) for AI accelerators, announcing that it has shipped the first commercial HBM4 chips to customers. The South Korean tech giant is positioning itself to compete directly with SK Hynix and Micron for lucrative contracts with AI chipmakers such as Nvidia.

The HBM4 Advantage

High-bandwidth memory has become critical infrastructure for AI workloads, and demand is surging as companies race to build more powerful AI systems. HBM4 is the next generation of the technology, offering higher bandwidth and capacity than its predecessor, HBM3E. Part of that gain comes from doubling the interface width of each memory stack to 2,048 bits under the JEDEC HBM4 standard.

Samsung's announcement comes as AI companies are scaling up their infrastructure investments. If Samsung can deliver HBM4 in volume ahead of its competitors, it could win preferential supplier status with major AI chip manufacturers.

Market Context

This development is part of a broader competition in the memory market, where Samsung, SK Hynix, and Micron have been vying for dominance. Each company has been investing heavily in R&D to maintain technological leadership.

SK Hynix has traditionally led in HBM technology, notably as a key supplier to Nvidia. Micron has also been investing in next-generation memory to capture market share.

Technical Implications

HBM4's higher capacity lets AI accelerators keep larger models close to the processor. Its higher bandwidth eases the data bottlenecks that often limit AI system performance, because many AI workloads spend much of their time waiting for data to arrive from memory rather than computing.

For AI companies, reliable access to cutting-edge memory is essential for staying competitive on model training and inference speed.

Industry Response

With Samsung's shipments announced, competitors are likely to respond with their own HBM4 timelines. The memory market typically advances quickly as companies seek to differentiate their offerings.

Industry analysts will be watching closely to see which AI companies adopt Samsung's HBM4 first and how this affects the competitive landscape among memory manufacturers.

Broader AI Infrastructure Trends

The HBM4 announcement reflects the broader trend of specialized hardware development for AI workloads. As AI models grow larger and more complex, the demand for high-performance memory continues to increase.

This competition among memory manufacturers should ultimately benefit AI companies by putting downward pressure on prices and improving the performance of the underlying hardware.

The memory technology race is just one front in the broader AI hardware competition, where companies are simultaneously developing specialized chips, interconnects, and cooling solutions to support increasingly demanding AI workloads.
