As engineering teams face alert overload, AI-powered tools like Sonarly are attempting to automate triage and fixes—but questions remain about reliability and developer displacement.

Engineering teams drowning in production alerts have long sought better solutions than pager fatigue and manual triage. A new category of tools promises not just to filter noise but to resolve issues autonomously. Sonarly, recently backed by Y Combinator, represents this emerging approach: connecting directly to observability platforms like Datadog and Sentry, using AI to diagnose issues, and even submitting code fixes via GitHub pull requests.

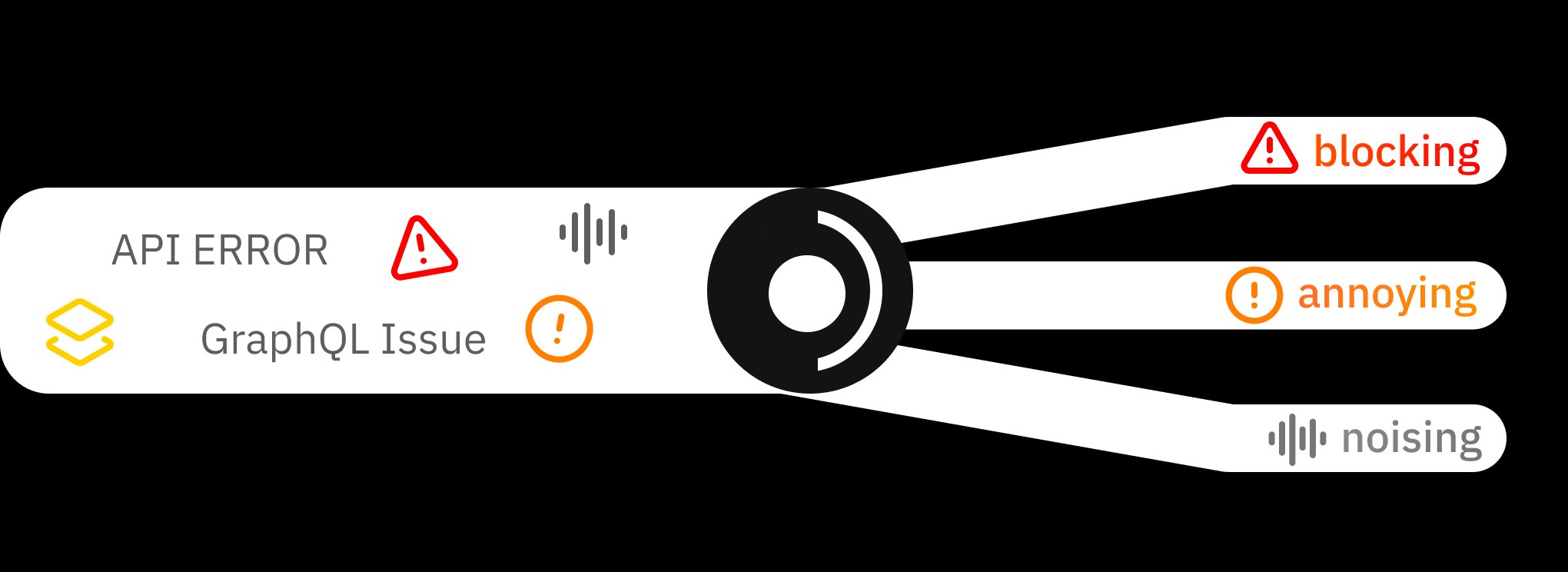

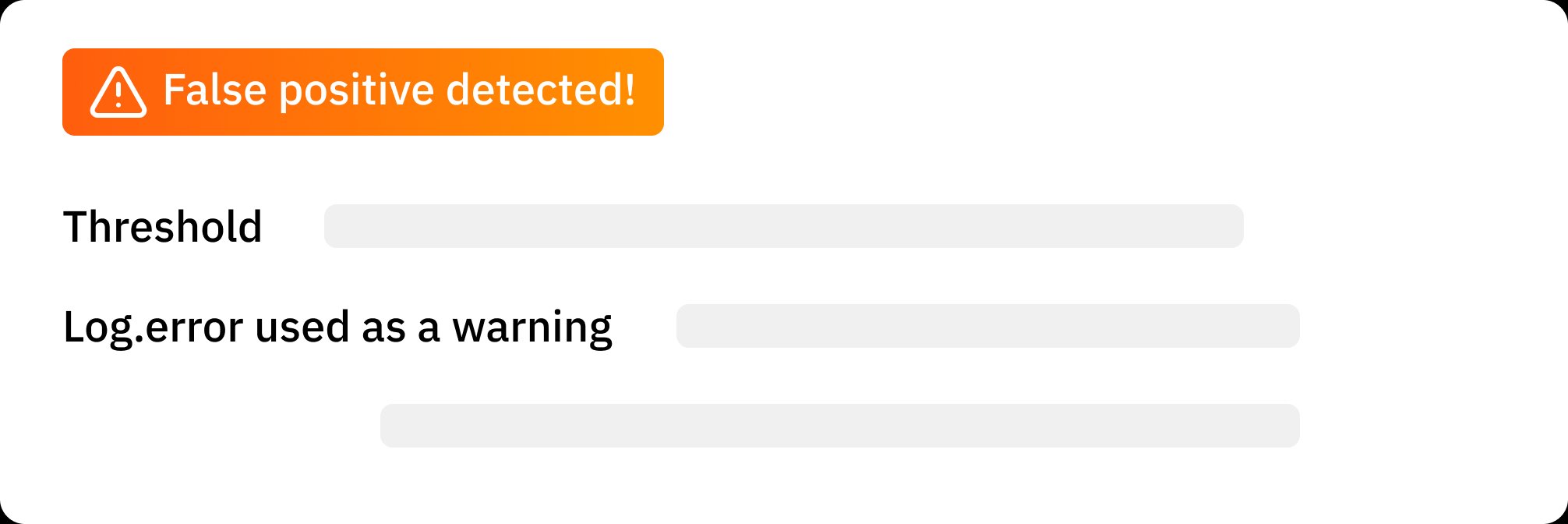

This automation trend responds to legitimate pain points. Modern distributed systems generate overwhelming alert volumes, with studies showing up to 80% being false positives or low-priority noise. Sonarly's process illustrates the ambition: After ingesting alerts with full context—stack traces, logs, and user data—it clusters duplicates and filters false positives.  Crucially, it then attempts to correlate errors with codebase changes, diagnose root causes, and generate fixes without human intervention.
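
The deduplication step described above can be illustrated with a minimal sketch. This is not Sonarly's actual implementation, which the article does not describe; the `Alert` fields, the `fingerprint` and `cluster_alerts` helpers, and the sample values are all hypothetical. It assumes that alerts sharing an error type, a top stack frame, and a message that differs only in numbers are duplicates of one underlying issue.

```python
import hashlib
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """Hypothetical, simplified alert record (not a real Datadog/Sentry schema)."""
    source: str      # illustrative label, e.g. "sentry" or "datadog"
    error_type: str  # e.g. "TimeoutError"
    message: str
    top_frame: str   # e.g. "billing/charge.py:charge_card"


def fingerprint(alert: Alert) -> str:
    """Hash the stable parts of an alert so repeats collapse together."""
    # Replace digit runs so messages differing only in IDs or durations match.
    normalized = re.sub(r"\d+", "<n>", alert.message.lower())
    key = "|".join((alert.error_type, alert.top_frame, normalized))
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:12]


def cluster_alerts(alerts):
    """Group alerts by fingerprint; each group is one candidate issue."""
    clusters = defaultdict(list)
    for alert in alerts:
        clusters[fingerprint(alert)].append(alert)
    return dict(clusters)


alerts = [
    Alert("sentry", "TimeoutError", "Request 123 timed out after 30s",
          "billing/charge.py:charge_card"),
    Alert("datadog", "TimeoutError", "Request 456 timed out after 31s",
          "billing/charge.py:charge_card"),
    Alert("sentry", "KeyError", "missing key 'user_id'",
          "auth/session.py:load_session"),
]
clusters = cluster_alerts(alerts)
```

Here the two timeout alerts fall into one cluster even though they come from different sources, while the `KeyError` stays separate. A real system would likely need fuzzier matching than exact hashing, plus a separate signal for deciding which clusters are false positives.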

The appeal is clear for overburdened teams. By reducing mean time to resolution (MTTR) through automated PRs, tools like this could potentially reclaim hundreds of engineering hours monthly. Sonarly's pricing tiers—including a free offering for small projects—signal a focus on accessibility.  Its claim of updating alert configurations over time to improve signal quality suggests adaptive learning, theoretically making systems smarter with each incident.

Yet skepticism persists. Veteran engineers question whether AI agents can reliably understand nuanced business logic or complex system interactions well enough to safely deploy fixes. "Automating triage is achievable," notes DevOps lead Maria Chen, "but autonomous code changes introduce new risks. A misdiagnosed fix could cascade into worse failures." Others express concern about losing critical debugging skills if engineers become overly reliant on automated solutions.

Security implications also warrant scrutiny. While Sonarly mentions SOC 2 compliance and enterprise security options, the model of granting an AI agent write access to codebases demands rigorous access controls.  As with any system processing sensitive production data, the potential for data leakage or malicious manipulation requires robust safeguards.

Despite these concerns, adoption signals are emerging. Early users report significant reductions in alert fatigue, with one startup CTO noting a 70% drop in manual triage tasks. But the most telling metric remains unresolved: Can such tools consistently deliver accurate fixes without human oversight?  As Sonarly and competitors evolve, they'll need to prove their solutions handle edge cases and high-stakes errors as effectively as routine bugs.

The trajectory suggests a hybrid future. Rather than replacing engineers, these tools may shift developer focus from reactive firefighting to higher-value system design—but only if they demonstrably reduce risk instead of creating new failure modes. For now, the promise of autonomous alert resolution remains compelling yet cautiously received by teams balancing efficiency against operational control.
