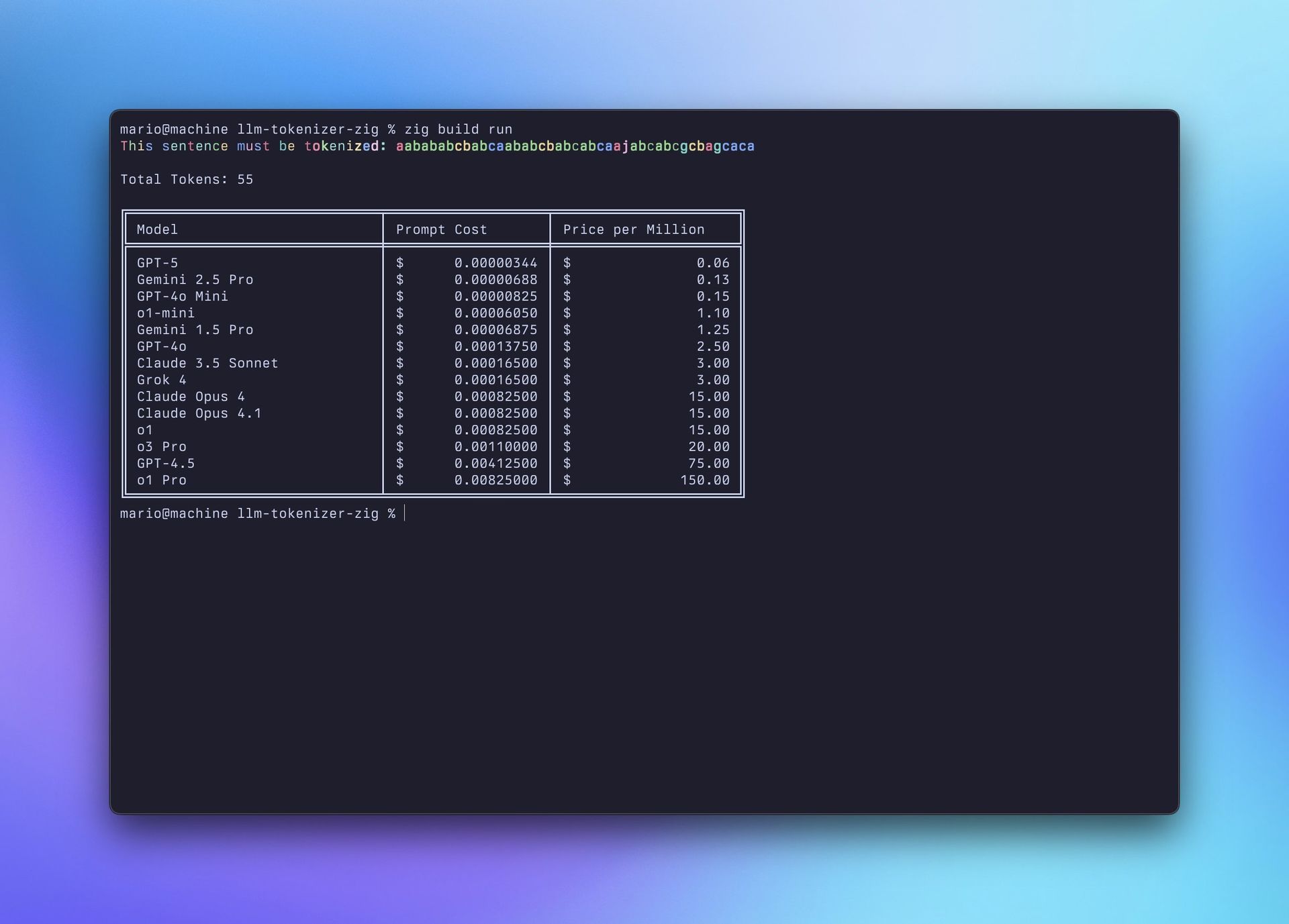

A new open-source project implements a Byte Pair Encoding tokenizer in Zig, offering developers a dependency-free way to tokenize text and estimate costs across major LLM providers. The tool visualizes tokenization with ANSI colors and calculates pricing per prompt, addressing a key pain point in AI development.

Zig Emerges in AI Tooling with LLM Tokenizer and Pricing Calculator

As large language models dominate AI workflows, developers face two persistent challenges: understanding tokenization behavior and predicting inference costs. A new GitHub project tackles both with a pure Zig implementation of Byte Pair Encoding (BPE) tokenization coupled with a multi-provider pricing calculator.

Why Tokenization Matters

Tokenization, the process of converting text into the discrete tokens a model actually processes, directly affects both LLM performance and cost. Unexpected token counts can derail budgets, especially with proprietary models like GPT-4 or Claude that bill per token. This tool demystifies the process by:

- Implementing BPE using only Zig's standard library

- Visualizing tokens with ANSI color coding

- Estimating prompt costs from each provider's price per million tokens
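For readers new to BPE, the first item works roughly like this: start from raw bytes and repeatedly replace the most frequent adjacent pair with a new token id. The Zig sketch below is not the project's code. It illustrates the idea under a few assumptions: byte values keep ids 0 to 255, merged tokens are numbered from 256, and the helper names (`pairCount`, `mergePair`, `train`) are hypothetical. It avoids allocators by rewriting a fixed buffer in place.

```zig
const std = @import("std");

// One learned merge: the adjacent pair (a, b) is replaced by new_id.
const Merge = struct { a: u32, b: u32, new_id: u32 };

const TrainResult = struct { len: usize, merge_count: usize };

// Hypothetical helper: count (possibly overlapping) occurrences of the pair (a, b).
fn pairCount(ids: []const u32, a: u32, b: u32) usize {
    var n: usize = 0;
    var i: usize = 0;
    while (i + 1 < ids.len) : (i += 1) {
        if (ids[i] == a and ids[i + 1] == b) n += 1;
    }
    return n;
}

// Hypothetical helper: scanning left to right, replace each (a, b) pair with new_id.
// Safe in place because the write index never overtakes the read index.
// Returns the new length.
fn mergePair(ids: []u32, a: u32, b: u32, new_id: u32) usize {
    var read: usize = 0;
    var write: usize = 0;
    while (read < ids.len) {
        if (read + 1 < ids.len and ids[read] == a and ids[read + 1] == b) {
            ids[write] = new_id;
            read += 2;
        } else {
            ids[write] = ids[read];
            read += 1;
        }
        write += 1;
    }
    return write;
}

// Hypothetical helper: merge the most frequent pair until no pair occurs more
// than once (or the merge table is full). Ties go to the leftmost pair.
fn train(ids: []u32, merges: []Merge) TrainResult {
    var len = ids.len;
    var next_id: u32 = 256; // assumption: ids 0-255 are raw bytes
    var count: usize = 0;
    while (count < merges.len) {
        var best_a: u32 = 0;
        var best_b: u32 = 0;
        var best_n: usize = 0;
        var i: usize = 0;
        while (i + 1 < len) : (i += 1) {
            const n = pairCount(ids[0..len], ids[i], ids[i + 1]);
            if (n > best_n) {
                best_n = n;
                best_a = ids[i];
                best_b = ids[i + 1];
            }
        }
        if (best_n < 2) break; // stopping rule: no pair occurs more than once
        len = mergePair(ids[0..len], best_a, best_b, next_id);
        merges[count] = .{ .a = best_a, .b = best_b, .new_id = next_id };
        count += 1;
        next_id += 1;
    }
    return .{ .len = len, .merge_count = count };
}

pub fn main() void {
    const text: []const u8 = "aaabdaaabac";
    var ids: [64]u32 = undefined;
    for (text, 0..) |byte, i| ids[i] = byte;
    var merges: [16]Merge = undefined;
    const result = train(ids[0..text.len], &merges);
    for (merges[0..result.merge_count]) |m| {
        std.debug.print("merge ({d}, {d}) -> {d}\n", .{ m.a, m.b, m.new_id });
    }
    std.debug.print("{d} bytes -> {d} tokens\n", .{ text.len, result.len });
}
```

On the classic input `aaabdaaabac`, this loop learns three merges and shrinks 11 bytes to 5 tokens. Production tokenizers usually learn merges on a large corpus up to a target vocabulary size and then apply those learned merges to new text, rather than stopping when no pair repeats.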

Inside the Implementation

The tokenizer works by iteratively merging the most frequent adjacent pair of tokens (initially single bytes) into a new token until no pair occurs more than once. This follows the same byte-level BPE approach used by many commercial LLM tokenizers while avoiding external dependencies, a deliberate choice that relies solely on Zig's standard library. The distinguishing feature, however, is the integrated pricing module, which keeps each model's rate in a simple table:

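```zig
// Assumed definition: the article shows only the table, not the Model type.
const Model = struct {
    name: []const u8,
    price_per_million: f64, // price per million tokens (USD assumed)
};

const models = [_]Model{
    .{ .name = "GPT-4o", .price_per_million = 10.00 },
    .{ .name = "Claude 3 Opus", .price_per_million = 75.00 },
    .{ .name = "Llama 3 70B", .price_per_million = 0.90 },
};
```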
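Given such a table, turning a token count into a price is a single multiplication: cost = tokens × price_per_million / 1,000,000. The sketch below assumes `price_per_million` is one US-dollar rate per million tokens, as the snippet suggests (real providers usually price input and output tokens separately). The `Model` field types, the `costFor` helper, and the 1,250-token count are illustrative assumptions, not the project's code.

```zig
const std = @import("std");

// Assumed shape of the Model type; only the field names come from the article.
const Model = struct {
    name: []const u8,
    price_per_million: f64, // assumed: US dollars per one million tokens
};

const models = [_]Model{
    .{ .name = "GPT-4o", .price_per_million = 10.00 },
    .{ .name = "Claude 3 Opus", .price_per_million = 75.00 },
    .{ .name = "Llama 3 70B", .price_per_million = 0.90 },
};

// Hypothetical helper: dollar cost of `tokens` tokens at the model's rate.
fn costFor(model: Model, tokens: u64) f64 {
    const t: f64 = @floatFromInt(tokens);
    return t * model.price_per_million / 1_000_000.0;
}

pub fn main() void {
    // Example count; in the project this would come from the tokenizer.
    const token_count: u64 = 1_250;
    for (models) |m| {
        std.debug.print("{s}: ${d:.6}\n", .{ m.name, costFor(m, token_count) });
    }
}
```

For 1,250 tokens, the GPT-4o row works out to 1,250 × 10.00 / 1,000,000 = $0.0125. Keeping prices in a plain array means adding a provider is a one-line change.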

Developers add their text to `src/prompt.txt` and run `zig build run`; the program then prints the tokenized text with each token color-coded, followed by a table comparing the prompt's cost across the listed models.
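Color-coding tokens in a terminal typically means wrapping each token in an ANSI SGR escape sequence (`ESC[<code>m`) and resetting afterward with `ESC[0m`. This sketch cycles through background colors 41 to 46 (red, green, yellow, blue, magenta, cyan) so adjacent tokens stay distinguishable. The palette, the `colorize` helper, and the hard-coded token list are assumptions, not the project's implementation.

```zig
const std = @import("std");

// Assumed palette: SGR background color codes 41-46.
const palette = [_]u8{ 41, 42, 43, 44, 45, 46 };

// Hypothetical helper: write `token` wrapped in a background color chosen by its
// position, followed by the SGR reset sequence, into `buf`.
fn colorize(buf: []u8, token: []const u8, index: usize) ![]u8 {
    const code = palette[index % palette.len];
    return std.fmt.bufPrint(buf, "\x1b[{d}m{s}\x1b[0m", .{ code, token });
}

pub fn main() !void {
    // Example token boundaries; in the project these would come from the BPE tokenizer.
    const tokens = [_][]const u8{ "Hello", ",", " world", "!" };
    var buf: [64]u8 = undefined;
    for (tokens, 0..) |tok, i| {
        std.debug.print("{s}", .{try colorize(&buf, tok, i)});
    }
    std.debug.print("\n", .{});
}
```

Coloring by position rather than by token id keeps neighbors visually distinct even when the same token repeats; coloring by id would instead highlight repeated tokens.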

The Zig Advantage

Choosing Zig offers tangible benefits for such infrastructure tools:

- Zero-Dependency Reliability: Avoids version conflicts in production pipelines

- Performance Characteristics: Ahead-of-time native compilation, with no garbage collector or heavyweight runtime, keeps tokenization fast

- Emerging Ecosystem: Demonstrates Zig's viability for AI/ML tooling

Next Steps and Implications

The project roadmap includes more flexible file input and command-line arguments, both critical for pipeline integration. For teams building LLM applications, this represents more than a utility: it is a template for cost-transparent AI tooling. As token economics increasingly dictate project feasibility, such open-source instrumentation is becoming essential infrastructure.

Source: GitHub Repository
