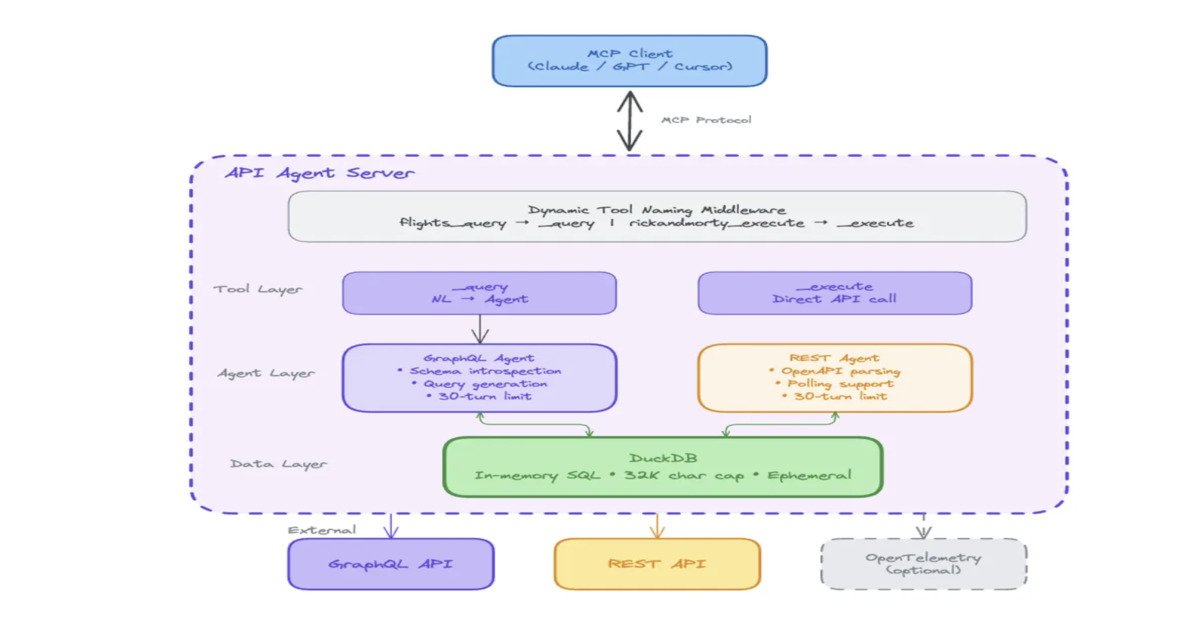

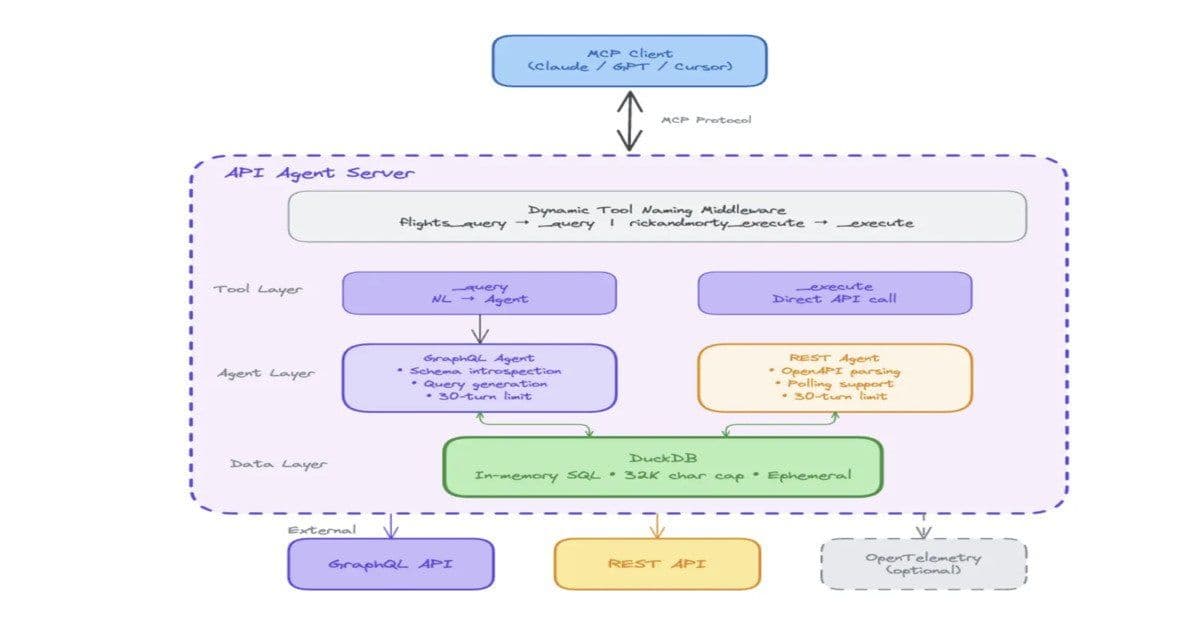

Agoda engineers have developed API Agent, a system that transforms any REST or GraphQL API into a Model Context Protocol (MCP) server without requiring code or deployments, enabling AI assistants to query internal services through natural language.

Zero-Code API Integration

The core innovation of API Agent lies in its ability to automatically introspect API schemas and generate queries from natural language input. Engineers configure the MCP client with a target URL and API type, and the agent handles the rest. This eliminates the need to build individual MCP servers for each API, significantly reducing operational overhead.

The system operates as a universal MCP server where a single deployment can serve multiple APIs simultaneously. Each API appears as a separate MCP server to clients while sharing the same instance. Adding new APIs requires only configuration updates, not code changes or redeployments.
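
To make the "one deployment, many APIs" idea concrete, here is a minimal sketch of a configuration registry. The class names, field names, and example URLs are all hypothetical and are not taken from API Agent; the only details from the article are that each API is described by a target URL and an API type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiTarget:
    """One configured API. Field names are illustrative, not API Agent's."""
    name: str
    url: str
    api_type: str  # "graphql" or "rest"


class TargetRegistry:
    """Maps a client-visible server name to its target inside one process."""

    def __init__(self):
        self._targets = {}

    def register(self, target):
        if target.api_type not in ("graphql", "rest"):
            raise ValueError(f"unsupported api_type: {target.api_type!r}")
        self._targets[target.name] = target

    def resolve(self, name):
        try:
            return self._targets[name]
        except KeyError:
            raise KeyError(f"no API configured under {name!r}") from None

    def names(self):
        return sorted(self._targets)


# Adding an API is a data change only; no new server code is written.
registry = TargetRegistry()
registry.register(ApiTarget("bookings", "https://bookings.example.internal/graphql", "graphql"))
registry.register(ApiTarget("inventory", "https://inventory.example.internal/openapi.json", "rest"))
```

In this sketch, each registered name would be presented to clients as its own MCP server while every request is handled by the same process.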

Dynamic Schema Introspection

API Agent's schema introspection module treats GraphQL and REST APIs differently:

- GraphQL APIs: The agent extracts types, fields, and input parameters directly from the schema

- REST APIs: It uses OpenAPI specifications or JSON response examples to understand the API structure

This dynamic approach means the agent can construct queries without requiring prebuilt adapters or manual schema definitions. The system automatically adapts to API changes, maintaining compatibility without intervention.
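
As an illustration of the GraphQL case, the sketch below flattens a standard introspection response (the `__schema.types` shape defined by the GraphQL specification) into compact one-line field signatures that a model could read. The function names and the output format are assumptions made for this example, not API Agent's actual representation.

```python
def unwrap_type(type_ref):
    """Render an introspection type reference such as [Hotel]! as text."""
    kind = type_ref.get("kind")
    if kind == "NON_NULL":
        return unwrap_type(type_ref["ofType"]) + "!"
    if kind == "LIST":
        return "[" + unwrap_type(type_ref["ofType"]) + "]"
    return type_ref.get("name") or "Unknown"


def summarize_schema(introspection):
    """Return one 'Type.field(args): ReturnType' line per object field."""
    lines = []
    for t in introspection["data"]["__schema"]["types"]:
        # Skip built-in introspection types (__Schema, __Type, ...) and non-objects.
        if t["name"].startswith("__") or t.get("kind") != "OBJECT":
            continue
        for field in t.get("fields") or []:
            args = ", ".join(
                f"{a['name']}: {unwrap_type(a['type'])}" for a in field.get("args") or []
            )
            signature = f"({args})" if args else ""
            lines.append(
                f"{t['name']}.{field['name']}{signature}: {unwrap_type(field['type'])}"
            )
    return lines


# A tiny hand-written introspection result, used only for demonstration.
sample = {"data": {"__schema": {"types": [
    {"kind": "OBJECT", "name": "Query", "fields": [{
        "name": "hotels",
        "args": [{"name": "city", "type": {
            "kind": "NON_NULL", "name": None,
            "ofType": {"kind": "SCALAR", "name": "String", "ofType": None}}}],
        "type": {"kind": "LIST", "name": None,
                 "ofType": {"kind": "OBJECT", "name": "Hotel", "ofType": None}},
    }]},
    {"kind": "OBJECT", "name": "__Type", "fields": []},
    {"kind": "SCALAR", "name": "String", "fields": None},
]}}}

schema_lines = summarize_schema(sample)
```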

Technical Stack and Architecture

The implementation leverages several key technologies:

- FastMCP: Provides the MCP server framework

- OpenAI Agents SDK: Handles language model orchestration

- DuckDB: Used for in-memory SQL post-processing

Additional features include dynamic tool naming, schema search for large APIs, turn tracking for multi-step queries, and comprehensive observability through OpenTelemetry, Jaeger, Zipkin, Grafana Tempo, or Arize Phoenix.
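
The article does not say how schema search is implemented. One simple possibility, shown purely as a sketch, is keyword scoring over flattened schema lines so that only the most relevant entries of a large schema reach the model. The function name and the scoring rule are assumptions.

```python
import re


def search_schema(lines, query, limit=5):
    """Rank schema lines by how many query terms they contain."""
    terms = re.findall(r"[a-z0-9]+", query.lower())
    scored = []
    for line in lines:
        haystack = line.lower()
        score = sum(1 for term in terms if term in haystack)
        if score:
            scored.append((score, line))
    # Highest score first; ties are broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [line for _, line in scored[:limit]]


schema_lines = [
    "Query.hotels(city: String!): [Hotel]",
    "Query.bookings(userId: ID!): [Booking]",
    "Hotel.price: Float",
]
matches = search_schema(schema_lines, "hotel price")
```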

SQL-Based Context Management

API Agent uses SQL to manage context. Large language models have limited context windows, and an API response containing thousands of rows can easily exceed them. API Agent addresses this by:

- Storing full API responses in DuckDB

- Using SQL to filter and aggregate data

- Sending only the concise, relevant results to the model

DuckDB runs in-process, handles JSON natively, and infers schemas automatically. Because SQL is declarative, the model only has to describe the result it wants, and the system avoids executing arbitrary generated code.
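
The store, filter, and summarize pattern can be sketched in a few lines. To keep the example runnable with only the Python standard library, it uses `sqlite3` as a stand-in for DuckDB, so the table must be created by hand instead of relying on DuckDB's automatic schema inference. The table name, column names, and data are invented for illustration.

```python
import json
import sqlite3


def store_rows(conn, table, rows):
    """Create a table from the keys of the first row and load every row."""
    columns = list(rows[0])
    col_sql = ", ".join(f'"{c}"' for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    conn.execute(f'CREATE TABLE "{table}" ({col_sql})')
    conn.executemany(
        f'INSERT INTO "{table}" VALUES ({placeholders})',
        [tuple(row.get(c) for c in columns) for row in rows],
    )


# Pretend API response: 3,000 rows, far too many to paste into a prompt.
response = json.dumps([
    {"city": ["Bangkok", "Tokyo", "Paris"][i % 3], "price": 50 + (i % 10)}
    for i in range(3000)
])

conn = sqlite3.connect(":memory:")
store_rows(conn, "hotels", json.loads(response))

# Only this three-row aggregate would be sent to the model.
summary = conn.execute(
    "SELECT city, COUNT(*) AS n, MIN(price) AS cheapest "
    "FROM hotels GROUP BY city ORDER BY city"
).fetchall()
```

The full response never enters the prompt; the model sees only the aggregate.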

Security by Default

API Agent operates in read-only mode by default. Mutations are blocked unless they are explicitly enabled and allowlisted for internal tools, so AI assistants cannot accidentally modify data or trigger unwanted actions.
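
A read-only guard of this kind might look like the sketch below. It is not API Agent's implementation: the `Policy` fields, the function names, the rule that any HTTP method other than GET, HEAD, or OPTIONS counts as a write, and the reading that a write needs both the flag and an allowlist entry are all assumptions. The GraphQL check is a small lexer, not a full parser, and it deliberately errs toward blocking.

```python
import re
from dataclasses import dataclass

# Strings and comments are matched first so that braces or the word
# "mutation" inside them are never mistaken for real syntax.
_TOKEN = re.compile(
    r'"""[\s\S]*?"""|"(?:\\.|[^"\\\n])*"|#[^\n]*|[{}]|\$?[A-Za-z_]\w*'
)


def contains_mutation(document):
    """Return True if a 'mutation' keyword appears at the top level."""
    depth = 0
    for match in _TOKEN.finditer(document):
        token = match.group()
        if token[0] in '"#':
            continue  # string literal or comment
        if token == "{":
            depth += 1
        elif token == "}":
            depth -= 1
        elif depth == 0 and token == "mutation":
            return True
    return False


@dataclass(frozen=True)
class Policy:
    """Hypothetical settings: read-only unless both fields allow a write."""
    allow_mutations: bool = False
    allowlisted_apis: frozenset = frozenset()


def is_permitted(api_name, policy, *, graphql_document=None, http_method=None):
    """Allow all reads; allow writes only when enabled and allowlisted."""
    if graphql_document is not None:
        is_write = contains_mutation(graphql_document)
    else:
        is_write = (http_method or "GET").upper() not in {"GET", "HEAD", "OPTIONS"}
    if not is_write:
        return True
    return policy.allow_mutations and api_name in policy.allowlisted_apis
```

With the default `Policy()`, a call such as `is_permitted("bookings", Policy(), http_method="DELETE")` is rejected, while plain queries pass through.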

Operational Insights and Best Practices

Based on real-world usage, Agoda engineers have identified several operational lessons:

- Clear communication: When responses are truncated, the system clearly indicates what was omitted

- Schema prioritization: The system prioritizes schema information over sample data for better accuracy

- SQL handling: Special attention is paid to SQL quirks and edge cases

- Error transparency: Full error messages are exposed to help LLMs correct their queries

- Recipe optimization: Repeated queries are captured as parameterized "recipes," reducing reasoning time and latency

- Direct return options: Filtered data can bypass summarization when appropriate
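
Two of these lessons, explicit truncation notices and parameterized recipes, are easy to illustrate. The sketch below is hypothetical: the `Recipe` class, the result fields, and the sample table are invented for the example, and `sqlite3` again stands in for DuckDB so the code runs with the standard library alone.

```python
import sqlite3
from dataclasses import dataclass


def truncate_with_notice(rows, limit):
    """Return at most `limit` rows plus an explicit note about what was dropped."""
    omitted = max(len(rows) - limit, 0)
    result = {"rows": rows[:limit], "total_rows": len(rows), "omitted_rows": omitted}
    if omitted:
        result["note"] = f"Showing first {limit} of {len(rows)} rows; {omitted} omitted."
    return result


@dataclass(frozen=True)
class Recipe:
    """A previously successful query saved with named parameters."""
    name: str
    sql: str  # uses :param placeholders that are bound at run time

    def run(self, conn, **params):
        return conn.execute(self.sql, params).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE hotels (city TEXT, name TEXT, price INTEGER)")
conn.executemany("INSERT INTO hotels VALUES (?, ?, ?)", [
    ("Bangkok", "A", 40), ("Bangkok", "B", 55), ("Bangkok", "C", 70), ("Tokyo", "D", 90),
])

cheapest_in_city = Recipe(
    name="cheapest_in_city",
    sql="SELECT name, price FROM hotels WHERE city = :city ORDER BY price LIMIT :n",
)

# Replaying the recipe with new parameters skips the model's query-writing step.
rows = cheapest_in_city.run(conn, city="Bangkok", n=5)
reply = truncate_with_notice(rows, limit=2)
```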

Multi-Endpoint Query Support

API Agent supports complex queries across multiple endpoints in a single session. This includes handling joins and aggregations across different services. The SQL-based post-processing approach avoids the need for sandboxing, network isolation, and dependency management that would be required with traditional code-based approaches.
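
A cross-endpoint join can be sketched as follows, with responses from two hypothetical endpoints loaded as separate tables and combined in one query. The endpoint data, table names, and columns are invented, and `sqlite3` stands in for DuckDB.

```python
import sqlite3

# Pretend responses from two different services.
bookings = [
    {"hotel_id": 1, "nights": 2},
    {"hotel_id": 1, "nights": 3},
    {"hotel_id": 2, "nights": 1},
]
hotels = [{"id": 1, "city": "Bangkok"}, {"id": 2, "city": "Tokyo"}]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bookings (hotel_id INTEGER, nights INTEGER)")
conn.execute("CREATE TABLE hotels (id INTEGER, city TEXT)")
conn.executemany("INSERT INTO bookings VALUES (:hotel_id, :nights)", bookings)
conn.executemany("INSERT INTO hotels VALUES (:id, :city)", hotels)

# The join and aggregation run in the database engine, not in generated code.
nights_by_city = conn.execute("""
    SELECT h.city, SUM(b.nights) AS nights
    FROM bookings AS b
    JOIN hotels AS h ON h.id = b.hotel_id
    GROUP BY h.city
    ORDER BY h.city
""").fetchall()
```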

Open Source Availability

The project is open source under the name api-agent and supports experimentation with both REST and GraphQL APIs. Other organizations can use it for their own AI integrations without building similar systems from scratch.

Industry Context and Related Developments

API Agent lowers the barrier to entry in the Model Context Protocol ecosystem. As more organizations integrate AI assistants with internal tools and data sources, tools that remove per-API integration work become more valuable.

The system aligns with broader industry trends toward agentic AI and the need for standardized protocols that enable AI systems to interact with diverse data sources. Similar developments include:

- Google's push for gRPC support in MCP: Expanding protocol capabilities

- Microsoft's Azure Functions support for MCP servers: Enterprise integration

- OpenAI and Anthropic's healthcare-focused AI platforms: Industry-specific applications

Technical Deep Dive: How It Works

When a user submits a natural language query, API Agent follows this process:

- Query Analysis: The language model interprets the natural language request

- Schema Retrieval: The agent introspects the target API to understand available endpoints and data structures

- Query Generation: Based on the schema and user intent, the agent generates appropriate API calls

- Data Collection: Multiple API calls may be executed to gather all necessary data

- SQL Processing: Results are stored in DuckDB and processed using SQL to filter, join, and aggregate as needed

- Response Generation: The final, concise result is returned to the user

This approach allows for complex multi-step reasoning while maintaining performance and staying within LLM context limits.
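
The flow of data through these steps can be sketched as a small orchestration function. Every name here is hypothetical, and each stage is passed in as a callable so that the example runs without a language model or a network; in a real system the planning and summarizing stages would call the model.

```python
def answer_question(question, *, introspect, plan_calls, call_api, run_sql, summarize):
    """Run the steps in order; every dependency is supplied by the caller."""
    schema = introspect()                        # schema retrieval
    plan = plan_calls(question, schema)          # query analysis and generation
    tables = {                                   # data collection
        step["table"]: call_api(step["request"]) for step in plan["calls"]
    }
    rows = run_sql(plan["sql"], tables)          # SQL processing
    return summarize(question, rows)             # response generation


# Stand-in stages with canned behavior, for demonstration only.
result = answer_question(
    "What is the cheapest hotel?",
    introspect=lambda: ["GET /hotels -> [{name, price}]"],
    plan_calls=lambda question, schema: {
        "calls": [{"table": "hotels", "request": "GET /hotels"}],
        "sql": "SELECT name, price FROM hotels ORDER BY price LIMIT 1",
    },
    call_api=lambda request: [{"name": "A", "price": 40}, {"name": "B", "price": 55}],
    # The fake ignores the SQL text and mimics its result in plain Python.
    run_sql=lambda sql, tables: [min(tables["hotels"], key=lambda r: r["price"])],
    summarize=lambda question, rows: f"{rows[0]['name']} at {rows[0]['price']}",
)
```

Keeping the stages separate like this also makes each one easy to test or replace on its own.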

Benefits for Development Teams

API Agent offers several advantages for development teams looking to integrate AI capabilities:

- Reduced development time: No need to write custom MCP servers for each API

- Lower maintenance burden: Automatic schema introspection handles API changes

- Improved security: Read-only default mode with explicit mutation controls

- Better performance: SQL-based processing optimizes data handling

- Enhanced observability: Built-in monitoring and tracing capabilities

- Scalability: Single deployment can handle multiple APIs

Future Implications

The success of API Agent suggests a future where AI assistants can seamlessly integrate with any data source without requiring custom development work. This could accelerate the adoption of AI in enterprise environments where data often resides in diverse, legacy systems with varying API standards.

As the Model Context Protocol ecosystem evolves, solutions like API Agent that bridge the gap between natural language interfaces and structured data sources will likely become increasingly important. The combination of zero-code deployment, automatic schema handling, and SQL-based processing represents a compelling approach to this challenge.

The open-source nature of the project also means that the broader community can contribute to its evolution, potentially expanding support for additional API types, improving performance, and adding new features based on real-world usage patterns.
