Alibaba's Tongyi-MAI team has released Z-Image-Turbo, an open-source text-to-image model that generates photorealistic outputs in just 8 diffusion steps with sub-second latency. Ranked #1 among open-source models globally, it combines breakthroughs like Decoupled-DMD distillation and bilingual text rendering to make professional-grade image synthesis accessible on consumer GPUs.

Alibaba's Tongyi-MAI research team has unveiled Z-Image-Turbo, an open-source text-to-image model that generates photorealistic images in under a second using only eight diffusion steps. Released on November 26, 2025, the 6-billion-parameter model now ranks eighth overall on the Artificial Analysis Text-to-Image Leaderboard and first among open-source models. It is designed to run efficiently on consumer-grade GPUs with 16GB of memory, which could make high-fidelity AI image generation far more widely accessible.

At its core, Z-Image-Turbo leverages a novel architecture called S3-DiT (Scalable Single-Stream DiT), which unifies text, visual tokens, and image data into a single input stream. This design minimizes computational overhead while enabling the model to handle complex prompts with what developers describe as "robust instruction adherence." The breakthrough speed stems from Decoupled-DMD distillation, a technique that separates conditional guidance from distribution matching—slashing the typical 50+ step generation process to a mere eight steps without sacrificing quality. As Marcus Thompson, an AI researcher quoted on the project site, notes:

"Z-Image-Turbo's S3-DiT architecture is impressive. Running a 6B parameter model on 16GB VRAM while maintaining sub-second inference is exactly what the industry needed."
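The figures quoted above can be sanity-checked with some back-of-envelope arithmetic. The sketch below is only an estimate under stated assumptions: the byte widths per parameter are standard for each precision, but the article does not say which precision the model ships in, and the calculation counts weights only (no text encoder, VAE, or activation memory). The function names are illustrative and not part of any Z-Image-Turbo API.

```python
# Back-of-envelope estimates. Only the 6B parameter count, the 16 GB budget,
# and the 50 -> 8 step reduction come from the article; the rest is assumed.

PARAMS = 6_000_000_000   # parameter count cited in the article
VRAM_BUDGET_GB = 16      # consumer GPU memory cited in the article

# Assumed storage widths in bytes per parameter (precision is not stated).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_footprint_gb(params: int, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def step_reduction(baseline_steps: int, distilled_steps: int) -> float:
    """How many times fewer denoising passes the distilled model needs."""
    return baseline_steps / distilled_steps


if __name__ == "__main__":
    for name, width in BYTES_PER_PARAM.items():
        gb = weight_footprint_gb(PARAMS, width)
        verdict = "fits" if gb < VRAM_BUDGET_GB else "does not fit"
        print(f"{name}: {gb:.0f} GB of weights, {verdict} in {VRAM_BUDGET_GB} GB")
    print(f"{step_reduction(50, 8):.2f}x fewer denoising passes")
```

Under these assumptions, 16-bit weights take roughly 12 GB, which leaves some headroom on a 16GB card, while full 32-bit weights would not fit. Cutting 50 steps to 8 means about 6.25 times fewer forward passes per image.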

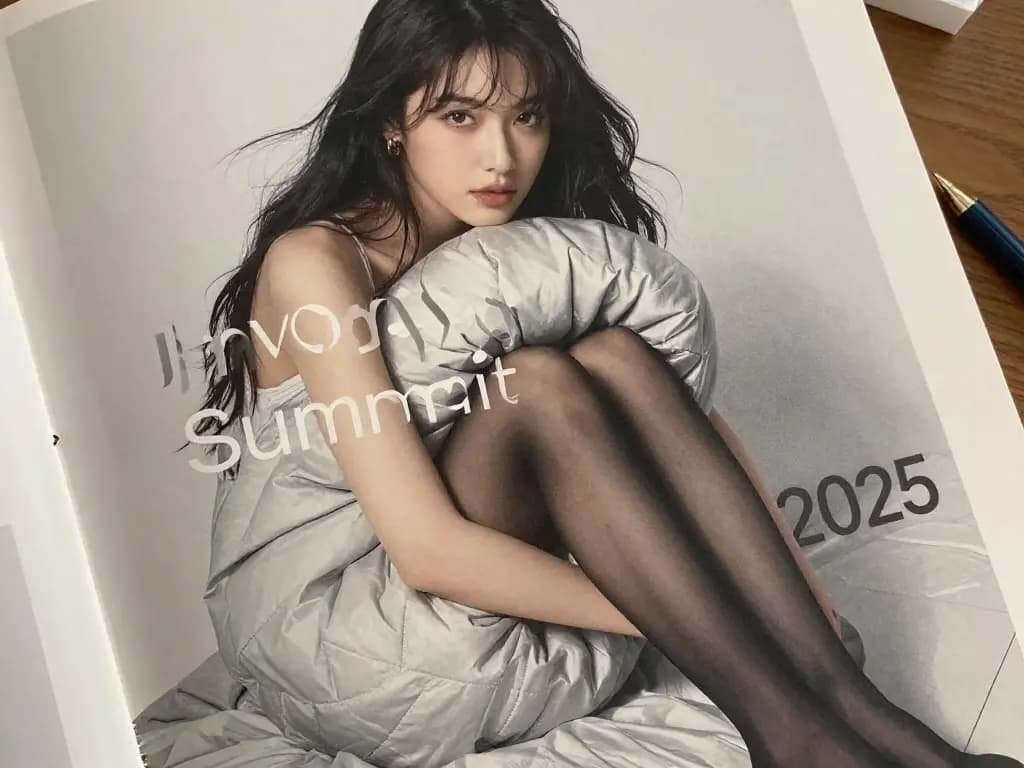

Beyond raw speed, Z-Image-Turbo addresses a persistent pain point in generative AI: accurate text rendering. Unlike many models that struggle with typography, it excels at embedding bilingual English and Chinese text directly into images, which suits designers creating posters, logos, or marketing materials. A sample image showcases this capability with crisp, professional typography in a minimalist design. The model also includes a Prompt Enhancer that interprets nuanced creative intent by tapping into underlying world knowledge, reducing the trial-and-error often associated with AI image tools.


For developers, the implications are profound. Z-Image-Turbo is released under the Apache-2.0 license, allowing full commercial use and customization via platforms like Hugging Face and ModelScope. Its memory efficiency—enhanced by Flash Attention support—enables deployment on affordable hardware, with API access priced at $0.005 per megapixel. This accessibility could accelerate adoption in e-commerce, where sellers generate product visuals without photoshoots, or social media, where creators produce scroll-stopping content in seconds. {{IMAGE:3}} illustrates a high-resolution product catalog image created with the tool.
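To put the quoted API price in perspective, here is a minimal cost estimate. It assumes that a megapixel means 1,000,000 pixels and that billing scales linearly with pixel count, with no rounding, minimum charge, or volume discount; the article states only the $0.005 per megapixel rate. The `image_cost_usd` helper is hypothetical and not part of any provider's SDK.

```python
from decimal import Decimal

PRICE_PER_MEGAPIXEL = Decimal("0.005")      # USD, rate quoted in the article
PIXELS_PER_MEGAPIXEL = Decimal(1_000_000)   # assumption: decimal megapixel


def image_cost_usd(width: int, height: int, count: int = 1) -> Decimal:
    """Estimated cost of `count` images of the given size.

    Assumes linear per-pixel billing with no rounding or minimum charge.
    """
    megapixels = Decimal(width * height) / PIXELS_PER_MEGAPIXEL
    return megapixels * PRICE_PER_MEGAPIXEL * count


if __name__ == "__main__":
    print(f"one 1024x1024 image: ${image_cost_usd(1024, 1024)}")
    print(f"1,000 such images:   ${image_cost_usd(1024, 1024, count=1000)}")
```

Under these assumptions, a single 1024x1024 image costs a little over half a cent, and a catalog of 1,000 such images comes to about $5.24.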

The model's DMDR framework (combining distribution matching distillation with reinforcement learning) further elevates output quality by refining semantic alignment and aesthetic details, as seen in complex scenes like the time-travel visualization in {{IMAGE:4}}. While competitors focus on scaling parameters, Tongyi-MAI's approach prioritizes efficiency and practicality, signaling a shift toward leaner, faster generative AI that doesn't compromise on realism. As open-source models like this lower barriers to entry, they could reshape industries reliant on visual content, turning what once required specialized infrastructure into an on-demand resource.

Source: zimageturbo.com
