Modern e-commerce demands sub-second order processing, but traditional architectures struggle with the fundamental tension between speed and consistency. This article explores a distributed-systems approach using Taubyte that separates hot-path customer interactions from cold-path reconciliation, achieving low latency and eventual consistency with the system of record without compromising operational control.

Latency is a revenue killer in e-commerce. When a user clicks "Buy," they expect instant feedback. From a systems perspective, the goal is to keep the hot path (customer interaction) short, predictable, and failure-tolerant without compromising inventory correctness or auditability. Inspired by the article "Serverless Order Management using AWS Step Functions and DynamoDB," we take a sovereignty- and security-first approach to building a high-speed, resilient order workflow with Taubyte, optimized for the moment that matters: when a customer presses "Buy."

Separating Concerns: Hot vs Cold Path

Instead of coupling checkout to a centralized database write (or a long orchestration chain), we separate concerns:

- Hot path: Accept the order, take payment, reserve stock (fast, close to users)

- Cold path: Reconcile final state into the system of record, with retries and observability

The Challenge: Speed vs. Consistency

Traditional order processing systems face a fundamental trade-off:

- Fast systems can become risky (overselling, partial failures, messy recovery)

- Strongly consistent systems often feel slow because every step synchronizes on a central system of record

- Orchestrated workflows add hop count, state management overhead, and complicated failure handling

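The Taubyte-based architecture below addresses all three. It keeps the customer-facing path short and moves synchronization with the system of record into retryable background workers.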

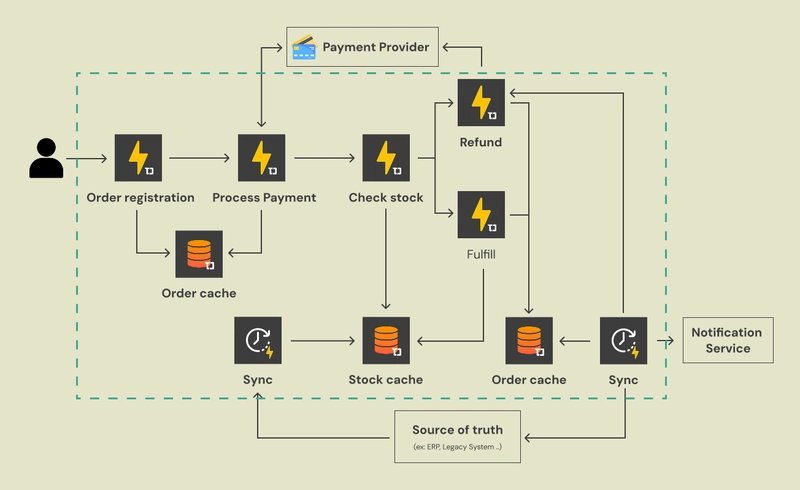

Architecture Overview

We remove the central database bottleneck from the checkout experience using a familiar distributed-systems pattern:

- Write-ahead state in a fast distributed store (orders, payment status, reservation state)

- Background convergence to the system of record (ERP/OMS/warehouse DB) with retries

Taubyte building blocks (architect view):

- Functions: Stateless request handlers and workflow steps (hot path + background steps)

- Distributed caches: Low-latency, globally available key-value stores for orders and stock state

- Scheduled jobs: Periodic reconciliation tasks (inbound inventory refresh, outbound order finalization)

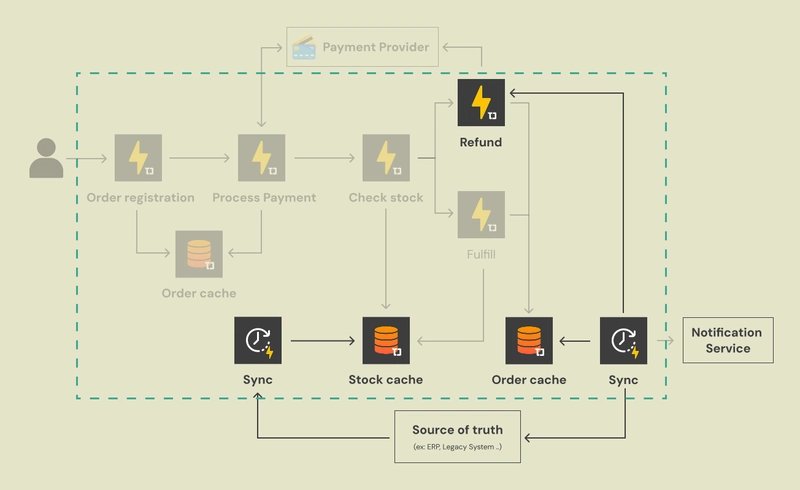

Below is the complete workflow we will be implementing:

Caption: The complete asynchronous order processing and synchronization workflow.

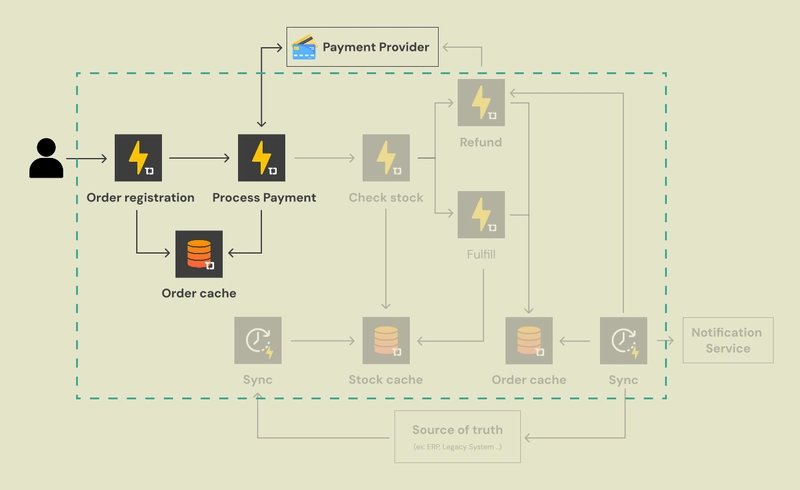

The Workflow: The Hot Path

The initial steps must be fast, because they directly impact conversion rate. Key design decision: Avoid blocking checkout on synchronous writes to the system of record.

1. Order Registration & Caching

The user submits an order, and a Taubyte function handles the request and responds immediately. Instead of writing to a heavyweight database, the function stores the order in the Order Cache, which acts as the workflow's durable working set.
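The following minimal sketch makes this step concrete. It is plain Python, not Taubyte's SDK. A dict stands in for the Order Cache, and `register_order`, the client-supplied `idempotency_key`, and the `"registered"` state name are illustrative assumptions. Because each order is keyed on an idempotency key, a retried "Buy" click returns the existing order instead of creating a duplicate.

```python
import time
import uuid


def register_order(order_cache: dict, request: dict) -> dict:
    """Accept an order, store it in the (stand-in) Order Cache, respond at once."""
    # Hypothetical: the client sends an idempotency key with each checkout attempt.
    key = request.get("idempotency_key") or str(uuid.uuid4())
    existing = order_cache.get(key)
    if existing is not None:
        # A retried submission gets the original order back, not a duplicate.
        return {"status": 202, "order_id": key, "state": existing["state"]}
    if not request.get("items"):
        return {"status": 400, "error": "order has no items"}
    order_cache[key] = {
        "order_id": key,
        "items": dict(request["items"]),  # sku -> quantity
        "state": "registered",
        "created_at": time.time(),
    }
    # Respond immediately (202 Accepted); payment and inventory steps run afterwards.
    return {"status": 202, "order_id": key, "state": "registered"}
```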

2. Payment Processing

A function calls your payment provider (e.g., Stripe). The result (success/failure) is written back into the Order Cache, keeping the workflow state centralized.
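Here is a sketch of the payment step under the same assumptions. `charge` is a hypothetical stand-in for a payment-provider client. The order ID is reused as the idempotency key, which providers such as Stripe accept on requests. That way, a retried step (for example after a crash between charging and updating the cache) cannot charge the customer twice.

```python
from typing import Callable, Dict


def process_payment(order_cache: dict, order_id: str,
                    charge: Callable[[str, Dict[str, int]], bool]) -> str:
    """Charge the customer once and record the outcome in the Order Cache."""
    order = order_cache[order_id]
    if order["state"] != "registered":
        # Payment already handled (e.g. this step is being retried): no new charge.
        return order["state"]
    # `charge` is hypothetical: (idempotency_key, items) -> success flag.
    # If it raises (say, a timeout), the state stays "registered" so the step
    # can be retried; the idempotency key lets the provider drop the duplicate.
    ok = charge(order_id, order["items"])
    order["state"] = "paid" if ok else "payment_failed"
    return order["state"]
```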

Caption: High-speed intake. User requests are stored in a fast distributed cache, decoupling the user from backend complexity.

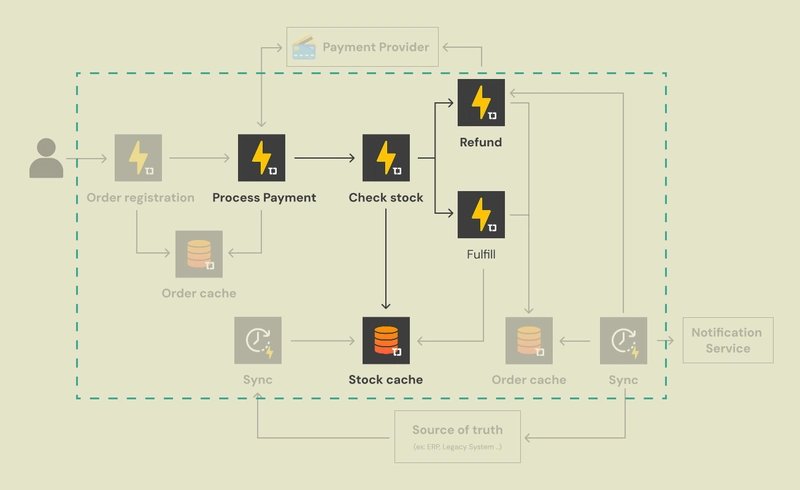

Inventory Decision Engine (Preventing Oversell)

Risk: Overselling.

Solution: Use a fast inventory working set and reservation semantics in the hot path, then reconcile with the back-office system afterward.

3. Inventory Check

Query the Stock Cache, a fast working set holding the latest item counts.

Logic:

- If payment succeeded and inventory ≥ 1: Proceed to fulfillment

- If inventory = 0 or payment failed: Proceed to refund
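The decision itself is a small pure function. This sketch generalizes "inventory ≥ 1" to "enough units for every line item," which is an assumption. The field names match the earlier sketches and are illustrative.

```python
def decide(order: dict, stock: dict) -> str:
    """Route an order to 'fulfill' or 'refund' based on payment and stock."""
    if order["state"] != "paid":
        return "refund"  # payment failed: take the failure path
    for sku, qty in order["items"].items():
        if stock.get(sku, 0) < qty:
            return "refund"  # not enough stock for at least one line item
    return "fulfill"
```

Another order can take the last unit between this check and the reservation, so the check is advisory. The reservation itself must re-check atomically.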

Branch A: Fulfillment (Happy Path)

- Reserve inventory immediately in the Stock Cache

- Release reservations on failure or timeout
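Reservation semantics can be sketched with an in-memory `StockCache` class. This class is hypothetical, not a Taubyte API. A lock makes the check-and-decrement atomic and all-or-nothing, and each hold carries a TTL so abandoned reservations are released. In a distributed store, the lock would have to be replaced by whatever atomic primitive the store offers (for example compare-and-swap); that is an assumption to verify against the store you use. Time is passed in as `now` to keep the behavior testable.

```python
import threading


class StockCache:
    """In-memory stand-in for the Stock Cache with TTL-bound reservations."""

    def __init__(self, available: dict):
        self.available = dict(available)  # sku -> units free to reserve
        self.reservations = {}            # order_id -> (items, expires_at)
        self._lock = threading.Lock()     # makes check-and-decrement atomic

    def reserve(self, order_id: str, items: dict, ttl: float, now: float) -> bool:
        with self._lock:
            if order_id in self.reservations:
                return True  # idempotent: this order already holds its units
            if any(self.available.get(s, 0) < q for s, q in items.items()):
                return False  # all-or-nothing: no partial reservations
            for sku, qty in items.items():
                self.available[sku] -= qty
            self.reservations[order_id] = (items, now + ttl)
            return True

    def confirm(self, order_id: str) -> None:
        """Order fulfilled: units are consumed, so drop the hold without restocking."""
        with self._lock:
            self.reservations.pop(order_id, None)

    def release(self, order_id: str) -> None:
        """Failure path: return the held units to the available pool."""
        with self._lock:
            entry = self.reservations.pop(order_id, None)
            if entry is not None:
                for sku, qty in entry[0].items():
                    self.available[sku] += qty

    def expire(self, now: float) -> list:
        """Release reservations whose TTL has passed; return their order IDs."""
        with self._lock:
            expired = [o for o, (_, exp) in self.reservations.items() if exp <= now]
        for order_id in expired:
            self.release(order_id)
        return expired
```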

Branch B: Refund (Failure Path)

- Trigger refund and notify the customer

Failure handling is built into the workflow, not bolted on as an afterthought.
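The failure path can be sketched as a compensating action. `refund` and `notify` are hypothetical callables. The sketch refunds only when money was actually taken, since a failed payment has nothing to refund. It treats `"refunded"` as the terminal failure state of the article's state model.

```python
from typing import Callable


def handle_failure(order: dict, refund: Callable[[str], None],
                   notify: Callable[[str, str], None]) -> str:
    """Failure path: refund only if the customer was charged, then notify."""
    if order["state"] == "refunded":
        return "refunded"  # already handled; a retry is a no-op
    if order["state"] == "paid":
        # Hypothetical provider call; the order ID serves as the idempotency key,
        # so a retry after a crash here does not refund twice.
        refund(order["order_id"])
        reason = "out_of_stock"
    else:
        reason = "payment_failed"  # nothing was charged, so nothing to refund
    notify(order["order_id"], reason)
    order["state"] = "refunded"  # terminal failure state in the article's model
    return order["state"]
```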

Caption: Decision engine uses a fast cache lookup to split the workflow into fulfillment or refund paths.

The Secret Sauce: Asynchronous Synchronization

Question: How do we guarantee correctness using a distributed working set?

Answer: Background reconciliation. Accept and process orders fast, then converge final state into the system of record with retryable, observable workers.

Inbound Sync: Keeping Stock Accurate

- Scheduled Taubyte job runs periodically (e.g., every 5 minutes)

- Pulls the latest inventory from the system of record (ERP/warehouse DB/API)

- Refreshes the Stock Cache to bound drift
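Here is a sketch of the inbound refresh. It assumes two things: the system of record reports on-hand counts per SKU, and the worker knows which orders are reserved or fulfilled but not yet synced out (`pending`). Subtracting those holds matters. The system of record has not seen them yet, so overwriting the cache with its raw counts would re-offer units already promised to customers. The schedule itself (e.g. every 5 minutes) would be Taubyte configuration and is not shown.

```python
def refresh_stock(stock_cache: dict, sor_counts: dict, pending: dict) -> dict:
    """Overwrite cached counts with system-of-record counts minus pending holds.

    sor_counts: sku -> on-hand units reported by the ERP/warehouse (hypothetical feed)
    pending:    order_id -> {sku: qty} reserved or fulfilled but not yet synced out
    Returns the per-SKU correction applied, useful as a drift metric.
    """
    held = {}
    for items in pending.values():
        for sku, qty in items.items():
            held[sku] = held.get(sku, 0) + qty
    drift = {}
    for sku, on_hand in sor_counts.items():
        fresh = max(on_hand - held.get(sku, 0), 0)
        if stock_cache.get(sku) != fresh:
            drift[sku] = fresh - stock_cache.get(sku, 0)
        stock_cache[sku] = fresh
    return drift
```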

Outbound Sync: Finalizing Orders

- Once an order reaches the fulfilled or refunded state, a background sync writes it to the system of record

- Uses idempotent updates keyed by dedup keys, so at-least-once delivery still yields effectively exactly-once accounting, plus a full audit trail
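The outbound side can be sketched as an idempotent write plus a retry wrapper. A dict and a list stand in for the system of record and the audit trail. `sync_order`, `with_retry`, and the `order_id:state` dedup key format are illustrative assumptions. Retries give at-least-once execution, and the dedup key turns repeated deliveries into no-ops, which is what makes the accounting effectively exactly-once.

```python
import random
import time


def sync_order(system_of_record: dict, audit_log: list, order: dict) -> bool:
    """Write a finished order to the system of record; safe to repeat.

    Returns True if this call changed the record, False for a duplicate.
    """
    if order["state"] not in ("fulfilled", "refunded"):
        raise ValueError(f"order {order['order_id']} is not final: {order['state']}")
    # Dedup key: order ID plus final state, so replaying a message is a no-op.
    dedup_key = f"{order['order_id']}:{order['state']}"
    current = system_of_record.get(order["order_id"])
    if current is not None and current["dedup_key"] == dedup_key:
        return False
    system_of_record[order["order_id"]] = {
        "dedup_key": dedup_key,
        "state": order["state"],
        "items": dict(order["items"]),
    }
    audit_log.append({"order_id": order["order_id"], "state": order["state"],
                      "synced_at": time.time()})
    return True


def with_retry(step, attempts: int = 5, base_delay: float = 0.5, sleep=time.sleep):
    """Run a background step with exponential backoff and full jitter.

    This gives at-least-once execution, which is only safe because the
    step itself (e.g. sync_order) is idempotent.
    """
    for attempt in range(attempts):
        try:
            return step()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface to alerting
            sleep(random.uniform(0, base_delay * 2 ** attempt))
```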

Caption: Background scheduled functions ensure caches are eventually consistent with the persistent database.

Why This Architecture Wins

Taubyte advantages:

- Speed: Lightweight functions + distributed caches → minimal startup overhead on the hot path

- Resilience: Orders can still be accepted and processed while the main database is offline; background sync catches up once it returns

- Operational Simplicity: The entire workflow is defined in code → repeatable, deterministic deployments

AWS vs Taubyte: Data Sovereignty & Control

| Topic | AWS | Taubyte |
|---|---|---|
| Control plane ownership | Provider-operated | Autopilot under your governance |
| Residency boundaries | Region selection + constraints | Hard boundaries: country/region/operator/on-prem |
| Compliance | Shared responsibility | Aligned to internal controls |
| Portability / lock-in | Higher lock-in | Lower lock-in, runs across environments you control |
| Failure domains | Provider regions/services | Architected around chosen failure domains |

AWS: great if "region choice" is enough

Taubyte: compelling if you need operational control & enforceable residency boundaries

Architectural Considerations

State model: Order Cache as workflow state machine (registered → paid → reserved → fulfilled/refunded → synced)

Idempotency: Safe retries for all steps

Delivery semantics: At-least-once for background jobs; use dedup keys

Reservation policy: TTLs, release rules, reconciliation behavior

Consistency guarantees: Strong consistency (reservation) vs eventual convergence (sync)

Back-pressure & throttling: Protect payment & system-of-record APIs

Observability: Correlation IDs, workflow metrics, alerts

Security boundaries: Isolate secrets, minimize PII, encryption + access controls
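The state model from the first consideration can be enforced with a transition table. This minimal sketch also touches on idempotency (repeating a transition is a no-op) and observability (each change emits one JSON log line keyed by a correlation ID). Three elements are assumptions, not part of the original design: the `payment_failed` state, the extra edges into `refunded`, and the use of the order ID as the correlation ID.

```python
import json
import time

# Allowed transitions. The article's model is
# registered -> paid -> reserved -> fulfilled/refunded -> synced; the
# "payment_failed" state and the paid/reserved -> refunded edges are
# assumptions added to cover the failure branch.
ALLOWED = {
    "registered": {"paid", "payment_failed"},
    "paid": {"reserved", "refunded"},
    "payment_failed": {"refunded"},
    "reserved": {"fulfilled", "refunded"},
    "fulfilled": {"synced"},
    "refunded": {"synced"},
    "synced": set(),
}


def transition(order: dict, new_state: str, log=print) -> bool:
    """Move an order to new_state if the state model allows it.

    Returns False for a repeated (already applied) transition, so retried
    steps are harmless; raises ValueError for an illegal jump.
    """
    current = order["state"]
    if new_state == current:
        return False
    if new_state not in ALLOWED.get(current, set()):
        raise ValueError(f"illegal transition {current} -> {new_state}")
    order["state"] = new_state
    # One structured line per step; the order ID doubles as the correlation ID.
    log(json.dumps({"ts": time.time(), "correlation_id": order["order_id"],
                    "from": current, "to": new_state}))
    return True
```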

Conclusion

With Taubyte, you can build a resilient, low-latency order system that:

- Keeps the customer path fast (hot path with lightweight functions & caches)

- Maintains accuracy in the system of record (background reconciliation)

This architecture scales with your business while minimizing operational complexity.

Next Steps

- Set up Taubyte locally: Dream for local cloud development

- Define your functions: Start with the order registration function (HTTP functions)

- Configure distributed caches: Order Cache & Stock Cache (databases)

- Implement sync workers: Scheduled functions for bidirectional sync

- Test & deploy: Use Taubyte local tools before production
