Ruby's package management ecosystem faces a pivotal performance challenge as Bundler seeks to rival the blazing speed of Python's uv. A deep technical analysis reveals how architectural constraints cause bottlenecks and what optimizations could rewrite Ruby's dependency management playbook.

When challenged at RailsWorld about why Ruby's Bundler couldn't match the speed of Python's uv package manager, Aaron Patterson launched a technical deep dive. His investigation, documented in a detailed analysis, reveals that Rust isn't uv's magic bullet—it's architectural decisions that Ruby's ecosystem could strategically adopt.

The Rust Red Herring

While uv leverages Rust's performance advantages, Patterson emphasizes that language choice alone doesn't explain its speed: "Plenty of tools are written in Rust without being notably fast." The real gains come from uv's design:

- Metadata-first resolution: uv resolves dependencies from static package metadata instead of following Python's legacy requirement to execute package code. RubyGems already uses YAML-based metadata, so Bundler does not have this bottleneck to remove.

- Constraint relaxation: uv ignores overly defensive upper-bound version constraints (like python<4.0), dramatically reducing resolver backtracking. Bundler could implement similar optimizations for Ruby version checks.
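To make the constraint-relaxation idea concrete on the Ruby side, here is a minimal sketch built on RubyGems' Gem::Requirement. The helper name relax_upper_bounds is hypothetical, and nothing here reflects an existing Bundler option; it only illustrates dropping the upper bound from a Ruby version requirement before it reaches the resolver.

```ruby
require "rubygems"

UPPER_BOUND_OPS = ["<", "<="].freeze

# Hypothetical helper: returns a copy of the requirement with its
# upper bounds removed. "~>" is treated as a plain lower bound.
def relax_upper_bounds(requirement)
  relaxed = requirement.requirements.filter_map do |op, version|
    next if UPPER_BOUND_OPS.include?(op)
    op == "~>" ? ">= #{version}" : "#{op} #{version}"
  end
  # If only upper bounds were present, nothing is left to enforce.
  relaxed = [">= 0"] if relaxed.empty?
  Gem::Requirement.new(*relaxed)
end

strict  = Gem::Requirement.new(">= 3.0", "< 4.0")
relaxed = relax_upper_bounds(strict)
relaxed.satisfied_by?(Gem::Version.new("4.1")) # upper bound no longer applies
```

The lower bound is kept because it usually reflects a real incompatibility, whereas an upper bound on a version that does not exist yet is a guess.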

Parallelism: Bundler's Missing Link

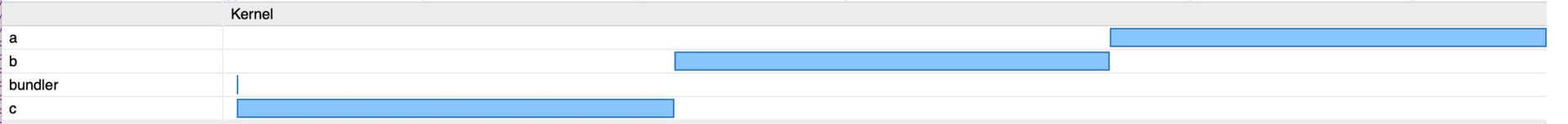

Bundler's critical bottleneck lies in its sequential installation process. When gems have deep dependency chains (A → B → C), downloads and installations occur in series:

Gem installation swim lanes showing serialized workflow (Source: Aaron Patterson)

Patterson demonstrates how this manifests: Installing three gems with nested dependencies takes 9+ seconds versus 4 seconds for independent gems. The culprit? Tight coupling of download/installation logic and conservative handling of native extensions:

```ruby
def install
  path = fetch_gem_if_not_cached # Blocks until download completes
  Bundler::RubyGemsGemInstaller.install path, dest
end
```

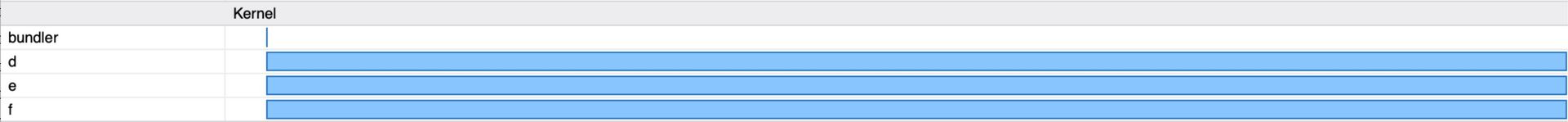

The solution? Decouple workflow into parallelizable stages:

- Download (.gem archives)

- Unpack (to temporary locations)

- Compile (native extensions only)

- Install (move to final location)

Pure-Ruby gems could skip dependency-ordering constraints entirely, while native extensions would pause until dependencies are met. Proof-of-concept implementations like Gel already demonstrate this approach:

Parallel installation potential with decoupled workflow (Source: Aaron Patterson)
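The staged workflow can be sketched in a few lines of plain Ruby. This is an illustration under stated assumptions, not Bundler's or Gel's implementation: GemSpec and staged_install are hypothetical names, each gem gets its own thread, and the block passed in stands in for the real work of each stage. Only native-extension gems wait for their dependencies, and they wait only before compiling.

```ruby
# Hypothetical gem description; not Bundler's real spec class.
GemSpec = Struct.new(:name, :deps, :native, keyword_init: true)

# Runs download, unpack, compile and install for every gem in parallel.
# The block receives (stage, gem_name) and performs the actual work.
def staged_install(specs)
  # One latch per gem. Closing the queue wakes every thread waiting on it.
  installed = specs.to_h { |spec| [spec.name, Queue.new] }

  threads = specs.map do |spec|
    Thread.new do
      yield :download, spec.name
      yield :unpack, spec.name
      if spec.native
        # Native extensions pause here until each dependency is installed.
        spec.deps.each { |dep| installed.fetch(dep).pop }
        yield :compile, spec.name
      end
      yield :install, spec.name
    ensure
      # Always release waiters so a failed stage cannot hang the others;
      # a real installer would also propagate the failure to dependents.
      installed.fetch(spec.name).close
    end
  end
  threads.each(&:join)
end

specs = [
  GemSpec.new(name: "a", deps: ["b"], native: true),
  GemSpec.new(name: "b", deps: ["c"], native: true),
  GemSpec.new(name: "c", deps: [], native: false),
]
events = Queue.new
staged_install(specs) { |stage, name| events << [stage, name] }
```

In this sketch all three downloads and unpacks start at once, and the A → B → C chain only serializes the compile steps.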

Non-Rust Optimizations Within Reach

Patterson identifies high-impact improvements needing no language change:

- Global cache unification: Replace version-specific gem caches with a shared $XDG_CACHE_HOME store, enabling hardlinking instead of redundant copies.

- Version integer encoding: Pack version numbers into 64-bit integers for faster comparisons, possible via YJIT/ZJIT optimization despite Ruby's object overhead.

- Resolver unification: Migrate RubyGems from Molinillo to Bundler's PubGrub implementation to consolidate logic.
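The version-encoding idea from the list above can be sketched as follows. The layout (four segments of 16 bits each) and the name pack_version are assumptions made for illustration; neither is taken from uv or from Bundler. Versions that do not fit the layout return nil so a caller can fall back to the regular Gem::Version comparison.

```ruby
require "rubygems"

SEGMENT_BITS = 16
MAX_SEGMENTS = 4
SEGMENT_MAX  = (1 << SEGMENT_BITS) - 1

# Hypothetical helper: packs "1.2.3" into a single Integer so that two
# versions compare with one integer comparison.
def pack_version(string)
  version  = Gem::Version.new(string)
  segments = version.segments
  # Prereleases and oversized versions take the slow path instead.
  return nil if version.prerelease? || segments.size > MAX_SEGMENTS
  return nil if segments.any? { |segment| segment > SEGMENT_MAX }

  # Pad with zeros so "2.0" and "2.0.0" encode identically.
  segments += [0] * (MAX_SEGMENTS - segments.size)
  segments.reduce(0) { |packed, segment| (packed << SEGMENT_BITS) | segment }
end

pack_version("1.10.0") > pack_version("1.9.9") # numeric, not lexical, ordering
```

One caveat for this layout: on 64-bit CRuby, immediate integers hold 62 bits, so a first segment of 16384 or more pushes the packed value into a heap-allocated Integer. The comparison stays correct, but that is the kind of object overhead the bullet refers to.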

The Backward Compatibility Tax

Like pip's struggle with "fifteen years of edge cases," Bundler pays for its maturity. Patterson notes: "uv's developers had the advantage of starting fresh with modern assumptions." Yet he remains optimistic: Ruby can achieve "99% of uv's performance" while maintaining backward compatibility through targeted optimizations.

"uv is fast because of what it doesn't do, not because of what language it's written in... pip could implement parallel downloads, global caching, and metadata-only resolution tomorrow. It doesn't, largely because backwards compatibility... takes precedence." — Andrew Nesbitt, How uv got so fast (as quoted by Patterson)

The road ahead? Patterson teases a sequel analyzing specific profiling techniques and native extension bottlenecks. One message rings clear: Bundler's speed revolution won't require abandoning Ruby—just rethinking its packaging architecture.

Source: Tenderlove Making (Aaron Patterson)
