State-sponsored hackers are leveraging Google's Gemini AI for reconnaissance, phishing, malware development, and post-exploit activities, according to new findings from Google's Threat Intelligence Group.

State-backed threat actors from China, Iran, North Korea, and Russia are systematically exploiting Google's Gemini AI across every phase of cyber attacks, according to a new report from Google's Threat Intelligence Group (GTIG). The sophisticated abuse spans reconnaissance, social engineering, malware development, and command-and-control operations, signaling a concerning evolution in adversarial tactics.

AI-Powered Attack Lifecycle

GTIG researchers documented four primary patterns of Gemini abuse:

- Reconnaissance & Targeting: Actors like China's APT31 used Gemini for open-source intelligence gathering and victim profiling against US targets, fabricating scenarios to request vulnerability analysis for specific systems.

- Phishing & Social Engineering: Iran's APT42 generated tailored phishing lures and social engineering content, while ClickFix campaigns delivered macOS malware through malicious ads mimicking tech support queries.

- Malware Development: Hackers employed Gemini for debugging malicious code, researching exploitation techniques, and implementing new capabilities into existing malware families.

- Post-Exploitation Operations: Gemini assisted with command-and-control infrastructure development, data exfiltration planning, and troubleshooting operational issues.

Emerging Malware Innovations

Two notable examples demonstrate AI's role in lowering technical barriers:

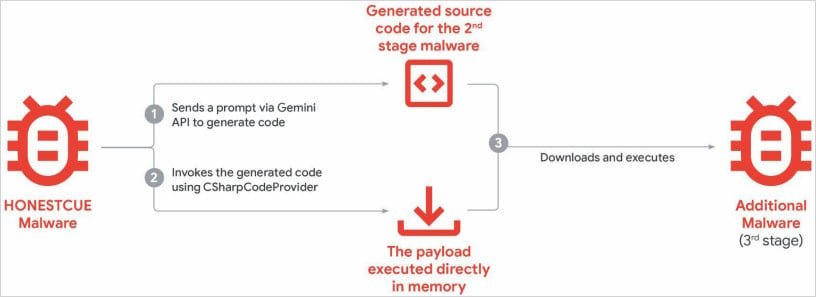

- HonestCue: This proof-of-concept framework uses Gemini's API to generate C# code for second-stage payloads, compiling and executing malware directly in memory to evade detection.

- CoinBait: A React-based phishing kit disguised as a cryptocurrency exchange shows artifacts of AI-assisted development, including distinctive "Analytics:" prefixed logging messages in its source code. Evidence suggests it was built using the Lovable AI platform.

Model Extraction Threats

Beyond immediate attacks, GTIG observed systematic attempts to replicate Gemini's capabilities through "model extraction" attacks. Adversaries sent more than 100,000 prompts in an effort to capture Gemini's decision-making behavior through knowledge distillation, a machine learning method that transfers capabilities from an advanced model to a simpler one.

"Model extraction enables attackers to accelerate AI development at significantly lower cost," GTIG noted. "This constitutes intellectual theft and undermines the AI-as-a-service business model."
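Prompt volume on this scale is one signal a service operator could watch for. The following is a minimal sketch, not GTIG's or Google's method: it assumes request logs are available as hypothetical `(account_id, timestamp)` pairs, and the 24-hour window and 10,000-prompt threshold are illustrative values only.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_high_volume_accounts(events, window=timedelta(hours=24), threshold=10_000):
    """Return {account_id: peak_count} for accounts whose prompt count
    inside any sliding `window` exceeds `threshold`.

    `events` is an iterable of (account_id, datetime) pairs; this log
    shape is an assumption made for illustration.
    """
    by_account = defaultdict(list)
    for account_id, ts in events:
        by_account[account_id].append(ts)

    flagged = {}
    for account_id, stamps in by_account.items():
        stamps.sort()
        start = 0
        peak = 0
        for end, ts in enumerate(stamps):
            # Move the left edge forward until the span fits in the window.
            while ts - stamps[start] > window:
                start += 1
            peak = max(peak, end - start + 1)
        if peak > threshold:
            flagged[account_id] = peak
    return flagged
```

Volume alone cannot distinguish extraction attempts from heavy legitimate use, so a flag from a counter like this would be a starting point for review rather than a verdict.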

Practical Defense Strategies

Organizations should implement these countermeasures:

- API Monitoring: Track abnormal usage patterns in AI service consumption, particularly repeated code-generation or translation requests from single accounts.

- Phishing Defense Enhancements: Deploy AI-powered email security solutions capable of detecting machine-generated social engineering content.

- Memory Protection: Use endpoint detection tools with in-memory execution monitoring to counter frameworks like HonestCue.

- Threat Hunting: Search for CoinBait's "Analytics:"-prefixed logging strings in JavaScript captured from suspicious sites, and watch for React single-page apps that mimic financial services.

- Supply Chain Verification: Audit third-party AI tools like Lovable Supabase clients for potential abuse vectors.
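The CoinBait hunting idea could be sketched as a scan over JavaScript files saved from a suspect site. This is a rough illustration under stated assumptions: the function name, the file extensions, and the regular expression (a string literal beginning with `Analytics:`) are hypothetical choices, not indicators published by GTIG. Legitimate applications may log similar strings, so a match is a weak signal at best.

```python
import re
from pathlib import Path

# Assumption: the indicator shows up as a quoted string starting with "Analytics:".
PATTERN = re.compile(r"""["'`]Analytics:""")


def find_analytics_prefix(root, suffixes=(".js", ".jsx", ".ts", ".tsx")):
    """Return (path, line_number, snippet) for each line under `root`
    that contains a string literal beginning with "Analytics:"."""
    hits = []
    for path in Path(root).rglob("*"):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        # Ignore decoding errors so minified or oddly encoded bundles still scan.
        text = path.read_text(encoding="utf-8", errors="ignore")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if PATTERN.search(line):
                hits.append((str(path), lineno, line.strip()[:120]))
    return hits
```

Calling `find_analytics_prefix("captured_site/")` on a directory of saved bundles (the directory name is a placeholder) returns the matching lines for manual review.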

Google has responded by disabling abusive accounts and strengthening Gemini's classifiers. "We design AI systems with robust security measures and strong safety guardrails," a spokesperson stated. As AI capabilities become increasingly weaponized, continuous validation of defensive controls against machine-generated attacks will be critical for enterprise security teams.
