John Carmack suggests using a long fiber optic loop to store AI model weights, potentially replacing DRAM with near-zero latency and massive bandwidth. The concept draws parallels to historical delay-line memory while offering modern power efficiency benefits.

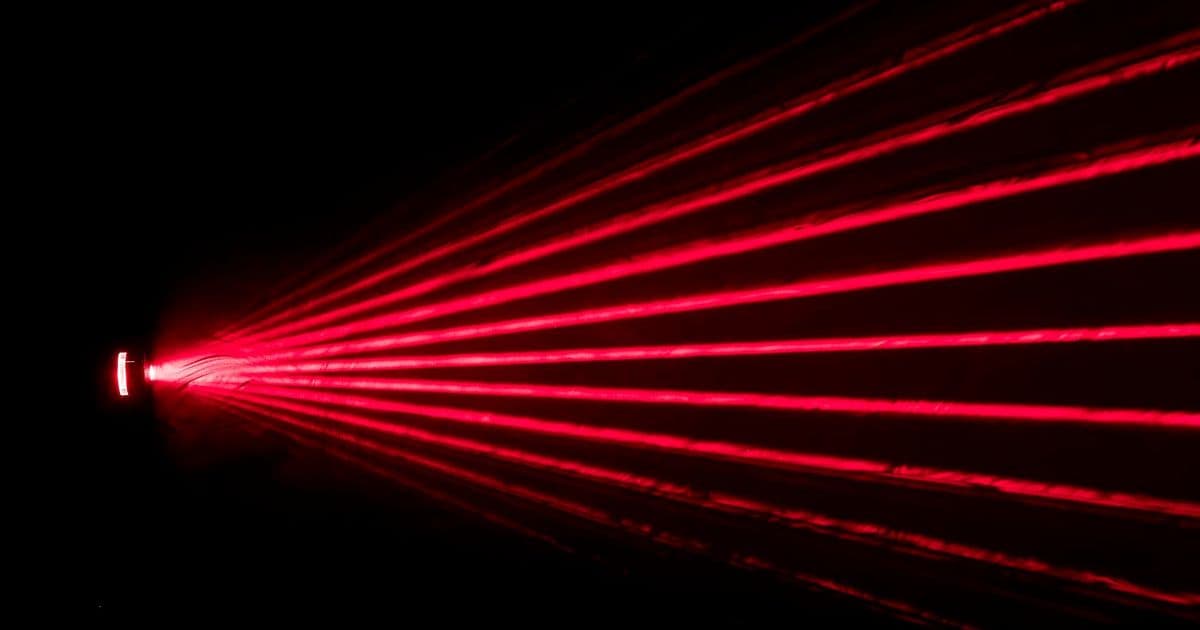

John Carmack, the legendary programmer and co-founder of id Software, has proposed an unconventional solution to AI's memory bottleneck: using a long fiber optic loop as an L2 cache for streaming AI data. In his idea, shared on X (formerly Twitter), fiber optics could replace traditional DRAM for certain AI workloads, offering near-zero latency and massive bandwidth.

The Technical Foundation

Carmack's concept stems from recent advances in fiber optic technology. Single-mode fiber has demonstrated data rates of 256 terabits per second over a distance of 200 kilometers. Through some quick calculations, Carmack determined that this works out to a theoretical bandwidth of 32 terabytes per second (256 terabits divided by 8 bits per byte). Because light takes roughly a millisecond to travel through 200 kilometers of fiber, approximately 32 gigabytes of data are "in flight" within the cable at any given moment.
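The arithmetic can be checked with a short back-of-the-envelope script. This is a minimal sketch that assumes light in single-mode fiber travels at about c / 1.47 (a typical group index for silica fiber, roughly 2.04 × 10^8 m/s); the function name `fiber_loop_capacity` and the `GROUP_INDEX` constant are illustrative, not taken from Carmack's post.

```python
"""Back-of-the-envelope check of the fiber-loop figures.

Assumption: light in single-mode fiber travels at about c / 1.47
(a typical group index for silica fiber), roughly 2.04e8 m/s.
"""

SPEED_OF_LIGHT_M_S = 299_792_458  # speed of light in vacuum, m/s
GROUP_INDEX = 1.47                # assumed group index of the fiber


def fiber_loop_capacity(line_rate_bits_s: float, length_m: float,
                        group_index: float = GROUP_INDEX) -> dict:
    """Return bandwidth (TB/s), one-trip transit time (ms), and data in flight (GB)."""
    bandwidth_bytes_s = line_rate_bits_s / 8          # bits -> bytes
    velocity_m_s = SPEED_OF_LIGHT_M_S / group_index   # signal speed in the glass
    transit_s = length_m / velocity_m_s               # time for one trip through the fiber
    in_flight_bytes = bandwidth_bytes_s * transit_s   # data "stored" in the fiber at once
    return {
        "bandwidth_TB_s": bandwidth_bytes_s / 1e12,
        "transit_ms": transit_s * 1e3,
        "in_flight_GB": in_flight_bytes / 1e9,
    }


if __name__ == "__main__":
    result = fiber_loop_capacity(line_rate_bits_s=256e12, length_m=200e3)
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

With a group index of 1.47 the sketch gives 32 TB/s, a trip time just under 1 ms, and about 31 GB in flight; rounding the signal speed to 2 × 10^8 m/s reproduces the 32 GB figure exactly.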

This massive bandwidth potential caught Carmack's attention because neural network inference and training operations can have deterministic weight reference patterns. The sequential and predictable nature of these operations makes them ideal candidates for streaming data from a continuous fiber loop rather than traditional random-access memory.
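Why predictable access matters for a recirculating loop can be illustrated with a toy model. This is a minimal sketch under illustrative assumptions, not a model of real hardware: the loop is divided into fixed slots, one chunk of weights passes the read head per time step, and a chunk can only be read when it reaches the head. Reading chunks in the order they circulate costs no waiting at all, while a random order waits about half a loop per read on average (for the 200 km loop described above, roughly half a millisecond). The names `wait_steps` and `NUM_SLOTS` are hypothetical.

```python
"""Toy model of a recirculating delay-line (fiber loop) memory.

Illustrative assumptions: the loop holds fixed-size chunks in slots,
one chunk passes the read head per time step, and the reader can only
take the chunk currently at the head.
"""
import random


def wait_steps(loop_order: list[int], request_order: list[int]) -> int:
    """Total time steps spent waiting for requested chunks to come around."""
    n = len(loop_order)
    position = {chunk: i for i, chunk in enumerate(loop_order)}
    head = 0          # slot currently passing the read head
    total_wait = 0
    for chunk in request_order:
        wait = (position[chunk] - head) % n   # steps until this chunk reaches the head
        total_wait += wait
        head = (position[chunk] + 1) % n      # reading takes one step; next slot arrives
    return total_wait


if __name__ == "__main__":
    NUM_SLOTS = 1024
    loop = list(range(NUM_SLOTS))
    sequential = loop * 3                                  # three passes in layer order
    shuffled = random.Random(0).sample(sequential, len(sequential))
    print("sequential wait per read:", wait_steps(loop, sequential) / len(sequential))
    print("random wait per read:", wait_steps(loop, shuffled) / len(shuffled))
```

The sequential case prints a wait of 0.0 steps per read; the shuffled case lands near half the loop length, which is the latency penalty a fiber loop would impose on random access.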

Historical Parallels and Modern Advantages

The concept isn't entirely new. Several commenters pointed out that Carmack's idea bears similarities to delay-line memory, a technology used in the mid-20th century that stored data as sound waves traveling through mercury. Alan Turing himself had proposed using a gin mixture as an alternative medium due to mercury's practical challenges.

However, modern fiber optics offer significant advantages over these historical approaches. Light is predictable and easy to work with, and keeping it circulating could require less power than DRAM, which consumes substantial energy periodically refreshing its cells so they retain their data. This power efficiency could be a major selling point, especially as AI workloads continue to scale and energy costs become increasingly important.

Practical Considerations and Alternatives

While the fiber optic concept is intriguing, it faces several practical challenges. The optical amplifiers and digital signal processors (DSPs) needed to maintain signal integrity over long distances could offset some of the energy savings. Additionally, 200 kilometers of fiber optic cable represents a significant infrastructure investment, even if fiber transmission continues to improve faster than DRAM technology.

Some commenters, including Elon Musk, suggested even more ambitious alternatives like using vacuum as the medium (essentially space-based laser communication), though the practicality of such approaches remains questionable.

Carmack himself acknowledged that a more practical near-term solution might involve using existing flash memory chips. By wiring enough flash memory directly to AI accelerators, with careful attention to timing, similar benefits could be achieved without the infrastructure requirements of a massive fiber loop. This approach would require flash memory manufacturers and AI accelerator makers to agree on a standard interface, but given the massive investment in AI infrastructure, such standardization seems increasingly likely.
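The flash alternative plausibly relies on the same predictable access order. The sketch below is hypothetical and does not model any real flash interface: it assumes the weights are cut into equal pages striped round-robin across several chips, so a known read order can be turned into a fixed schedule in which every chip reads one page per round, and sustained bandwidth scales with the number of chips. `read_schedule` and `chips_needed` are illustrative names, and the per-chip rate passed to `chips_needed` is a placeholder parameter, not a measured figure.

```python
"""Sketch: streaming weights from several flash chips on a fixed schedule.

Hypothetical setup (not a real interface): weights are split into
equal-sized pages striped round-robin across the chips, so page i lives
on chip i % num_chips. Because the read order is known in advance,
every read can be scheduled ahead of time.
"""
import math


def read_schedule(num_pages: int, num_chips: int) -> list[list[tuple[int, int]]]:
    """Group reads into rounds in which every chip reads at most one page.

    Each entry is (chip, page). In round r, chip c reads page r * num_chips + c,
    so pages arrive in their original order while all chips work in parallel.
    """
    rounds = []
    for start in range(0, num_pages, num_chips):
        end = min(start + num_chips, num_pages)
        rounds.append([(page % num_chips, page) for page in range(start, end)])
    return rounds


def chips_needed(target_bytes_per_s: float, per_chip_bytes_per_s: float) -> int:
    """Smallest chip count whose combined sustained read rate meets the target."""
    return math.ceil(target_bytes_per_s / per_chip_bytes_per_s)


if __name__ == "__main__":
    for round_index, reads in enumerate(read_schedule(num_pages=10, num_chips=4)):
        print(f"round {round_index}: {reads}")
```

For example, `read_schedule(10, 4)` yields three rounds: pages 0 to 3, pages 4 to 7, then pages 8 and 9 on chips 0 and 1.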

Existing Research and Development

Interestingly, Carmack's idea aligns with several research projects that have explored similar concepts. Projects such as Behemoth and FlashNeuron (both from 2021) and FlashGNN have investigated using flash memory for AI model storage and processing. More recently, the Augmented Memory Grid project has continued this line of research.

These projects suggest that the industry is already moving in directions that could make Carmack's vision more practical, even if the specific implementation differs from his fiber optic proposal.

Implications for AI Infrastructure

The broader implication of Carmack's musing is that the AI industry may need to fundamentally rethink how it approaches memory architecture. Traditional DRAM has served computing well for decades, but AI workloads have unusual characteristics, particularly the sequential and predictable order in which neural network weights are accessed, that may benefit from entirely different approaches.

Whether through fiber optics, advanced flash memory configurations, or other innovative solutions, the goal remains the same: ensuring AI accelerators have constant access to the data they need without the bottlenecks and power consumption of traditional memory systems. As AI models continue to grow in size and complexity, finding more efficient ways to feed them data will become increasingly critical.

Carmack's proposal, while perhaps not immediately practical, serves as a valuable thought experiment that challenges conventional thinking about memory architecture. In an industry that often focuses on incremental improvements, such radical ideas can spark innovation and lead to unexpected breakthroughs in how we approach the fundamental challenges of AI computing.
