Microsoft Defender now protects AI agents built with Foundry Agent Service, addressing the expanded attack surface of autonomous multi-step AI systems.

Microsoft is expanding its AI security capabilities with the public preview of threat protection for Microsoft Foundry Agent Service, announced today on the Microsoft Community Hub. This new feature extends Defender's comprehensive security coverage to AI agents, addressing the unique risks posed by autonomous, multi-step AI systems that can reason, plan, call tools, access data sources, and take actions on behalf of users.

The Evolution of AI Security

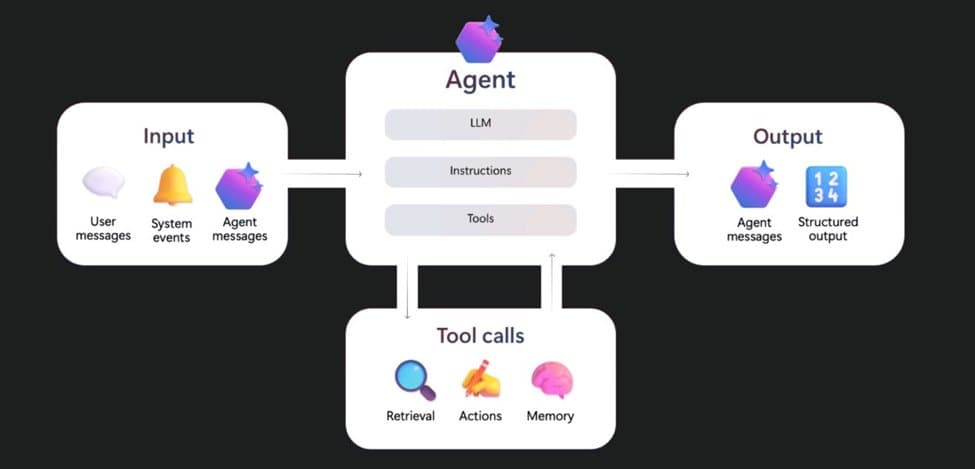

The AI landscape is rapidly shifting from simple prompt-and-response interactions to sophisticated agentic systems. Traditional AI applications were limited to single-turn exchanges, but modern AI agents introduce a materially broader and more dynamic threat surface. These autonomous systems maintain memory, perform planning and self-reflection, orchestrate tools and API calls, interact with other agents, and execute real-world actions.

This expanded capability set creates new attack vectors that security teams must address. Attackers can now poison short- or long-term memory to manipulate future behavior, exploit indirect prompt injection through data sources and tools, or abuse orchestration flows between agents and external systems. Planning and reasoning loops introduce failure modes such as intent drift, deceptive behavior, and uncontrolled agent sprawl.

Why AI Agents Require New Security Models

AI agents introduce security risks that extend far beyond the user's prompt and the model's response. The attack surface now spans the entire agent lifecycle: inputs, memory, reasoning, tool calls, actions, and model dependencies. Each stage presents opportunities for abuse:

- Memory manipulation: Attackers can poison agent memory to influence future decisions

- Tool abuse: Coerced agents can misuse APIs or backend systems

- Identity spoofing: Actions executed under false identities

- Human manipulation: Exploiting trust in agent responses to influence users

- Resource overload: Attacks that exhaust compute, memory, or service capacity

For security teams, protecting AI agents requires continuous runtime monitoring, prevention, and governance across the entire agent ecosystem, not just prompts and responses.

Introducing Threat Protection for Foundry Agents

Microsoft Defender's new capability for Foundry Agent Service builds on the threat protection for Microsoft Copilot Studio announced at Ignite 2025. Together, the two extend coverage across the AI landscape, from development through runtime.

Starting February 2, 2026, the enhanced Defender for AI Services plan will include support for AI agents built with Foundry. The service focuses on the most critical and actionable risks aligned with OWASP guidance for LLM and agentic AI threats, specifically those that translate directly into real-world security incidents.

Coverage Areas

The threat protection scope includes:

- Tool misuse: Preventing agents from abusing APIs or backend systems

- Privilege compromise: Addressing permission misconfigurations and inherited roles

- Resource overload: Mitigating attacks that exhaust compute, memory, or service capacity

- Intent breaking and goal manipulation: Preventing adversaries from redirecting agent objectives

- Misaligned and deceptive behaviors: Detecting harmful actions driven by manipulated reasoning

- Identity spoofing and impersonation: Preventing actions executed under false identities

- Human manipulation: Blocking attacks that exploit trust in agent responses

This focused approach targets high-signal, runtime threats across agent reasoning, tool execution, identity, and human interaction, giving security teams immediate visibility and control over the most dangerous agent behaviors in production.

What Sets Defender Apart

Unlike point solutions that address only specific aspects of AI security, Microsoft Defender delivers comprehensive, build-to-runtime protection across the entire AI stack. The platform covers models, agents, SaaS apps, and cloud infrastructure, unifying security signals across endpoints, identities, applications, and cloud environments.

Defender's platform-native runtime context automatically correlates AI agent detections with broader threats, reducing complexity and streamlining response. This unified approach strengthens defense across the entire digital estate while providing security teams with a single pane of glass for monitoring and investigation.

Getting Started

Enabling threat protection for Microsoft Foundry Agent Service is designed to be simple: organizations can turn it on with a single click for a selected Azure subscription. Detections appear directly in the Defender for Cloud portal and flow into Defender XDR and Microsoft Sentinel through existing connectors.

This integration allows SOC analysts to immediately correlate agent threats, reducing investigation time and improving response accuracy from day one. Security teams can start exploring these capabilities today with a free 30-day trial.

Trial Details

The trial covers scanning of up to 75 billion tokens and lets organizations detect, investigate, and stop malicious AI agent behavior before it causes real-world impact. To begin, enable the Defender for AI Services plan on your chosen Azure subscription; your existing Foundry agents will start surfacing malicious and risky behaviors within minutes.

Note: Defender for AI Services is priced at $0.0008 per 1,000 tokens per month (USD, list price), excluding Foundry agents, which are currently free of charge. Pricing and usage terms are subject to change.
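To make the pricing note concrete, here is a minimal back-of-the-envelope estimator. It is a sketch, not an official calculator: it assumes the charge scales linearly with scanned tokens at the $0.0008 per 1,000 tokens list price quoted above, with no tiers, discounts, or included allowances, and it treats Foundry agent tokens as free per the current terms. The function and constant names are hypothetical. At that rate, scanning the full 75 billion tokens included in the trial would correspond to 75,000,000 × $0.0008 = $60,000 at list price.

```python
from decimal import Decimal

# List price from the note above (USD per 1,000 tokens per month).
# Assumption: linear pricing with no tiers, discounts, or free allowances.
PRICE_PER_1K_TOKENS_USD = Decimal("0.0008")

# Foundry agent tokens are currently free of charge; kept as an explicit
# rate so the estimate is easy to update if the terms change.
FOUNDRY_AGENT_PRICE_PER_1K_TOKENS_USD = Decimal("0")


def estimate_monthly_cost(other_tokens: int, foundry_agent_tokens: int = 0) -> Decimal:
    """Hypothetical helper: estimate the list-price monthly charge in USD."""
    for count in (other_tokens, foundry_agent_tokens):
        if count < 0:
            raise ValueError("token counts must be non-negative")

    per_1k = Decimal(1000)
    billable = Decimal(other_tokens) / per_1k * PRICE_PER_1K_TOKENS_USD
    foundry = Decimal(foundry_agent_tokens) / per_1k * FOUNDRY_AGENT_PRICE_PER_1K_TOKENS_USD
    return billable + foundry


if __name__ == "__main__":
    # Full trial allowance of 75 billion non-Foundry tokens at list price.
    print(estimate_monthly_cost(75_000_000_000))  # → 60000.0000
```

Using `Decimal` rather than floats avoids rounding noise when multiplying very small per-token rates by very large token counts.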

The Broader Context

This announcement represents Microsoft's continued investment in AI security as organizations move from experimentation to production with AI agents. The company has recognized that traditional security approaches are insufficient for the unique challenges posed by autonomous AI systems.

By extending Defender's capabilities to Foundry agents, Microsoft is providing organizations with the tools they need to confidently deploy AI agents in production environments. This move acknowledges the critical importance of securing the full AI agent lifecycle and provides security teams with the visibility and control necessary to protect against emerging threats.

As AI agents become increasingly prevalent in enterprise environments, comprehensive security coverage will be essential. Microsoft's approach of building on existing Defender capabilities while expanding to cover new AI-specific threats demonstrates a pragmatic path forward for organizations looking to secure their AI investments.

The public preview of threat protection for Foundry Agent Service marks an important step in the evolution of AI security, helping organizations harness autonomous AI agents safely while guarding against the unique risks they introduce.
