New research warns that AI-assisted 'vibe coding' is eroding the foundations of open source software by disconnecting developers from communities, reducing forum engagement, and creating a Spotify-like compensation model that leaves most projects underfunded.

A new pre-print paper by prominent researchers warns that "vibe coding"—software development where AI chatbots write most of the code—could be destroying the open source ecosystem. The study, titled "The Impact of Vibe Coding on Open Source Communities" (Koren et al., 2026), paints a concerning picture of how AI-assisted development is fundamentally altering the relationship between developers and the projects they rely on.

The Core Problem: Disconnection from Community

The fundamental issue, according to the researchers, is that vibe coding transforms developers from active community participants into passive consumers of AI-generated code. When developers ask chatbots to "write a function that does X," they're no longer engaging with documentation, browsing GitHub issues, or participating in community forums. They're simply receiving code that works—or appears to work.

This shift has cascading effects throughout the open source ecosystem. Project websites see fewer visits, documentation becomes less relevant, and the organic discovery of libraries and tools is replaced by whatever happened to be most prevalent in the AI's training data. The researchers note that this creates a feedback loop where popular projects become more popular simply because they were well-represented in training data, while newer or niche projects struggle to gain visibility.
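The feedback loop described above can be illustrated with a toy model. This is a minimal sketch and is not taken from the paper: the `bias` parameter, the starting usage numbers, and the function names are all assumptions chosen for illustration. Each round, a fixed number of new uses is split among libraries in proportion to their current usage raised to `bias`. With `bias` equal to 1.0 every library keeps its share; with `bias` above 1.0, standing in for an assistant that over-recommends what it saw most often in training, the leader's share grows and the niche library's share shrinks.

```python
"""Toy model of a popularity feedback loop (illustrative assumptions only)."""


def simulate_feedback(initial_uses, rounds, new_uses_per_round, bias=1.5):
    """Split each round's new uses in proportion to current_uses ** bias.

    bias == 1.0 keeps every project's share constant. bias > 1.0 is an
    assumed stand-in for a recommender that favors already-popular projects.
    """
    uses = [float(u) for u in initial_uses]
    for _ in range(rounds):
        weights = [u ** bias for u in uses]
        total_weight = sum(weights)
        uses = [
            u + new_uses_per_round * w / total_weight
            for u, w in zip(uses, weights)
        ]
    return uses


def shares(uses):
    """Convert absolute usage counts into fractions of the total."""
    total = sum(uses)
    return [u / total for u in uses]


# Hypothetical starting usage for four libraries, from dominant to niche.
initial = [50, 30, 15, 5]
for bias in (1.0, 1.5):
    final = shares(
        simulate_feedback(initial, rounds=20, new_uses_per_round=100, bias=bias)
    )
    print(f"bias={bias}: {[round(s, 3) for s in final]}")
```

The model is deterministic on purpose, so the only thing driving concentration is the assumed bias, not random luck.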

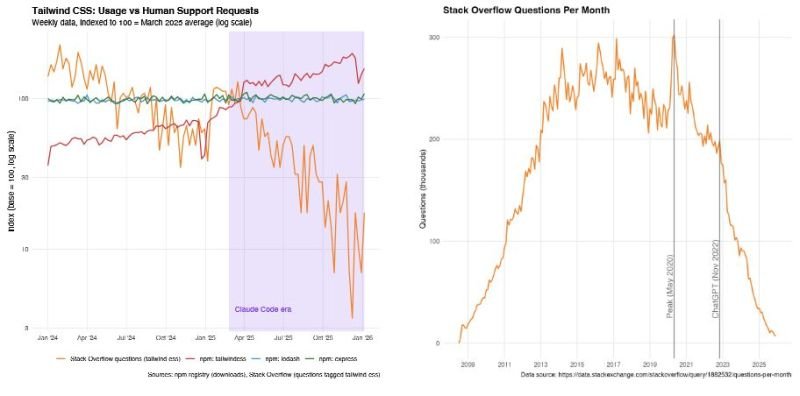

The impact on community forums is particularly stark. Stack Overflow, once the go-to resource for developers, has seen a dramatic decline in usage as developers turn to AI chatbots for immediate answers. This isn't just about convenience—it represents a fundamental shift in how knowledge is shared and problems are solved within the developer community.

The Spotify Problem: Compensation and Sustainability

One of the paper's most provocative arguments draws a parallel between vibe coding compensation and Spotify's royalty model. Just as 80% of artists on Spotify rarely have their tracks played and receive virtually no compensation, the researchers warn that a similar dynamic could emerge in open source.

If AI companies were to compensate open source projects when their code is used in training data or generated outputs, the distribution would likely mirror Spotify's: a tiny fraction of projects would receive the majority of compensation, while the vast majority of maintainers would see little to no benefit. This is because AI models are statistically more likely to generate code based on the most prevalent dependencies in their training data, creating a winner-takes-all scenario.
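To see why a usage-based payout concentrates so sharply, consider a rough sketch of a pro-rata scheme. Everything here is an assumption for illustration rather than a model from the paper: the pool size, the number of projects, and above all the Zipf-like usage curve, in which the project at rank r is used in proportion to 1/r. The helper names are hypothetical.

```python
"""Pro-rata payout sketch under an assumed Zipf-like usage distribution."""


def zipf_usage(num_projects, exponent=1.0):
    """Assumed usage weights: the project at rank r gets 1 / r ** exponent."""
    return [1.0 / rank ** exponent for rank in range(1, num_projects + 1)]


def pro_rata_payouts(usage, pool):
    """Split a fixed pool in direct proportion to each project's usage."""
    total = sum(usage)
    return [pool * u / total for u in usage]


def share_of_top(payouts, fraction):
    """Fraction of the whole pool received by the top `fraction` of projects."""
    ranked = sorted(payouts, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


# Hypothetical scenario: 1,000 projects sharing a pool of 1,000,000 units.
payouts = pro_rata_payouts(zipf_usage(1000), pool=1_000_000.0)
print(f"top 1% of projects receive {share_of_top(payouts, 0.01):.1%} of the pool")
print(f"bottom 80% receive {1 - share_of_top(payouts, 0.2):.1%} of the pool")
```

Raising `exponent` makes the usage curve steeper and the payout more lopsided, which is the direction the researchers' training-data argument points in.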

Quality Concerns and Productivity Myths

The paper also addresses the persistent question of whether AI-assisted coding actually improves productivity or code quality. The evidence it cites suggests that it does not. A 2024 report found that vibe coding with tools like GitHub Copilot offered no real benefits, unless you count adding 41% more bugs as a measure of success. By 2025, the mood had become even more negative, with studies showing that LLM chatbots reduced productivity by 19% and experienced developers giving the tools scathing reviews after trying them.

These findings reinforce the notion that the "AI revolution" in software development might be more of a stress test for human intelligence than an actual boost to productivity or code quality. The researchers argue that delegating engineering and development to statistical models removes the critical thinking and problem-solving that traditionally drove software innovation.

Which Ecosystems Are Most Vulnerable?

JavaScript, Python, and web technologies appear to be most at risk, according to the paper. These ecosystems have large, active communities that are early adopters of new tools, and their code is also the most heavily represented in the data used to train AI models. This combination makes them particularly susceptible to the negative effects of vibe coding.

The researchers note that this isn't just a theoretical concern—it's already happening. The decline in community engagement, the shift toward AI-generated code, and the reduction in meaningful developer interactions are observable trends that are likely to accelerate as AI tools become more sophisticated and widely adopted.

The Path Forward: A Call for Conscious Development

While the authors remain proponents of AI technology in general, they're calling for a more conscious approach to its implementation in software development. The paper suggests that the industry needs to find ways to preserve the collaborative, community-driven nature of open source while still leveraging the benefits that AI tools can provide.

This might involve developing AI tools that encourage rather than discourage community engagement, creating compensation models that actually support the broader ecosystem of open source maintainers, or establishing best practices for using AI assistance without losing the human element of software development.

The question remains: can we harness the power of AI without destroying the very communities that made modern software development possible? The researchers' warning is clear—without careful consideration and deliberate action, vibe coding could indeed kill open source as we know it.
