Andrej Karpathy reveals a paradigm shift in AI engineering, arguing that forcing LLMs to handle deterministic tasks like file searches leads to rampant errors. His Claude Code architecture delegates execution to specialized tools, reserving AI for contextual decisions—a blueprint for reliable AI-augmented development.

The programming profession is undergoing a seismic shift, described by AI pioneer Andrej Karpathy as a "magnitude 9 earthquake." In a detailed analysis of his year-long project building Claude Code—a system with 35 agents and 68 skills—Karpathy dismantles the industry's default approach to AI integration. The culprit? The "Master Prompt" pattern, where a single LLM agent handles everything from file operations to complex diagnostics, resulting in compounding hallucinations and unreliable outputs.

The Flawed Default: Jack-of-All-Trades AI

Most developers start with simplicity: a sparse system prompt like "You are a helpful assistant" plus raw access to tools. But as Karpathy observes, this collapses under complexity. When an LLM is tasked with both executing commands (like `grep`) and interpreting results, error rates soar. For instance, asking Claude to search a codebase without constraints might yield guessed command flags or encoding mishaps, problems that dedicated search tools such as grep and ripgrep already solve reliably.

Karpathy's division: Programs handle deterministic tasks (search, tests, parsing), while LLMs focus on understanding and decisions.

The core insight? Separate solved from unsolved problems:

- Solved problems are deterministic: file searches (`ripgrep`), test execution (`pytest`), build validation, or YAML parsing. These have reliable implementations.
- Unsolved problems require contextual nuance: diagnosing a failing Kubernetes pod, interpreting error messages, or connecting symptoms across systems. Here, LLMs excel.
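The "solved" side of this split can be illustrated with a small search wrapper. This is a minimal sketch, not Karpathy's implementation: the names `build_rg_command`, `RG_FIXED_FLAGS`, and the `max_count` default are assumptions made for illustration, and it assumes ripgrep is installed as `rg`. The flags are pinned in one place, so the model only chooses the pattern and the path.

```python
import subprocess
from typing import List

# Flags are pinned here once so the model never has to guess them.
# All of these are standard ripgrep options.
RG_FIXED_FLAGS = ["--line-number", "--no-heading", "--color", "never", "--fixed-strings"]


def build_rg_command(pattern: str, path: str = ".", max_count: int = 50) -> List[str]:
    """Build the ripgrep argv deterministically from the model's two choices."""
    if not pattern:
        raise ValueError("pattern must be non-empty")
    # "--" stops option parsing, so a pattern starting with "-" is not read as a flag.
    # --max-count limits the number of matching lines reported per file.
    return ["rg", *RG_FIXED_FLAGS, "--max-count", str(max_count), "--", pattern, path]


def code_search(pattern: str, path: str = ".") -> List[str]:
    """Run ripgrep (assumed to be on PATH as `rg`) and return matching lines."""
    result = subprocess.run(
        build_rg_command(pattern, path),
        capture_output=True,
        text=True,
        encoding="utf-8",
        errors="replace",  # undecodable bytes become U+FFFD instead of raising
    )
    # ripgrep exits with 1 when nothing matches; only codes above 1 signal an error.
    if result.returncode > 1:
        raise RuntimeError(result.stderr.strip())
    return result.stdout.splitlines()
```

Keeping the command construction in its own function means the flag choices can be unit-tested without running a search at all.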

"The variance is in the wrong place," Karpathy writes. "The LLM is varying its execution when it should only vary its decisions."

A Four-Layer Architecture for Precision

Karpathy's alternative is a hierarchical framework that constrains AI's role, ensuring deterministic execution where possible:

The hierarchy: Router → Agent → Skill → Program, each layer adding specificity.

Layer 1: The Router. A switchboard that classifies inputs (e.g., "debug the auth service") and routes them to domain-specific agents. It prevents context pollution: no more bloated prompts trying to be everything everywhere.
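A router of this kind could be sketched as follows. The source does not say how classification is done, so this keyword-counting version is only a stand-in. The agent names `kubernetes-engineer` and `golang-engineer` come from the article, while the keyword lists, `ROUTES`, `route`, and the fallback agent name are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical routing table: agent name -> keywords that suggest its domain.
ROUTES: Dict[str, Tuple[str, ...]] = {
    "kubernetes-engineer": ("pod", "kubectl", "deployment", "imagepullbackoff"),
    "golang-engineer": ("goroutine", "go.mod", "golang"),
}
FALLBACK_AGENT = "general-engineer"  # hypothetical name, not from the article


def route(request: str) -> str:
    """Return the agent whose keywords appear most often in the request."""
    text = request.lower()
    # Count how many of each agent's keywords occur as substrings of the request.
    scores = {agent: sum(kw in text for kw in kws) for agent, kws in ROUTES.items()}
    best = max(scores, key=scores.get)
    # With no keyword hits at all, hand the request to the fallback agent.
    return best if scores[best] > 0 else FALLBACK_AGENT
```

The point of the sketch is the shape of the contract: one request in, exactly one agent name out, so each agent's context stays narrow.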

Layer 2: The Agent. Not a persona, but a dense context container. For a `golang-engineer` agent, this includes Go 1.22+ idioms, project architecture, and concurrency rules. It provides knowledge but not methodology.

Layer 3: The Skill. The methodology applied across agents. A `systematic-debugging` skill enforces steps: reproduce, isolate, identify, verify. Phase gates (e.g., "Do NOT proceed to IDENTIFY until reproduction is demonstrated") prevent LLMs from jumping to conclusions.

Layer 4: Deterministic Programs. The foundation. Tools like `code_search()` wrap `ripgrep` with optimized flags; `read_file()` handles encoding. The LLM selects the tool, but the tool executes the logic. As Karpathy states: "The execution is deterministic. The variance is confined to the selection."
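The phase gates described for the skill layer are presented in the article as instructions to the model. As a sketch of how the same ordering might also be checked in code, the hypothetical `SystematicDebugging` tracker below refuses to mark a phase complete unless the previous phases are done and some evidence is supplied. The class, the exception, and the evidence rule are assumptions for illustration only.

```python
from typing import List

# Phase order taken from the article: reproduce, isolate, identify, verify.
PHASES = ["reproduce", "isolate", "identify", "verify"]


class PhaseGateError(RuntimeError):
    """Raised when a phase is attempted out of order or without evidence."""


class SystematicDebugging:
    """Hypothetical tracker that only lets phases complete in order."""

    def __init__(self) -> None:
        self.completed: List[str] = []

    def complete(self, phase: str, evidence: str) -> None:
        if len(self.completed) == len(PHASES):
            raise PhaseGateError("all phases are already complete")
        # The only phase that may complete next is the first unfinished one.
        expected = PHASES[len(self.completed)]
        if phase != expected:
            raise PhaseGateError(
                f"Do NOT proceed to {phase.upper()} until {expected} is demonstrated"
            )
        if not evidence.strip():
            raise PhaseGateError(f"{phase} needs evidence before it counts as done")
        self.completed.append(phase)
```

A caller would record each phase as it finishes, for example `skill.complete("reproduce", "pull failure shown in pod events")`, and an out-of-order call raises instead of silently continuing.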

Case Study: Debugging a Kubernetes Failure

Consider diagnosing a pod with ImagePullBackOff:

- Router directs to a `kubernetes-engineer` agent with the `systematic-debugging` skill.
- Agent loads K8s knowledge: pod states, failure patterns, event relationships.
- Skill enforces reproduction first, blocking premature diagnosis.
- Programs execute: `get_pod_description()` runs `kubectl describe`, returning structured data.

Raw pod data transformed into diagnosis through LLM interpretation.

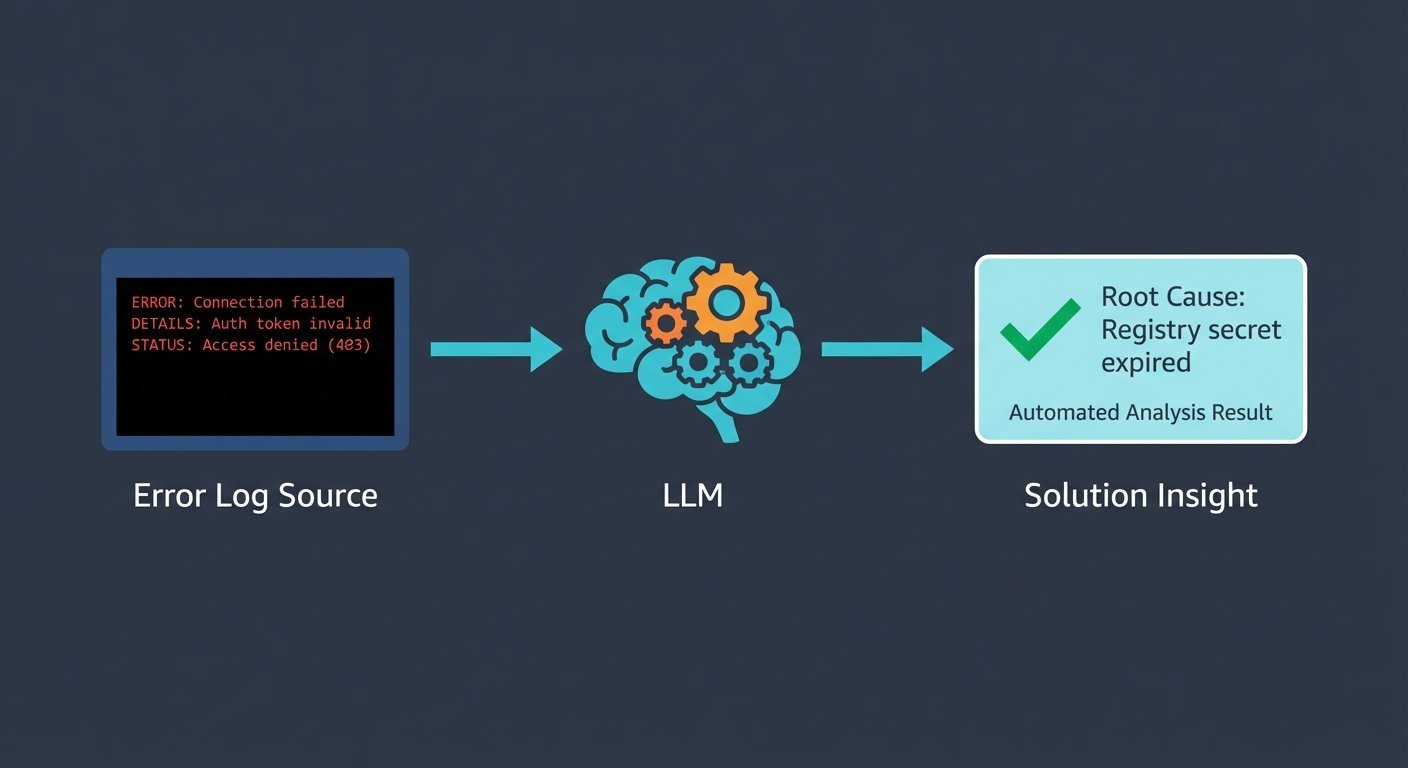

The LLM then interprets: "ImagePullBackOff because the registry secret expired. The secret registry-creds was last rotated 91 days ago." The mechanical data gathering is automated; the contextual insight is AI-driven.
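The data-gathering half of this case study might look like the sketch below. One deliberate swap: the article's tool runs `kubectl describe`, whose output is meant for humans, so this sketch instead calls `kubectl get pod -o json` to obtain machine-readable fields. The helper `run_kubectl`, the `runner` parameter, and the shape of the returned dictionary are assumptions. The status fields read here (`phase`, `containerStatuses`, `state.waiting.reason`) are part of the Kubernetes Pod API.

```python
import json
import subprocess
from typing import Callable, Dict, List


def run_kubectl(args: List[str]) -> str:
    """Run kubectl (assumed to be on PATH) and return its stdout."""
    return subprocess.run(
        ["kubectl", *args], capture_output=True, text=True, check=True
    ).stdout


def get_pod_description(
    name: str,
    namespace: str = "default",
    runner: Callable[[List[str]], str] = run_kubectl,
) -> Dict:
    """Return the pod phase and any container waiting reasons as plain data."""
    pod = json.loads(runner(["get", "pod", name, "-n", namespace, "-o", "json"]))
    status = pod.get("status", {})
    waiting = []
    for cs in status.get("containerStatuses", []):
        # A container that is not running or terminated has a "waiting" state.
        state = cs.get("state", {}).get("waiting")
        if state:
            waiting.append(
                {
                    "container": cs["name"],
                    "reason": state.get("reason"),
                    "message": state.get("message"),
                }
            )
    return {
        "pod": name,
        "namespace": namespace,
        "phase": status.get("phase"),
        "waiting": waiting,
    }
```

The function only collects facts. Deciding that an expired registry secret is the cause remains the model's job, which is the division of labor the case study describes.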

The Future: Orchestration, Not Execution

Karpathy’s conclusion reshapes AI integration: "Everything that can be a program, should be." LLMs belong in decisions deterministic systems can’t make—diagnoses, interpretations, connections. Tools like Claude Code prove that power lies in context density over tool breadth, with stochastic orchestration wrapping deterministic execution. As developers navigate this refactored profession, the mantra is clear: Let programs handle the known; let AI illuminate the unknown.

Source: Andrej Karpathy's analysis in Everything That Can Be Deterministic Should Be.
