While structured outputs promise reliable data extraction from LLMs, new research reveals they come at a significant cost to response quality. This trade-off between format compliance and accuracy could impact critical applications relying on AI data extraction.

Structured Outputs in LLMs: The Hidden Cost of Guaranteed Format Compliance

In the rapidly evolving landscape of large language models, developers have increasingly turned to structured outputs APIs to guarantee reliable data extraction. These tools promise to transform free-form LLM responses into clean, predictable JSON or other structured formats. However, a growing body of evidence suggests this convenience comes with a hidden cost: degraded response quality and false confidence in the extracted data.

As AI systems become more integrated into critical business processes, understanding this trade-off between format compliance and output accuracy has become essential for developers and organizations building on LLM technology.

The Allure of Structured Outputs

Structured outputs—offered by major providers like OpenAI and Anthropic—use a technique called constrained decoding to force LLMs to generate responses that strictly adhere to predefined schemas. For developers building applications that require predictable data formats, this seems like a godsend. No more wrestling with inconsistent text responses or building complex parsing logic to extract information.

The promise is simple: give the LLM a schema, and it will return data perfectly formatted to match it. This approach eliminates the need for post-processing and reduces the chance of parsing errors. Or so it seems.

A Case Study in Receipt Extraction

To understand the practical implications, let's examine a concrete example: extracting data from receipts. When processing a receipt with fractional quantities—such as 0.46 bananas—a well-tuned LLM should correctly identify this detail.

However, when using OpenAI's structured outputs API with their latest model (gpt-5.2), the system incorrectly reports the banana quantity as 1.0:

{

"establishment_name": "PC Market of Choice",

"date": "2007-01-20",

"total": 0.32,

"currency": "USD",

"items": [

{

"name": "Bananas",

"price": 0.32,

"quantity": 1

}

]

}

In contrast, when using the standard completions API and parsing the free-form response manually, the same model correctly identifies the fractional quantity:

{

"establishment_name": "PC Market of Choice",

"date": "2007-01-20",

"total": 0.32,

"currency": "USD",

"items": [

{

"name": "Bananas",

"price": 0.69,

"quantity": 0.46

}

]

}

This discrepancy reveals a fundamental issue: structured outputs can sacrifice accuracy for the sake of format compliance.

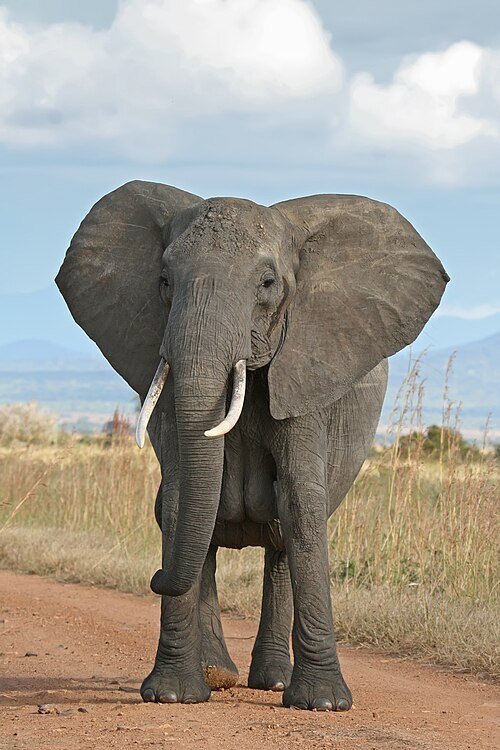

The Elephant in the Room: Handling Edge Cases

The problems compound when considering edge cases. What happens when a user submits a picture of an elephant instead of a receipt? Or a currency exchange receipt with mixed currencies?

With free-form outputs, an LLM can appropriately respond with: "You've asked me to parse a receipt, but this image shows an elephant. I cannot extract receipt data from this image."

However, structured outputs force the model to return an object that matches the schema, even when the input is completely inappropriate. The result is meaningless data that satisfies the format but provides no value—akin to filling in arbitrary information in mandatory form fields that don't apply to your situation.

"It's like when you file a bug report, and the form has 5 mandatory fields about things that have nothing to do with your bug, but you have to put something in those fields to file the bug report: the stuff you put in those fields will probably be useless."

The Schema Design Dilemma

Developers might respond by designing better schemas that include error handling. For example, a schema that returns either { receipt_data } or { error }. But this approach introduces new challenges:

- What constitutes an error? Missing totals? Partial parses? Completely inappropriate inputs?

- How granular should error handling be? Should a partially successful parse return partial results or fail entirely?

- As prompts increasingly discuss potential errors, models may become more likely to respond with errors rather than attempting to provide useful information.

This creates a paradox: the more we try to anticipate and handle every possible error scenario in our schemas, the more we constrain the model's ability to provide helpful responses.

Crippling Chain-of-Thought Reasoning

Structured outputs also undermine a powerful technique for improving LLM performance: chain-of-thought reasoning. This approach, where models are prompted to "explain your reasoning step by step," has been shown to significantly enhance accuracy on complex tasks.

However, when constrained to structured outputs, this technique breaks down. The model can't provide its reasoning in natural language while simultaneously adhering to a strict schema.

Consider how a model might respond when asked to extract order status from an email:

If we think step by step we can see that:

1. The email is from Amazon, confirming the status of a specific order.

2. The subject line says "Your Amazon.com order of 'Wood Dowel Rods...' has shipped!" which indicates that the order status is 'SHIPPED'.

3. [...]

Combining all these points, the output JSON is:

```json

{

"order_status": "SHIPPED",

[...]

}

This response contains the requested JSON but isn't valid JSON itself due to the preceding reasoning text. While developers could add a "reasoning" field to their schema, this forces the model to perform additional formatting work—essentially creating a "cover page" for its response rather than allowing natural reasoning.

## The Technical Reality: Constrained Decoding

To understand why structured outputs degrade quality, we need to examine how they're implemented. Model providers use a technique called constrained decoding:

> "By default, when models are sampled to produce outputs, they are entirely unconstrained and can select any token from the vocabulary as the next output. This flexibility is what allows models to make mistakes; for example, they are generally free to sample a curly brace token at any time, even when that would not produce valid JSON. In order to force valid outputs, we constrain our models to only tokens that would be valid according to the supplied schema, rather than all available tokens."

In essence, constrained decoding applies a filter during sampling that restricts the model's choices to only tokens that would maintain validity according to the schema. While this ensures format compliance, it can prevent the model from generating its most accurate response.

For example, if an LLM has produced `{"quantity": 51,` and is constrained to a schema requiring an integer quantity:

* `{"quantity": 51.2` would be invalid, so `.2` is not allowed

* `{"quantity": 51,` would be valid, so `,` is allowed

* `{"quantity": 510` would be valid, so `0` is allowed

If the model's most accurate answer is 51.2, it cannot provide it due to the constraint.

## A Better Approach: Schema-Aligned Parsing

Given these limitations, what's the alternative? The solution, according to experts at BoundaryML, is surprisingly simple: let the LLM respond in a natural, free-form style and parse the output afterward.

This approach allows models to:

* Refuse to perform tasks when appropriate

* Warn about contradictory information

* Provide explanations for their reasoning

* Handle edge cases gracefully

> "Using structured outputs, via constrained decoding, makes it much harder for the LLM to do any of these. Even though you've crafted a guarantee that the LLM will return a response in exactly your requested output format, that guarantee comes at the cost of the quality of that response, because you're forcing the LLM to prioritize complying with your output format over returning a high-quality response."

This parsing approach also provides an additional security benefit. When combined with proper input validation, it serves as an effective defense against prompt injection attacks that might attempt to manipulate the model into revealing sensitive data.

## Implications for AI Development

The trade-off between structured outputs and free-form parsing has significant implications for AI development:

1. **Accuracy vs. Consistency**: Developers must decide whether they prioritize guaranteed format compliance or higher response accuracy.

2. **Error Handling**: Structured outputs make it difficult to model complex error scenarios that might occur in real-world applications.

3. **Reasoning Capabilities**: Techniques like chain-of-thought reasoning are compromised when models are forced to adhere to strict output formats.

4. **Security**: While structured outputs might seem more secure due to their predictable nature, the inability to handle edge cases appropriately could create vulnerabilities.

As the BoundaryML team notes, their open-source DSL uses schema-aligned parsing specifically because "letting the LLM respond in as natural a fashion as possible is the most effective way to get the highest quality response from it."

## The Path Forward

For developers working with LLMs, the key takeaway is to carefully consider the trade-offs when choosing between structured outputs and free-form parsing. While structured outputs offer convenience and guaranteed format compliance, they come at the cost of response quality and flexibility.

The optimal approach likely depends on the specific use case:

* For applications where format consistency is absolutely critical and minor inaccuracies are acceptable, structured outputs might be appropriate.

* For applications requiring high accuracy, complex reasoning, or robust error handling, free-form parsing with post-processing may be preferable.

As LLM technology continues to evolve, we may see improvements that allow models to provide both structured outputs and high-quality responses. Until then, developers must make informed decisions based on their specific requirements and constraints.

The false confidence created by structured outputs serves as an important reminder: in AI development, as in software engineering, there's no such thing as a free lunch. Every design choice involves trade-offs, and understanding these trade-offs is essential for building effective, reliable systems.

Comments

Please log in or register to join the discussion